Mathias Quoy

ETIS, IPAL

Confidence-Based Self-Training for EMG-to-Speech: Leveraging Synthetic EMG for Robust Modeling

Jun 13, 2025

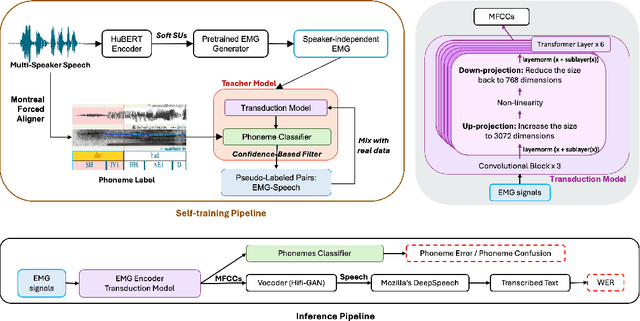

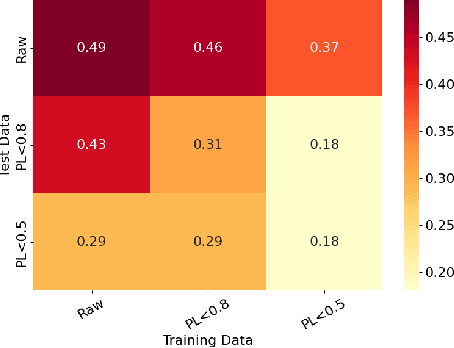

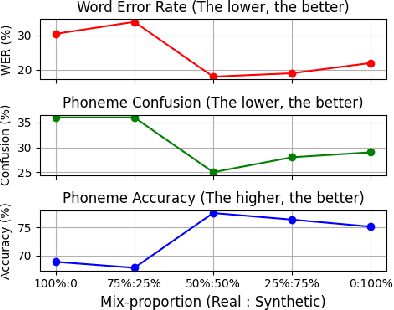

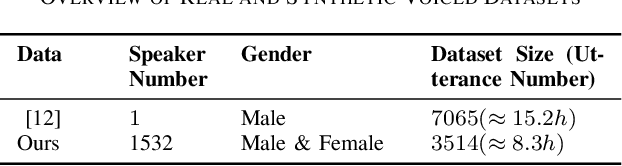

Abstract: Voiced Electromyography (EMG)-to-Speech (V-ETS) models reconstruct speech from muscle activity signals, facilitating applications such as neurolaryngologic diagnostics. Despite its potential, the advancement of V-ETS is hindered by a scarcity of paired EMG-speech data. To address this, we propose a novel Confidence-based Multi-Speaker Self-training (CoM2S) approach, along with a newly curated Libri-EMG dataset. This approach leverages synthetic EMG data generated by a pre-trained model, followed by a proposed filtering mechanism based on phoneme-level confidence, to enhance the ETS model through the proposed self-training techniques. Experiments demonstrate that our method improves phoneme accuracy, reduces phonological confusion, and lowers word error rate, confirming the effectiveness of our CoM2S approach for V-ETS. In support of future research, we will release the code and the proposed Libri-EMG dataset, an open-access collection of time-aligned, multi-speaker voiced EMG and speech recordings.
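
The confidence-based filtering step can be illustrated with a minimal sketch. It assumes that each synthetic EMG utterance comes with per-phoneme confidence scores in [0, 1] and keeps an utterance only if its mean phoneme confidence reaches a threshold. The names `SyntheticSample` and `filter_by_confidence`, the mean aggregation, and the 0.8 threshold are all hypothetical; the abstract does not specify the exact criterion used in CoM2S.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class SyntheticSample:
    """Hypothetical container for one synthetic EMG utterance."""
    utterance_id: str
    phoneme_confidences: list[float]  # assumed per-phoneme scores in [0, 1]


def filter_by_confidence(samples, threshold=0.8):
    """Keep samples whose mean phoneme-level confidence is >= threshold.

    Assumption: averaging is one plausible aggregation; a minimum or a
    fraction-above-threshold rule would fit the description equally well.
    Samples without any phoneme scores are discarded.
    """
    return [
        s for s in samples
        if s.phoneme_confidences and mean(s.phoneme_confidences) >= threshold
    ]


samples = [
    SyntheticSample("utt-001", [0.95, 0.91, 0.88]),
    SyntheticSample("utt-002", [0.60, 0.97, 0.40]),
    SyntheticSample("utt-003", []),
]
selected = filter_by_confidence(samples)
print([s.utterance_id for s in selected])  # ['utt-001']
```

In a self-training loop, the retained samples would then be added to the real paired EMG-speech data for the next training round, while low-confidence samples are set aside.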

Developmental Predictive Coding Model for Early Infancy Mono and Bilingual Vocal Continual Learning

Dec 23, 2024

Abstract: Understanding how infants perceive speech sounds and language structures is still an open problem. Previous research with artificial neural networks has mainly focused on large, dataset-dependent generative models that aim to replicate language-related phenomena such as "perceptual narrowing". In this paper, we propose a novel approach using a small generative neural network equipped with a continual learning mechanism based on predictive coding for mono- and bilingual speech sound learning (referred to as language sound acquisition during the "critical period") and a compositional optimization mechanism for generation in which no learning is involved (later-infancy sound imitation). Our model prioritizes interpretability and demonstrates the advantages of online learning: unlike deep networks that require substantial offline training, our model updates continuously with new data, making it adaptable and responsive to changing inputs. Through experiments, we demonstrate that if second language acquisition occurs during later infancy, the challenges of learning a foreign language after the critical period are amplified, replicating the perceptual narrowing effect.
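
Online learning with predictive coding can be sketched generically: for each incoming input, a latent state is inferred by descending the prediction error, and the generative weights are then nudged by a local, error-driven update, with no separate offline training phase. The sketch below is a textbook single-layer scheme, not the authors' model; the function names `predict` and `pc_step`, the dimensions, learning rates, and the small L2 decay on the latent state are assumptions chosen for illustration.

```python
import random


def predict(W, z):
    """Top-down prediction x_hat = W z."""
    return [sum(w * zj for w, zj in zip(row, z)) for row in W]


def pc_step(W, x, n_infer=100, lr_z=0.2, lr_w=0.05, decay=0.01):
    """One online predictive-coding update of weights W on a single input x.

    Inference: z <- z + lr_z * (W^T e - decay * z), where e = x - W z.
    Learning:  W <- W + lr_w * e z^T (local, prediction-error-driven rule).
    W is modified in place; returns the squared prediction error after inference.
    """
    n_obs, n_latent = len(W), len(W[0])
    z = [0.0] * n_latent
    for _ in range(n_infer):
        e = [xi - pi for xi, pi in zip(x, predict(W, z))]
        back = [sum(W[i][j] * e[i] for i in range(n_obs)) for j in range(n_latent)]
        z = [zj + lr_z * (bj - decay * zj) for zj, bj in zip(z, back)]
    e = [xi - pi for xi, pi in zip(x, predict(W, z))]
    for i in range(n_obs):
        for j in range(n_latent):
            W[i][j] += lr_w * e[i] * z[j]
    return sum(ei * ei for ei in e)


# Toy stream: two 4-D patterns presented repeatedly, one sample at a time.
random.seed(0)
W = [[random.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(4)]
data = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
first_epoch = sum(pc_step(W, x) for x in data)
for _ in range(200):
    last_epoch = sum(pc_step(W, x) for x in data)
print(f"error: first pass {first_epoch:.3f}, after 200 passes {last_epoch:.3f}")
```

Because the weights change after every sample, the same loop keeps adapting if the input distribution shifts, which is the property the abstract contrasts with offline-trained deep networks.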

Networks' modulation: How different structural network properties affect the global synchronization of coupled Kuramoto oscillators

Feb 24, 2023

Abstract: In a large variety of systems (biological, physical, social, etc.), synchronization occurs when different oscillating objects tune their rhythms while interacting with each other. The underlying network that defines the connectivity among these objects drives the global dynamics in a complex fashion and affects the global degree of synchrony of the system. Here we study the impact of different network architectures (Fully-Connected, Random, Regular ring lattice, Small-World and Scale-Free graphs) on the global dynamical activity of a system of coupled Kuramoto phase oscillators. We fix the external stimulation parameters and measure the global degree of synchrony when different fractions of nodes receive the stimulus. These nodes are chosen either randomly or based on their strong/weak connectivity properties (centrality, shortest path length and clustering coefficient). Our main finding is that, in Scale-Free and Random networks, a sophisticated choice of nodes based on their eigenvector centrality and average shortest path length shows a systematic trend toward a higher degree of synchrony. However, this trend does not occur when the clustering coefficient is used as the criterion. For the other types of graphs considered, the choice of stimulated nodes (random vs. selective, using the aforementioned criteria) does not seem to have a noticeable effect.
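
A minimal simulation of this kind of setup can be sketched with the standard Kuramoto model on an adjacency matrix, plus a periodic forcing term applied only to the stimulated nodes; global synchrony is measured with the usual order parameter r = |mean(exp(i*theta))|. The forcing form F * sin(Omega * t - theta_i), the parameter values, forward-Euler integration, and the helper names `simulate` and `ring_lattice` are assumptions, not the protocol used in the paper.

```python
import cmath
import math
import random


def order_parameter(theta):
    """Kuramoto order parameter r = |mean(exp(i*theta))|, in [0, 1]."""
    return abs(sum(cmath.exp(1j * t) for t in theta)) / len(theta)


def simulate(A, omega, stimulated, K=1.0, F=1.0, Omega=1.0, dt=0.01, steps=3000, seed=0):
    """Forward-Euler integration of periodically forced Kuramoto oscillators.

    dtheta_i/dt = omega_i + (K/N) * sum_j A[i][j] * sin(theta_j - theta_i)
                  + F * s_i * sin(Omega * t - theta_i),
    with s_i = 1 if node i is in `stimulated`, else 0 (assumed forcing form).
    Returns the order parameter at the end of the run.
    """
    rng = random.Random(seed)
    n = len(A)
    theta = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]
    for step in range(steps):
        t = step * dt
        dtheta = []
        for i in range(n):
            coupling = sum(A[i][j] * math.sin(theta[j] - theta[i]) for j in range(n))
            drive = F * math.sin(Omega * t - theta[i]) if i in stimulated else 0.0
            dtheta.append(omega[i] + K / n * coupling + drive)
        theta = [th + dt * d for th, d in zip(theta, dtheta)]
    return order_parameter(theta)


def ring_lattice(n, k):
    """Regular ring lattice: node i is linked to its k nearest neighbours on each side."""
    A = [[0] * n for _ in range(n)]
    for i in range(n):
        for d in range(1, k + 1):
            j = (i + d) % n
            A[i][j] = A[j][i] = 1
    return A


# Compare no stimulation with stimulating every other node of a ring lattice.
n = 20
A = ring_lattice(n, 2)
rng = random.Random(1)
omega = [rng.gauss(1.0, 0.1) for _ in range(n)]
r_none = simulate(A, omega, stimulated=set())
r_half = simulate(A, omega, stimulated=set(range(0, n, 2)))
print(f"r (no stimulus) = {r_none:.2f}, r (half the nodes driven) = {r_half:.2f}")
```

Choosing `stimulated` by ranking nodes on eigenvector centrality, average shortest path length, or clustering coefficient would give the selective-versus-random comparison the abstract describes; that ranking step is left out here to keep the sketch dependency-free.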

On the Relationship Between Variational Inference and Auto-Associative Memory

Oct 14, 2022

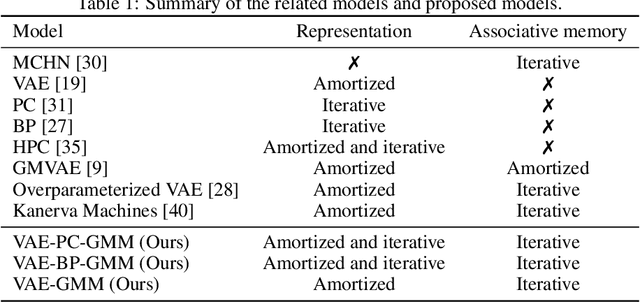

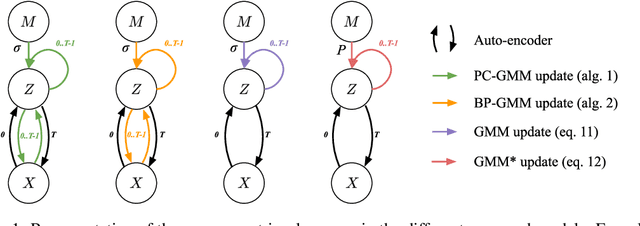

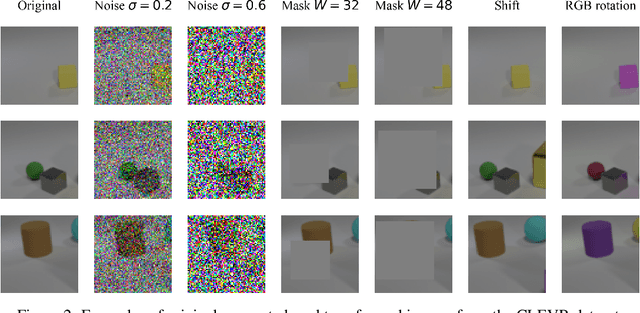

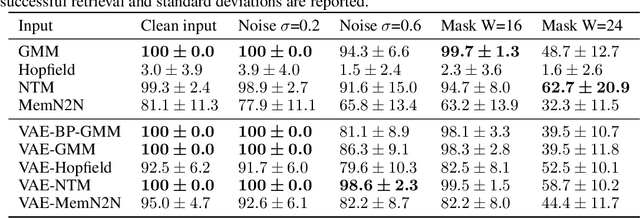

Abstract: In this article, we propose a variational inference formulation of auto-associative memories, allowing us to combine perceptual inference and memory retrieval in the same mathematical framework. In this formulation, the prior probability distribution over latent representations is made memory-dependent, thus pulling the inference process toward previously stored representations. We then study how different neural network approaches to variational inference can be applied in this framework. We compare methods relying on amortized inference, such as Variational Auto-Encoders, with methods relying on iterative inference, such as Predictive Coding, and suggest combining both approaches to design new auto-associative memory models. We evaluate the obtained algorithms on the CIFAR10 and CLEVR image datasets and compare them with other associative memory models such as Hopfield Networks, End-to-End Memory Networks and Neural Turing Machines.
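
The effect of a memory-dependent prior can be seen in the simplest possible case. The sketch below assumes an identity generative model with Gaussian noise (x = z + noise) and a prior that is a mixture of isotropic Gaussians centred on the stored patterns; iterative inference then performs gradient ascent on log p(x|z) + log p(z), and the estimate is pulled from a noisy cue toward the nearest stored memory. This is an illustration of the idea only, not the VAE or predictive coding models evaluated in the article; the function name `retrieve`, the variances, step size, and patterns are assumptions.

```python
import math


def retrieve(x, memories, sigma_obs=1.0, sigma_prior=0.3, lr=0.05, steps=200):
    """MAP estimate of the latent z for observation x under a memory-dependent prior.

    Assumed model (illustrative only):
      likelihood  log p(x|z) = -||x - z||^2 / (2 sigma_obs^2)      (identity decoder)
      prior       log p(z)   = log sum_k exp(-||z - m_k||^2 / (2 sigma_prior^2)) + const
    Iterative inference: gradient ascent on log p(x|z) + log p(z), starting at z = x.
    """
    z = list(x)
    for _ in range(steps):
        # Responsibility of each stored memory for the current z (stable softmax).
        logits = [-sum((zi - mi) ** 2 for zi, mi in zip(z, m)) / (2 * sigma_prior ** 2)
                  for m in memories]
        top = max(logits)
        weights = [math.exp(l - top) for l in logits]
        total = sum(weights)
        resp = [w / total for w in weights]
        new_z = []
        for d in range(len(z)):
            grad_lik = (x[d] - z[d]) / sigma_obs ** 2
            grad_prior = sum(r * (m[d] - z[d]) for r, m in zip(resp, memories)) / sigma_prior ** 2
            new_z.append(z[d] + lr * (grad_lik + grad_prior))
        z = new_z
    return z


memories = [[1.0, 1.0, -1.0, -1.0], [-1.0, 1.0, 1.0, -1.0], [1.0, -1.0, 1.0, -1.0]]
cue = [0.8, 0.9, -0.6, -1.1]  # noisy version of the first stored pattern
z = retrieve(cue, memories)
print([round(v, 2) for v in z])  # [0.98, 0.99, -0.97, -1.01]
```

The balance between sigma_obs and sigma_prior sets how strongly retrieval overrides the observation: a tighter prior snaps the estimate closer to the stored pattern, while a looser one keeps it closer to the cue.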

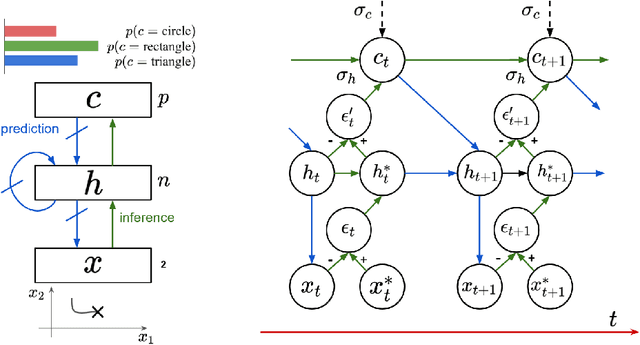

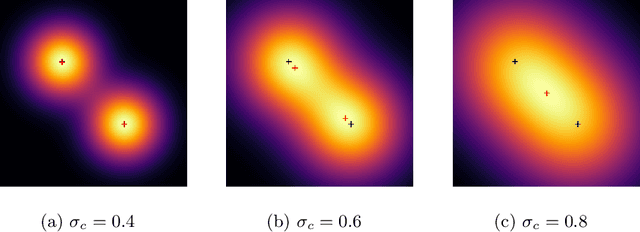

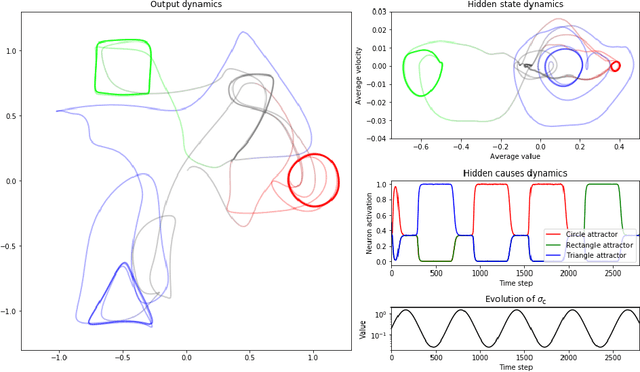

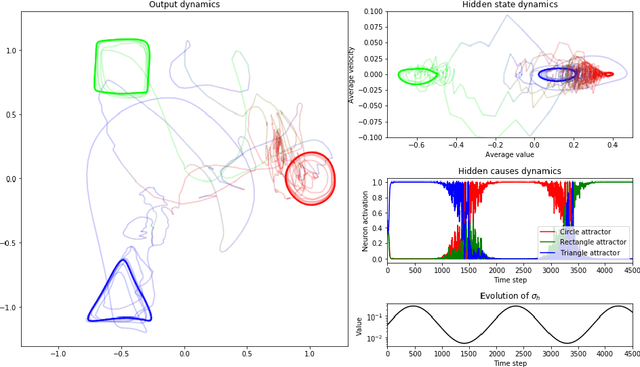

A Predictive Coding Account for Chaotic Itinerancy

Jun 16, 2021

Abstract: As a phenomenon in dynamical systems that allows autonomous switching between stable behaviors, chaotic itinerancy has gained interest in neurorobotics research. In this study, we draw a connection between this phenomenon and predictive coding theory by showing how a recurrent neural network implementing predictive coding can generate neural trajectories similar to chaotic itinerancy in the presence of input noise. Using our model, we propose two scenarios that generate random and past-independent attractor-switching trajectories.

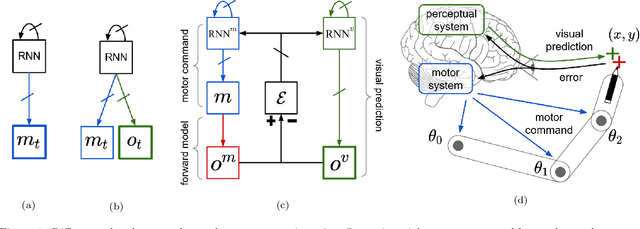

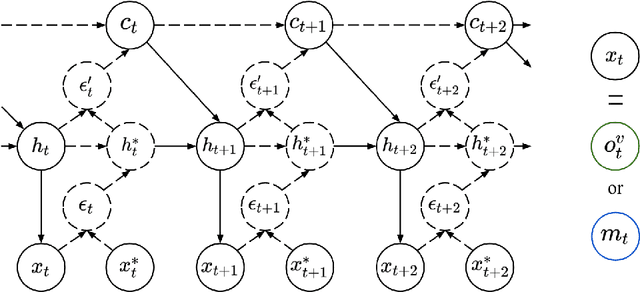

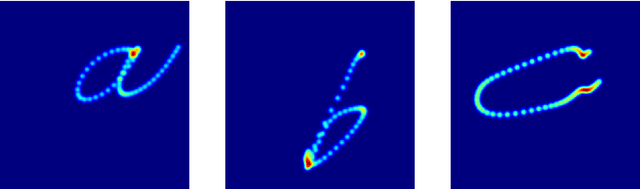

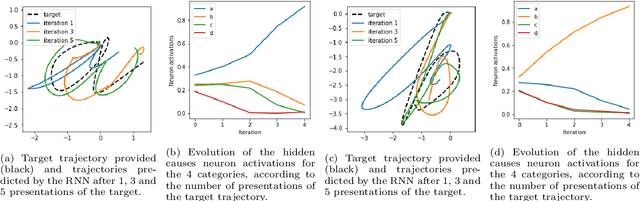

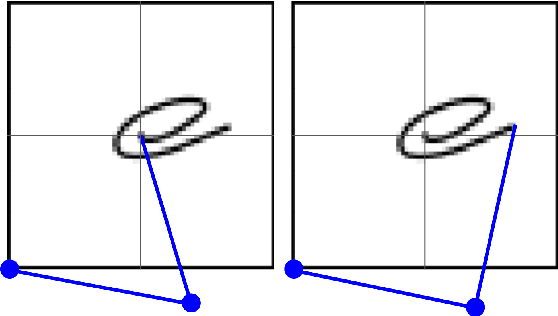

Bidirectional Interaction between Visual and Motor Generative Models using Predictive Coding and Active Inference

Apr 19, 2021

Abstract: In this work, we build upon the Active Inference (AIF) and Predictive Coding (PC) frameworks to propose a neural architecture comprising a generative model for sensory prediction and a distinct generative model for motor trajectories. We highlight how sequences of sensory predictions can act as rails guiding the learning, control and online adaptation of motor trajectories. We furthermore investigate the effects of bidirectional interactions between the motor and visual modules. The architecture is tested on the control of a simulated robotic arm learning to reproduce handwritten letters.

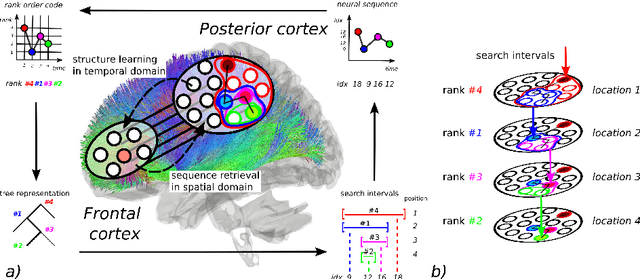

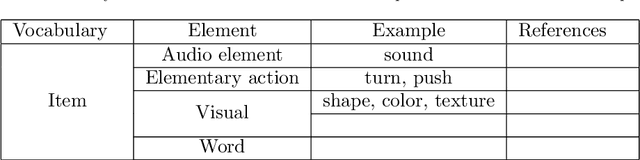

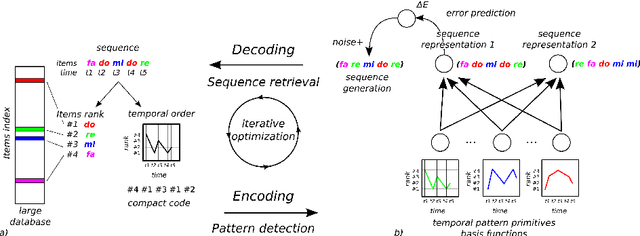

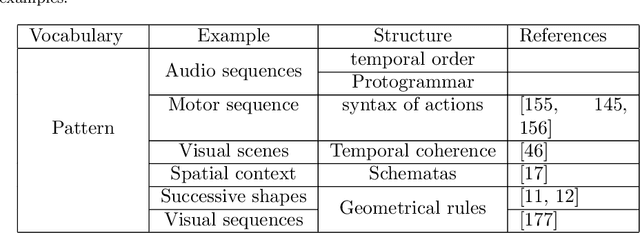

Digital Neural Networks in the Brain: From Mechanisms for Extracting Structure in the World To Self-Structuring the Brain Itself

May 22, 2020

Abstract: To keep track of information, the brain has to solve the problem of where information is located and how to index new information. We propose that the neural mechanism used by the prefrontal cortex (PFC) to detect structure in temporal sequences, based on the temporal order of incoming information, has served a second purpose: the spatial ordering and indexing of brain networks. We call this process, akin to the manipulation of neural 'addresses' to organize the brain's own network, the 'digitalization' of information. Such a tool is important not only for information processing and preservation but also for memory formation and retrieval.

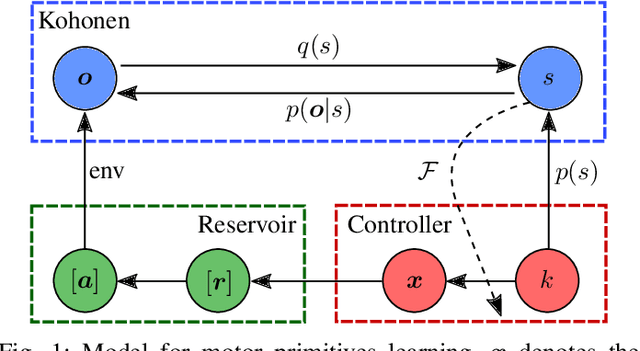

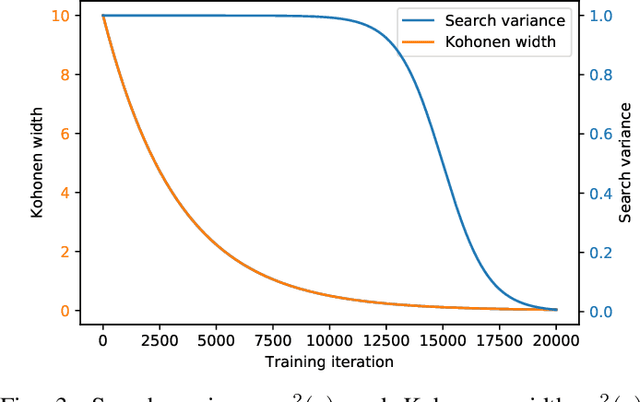

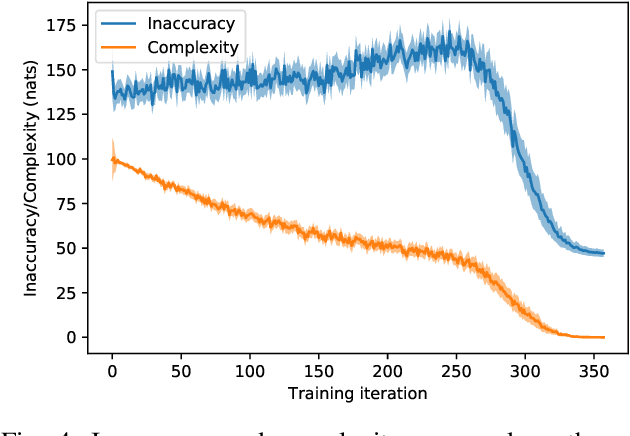

Autonomous learning and chaining of motor primitives using the Free Energy Principle

May 11, 2020

Abstract: In this article, we apply the Free-Energy Principle to the question of motor primitive learning. An echo-state network is used to generate motor trajectories. We combine this network with a perception module and a controller that can influence its dynamics. This new compound network enables the autonomous learning of a repertoire of motor trajectories. To evaluate the repertoires built with our method, we exploit them in a handwriting task where primitives are chained to produce long-range sequences.
