Alexandre Pitti

ETIS, IPAL

Confidence-Based Self-Training for EMG-to-Speech: Leveraging Synthetic EMG for Robust Modeling

Jun 13, 2025

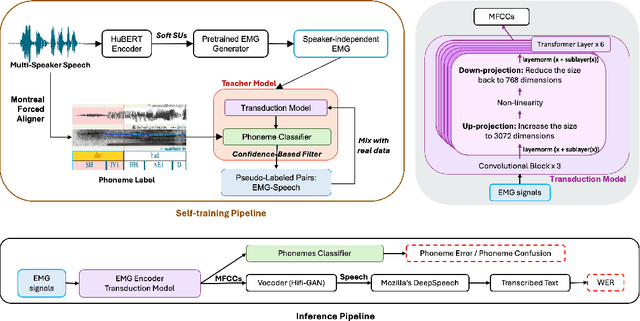

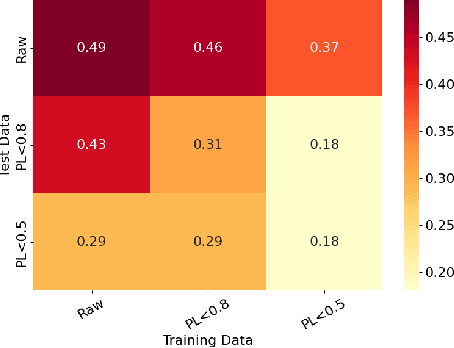

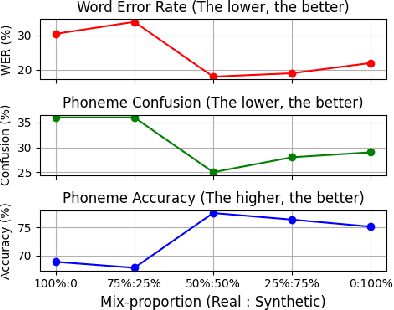

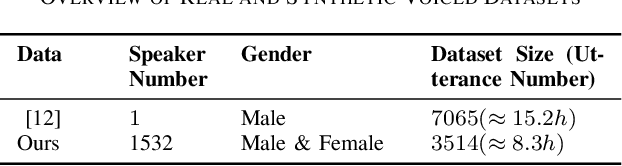

Abstract: Voiced Electromyography (EMG)-to-Speech (V-ETS) models reconstruct speech from muscle activity signals, facilitating applications such as neurolaryngologic diagnostics. Despite its potential, the advancement of V-ETS is hindered by a scarcity of paired EMG-speech data. To address this, we propose a novel Confidence-based Multi-Speaker Self-training (CoM2S) approach, along with a newly curated Libri-EMG dataset. This approach leverages synthetic EMG data generated by a pre-trained model, followed by a proposed filtering mechanism based on phoneme-level confidence to enhance the ETS model through the proposed self-training techniques. Experiments demonstrate that our method improves phoneme accuracy, reduces phonological confusion, and lowers word error rate, confirming the effectiveness of our CoM2S approach for V-ETS. In support of future research, we will release the code and the proposed Libri-EMG dataset, an open-access, time-aligned, multi-speaker collection of voiced EMG and speech recordings.
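The filtering step mentioned in this abstract can be pictured with a minimal sketch. It is not the CoM2S implementation: the abstract does not say how phoneme-level confidence is aggregated or thresholded, so the mean-confidence rule, the `threshold` value, the function name and the sample layout below are all assumptions made for illustration.

```python
from statistics import mean


def filter_by_phoneme_confidence(samples, threshold=0.8):
    """Keep synthetic samples whose mean phoneme confidence is >= threshold.

    Hypothetical helper: averaging per-phoneme scores is an assumed
    aggregation rule, not one stated in the abstract.
    """
    kept = []
    for sample in samples:
        scores = sample["phoneme_confidences"]
        # Samples with no scored phonemes are dropped rather than guessed at.
        if scores and mean(scores) >= threshold:
            kept.append(sample)
    return kept


# Toy synthetic utterances with made-up confidence scores.
synthetic = [
    {"id": "utt1", "phoneme_confidences": [0.95, 0.90, 0.85]},
    {"id": "utt2", "phoneme_confidences": [0.60, 0.90, 0.40]},
    {"id": "utt3", "phoneme_confidences": []},
]

selected = filter_by_phoneme_confidence(synthetic, threshold=0.8)
selected_ids = [s["id"] for s in selected]
print(selected_ids)
```

In a self-training loop, the retained samples would be added to the training set before the next round; that loop is omitted here.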

Less is More: some Computational Principles based on Parcimony, and Limitations of Natural Intelligence

Jun 08, 2025

Abstract: Natural intelligence (NI) consistently achieves more with less. Infants learn language, develop abstract concepts, and acquire sensorimotor skills from sparse data, all within tight neural and energy limits. In contrast, today's AI relies on virtually unlimited computational power, energy, and data to reach high performance. This paper argues that constraints in NI are paradoxically catalysts for efficiency, adaptability, and creativity. We first show how limited neural bandwidth promotes concise codes that still capture complex patterns. Spiking neurons, hierarchical structures, and symbolic-like representations emerge naturally from bandwidth constraints, enabling robust generalization. Next, we discuss chaotic itinerancy, illustrating how the brain transits among transient attractors to flexibly retrieve memories and manage uncertainty. We then highlight reservoir computing, where random projections facilitate rapid generalization from small datasets. Drawing on developmental perspectives, we emphasize how intrinsic motivation, along with responsive social environments, drives infant language learning and discovery of meaning. Such active, embodied processes are largely absent in current AI. Finally, we suggest that adopting 'less is more' principles -- energy constraints, parsimonious architectures, and real-world interaction -- can foster the emergence of more efficient, interpretable, and biologically grounded artificial systems.

Developmental Predictive Coding Model for Early Infancy Mono and Bilingual Vocal Continual Learning

Dec 23, 2024

Abstract: Understanding how infants perceive speech sounds and language structures is still an open problem. Previous research in artificial neural networks has mainly focused on large dataset-dependent generative models, aiming to replicate language-related phenomena such as "perceptual narrowing". In this paper, we propose a novel approach using a small-sized generative neural network equipped with a continual learning mechanism based on predictive coding for mono- and bilingual speech sound learning (referred to as language sound acquisition during the "critical period") and a compositional optimization mechanism for generation where no learning is involved (later infancy sound imitation). Our model prioritizes interpretability and demonstrates the advantages of online learning: unlike deep networks requiring substantial offline training, our model continuously updates with new data, making it adaptable and responsive to changing inputs. Through experiments, we demonstrate that if second language acquisition occurs during later infancy, the challenges associated with learning a foreign language after the critical period amplify, replicating the perceptual narrowing effect.

Informational Embodiment: Computational role of information structure in codes and robots

Aug 23, 2024

Abstract: The body morphology plays an important role in the way information is perceived and processed by an agent. We present an information theory (IT) account of how the precision of sensors, the accuracy of motors, their placement, and the body geometry shape the information structure in robots and computational codes. As an original idea, we envision the robot's body as a physical communication channel through which information is conveyed, in and out, despite intrinsic noise and material limitations. Following this, entropy, a measure of information and uncertainty, can be used to maximize the efficiency of robot design and of algorithmic codes per se. This is known as the principle of Entropy Maximization (PEM) introduced in biology by Barlow in 1969. Shannon's source coding theorem then provides a framework to compare different types of bodies in terms of sensorimotor information. In line with PEM, we introduce a special class of efficient codes used in IT that reached the Shannon limits in terms of information capacity for error correction, robustness against noise, and parsimony. These efficient codes, which make insightful use of quantization and randomness, make it possible to deal with uncertainty, redundancy and compactness. These features can be used for perception and control in intelligent systems. In various examples and closing discussions, we reflect on the broader implications of our framework, which we call Informational Embodiment, for motor theory and bio-inspired robotics, touching upon concepts like motor synergies, reservoir computing, and morphological computation. These insights can contribute to a deeper understanding of how information theory intersects with the embodiment of intelligence in both natural and artificial systems.
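As a rough illustration of how entropy can quantify what a sensor conveys, the sketch below computes the Shannon entropy of quantized readings at two resolutions. It is only a toy under stated assumptions: the `quantize` and `shannon_entropy` helpers, the [0, 1] sensor range and the sample readings are invented for this example and are not taken from the paper.

```python
import math
from collections import Counter


def quantize(values, levels, low=0.0, high=1.0):
    """Map readings in [low, high] to integer bins 0..levels-1 (uniform bins)."""
    step = (high - low) / levels
    # min(...) keeps a reading equal to `high` inside the last bin.
    return [min(int((v - low) / step), levels - 1) for v in values]


def shannon_entropy(symbols):
    """Empirical Shannon entropy, in bits per symbol."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# Made-up readings from a hypothetical sensor with range [0, 1].
readings = [0.05, 0.30, 0.55, 0.80]

coarse_bits = shannon_entropy(quantize(readings, levels=2))
fine_bits = shannon_entropy(quantize(readings, levels=4))
print(coarse_bits, fine_bits)
```

With these readings the 2-level sensor yields 1 bit per reading and the 4-level sensor yields 2 bits, which is the sense in which sensor precision shapes the information available to the agent.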

On the Relationship Between Variational Inference and Auto-Associative Memory

Oct 14, 2022

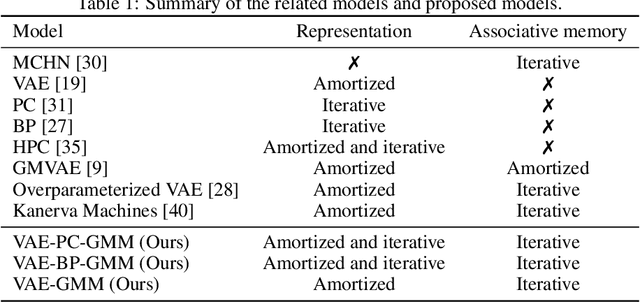

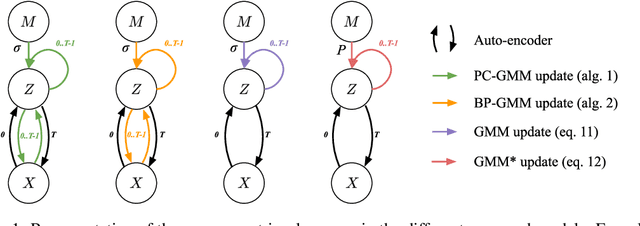

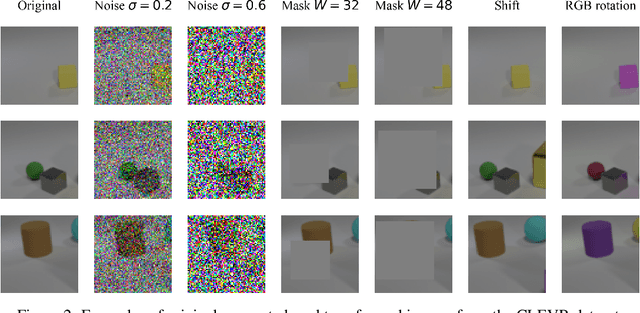

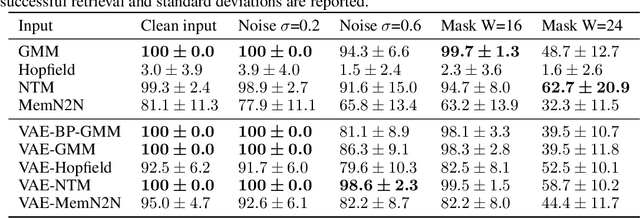

Abstract: In this article, we propose a variational inference formulation of auto-associative memories, allowing us to combine perceptual inference and memory retrieval into the same mathematical framework. In this formulation, the prior probability distribution over latent representations is made memory dependent, thus pulling the inference process towards previously stored representations. We then study how different neural network approaches to variational inference can be applied in this framework. We compare methods relying on amortized inference, such as Variational Auto Encoders, and methods relying on iterative inference, such as Predictive Coding, and suggest combining both approaches to design new auto-associative memory models. We evaluate the obtained algorithms on the CIFAR10 and CLEVR image datasets and compare them with other associative memory models such as Hopfield Networks, End-to-End Memory Networks and Neural Turing Machines.

A Predictive Coding Account for Chaotic Itinerancy

Jun 16, 2021

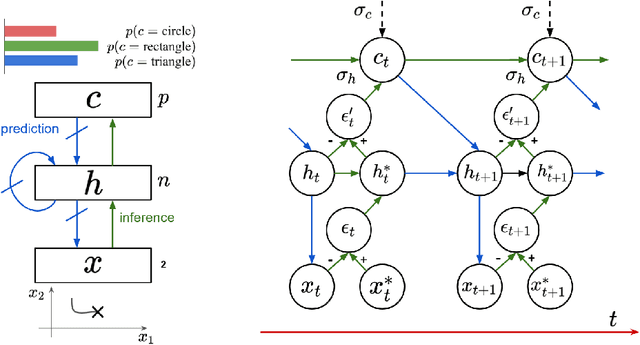

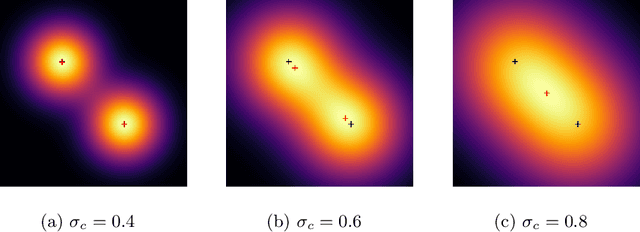

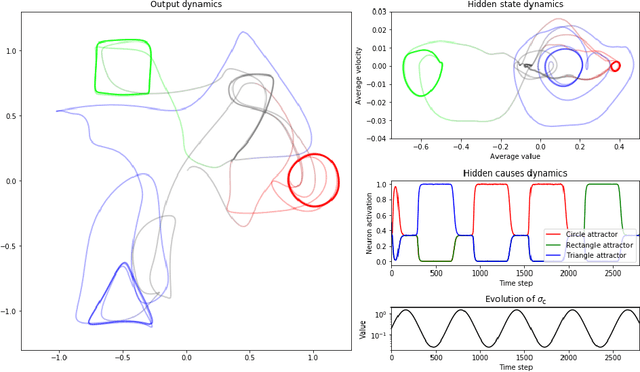

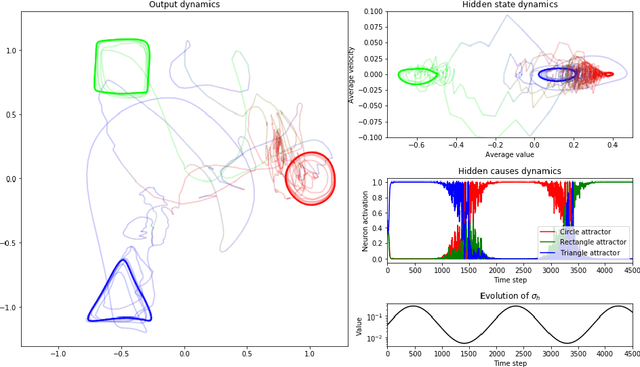

Abstract: As a phenomenon in dynamical systems allowing autonomous switching between stable behaviors, chaotic itinerancy has gained interest in neurorobotics research. In this study, we draw a connection between this phenomenon and the predictive coding theory by showing how a recurrent neural network implementing predictive coding can generate neural trajectories similar to chaotic itinerancy in the presence of input noise. We propose two scenarios generating random and past-independent attractor switching trajectories using our model.

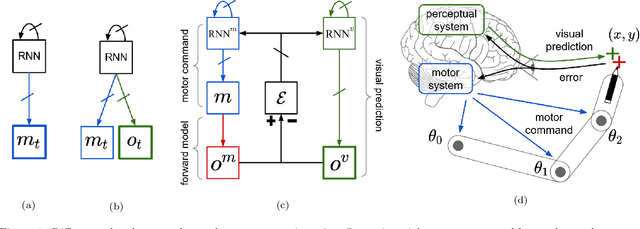

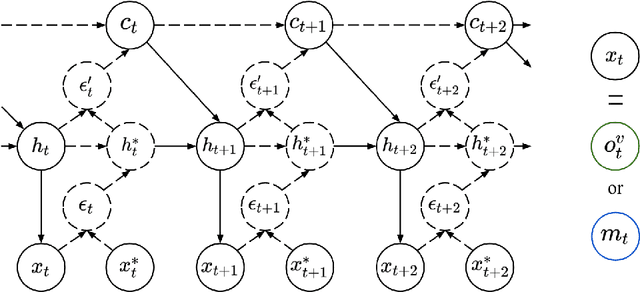

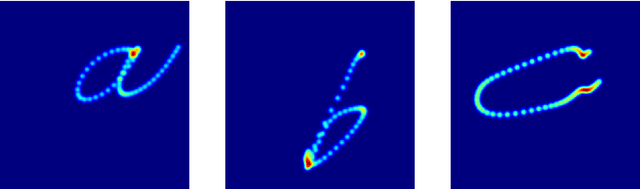

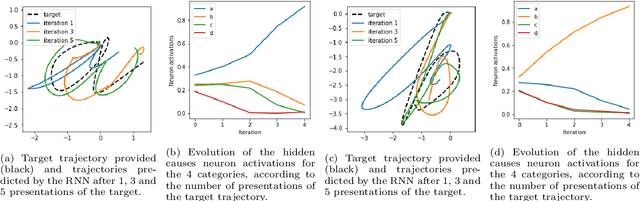

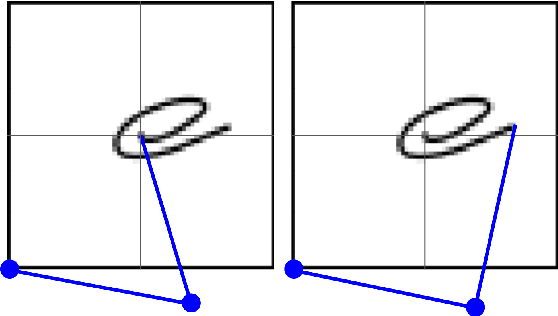

Bidirectional Interaction between Visual and Motor Generative Models using Predictive Coding and Active Inference

Apr 19, 2021

Abstract: In this work, we build upon the Active Inference (AIF) and Predictive Coding (PC) frameworks to propose a neural architecture comprising a generative model for sensory prediction, and a distinct generative model for motor trajectories. We highlight how sequences of sensory predictions can act as rails guiding learning, control and online adaptation of motor trajectories. We further investigate the effects of bidirectional interactions between the motor and the visual modules. The architecture is tested on the control of a simulated robotic arm learning to reproduce handwritten letters.

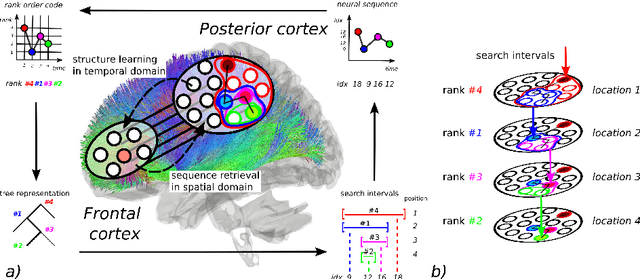

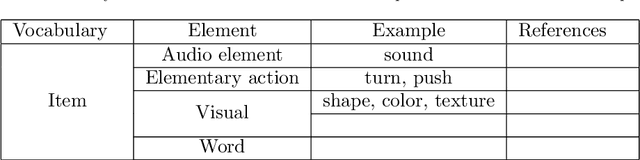

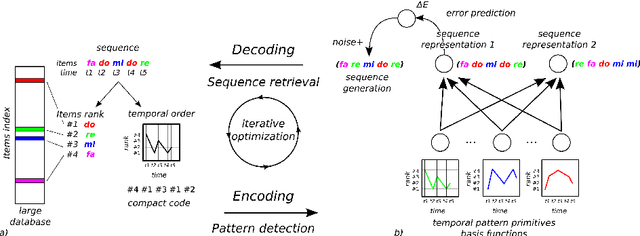

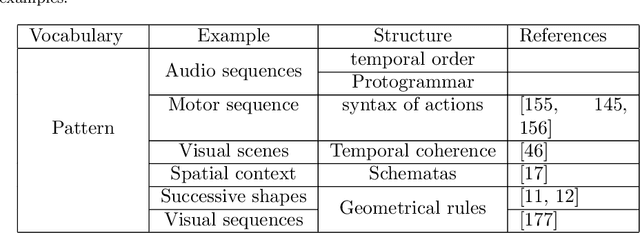

Digital Neural Networks in the Brain: From Mechanisms for Extracting Structure in the World To Self-Structuring the Brain Itself

May 22, 2020

Abstract: To keep track of information, the brain has to solve the problem of where information is stored and how to index new information. We propose that the neural mechanism used by the prefrontal cortex (PFC) to detect structure in temporal sequences, based on the temporal order of incoming information, has served a second purpose: the spatial ordering and indexing of brain networks. We call this process, akin to the manipulation of neural 'addresses' to organize the brain's own network, the 'digitalization' of information. Such a tool is important for information processing and preservation, but also for memory formation and retrieval.

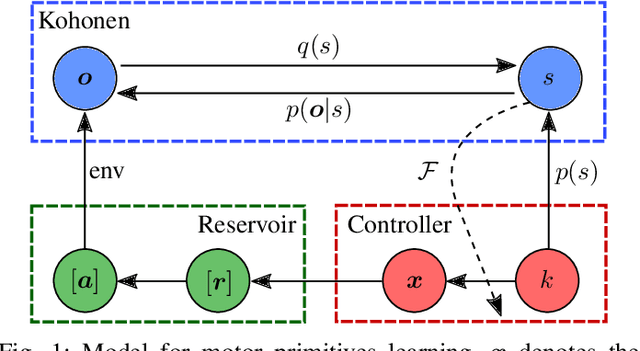

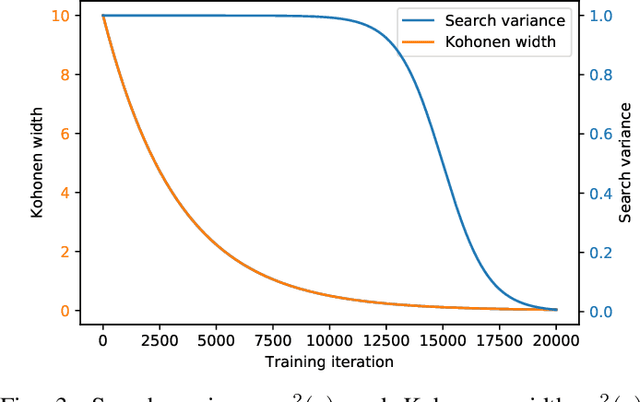

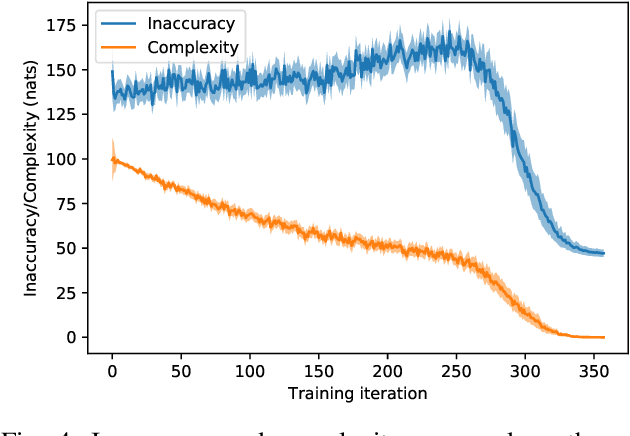

Autonomous learning and chaining of motor primitives using the Free Energy Principle

May 11, 2020

Abstract: In this article, we apply the Free-Energy Principle to the question of learning motor primitives. An echo-state network is used to generate motor trajectories. We combine this network with a perception module and a controller that can influence its dynamics. This new compound network permits the autonomous learning of a repertoire of motor trajectories. To evaluate the repertoires built with our method, we exploit them in a handwriting task where primitives are chained to produce long-range sequences.
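For readers unfamiliar with echo-state networks, the sketch below shows only the reservoir dynamics, not the authors' architecture: there is no perception module, controller or trained readout. The `make_reservoir`, `step` and `run` helpers, the reservoir size, the `gain` value and the sine input are assumptions chosen for illustration. The weights are scaled so that every absolute row sum stays below 1, a simple sufficient condition for the state to forget its initial value.

```python
import math
import random


def make_reservoir(size, gain=0.9, seed=0):
    """Build random recurrent and input weights (hypothetical helper).

    Rows are rescaled so the largest absolute row sum equals `gain` (< 1).
    With tanh units this makes the update a contraction, which is a
    sufficient (not necessary) condition for the echo-state property.
    """
    rng = random.Random(seed)
    w = [[rng.uniform(-1.0, 1.0) for _ in range(size)] for _ in range(size)]
    max_row_sum = max(sum(abs(x) for x in row) for row in w)
    w = [[gain * x / max_row_sum for x in row] for row in w]
    w_in = [rng.uniform(-1.0, 1.0) for _ in range(size)]
    return w, w_in


def step(state, u, w, w_in):
    """One reservoir update: x(t+1) = tanh(W x(t) + w_in * u(t))."""
    return [
        math.tanh(sum(wij * xj for wij, xj in zip(row, state)) + wi * u)
        for row, wi in zip(w, w_in)
    ]


def run(initial_state, inputs, w, w_in):
    """Drive the reservoir with a scalar input sequence; return the final state."""
    state = list(initial_state)
    for u in inputs:
        state = step(state, u, w, w_in)
    return state


w, w_in = make_reservoir(size=20)
inputs = [math.sin(0.1 * t) for t in range(200)]

# Same input, two different starting states: the trajectories should merge.
state_a = run([0.5] * 20, inputs, w, w_in)
state_b = run([-0.5] * 20, inputs, w, w_in)
gap = max(abs(a - b) for a, b in zip(state_a, state_b))
print(f"largest state difference after 200 steps: {gap:.2e}")
```

Generating trajectories would additionally require a readout fitted on the reservoir states, typically a linear map, which is left out of this sketch.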

Ideas from Developmental Robotics and Embodied AI on the Questions of Ethics in Robots

Mar 20, 2018

Abstract: Advances in Artificial Intelligence and robotics are currently questioning the ethical framework of their applications to deal with potential drifts, as well as the way in which these algorithms learn, because they will have a strong impact on the behavior of robots and the type of robots' interactions with people. We would like to highlight some principles and ideas from cognitive neuroscience and developmental sciences based on the importance of the body for intelligence, contrary to the theory of the all-brain or all-algorithm, to represent the world and interact with others, and their current applications in embodied AI and developmental robotics to propose models of architectures and mechanisms for agency, representation of the body, recognition of the intention of others, predictive coding, active inference, the role of feedback and error, imitation, artificial curiosity and contextual learning. We will explain how these are important for the design of autonomous systems and, beyond that, what they can tell us about the ethics of systems.
