Kohei Nakajima

Memory Determines Learning Direction: A Theory of Gradient-Based Optimization in State Space Models

Oct 01, 2025

Abstract:State space models (SSMs) have gained attention by showing potential to outperform Transformers. However, previous studies have not sufficiently addressed the mechanisms underlying their high performance owing to a lack of theoretical explanation of SSMs' learning dynamics. In this study, we provide such an explanation and propose an improved training strategy. The memory capacity of SSMs can be evaluated by examining how input time series are stored in their current state. Such an examination reveals a tradeoff between memory accuracy and length, as well as the theoretical equivalence between the structured state space sequence model (S4) and a simplified S4 with diagonal recurrent weights. This theoretical foundation allows us to elucidate the learning dynamics, proving the importance of initial parameters. Our analytical results suggest that successful learning requires the initial memory structure to be the longest possible, even if memory accuracy may deteriorate or the gradient loses the teacher information. Experiments on tasks requiring long memory confirmed that extending memory is difficult, emphasizing the importance of initialization. Furthermore, we found that fixing recurrent weights can be more advantageous than adapting them because it achieves comparable or even higher performance with faster convergence. Our results provide a new theoretical foundation for SSMs and potentially offer a novel optimization strategy.

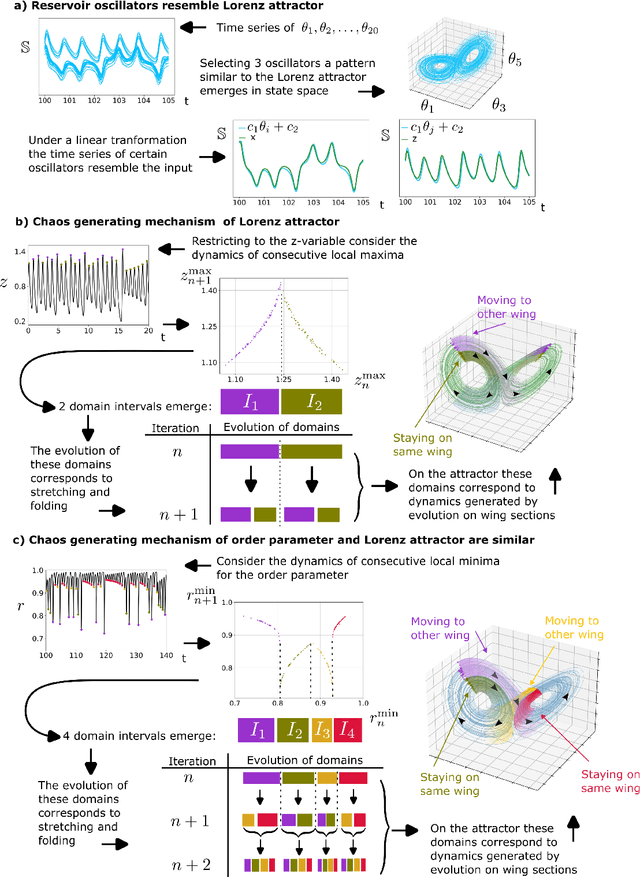

Attractor-merging Crises and Intermittency in Reservoir Computing

Apr 17, 2025

Abstract:Reservoir computing can embed attractors into random neural networks (RNNs), generating a "mirror" of a target attractor because of its inherent symmetrical constraints. In these RNNs, we report that an attractor-merging crisis accompanied by intermittency emerges simply by adjusting the global parameter. We further reveal its underlying mechanism through a detailed analysis of the phase-space structure and demonstrate that this bifurcation scenario is intrinsic to a general class of RNNs, independent of training data.

Haptic Perception via the Dynamics of Flexible Body Inspired by an Ostrich's Neck

Apr 12, 2025

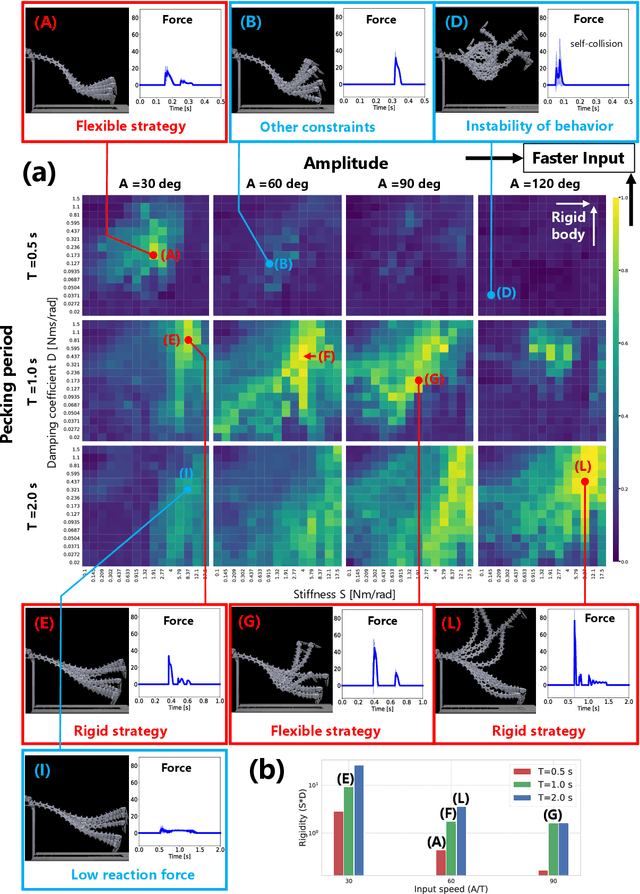

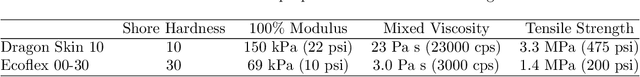

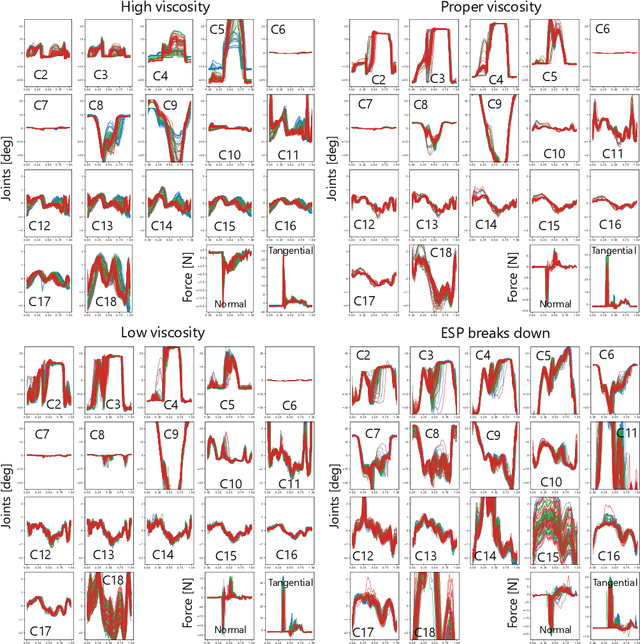

Abstract:In biological systems, haptic perception is achieved through both flexible skin and flexible body. In fully soft robots, the fragility of their bodies and the time delays in sensory processing pose significant challenges. The musculoskeletal system possesses both the deformability inherent in soft materials and the durability of rigid-body robots. Additionally, by outsourcing part of the intelligent information processing to the morphology of the musculoskeletal system, applications for dynamic tasks are expected. This study focuses on the pecking movements of birds, which achieve precise haptic perception through the musculoskeletal system of their flexible neck. Physical reservoir computing is applied to flexible structures inspired by an ostrich neck to analyze the relationship between haptic perception and physical characteristics. Combined experiments using both an actual robot and simulations demonstrate that, under appropriate body viscoelasticity, the flexible structure can distinguish objects of varying softness and memorize this information as behaviors. Drawing on these findings and anatomical insights from the ostrich neck, a haptic sensing system is proposed that possesses separability and this behavioral memory in flexible structures, enabling rapid learning and real-time inference. The results demonstrate that through the dynamics of flexible structures, diverse functions can emerge beyond their original design as manipulators.

Harnessing omnipresent oscillator networks as computational resource

Feb 07, 2025

Abstract:Nature is pervaded with oscillatory behavior. In networks of coupled oscillators, patterns can arise when the system synchronizes to an external input. Hence, these networks provide processing and memory of input. We present a universal framework for harnessing oscillator networks as a computational resource. This reservoir computing framework is introduced using the ubiquitous model for phase-locking, the Kuramoto model. We force the Kuramoto model with a nonlinear target system; then, after the target system is replaced with a trained feedback loop, the network emulates the target system. Our results are two-fold. Firstly, the trained network inherits performance properties of the Kuramoto model, where all-to-all coupling is performed in linear time with respect to the number of nodes and parameters for synchronization are abundant. Secondly, the learning capabilities of the oscillator network can be explained using the Kuramoto model's order parameter. This work provides the foundation for utilizing nature's oscillator networks as a new class of information processing systems.
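The two properties named in this abstract, linear-time all-to-all coupling and the order parameter, can be illustrated with the textbook mean-field form of the Kuramoto model. The following is only a minimal sketch under stated assumptions, not the authors' reservoir framework: the function names (`order_parameter`, `kuramoto_step`, `simulate`), the Euler integrator, the Gaussian natural frequencies, and all parameter values are illustrative choices that do not come from the paper, and no external forcing or trained feedback loop is included.

```python
import cmath
import math
import random


def order_parameter(thetas):
    """Return (r, psi), where r * exp(i * psi) is the mean of exp(i * theta_j)."""
    z = sum(cmath.exp(1j * t) for t in thetas) / len(thetas)
    return abs(z), cmath.phase(z)


def kuramoto_step(thetas, omegas, coupling, dt):
    """One Euler step of the mean-field Kuramoto model.

    Each oscillator couples to the others only through (r, psi), so the
    all-to-all interaction costs O(N) per step instead of O(N^2).
    """
    r, psi = order_parameter(thetas)
    return [t + dt * (w + coupling * r * math.sin(psi - t))
            for t, w in zip(thetas, omegas)]


def simulate(n=200, coupling=4.0, dt=0.01, steps=5000, seed=0):
    """Integrate from random phases and return the final coherence r."""
    rng = random.Random(seed)
    thetas = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]
    omegas = [rng.gauss(0.0, 1.0) for _ in range(n)]  # assumed frequency spread
    for _ in range(steps):
        thetas = kuramoto_step(thetas, omegas, coupling, dt)
    return order_parameter(thetas)[0]
```

Calling `simulate()` with a coupling well above the synchronization threshold should return a coherence `r` close to 1, whereas weak coupling leaves `r` near 0; `r` is the quantity the abstract refers to as the order parameter.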

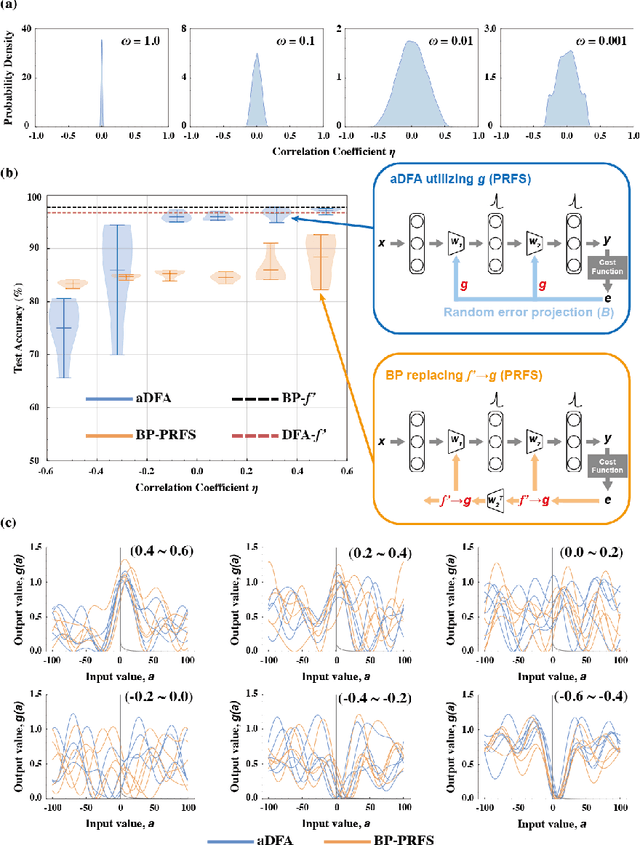

Training Spiking Neural Networks via Augmented Direct Feedback Alignment

Sep 12, 2024

Abstract:Spiking neural networks (SNNs), the models inspired by the mechanisms of real neurons in the brain, transmit and represent information by employing discrete action potentials or spikes. The sparse, asynchronous properties of information processing make SNNs highly energy efficient, making them promising solutions for implementing neural networks in neuromorphic devices. However, the nondifferentiable nature of SNN neurons makes it a challenge to train them. The current training methods of SNNs that are based on error backpropagation (BP) and precisely designed surrogate gradients are difficult to implement and biologically implausible, hindering the implementation of SNNs on neuromorphic devices. Thus, it is important to train SNNs with a method that is both physically implementable and biologically plausible. In this paper, we propose using augmented direct feedback alignment (aDFA), a gradient-free approach based on random projection, to train SNNs. This method requires only partial information of the forward process during training, so it is easy to implement and biologically plausible. We systematically demonstrate the feasibility of the proposed aDFA-SNNs scheme, propose its effective working range, and analyze its well-performing settings by employing a genetic algorithm. We also analyze the impact of crucial features of SNNs on the scheme, thus demonstrating its superiority and stability over BP and conventional direct feedback alignment. Our scheme can achieve competitive performance without accurate prior knowledge about the utilized system, thus providing a valuable reference for physically training SNNs.

How noise affects memory in linear recurrent networks

Sep 05, 2024

Abstract:The effects of noise on memory in a linear recurrent network are theoretically investigated. Memory is characterized by the network's ability to store previous inputs in its instantaneous state, which receives correlated or uncorrelated noise. Two major properties are revealed: First, the memory reduced by noise is uniquely determined by the noise's power spectral density (PSD). Second, the memory will not decrease regardless of noise intensity if the PSD is in a certain class of distribution (including power law). The results are verified using human brain signals, showing good agreement.

Informational Embodiment: Computational role of information structure in codes and robots

Aug 23, 2024

Abstract:The body morphology plays an important role in the way information is perceived and processed by an agent. We present an information theory (IT) account of how the precision of sensors, the accuracy of motors, their placement, and the body geometry shape the information structure in robots and computational codes. As an original idea, we envision the robot's body as a physical communication channel through which information is conveyed, in and out, despite intrinsic noise and material limitations. Following this, entropy, a measure of information and uncertainty, can be used to maximize the efficiency of robot design and of algorithmic codes per se. This is known as the principle of Entropy Maximization (PEM), introduced in biology by Barlow in 1969. Shannon's source coding theorem then provides a framework to compare different types of bodies in terms of sensorimotor information. In line with PEM, we introduce a special class of efficient codes used in IT that reach the Shannon limits in terms of information capacity for error correction, robustness against noise, and parsimony. These efficient codes, which insightfully exploit quantization and randomness, make it possible to deal with uncertainty, redundancy, and compactness. These features can be used for perception and control in intelligent systems. In various examples and closing discussions, we reflect on the broader implications of our framework, which we call Informational Embodiment, for motor theory and bio-inspired robotics, touching upon concepts like motor synergies, reservoir computing, and morphological computation. These insights can contribute to a deeper understanding of how information theory intersects with the embodiment of intelligence in both natural and artificial systems.

A Jellyfish Cyborg: Exploiting Natural Embodied Intelligence as Soft Robots

Aug 04, 2024

Abstract:In the advanced field of bio-inspired robotics, the emergence of cyborgs represents the successful integration of engineering and biological systems. Building on previous research that showed how electrical stimuli could initiate and speed up a jellyfish's movement, this study presents a groundbreaking approach that explores how the natural embodied intelligence of the animal can be harnessed to address pivotal challenges such as spontaneous exploration, navigation in various environments, control of whole-body motion, and real-time predictions of behavior. We have developed a comprehensive data acquisition system and a unique setup for stimulating jellyfish, allowing for a detailed study of their movements. Through careful analysis of both spontaneous behaviors and behaviors induced by targeted stimulation, we have identified subtle differences between natural and induced motion patterns. By using a machine learning method called physical reservoir computing, we have successfully shown that future behaviors can be accurately predicted by directly measuring the jellyfish's body shape when the stimuli align with the animal's natural dynamics. Our findings also reveal significant advancements in motion control and real-time prediction capabilities of jellyfish cyborgs. In summary, this research provides a comprehensive roadmap for optimizing the capabilities of jellyfish cyborgs, with potential implications in marine reconnaissance and sustainable ecological interventions.

Exploiting Chaotic Dynamics as Deep Neural Networks

May 29, 2024

Abstract:Chaos presents complex dynamics arising from nonlinearity and a sensitivity to initial states. These characteristics suggest a depth of expressivity that underscores their potential for advanced computational applications. However, strategies to effectively exploit chaotic dynamics for information processing have largely remained elusive. In this study, we reveal that the essence of chaos can be found in various state-of-the-art deep neural networks. Drawing inspiration from this revelation, we propose a novel method that directly leverages chaotic dynamics for deep learning architectures. Our approach is systematically evaluated across distinct chaotic systems. In all instances, our framework presents superior results to conventional deep neural networks in terms of accuracy, convergence speed, and efficiency. Furthermore, we found an active role of transient chaos formation in our scheme. Collectively, this study offers a new path for the integration of chaos, which has long been overlooked in information processing, and provides insights into the prospective fusion of chaotic dynamics within the domains of machine learning and neuromorphic computation.

Thermodynamic limit in learning period three

May 12, 2024

Abstract:A continuous one-dimensional map with period three includes all periods. This raises the following question: Can we obtain any types of periodic orbits solely by learning three data points? We consider learning period three with random neural networks and report the universal property associated with it. We first show that the trained networks have a thermodynamic limit that depends on the choice of target data and network settings. Our analysis reveals that almost all learned periods are unstable and each network has its characteristic attractors (which can even be untrained ones). Here, we propose the concept of characteristic bifurcation expressing embeddable attractors intrinsic to the network, in which the target data points and the scale of the network weights function as bifurcation parameters. In conclusion, learning period three generates various attractors through characteristic bifurcation due to the stability change in latently existing numerous unstable periods of the system.
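The opening statement of this abstract concerns continuous one-dimensional maps that possess a period-three orbit. As a rough illustration of what such an orbit looks like numerically, the sketch below uses the logistic map, which is an assumption made here for convenience and is not the random neural network studied in the paper. The helper names (`logistic`, `minimal_period`), the parameter value 3.83 (inside the map's well-known period-three window), and the tolerances are all hypothetical choices.

```python
def logistic(r):
    """Return the logistic map x -> r * x * (1 - x) for a fixed parameter r."""
    return lambda x: r * x * (1.0 - x)


def minimal_period(f, x0=0.5, transient=10000, max_period=16, tol=1e-9):
    """Estimate the period of the attracting orbit reached from x0.

    The orbit is iterated `transient` times to settle onto the attractor,
    then the smallest p with |f^p(x) - x| < tol is returned. None is
    returned if no period up to `max_period` is found (for example, when
    the attractor is chaotic).
    """
    x = x0
    for _ in range(transient):
        x = f(x)
    y = x
    for p in range(1, max_period + 1):
        y = f(y)
        if abs(y - x) < tol:
            return p
    return None
```

For instance, `minimal_period(logistic(3.83))` detects the stable three-cycle of the logistic map, while `minimal_period(logistic(3.2))` detects a two-cycle. Only stable orbits are found this way; the unstable periods that the abstract says dominate the learned networks would need a different method, such as root finding on the iterated map.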
