Shuhong Liu

Denoising the Deep Sky: Physics-Based CCD Noise Formation for Astronomical Imaging

Jan 30, 2026

Abstract: Astronomical imaging remains noise-limited under practical observing constraints, while standard calibration pipelines mainly remove structured artifacts and leave stochastic noise largely unresolved. Learning-based denoising is promising, yet progress is hindered by scarce paired training data and the need for physically interpretable and reproducible models in scientific workflows. We propose a physics-based noise synthesis framework tailored to CCD noise formation. The pipeline models photon shot noise, photo-response non-uniformity, dark-current noise, readout effects, and localized outliers arising from cosmic-ray hits and hot pixels. To obtain low-noise inputs for synthesis, we average multiple unregistered exposures to produce high-SNR bases. Realistic noisy counterparts synthesized from these bases using our noise model enable the construction of abundant paired datasets for supervised learning. We further introduce a real-world multi-band dataset acquired with two twin ground-based telescopes, providing paired raw frames and instrument-pipeline calibrated frames, together with calibration data and stacked high-SNR bases for real-world evaluation.
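As a rough illustration of the noise components named in this abstract, the following minimal sketch chains them in one plausible order. It is not the paper's implementation: the function names, the ordering of the stages, and every numeric value (dark rate, read noise, gain, bias, outlier probabilities) are assumptions chosen for illustration, and hot pixels are redrawn per frame here rather than fixed per sensor.

```python
import math
import random


def poisson(rng, lam):
    """Poisson sample: Knuth's method for small means, Gaussian approximation above 50."""
    if lam <= 0.0:
        return 0
    if lam > 50.0:
        return max(0, round(rng.gauss(lam, math.sqrt(lam))))
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def make_prnu_map(height, width, rng, sigma=0.01):
    """Fixed per-pixel gain pattern (photo-response non-uniformity), mean 1."""
    return [[rng.gauss(1.0, sigma) for _ in range(width)] for _ in range(height)]


def synthesize_noisy_frame(base_rate, prnu, exposure_s, rng,
                           dark_rate=0.05, hot_prob=1e-3, hot_gain=1000.0,
                           cosmic_prob=1e-3, cosmic_charge=5000.0,
                           read_sigma=5.0, gain=1.5, bias=100.0):
    """base_rate: 2D list of clean signal rates in e-/s; returns 16-bit ADU counts.

    All default parameter values are illustrative assumptions.
    """
    frame = []
    for rate_row, prnu_row in zip(base_rate, prnu):
        row = []
        for rate, response in zip(rate_row, prnu_row):
            # Photon shot noise on the PRNU-modulated signal.
            electrons = poisson(rng, max(rate * response, 0.0) * exposure_s)
            # Dark current; a hot pixel is modelled as a much higher dark rate.
            dark = dark_rate * (hot_gain if rng.random() < hot_prob else 1.0)
            electrons += poisson(rng, dark * exposure_s)
            # Cosmic-ray hit: a rare, large charge deposit in a single pixel.
            if rng.random() < cosmic_prob:
                electrons += cosmic_charge
            # Readout: Gaussian read noise, gain conversion, bias offset, quantization.
            adu = (electrons + rng.gauss(0.0, read_sigma)) / gain + bias
            row.append(int(min(max(round(adu), 0), 65535)))
        frame.append(row)
    return frame


def stack_mean(frames):
    """Pixel-wise mean of equally sized frames, used as a high-SNR base."""
    n = len(frames)
    return [[sum(f[i][j] for f in frames) / n for j in range(len(frames[0][0]))]
            for i in range(len(frames[0]))]


if __name__ == "__main__":
    rng = random.Random(0)
    base = [[100.0] * 8 for _ in range(8)]  # flat 100 e-/s test field
    prnu = make_prnu_map(8, 8, rng)
    noisy = synthesize_noisy_frame(base, prnu, exposure_s=10.0, rng=rng)
    print(noisy[0])
```

Pairing each output of `synthesize_noisy_frame` with the clean base it was generated from gives one supervised training pair; `stack_mean` stands in for the averaging step that produces such a base from repeated exposures.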

RealX3D: A Physically-Degraded 3D Benchmark for Multi-view Visual Restoration and Reconstruction

Dec 29, 2025

Abstract: We introduce RealX3D, a real-capture benchmark for multi-view visual restoration and 3D reconstruction under diverse physical degradations. RealX3D groups corruptions into four families (illumination, scattering, occlusion, and blurring) and captures each at multiple severity levels using a unified acquisition protocol that yields pixel-aligned LQ/GT views. Each scene includes high-resolution capture, RAW images, and dense laser scans, from which we derive world-scale meshes and metric depth. Benchmarking a broad range of optimization-based and feed-forward methods shows substantial degradation in reconstruction quality under physical corruptions, underscoring the fragility of current multi-view pipelines in challenging real-world environments.

Hierarchical Structure-Property Alignment for Data-Efficient Molecular Generation and Editing

Nov 11, 2025

Abstract: Property-constrained molecular generation and editing are crucial in AI-driven drug discovery but remain hindered by two factors: (i) capturing the complex relationships between molecular structures and multiple properties remains challenging, and (ii) the narrow coverage and incomplete annotations of molecular properties weaken the effectiveness of property-based models. To tackle these limitations, we propose HSPAG, a data-efficient framework featuring hierarchical structure-property alignment. By treating SMILES and molecular properties as complementary modalities, the model learns their relationships at atom, substructure, and whole-molecule levels. Moreover, we select representative samples through scaffold clustering and hard samples via an auxiliary variational auto-encoder (VAE), substantially reducing the required pre-training data. In addition, we incorporate a property relevance-aware masking mechanism and diversified perturbation strategies to enhance generation quality under sparse annotations. Experiments demonstrate that HSPAG captures fine-grained structure-property relationships and supports controllable generation under multiple property constraints. Two real-world case studies further validate the editing capabilities of HSPAG.

DenseSplat: Densifying Gaussian Splatting SLAM with Neural Radiance Prior

Feb 13, 2025

Abstract: Gaussian SLAM systems excel in real-time rendering and fine-grained reconstruction compared to NeRF-based systems. However, their reliance on extensive keyframes is impractical for deployment in real-world robotic systems, which typically operate under sparse-view conditions that can result in substantial holes in the map. To address these challenges, we introduce DenseSplat, the first SLAM system that effectively combines the advantages of NeRF and 3DGS. DenseSplat utilizes sparse keyframes and NeRF priors for initializing primitives that densely populate maps and seamlessly fill gaps. It also implements geometry-aware primitive sampling and pruning strategies to manage granularity and enhance rendering efficiency. Moreover, DenseSplat integrates loop closure and bundle adjustment, significantly enhancing frame-to-frame tracking accuracy. Extensive experiments on multiple large-scale datasets demonstrate that DenseSplat achieves superior performance in tracking and mapping compared to current state-of-the-art methods.

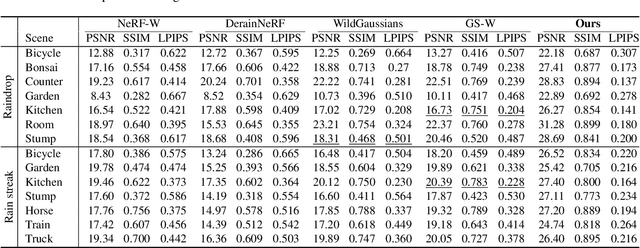

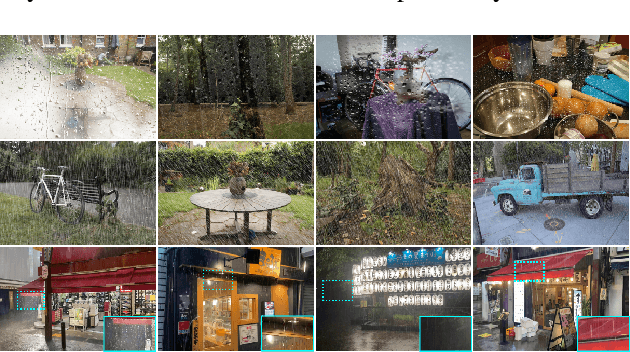

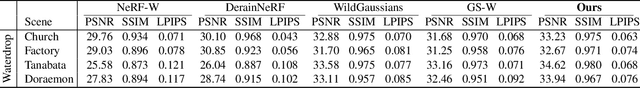

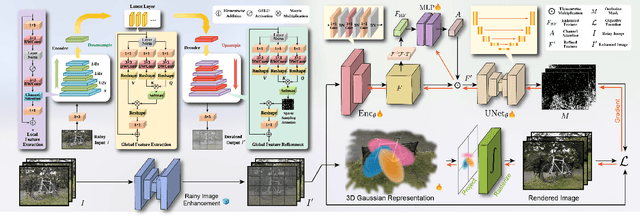

DeRainGS: Gaussian Splatting for Enhanced Scene Reconstruction in Rainy Environments

Aug 22, 2024

Abstract: Reconstruction under adverse rainy conditions poses significant challenges due to reduced visibility and the distortion of visual perception. These conditions can severely impair the quality of geometric maps, which is essential for applications ranging from autonomous planning to environmental monitoring. In response to these challenges, this study introduces the novel task of 3D Reconstruction in Rainy Environments (3DRRE), specifically designed to address the complexities of reconstructing 3D scenes under rainy conditions. To benchmark this task, we construct the HydroViews dataset that comprises a diverse collection of both synthesized and real-world scene images characterized by various intensities of rain streaks and raindrops. Furthermore, we propose DeRainGS, the first 3DGS method tailored for reconstruction in adverse rainy environments. Extensive experiments across a wide range of rain scenarios demonstrate that our method delivers state-of-the-art performance, remarkably outperforming existing occlusion-free methods.

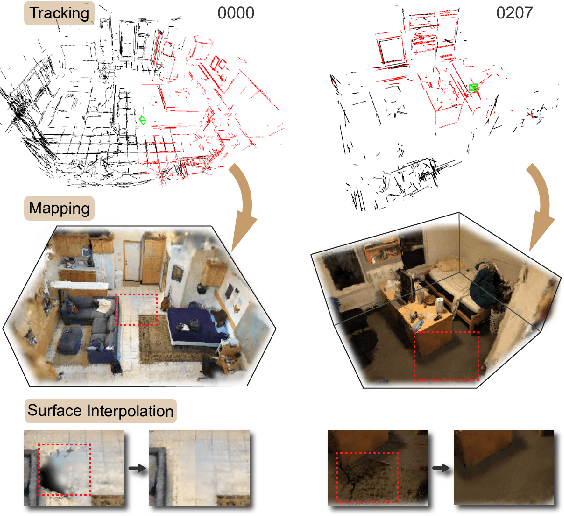

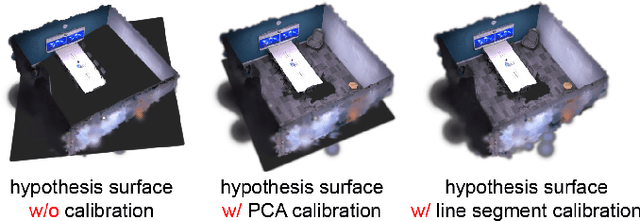

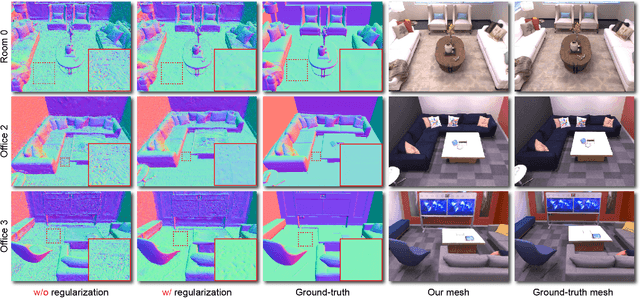

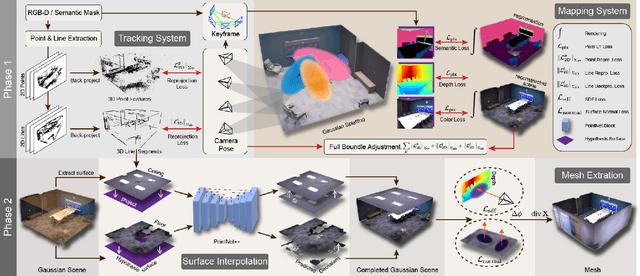

Structure Gaussian SLAM with Manhattan World Hypothesis

May 30, 2024

Abstract: Gaussian SLAM systems have made significant advancements in improving the efficiency and fidelity of real-time reconstructions. However, these systems often encounter incomplete reconstructions in complex indoor environments, characterized by substantial holes due to unobserved geometry caused by obstacles or limited view angles. To address this challenge, we present Manhattan Gaussian SLAM (MG-SLAM), an RGB-D system that leverages the Manhattan World hypothesis to enhance geometric accuracy and completeness. By seamlessly integrating fused line segments derived from structured scenes, MG-SLAM ensures robust tracking in textureless indoor areas. Moreover, the extracted lines and planar surface assumption allow strategic interpolation of new Gaussians in regions of missing geometry, enabling efficient scene completion. Extensive experiments conducted on both synthetic and real-world scenes demonstrate that these advancements enable our method to achieve state-of-the-art performance, marking a substantial improvement in the capabilities of Gaussian SLAM systems.

Exploiting Chaotic Dynamics as Deep Neural Networks

May 29, 2024

Abstract: Chaos presents complex dynamics arising from nonlinearity and a sensitivity to initial states. These characteristics suggest a depth of expressivity that underscores their potential for advanced computational applications. However, strategies to effectively exploit chaotic dynamics for information processing have largely remained elusive. In this study, we reveal that the essence of chaos can be found in various state-of-the-art deep neural networks. Drawing inspiration from this revelation, we propose a novel method that directly leverages chaotic dynamics for deep learning architectures. Our approach is systematically evaluated across distinct chaotic systems. In all instances, our framework presents superior results to conventional deep neural networks in terms of accuracy, convergence speed, and efficiency. Furthermore, we found an active role of transient chaos formation in our scheme. Collectively, this study offers a new path for the integration of chaos, which has long been overlooked in information processing, and provides insights into the prospective fusion of chaotic dynamics within the domains of machine learning and neuromorphic computation.
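The "sensitivity to initial states" mentioned in this abstract can be seen in a textbook system. The sketch below uses the logistic map, which is an assumption made for illustration and is not stated to be one of the chaotic systems evaluated in the paper; it shows only the sensitivity property, not the proposed learning method. The helper names and the starting values are likewise hypothetical.

```python
def logistic_orbit(x0, r=4.0, steps=80):
    """Iterate the logistic map x -> r * x * (1 - x), returning the whole orbit."""
    orbit = [x0]
    for _ in range(steps):
        orbit.append(r * orbit[-1] * (1.0 - orbit[-1]))
    return orbit


def divergence(x0, delta=1e-10, **kwargs):
    """Absolute gap at each step between two orbits started `delta` apart."""
    a = logistic_orbit(x0, **kwargs)
    b = logistic_orbit(x0 + delta, **kwargs)
    return [abs(x - y) for x, y in zip(a, b)]


if __name__ == "__main__":
    gaps = divergence(0.2)  # r = 4.0 is in the chaotic regime
    for step in (0, 10, 20, 30, 40):
        print(step, gaps[step])
```

At `r=4.0` the gap between the two orbits roughly doubles per step until it is of order one, whereas at a non-chaotic setting such as `r=2.5` both orbits settle onto the same fixed point and the gap shrinks.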

Multi-Modal UAV Detection, Classification and Tracking Algorithm -- Technical Report for CVPR 2024 UG2 Challenge

May 26, 2024

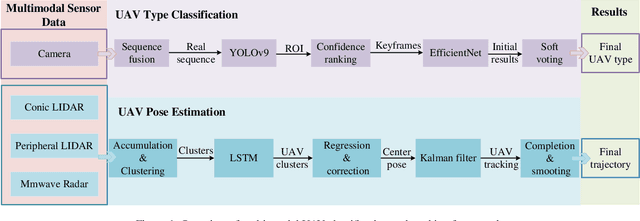

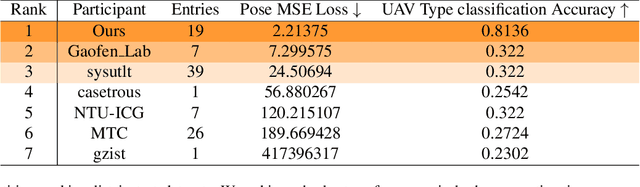

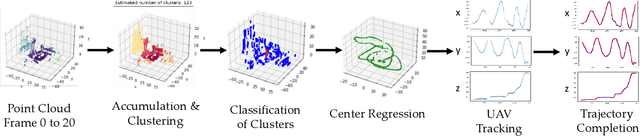

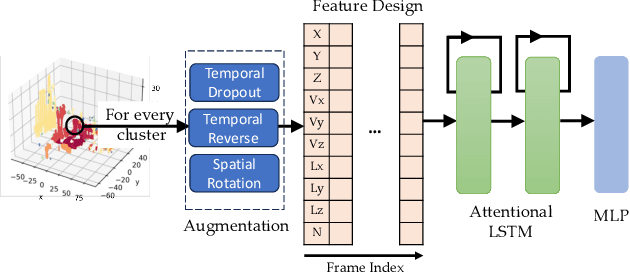

Abstract: This technical report presents the first-place model for UG2+, a task in the CVPR 2024 UAV Tracking and Pose-Estimation Challenge. The challenge poses difficulties in drone detection, UAV-type classification, and 2D/3D trajectory estimation in extreme weather conditions with multi-modal sensor information, including stereo vision, various LiDARs, radars, and audio arrays. Leveraging this information, we propose a multi-modal UAV detection, classification, and 3D tracking method for accurate UAV classification and tracking. A novel classification pipeline which incorporates sequence fusion, region of interest (ROI) cropping, and keyframe selection is proposed. Our system integrates cutting-edge classification techniques and sophisticated post-processing steps to boost accuracy and robustness. The designed pose estimation pipeline incorporates three modules: dynamic points analysis, a multi-object tracker, and trajectory completion techniques. Extensive experiments have validated the effectiveness and precision of our approach. In addition, we propose a novel dataset pre-processing method and conduct a comprehensive ablation study for our design. Our method achieved the best classification and tracking performance on the MMUAD dataset. The code and configuration of our method are available at https://github.com/dtc111111/Multi-Modal-UAV.

MoD-SLAM: Monocular Dense Mapping for Unbounded 3D Scene Reconstruction

Feb 09, 2024

Abstract: Neural implicit representations have recently been demonstrated in many fields, including Simultaneous Localization And Mapping (SLAM). Current neural SLAM can achieve ideal results in reconstructing bounded scenes, but this relies on the input of RGB-D images. Neural SLAM that relies only on RGB images is unable to reconstruct the scale of the scene accurately, and it also suffers from scale drift due to errors accumulated during tracking. To overcome these limitations, we present MoD-SLAM, a monocular dense mapping method that allows global pose optimization and 3D reconstruction in real-time in unbounded scenes. Optimizing scene reconstruction by monocular depth estimation and using loop closure detection to update camera pose enable detailed and precise reconstruction of large scenes. Compared to previous work, our approach is more robust, scalable and versatile. Our experiments demonstrate that MoD-SLAM achieves better mapping performance than prior neural SLAM methods, especially in large unbounded scenes.

SGS-SLAM: Semantic Gaussian Splatting For Neural Dense SLAM

Feb 05, 2024

Abstract: Semantic understanding plays a crucial role in Dense Simultaneous Localization and Mapping (SLAM), facilitating comprehensive scene interpretation. Recent advancements that integrate Gaussian Splatting into SLAM systems have demonstrated its effectiveness in generating high-quality renderings through the use of explicit 3D Gaussian representations. Building on this progress, we propose SGS-SLAM, the first semantic dense visual SLAM system grounded in 3D Gaussians, which provides precise 3D semantic segmentation alongside high-fidelity reconstructions. Specifically, we propose to employ multi-channel optimization during the mapping process, integrating appearance, geometric, and semantic constraints with key-frame optimization to enhance reconstruction quality. Extensive experiments demonstrate that SGS-SLAM delivers state-of-the-art performance in camera pose estimation, map reconstruction, and semantic segmentation, outperforming existing methods while preserving real-time rendering ability.
