Bruno Gas

A New Nonlinear speaker parameterization algorithm for speaker identification

Apr 06, 2022

Abstract:In this paper we propose a new parameterization algorithm based on nonlinear prediction, which is an extension of the classical LPC parameters. The parameters performances are estimated by two different methods: the Arithmetic-Harmonic Sphericity (AHS) and the Auto-Regressive Vector Model (ARVM). Two different methods are proposed for the parameterization based on the Neural Predictive Coding (NPC): classical neural networks initialization and linear initialization. We applied these two parameters to speaker identification. The fist parameters obtained smaller rates. We show for the first parameters how they can be combined with the classical parameters (LPCC, MFCC, etc.) in order to improve the results of only one classical parameterization (MFCC provides 97.55% and MFCC+NPC 98.78%). For the linear initialization, we obtain 100% which is great improvement. This study opens a new way towards different parameterization schemes that offer better accuracy on speaker recognition tasks.

* 5 pages, published in The speaker and Language recognition Workshop. ISCA tutorial and research Workshop. ISBN 84-7490-722-5, May 31 -- June 3, 2004

Learning agent's spatial configuration from sensorimotor invariants

Oct 03, 2018

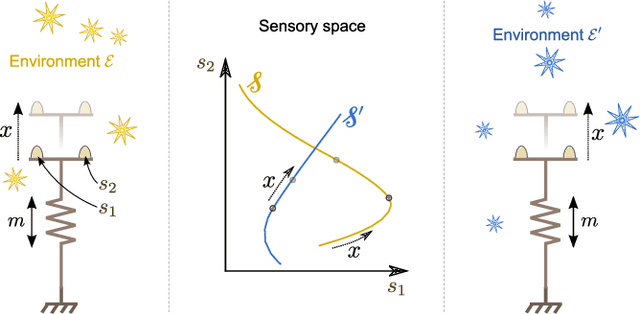

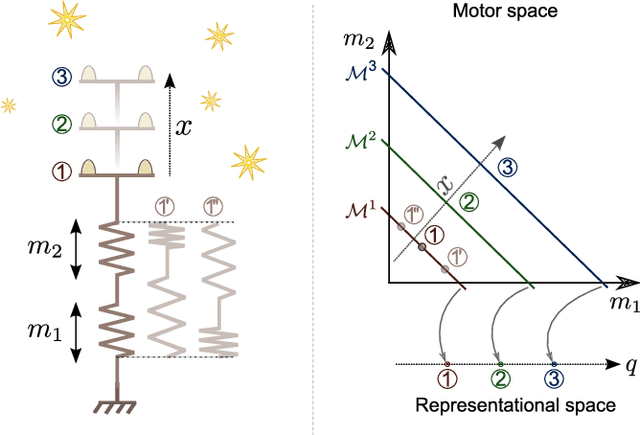

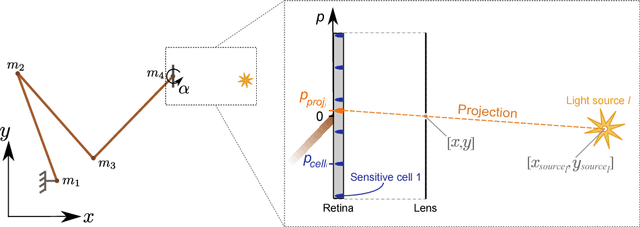

Abstract:The design of robotic systems is largely dictated by our purely human intuition about how we perceive the world. This intuition has been proven incorrect with regard to a number of critical issues, such as visual change blindness. In order to develop truly autonomous robots, we must step away from this intuition and let robotic agents develop their own way of perceiving. The robot should start from scratch and gradually develop perceptual notions, under no prior assumptions, exclusively by looking into its sensorimotor experience and identifying repetitive patterns and invariants. One of the most fundamental perceptual notions, space, cannot be an exception to this requirement. In this paper we look into the prerequisites for the emergence of simplified spatial notions on the basis of a robot's sensorimotor flow. We show that the notion of space as environment-independent cannot be deduced solely from exteroceptive information, which is highly variable and is mainly determined by the contents of the environment. The environment-independent definition of space can be approached by looking into the functions that link the motor commands to changes in exteroceptive inputs. In a sufficiently rich environment, the kernels of these functions correspond uniquely to the spatial configuration of the agent's exteroceptors. We simulate a redundant robotic arm with a retina installed at its end-point and show how this agent can learn the configuration space of its retina. The resulting manifold has the topology of the Cartesian product of a plane and a circle, and corresponds to the planar position and orientation of the retina.

* 26 pages, 5 images, published in Robotics and Autonomous Systems

A Non-linear Approach to Space Dimension Perception by a Naive Agent

Oct 03, 2018

Abstract:Developmental Robotics offers a new approach to numerous AI features that are often taken as granted. Traditionally, perception is supposed to be an inherent capacity of the agent. Moreover, it largely relies on models built by the system's designer. A new approach is to consider perception as an experimentally acquired ability that is learned exclusively through the analysis of the agent's sensorimotor flow. Previous works, based on H.Poincar\'e's intuitions and the sensorimotor contingencies theory, allow a simulated agent to extract the dimension of geometrical space in which it is immersed without any a priori knowledge. Those results are limited to infinitesimal movement's amplitude of the system. In this paper, a non-linear dimension estimation method is proposed to push back this limitation.

Learning an internal representation of the end-effector configuration space

Oct 03, 2018

Abstract:Current machine learning techniques proposed to automatically discover a robot kinematics usually rely on a priori information about the robot's structure, sensors properties or end-effector position. This paper proposes a method to estimate a certain aspect of the forward kinematics model with no such information. An internal representation of the end-effector configuration is generated from unstructured proprioceptive and exteroceptive data flow under very limited assumptions. A mapping from the proprioceptive space to this representational space can then be used to control the robot.

Discovering space - Grounding spatial topology and metric regularity in a naive agent's sensorimotor experience

Oct 03, 2018

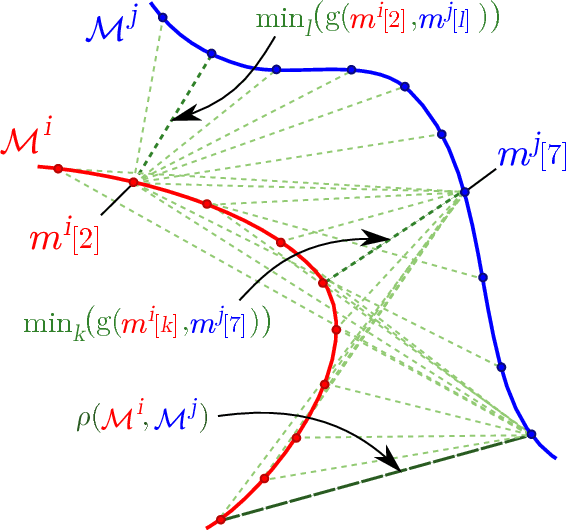

Abstract:In line with the sensorimotor contingency theory, we investigate the problem of the perception of space from a fundamental sensorimotor perspective. Despite its pervasive nature in our perception of the world, the origin of the concept of space remains largely mysterious. For example in the context of artificial perception, this issue is usually circumvented by having engineers pre-define the spatial structure of the problem the agent has to face. We here show that the structure of space can be autonomously discovered by a naive agent in the form of sensorimotor regularities, that correspond to so called compensable sensory experiences: these are experiences that can be generated either by the agent or its environment. By detecting such compensable experiences the agent can infer the topological and metric structure of the external space in which its body is moving. We propose a theoretical description of the nature of these regularities and illustrate the approach on a simulated robotic arm equipped with an eye-like sensor, and which interacts with an object. Finally we show how these regularities can be used to build an internal representation of the sensor's external spatial configuration.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge