Christian Blum

irace-evo: Automatic Algorithm Configuration Extended With LLM-Based Code Evolution

Nov 15, 2025

Abstract: Automatic algorithm configuration tools such as irace efficiently tune parameter values but leave algorithmic code unchanged. This paper introduces a first version of irace-evo, an extension of irace that integrates code evolution through large language models (LLMs) to jointly explore parameter and code spaces. The proposed framework enables multi-language support (e.g., C++, Python), reduces token consumption via progressive context management, and employs the Always-From-Original principle to ensure robust and controlled code evolution. We evaluate irace-evo on the Construct, Merge, Solve & Adapt (CMSA) metaheuristic for the Variable-Sized Bin Packing Problem (VSBPP). Experimental results show that irace-evo can discover new algorithm variants that outperform the state-of-the-art CMSA implementation while maintaining low computational and monetary costs. Notably, irace-evo generates competitive algorithmic improvements using lightweight models (e.g., Claude Haiku 3.5) with a total usage cost under 2 euros. These results demonstrate that coupling automatic configuration with LLM-driven code evolution provides a powerful, cost-efficient avenue for advancing heuristic design and metaheuristic optimization.

Combinatorial Optimization for All: Using LLMs to Aid Non-Experts in Improving Optimization Algorithms

Mar 14, 2025

Abstract: Large Language Models (LLMs) have shown notable potential in code generation for optimization algorithms, unlocking exciting new opportunities. This paper examines how LLMs, rather than creating algorithms from scratch, can improve existing ones without the need for specialized expertise. To explore this potential, we selected 10 baseline optimization algorithms from various domains (metaheuristics, reinforcement learning, deterministic, and exact methods) to solve the classic Travelling Salesman Problem. The results show that our simple methodology often results in LLM-generated algorithm variants that improve over the baseline algorithms in terms of solution quality, reduction in computational time, and simplification of code complexity, all without requiring specialized optimization knowledge or advanced algorithmic implementation skills.

Multi-Agent Systems Powered by Large Language Models: Applications in Swarm Intelligence

Mar 05, 2025

Abstract: This work examines the integration of large language models (LLMs) into multi-agent simulations by replacing the hard-coded programs of agents with LLM-driven prompts. The proposed approach is showcased in the context of two examples of complex systems from the field of swarm intelligence: ant colony foraging and bird flocking. Central to this study is a toolchain that integrates LLMs with the NetLogo simulation platform, leveraging its Python extension to enable communication with GPT-4o via the OpenAI API. This toolchain facilitates prompt-driven behavior generation, allowing agents to respond adaptively to environmental data. For both example applications mentioned above, we employ both structured, rule-based prompts and autonomous, knowledge-driven prompts. Our work demonstrates how this toolchain enables LLMs to study self-organizing processes and induce emergent behaviors within multi-agent environments, paving the way for new approaches to exploring intelligent systems and modeling swarm intelligence inspired by natural phenomena. We provide the code, including simulation files and data at https://github.com/crjimene/swarm_gpt.

Improving Existing Optimization Algorithms with LLMs

Feb 12, 2025

Abstract: The integration of Large Language Models (LLMs) into optimization has created a powerful synergy, opening exciting research opportunities. This paper investigates how LLMs can enhance existing optimization algorithms. Using their pre-trained knowledge, we demonstrate their ability to propose innovative heuristic variations and implementation strategies. To evaluate this, we applied a non-trivial optimization algorithm, Construct, Merge, Solve and Adapt (CMSA) -- a hybrid metaheuristic for combinatorial optimization problems that incorporates a heuristic in the solution construction phase. Our results show that an alternative heuristic proposed by GPT-4o outperforms the expert-designed heuristic of CMSA, with the performance gap widening on larger and denser graphs. Project URL: https://imp-opt-algo-llms.surge.sh/

VisGraphVar: A Benchmark Generator for Assessing Variability in Graph Analysis Using Large Vision-Language Models

Nov 22, 2024

Abstract: The fast advancement of Large Vision-Language Models (LVLMs) has shown immense potential. These models are increasingly capable of tackling abstract visual tasks. Geometric structures, particularly graphs with their inherent flexibility and complexity, serve as an excellent benchmark for evaluating these models' predictive capabilities. While human observers can readily identify subtle visual details and perform accurate analyses, our investigation reveals that state-of-the-art LVLMs exhibit consistent limitations in specific visual graph scenarios, especially when confronted with stylistic variations. In response to these challenges, we introduce VisGraphVar (Visual Graph Variability), a customizable benchmark generator able to produce graph images for seven distinct task categories (detection, classification, segmentation, pattern recognition, link prediction, reasoning, matching), designed to systematically evaluate the strengths and limitations of individual LVLMs. We use VisGraphVar to produce 990 graph images and evaluate six LVLMs, employing two distinct prompting strategies, namely zero-shot and chain-of-thought. The findings demonstrate that variations in visual attributes of images (e.g., node labeling and layout) and the deliberate inclusion of visual imperfections, such as overlapping nodes, significantly affect model performance. This research emphasizes the importance of a comprehensive evaluation across graph-related tasks, extending beyond reasoning alone. VisGraphVar offers valuable insights to guide the development of more reliable and robust systems capable of performing advanced visual graph analysis.

A Learning Search Algorithm for the Restricted Longest Common Subsequence Problem

Oct 15, 2024

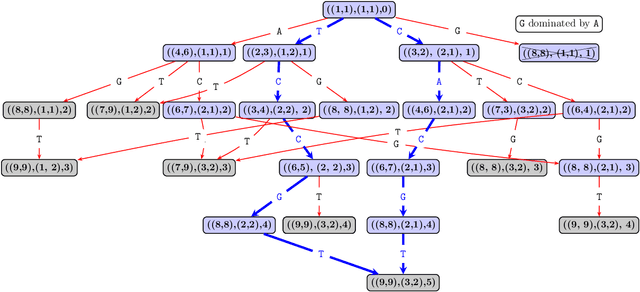

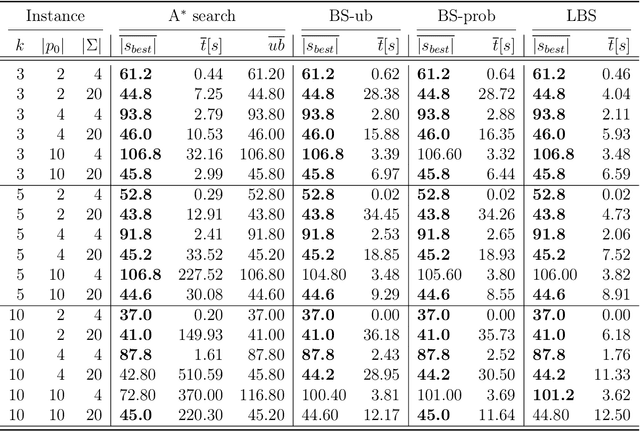

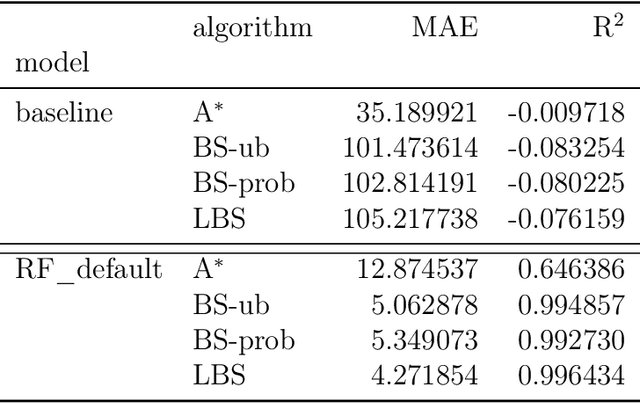

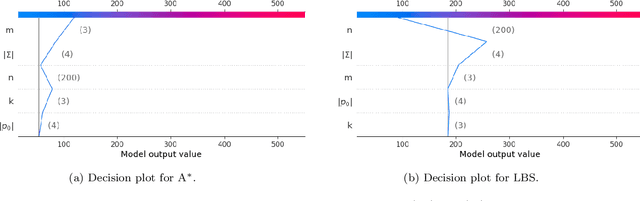

Abstract: This paper addresses the Restricted Longest Common Subsequence (RLCS) problem, an extension of the well-known Longest Common Subsequence (LCS) problem. This problem has significant applications in bioinformatics, particularly for identifying similarities and discovering mutual patterns and important motifs among DNA, RNA, and protein sequences. Building on recent advancements in solving this problem through a general search framework, this paper introduces two novel heuristic approaches designed to enhance the search process by steering it towards promising regions in the search space. The first heuristic employs a probabilistic model to evaluate partial solutions during the search process. The second heuristic is based on a neural network model trained offline using a genetic algorithm. A key aspect of this approach is extracting problem-specific features of partial solutions and the complete problem instance. An effective hybrid method, referred to as the learning beam search, is developed by combining the trained neural network model with a beam search framework. An important contribution of this paper is found in the generation of real-world instances where scientific abstracts serve as input strings, and a set of frequently occurring academic words from the literature are used as restricted patterns. Comprehensive experimental evaluations demonstrate the effectiveness of the proposed approaches in solving the RLCS problem. Finally, an empirical explainability analysis is applied to the obtained results. In this way, key feature combinations and their respective contributions to the success or failure of the algorithms across different problem types are identified.

Accelerating the k-means++ Algorithm by Using Geometric Information

Aug 23, 2024

Abstract: In this paper, we propose an acceleration of the exact k-means++ algorithm using geometric information, specifically the Triangle Inequality and additional norm filters, along with a two-step sampling procedure. Our experiments demonstrate that the accelerated version outperforms the standard k-means++ version in terms of the number of visited points and distance calculations, achieving greater speedup as the number of clusters increases. The version utilizing the Triangle Inequality is particularly effective for low-dimensional data, while the additional norm-based filter enhances performance in high-dimensional instances with greater norm variance among points. Additional experiments show the behavior of our algorithms when executed concurrently across multiple jobs and examine how memory performance impacts practical speedup.
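
The idea of skipping distance calculations during k-means++ seeding can be illustrated with a minimal sketch. It is not the authors' implementation: the function names (`sq_dist`, `kmeanspp_seed`), the counting scheme, and the exact form of the norm filter (here, the reverse triangle inequality on vector norms) are assumptions made for illustration, and the two-step sampling procedure mentioned in the abstract is not reproduced. The sketch assumes `k` does not exceed the number of distinct points.

```python
import math
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeanspp_seed(points, k, rng, use_filters=True):
    """Exact k-means++ seeding with optional geometric filters (illustrative).

    Returns (center_indices, d2, calcs), where d2[i] is the squared distance
    from point i to its closest chosen center and calcs counts
    point-to-center distance evaluations only (center-to-center distances
    are not counted).
    """
    n = len(points)
    norms = [math.sqrt(sum(x * x for x in p)) for p in points]

    first = rng.randrange(n)
    centers = [first]
    d2 = [sq_dist(p, points[first]) for p in points]
    owner = [0] * n  # position in `centers` of each point's closest center
    calcs = n

    while len(centers) < k:
        # D^2 sampling: next center drawn with probability proportional to d2.
        new = rng.choices(range(n), weights=d2, k=1)[0]
        # Squared distances from the new center to every existing center.
        cc2 = [sq_dist(points[new], points[c]) for c in centers]
        new_pos = len(centers)
        centers.append(new)

        for i, p in enumerate(points):
            if use_filters:
                # Triangle inequality: if d(new, owner) >= 2 * d(p, owner),
                # then d(p, new) >= d(p, owner), so the new center cannot win.
                if cc2[owner[i]] >= 4.0 * d2[i]:
                    continue
                # Norm filter (assumed form): | ||p|| - ||new|| | <= d(p, new).
                if (norms[i] - norms[new]) ** 2 >= d2[i]:
                    continue
            dist = sq_dist(p, points[new])
            calcs += 1
            if dist < d2[i]:
                d2[i] = dist
                owner[i] = new_pos
    return centers, d2, calcs


if __name__ == "__main__":
    data_rng = random.Random(0)
    blobs = [(0, 0), (10, 0), (0, 10), (10, 10)]
    pts = [(data_rng.gauss(cx, 0.5), data_rng.gauss(cy, 0.5))
           for cx, cy in blobs for _ in range(100)]
    _, _, filtered = kmeanspp_seed(pts, 4, random.Random(1))
    print("distance calculations:", filtered, "vs. unfiltered", 4 * len(pts))
```

Both filters only skip points whose closest-center distance provably cannot decrease, so the seeding stays exact; the unfiltered variant (`use_filters=False`) always performs `k * n` point-to-center distance calculations.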

Metaheuristics and Large Language Models Join Forces: Towards an Integrated Optimization Approach

May 28, 2024

Abstract: Since the rise of Large Language Models (LLMs) a couple of years ago, researchers in metaheuristics (MHs) have wondered how to use their power in a beneficial way within their algorithms. This paper introduces a novel approach that leverages LLMs as pattern recognition tools to improve MHs. The resulting hybrid method, tested in the context of a social network-based combinatorial optimization problem, outperforms existing state-of-the-art approaches that combine machine learning with MHs regarding the obtained solution quality. By carefully designing prompts, we demonstrate that the output obtained from LLMs can be used as problem knowledge, leading to improved results. Lastly, we acknowledge LLMs' potential drawbacks and limitations and consider it essential to examine them to advance this type of research further.

Large Language Models for the Automated Analysis of Optimization Algorithms

Feb 13, 2024

Abstract: The ability of Large Language Models (LLMs) to generate high-quality text and code has fuelled their rise in popularity. In this paper, we aim to demonstrate the potential of LLMs within the realm of optimization algorithms by integrating them into STNWeb. This is a web-based tool for the generation of Search Trajectory Networks (STNs), which are visualizations of optimization algorithm behavior. Although visualizations produced by STNWeb can be very informative for algorithm designers, they often require a certain level of prior knowledge to be interpreted. In an attempt to bridge this knowledge gap, we have incorporated LLMs, specifically GPT-4, into STNWeb to produce extensive written reports, complemented by automatically generated plots, thereby enhancing the user experience and reducing the barriers to the adoption of this tool by the research community. Moreover, our approach can be expanded to other tools from the optimization community, showcasing the versatility and potential of LLMs in this field.

Saliency-Driven Hierarchical Learned Image Coding for Machines

Feb 27, 2023

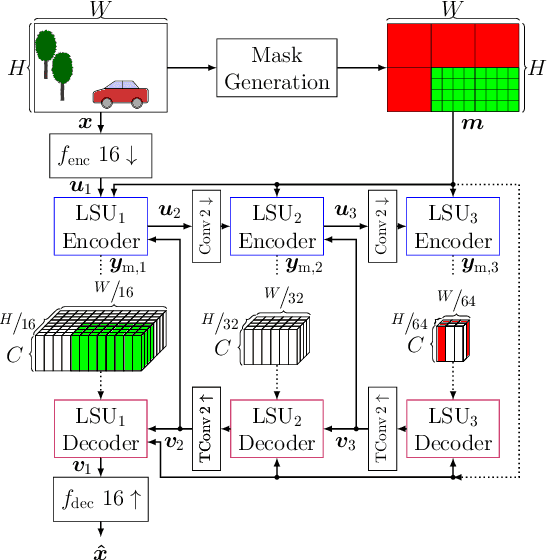

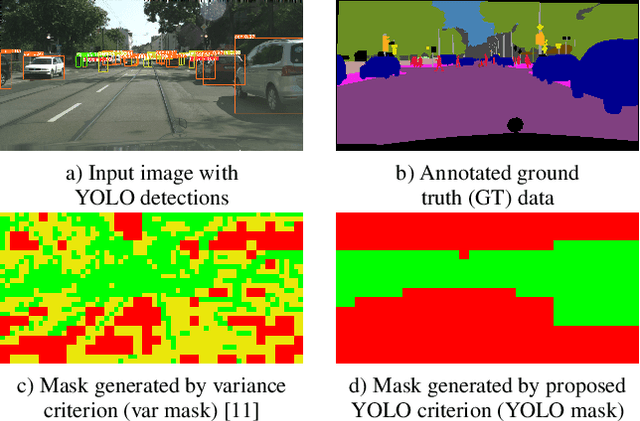

Abstract: We propose to employ a saliency-driven hierarchical neural image compression network for a machine-to-machine communication scenario following the compress-then-analyze paradigm. By that, different areas of the image are coded at different qualities depending on whether salient objects are located in the corresponding area. Areas without saliency are transmitted in latent spaces of lower spatial resolution in order to reduce the bitrate. The saliency information is explicitly derived from the detections of an object detection network. Furthermore, we propose to add saliency information to the training process in order to further specialize the different latent spaces. All in all, our hierarchical model with all proposed optimizations achieves 77.1 % bitrate savings over the latest video coding standard VVC on the Cityscapes dataset and with Mask R-CNN as analysis network at the decoder side. Thereby, it also outperforms traditional, non-hierarchical compression networks.
