Guido Schillaci

Predictive Processing in Cognitive Robotics: a Review

Jan 22, 2021

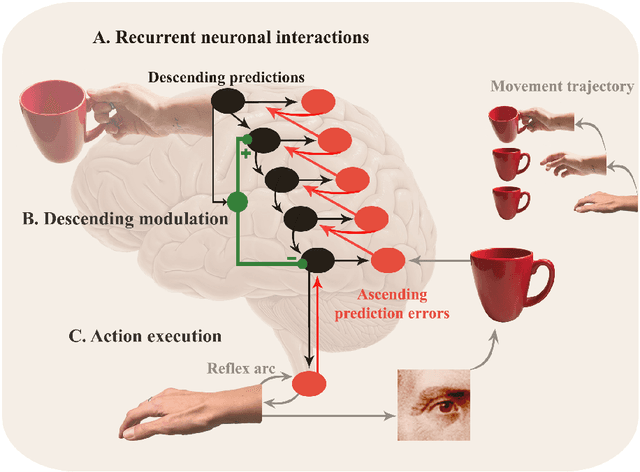

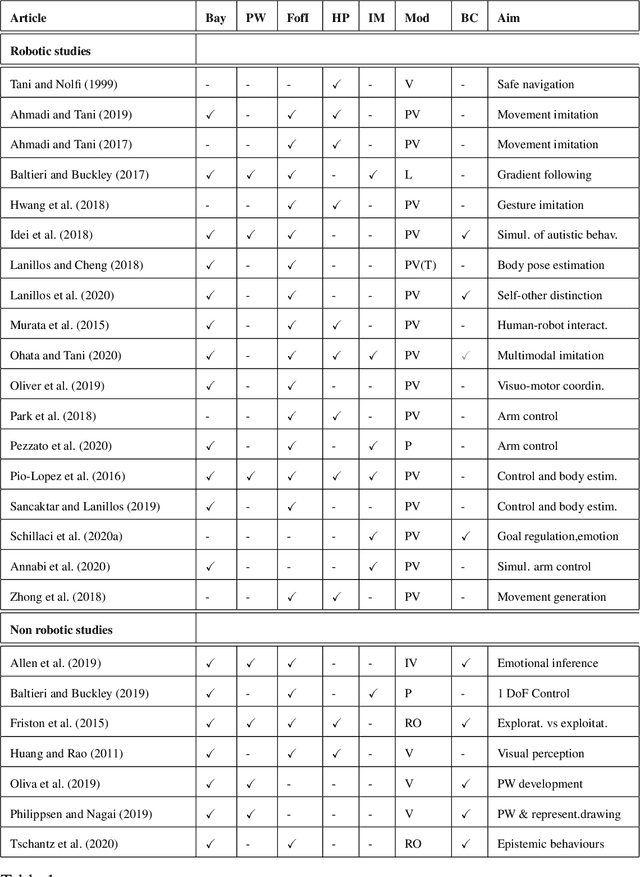

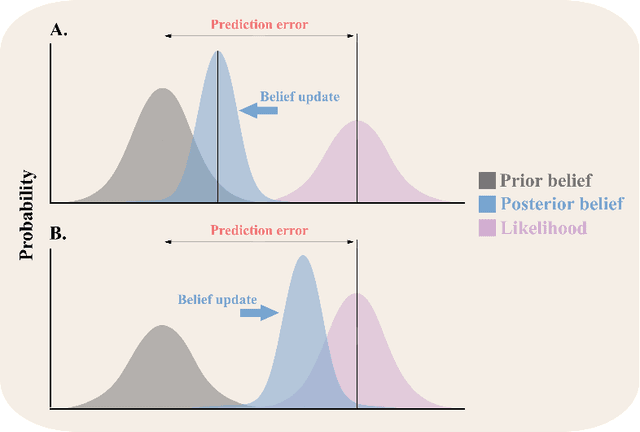

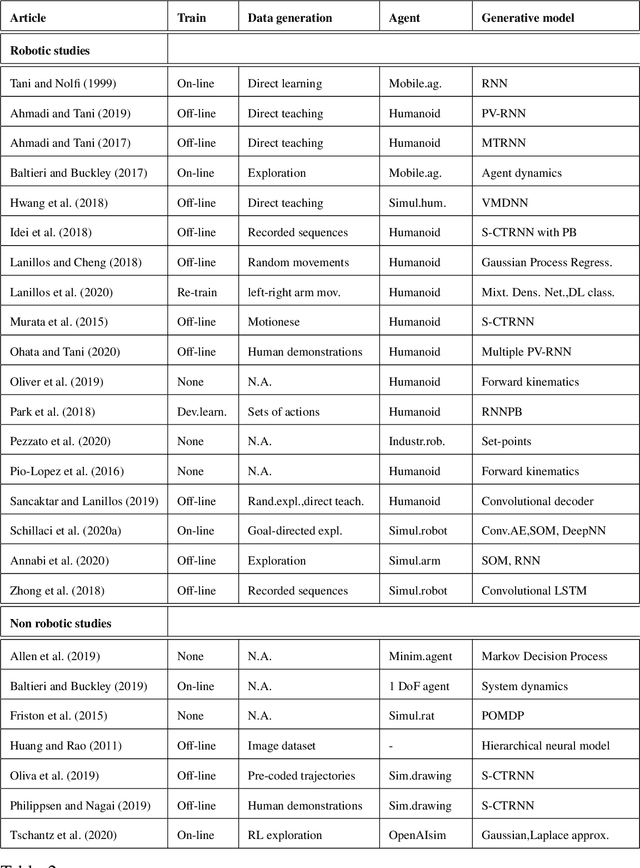

Abstract: Predictive processing has become an influential framework in the cognitive sciences. This framework turns the traditional view of perception upside down, claiming that the main flow of information processing is realized in a top-down, hierarchical manner. Furthermore, it aims at unifying perception, cognition, and action as a single inferential process. However, in the related literature, the predictive processing framework and its associated schemes, such as predictive coding, active inference, perceptual inference, and the free-energy principle, tend to be used interchangeably. In the field of cognitive robotics, there is no clear-cut distinction as to which schemes have been implemented and under which assumptions. In this paper, working definitions are set with the main aim of analyzing the state of the art in cognitive robotics research working under the predictive processing framework, as well as some related non-robotic models. The analysis suggests that, first, research on both cognitive robotics implementations and non-robotic models needs to be extended to the study of how multiple exteroceptive modalities can be integrated into prediction error minimization schemes. Second, a relevant distinction found here is that cognitive robotics implementations tend to emphasize the learning of a generative model, whereas in non-robotic models it is almost absent. Third, despite its relevance for active inference, few cognitive robotics implementations examine the issues around control and whether it should result from the substitution of inverse models with proprioceptive predictions. Finally, limited attention has been paid to precision weighting and the tracking of prediction error dynamics. These mechanisms should help to explore more complex behaviors and tasks in cognitive robotics research under the predictive processing framework.

A Multisensory Learning Architecture for Rotation-invariant Object Recognition

Sep 14, 2020

Abstract: This study presents a multisensory machine learning architecture for object recognition by employing a novel dataset that was constructed with the iCub robot, which is equipped with three cameras and a depth sensor. The proposed architecture combines convolutional neural networks to form representations (i.e., features) for grayscaled color images and a multi-layer perceptron algorithm to process depth data. To this end, we aimed to learn joint representations of different modalities (e.g., color and depth) and employ them for recognizing objects. We evaluate the performance of the proposed architecture by benchmarking it against models trained separately on the input of different sensors and against a state-of-the-art data fusion technique, namely decision-level fusion. The results show that our architecture improves recognition accuracy compared with models that use inputs from a single modality and with the decision-level multimodal fusion method.
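For readers unfamiliar with the baseline named in this abstract, the following is a minimal sketch of decision-level fusion: each modality is classified independently and only the resulting class probabilities are combined. It is not the paper's implementation. The function name `decision_level_fusion`, the uniform default weights, and the example probabilities are assumptions made for illustration.

```python
def decision_level_fusion(prob_per_modality, weights=None):
    """Fuse per-modality class probabilities by a weighted average.

    prob_per_modality: list of probability vectors, one per modality
    (e.g. one from a color model, one from a depth model).
    weights: optional per-modality weights; uniform if omitted (an assumption).
    Returns the index of the winning class and the fused probability vector.
    """
    n_modalities = len(prob_per_modality)
    if weights is None:
        weights = [1.0 / n_modalities] * n_modalities
    n_classes = len(prob_per_modality[0])
    fused = [
        sum(w * probs[c] for w, probs in zip(weights, prob_per_modality))
        for c in range(n_classes)
    ]
    best = max(range(n_classes), key=lambda c: fused[c])
    return best, fused


# Hypothetical outputs of two separately trained single-modality classifiers.
color_probs = [0.6, 0.3, 0.1]
depth_probs = [0.2, 0.7, 0.1]
label, fused = decision_level_fusion([color_probs, depth_probs])
```

In contrast, the joint-representation approach described in the abstract combines the modalities at the feature level, before any class decision is made.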

Tracking Emotions: Intrinsic Motivation Grounded on Multi-Level Prediction Error Dynamics

Jul 29, 2020

Abstract: How do cognitive agents decide what information is relevant to learn, and how are goals selected to gain this knowledge? Cognitive agents need to be motivated to perform any action. We argue that emotions arise when differences between expected and actual rates of progress towards a goal are experienced. Therefore, the tracking of prediction error dynamics has a tight relationship with emotions. Here, we suggest that the tracking of prediction error dynamics allows an artificial agent to be intrinsically motivated to seek new experiences but constrained to those that generate reducible prediction error. We present an intrinsic motivation architecture that generates behaviors towards self-generated and dynamic goals and that regulates goal selection and the balance between exploitation and exploration through multi-level monitoring of prediction error dynamics. This new architecture modulates exploration noise and leverages computational resources according to the dynamics of the overall performance of the learning system. Additionally, it establishes a possible solution to the temporal dynamics of goal selection. The results of the experiments presented here suggest that this architecture outperforms intrinsic motivation approaches where exploratory noise and goals are fixed and a greedy strategy is applied.

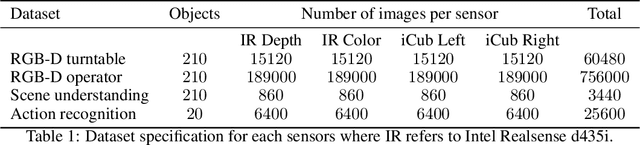

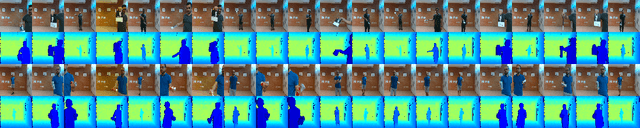

The iCub multisensor datasets for robot and computer vision applications

Mar 04, 2020

Abstract: This document presents novel datasets, constructed by employing the iCub robot equipped with an additional depth sensor and color camera. We used the robot to acquire color and depth information for 210 objects in different acquisition scenarios. The result is a set of large-scale datasets for robot and computer vision applications: object representation, object recognition and classification, and action recognition.

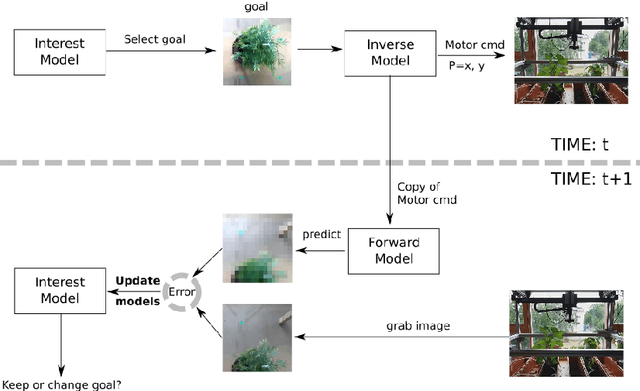

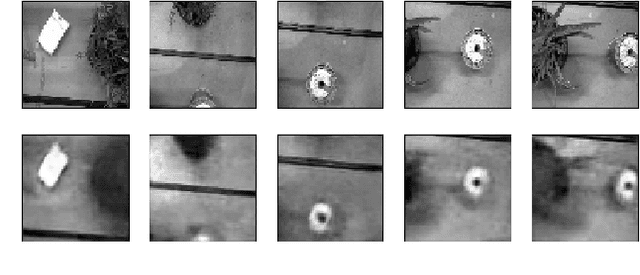

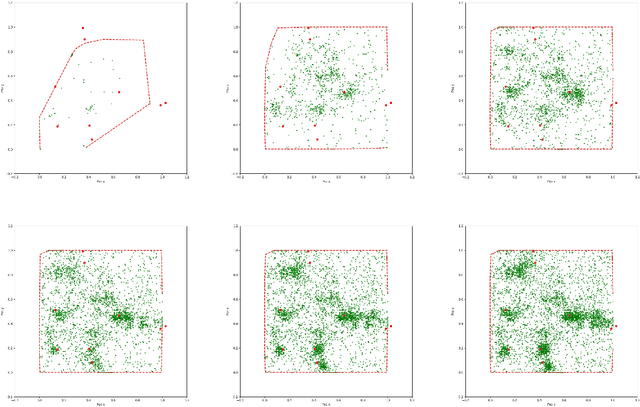

Intrinsic Motivation and Episodic Memories for Robot Exploration of High-Dimensional Sensory Spaces

Jan 07, 2020

Abstract: This work presents an architecture that generates curiosity-driven goal-directed exploration behaviours for an image sensor of a microfarming robot. A combination of deep neural networks for offline unsupervised learning of low-dimensional features from images, and of online learning of shallow neural networks representing the inverse and forward kinematics of the system, has been used. The artificial curiosity system assigns interest values to a set of pre-defined goals, and drives the exploration towards those that are expected to maximise the learning progress. We propose the integration of an episodic memory in intrinsic motivation systems to face catastrophic forgetting issues, typically experienced when performing online updates of artificial neural networks. Our results show that adopting an episodic memory system not only prevents the computational models from quickly forgetting knowledge that has been previously acquired, but also provides new avenues for modulating the balance between plasticity and stability of the models.
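The idea of assigning interest values to goals and exploring those with the highest expected learning progress can be illustrated with a short sketch. This is not the architecture from the paper: the window-based estimate of learning progress, the epsilon-greedy choice, and all names (`learning_progress`, `select_goal`, `window`, `epsilon`) are assumptions made for illustration.

```python
import random


def learning_progress(errors, window=5):
    """Estimate learning progress as the drop in mean prediction error
    between the previous window and the most recent window.
    Returns 0.0 until enough samples are available (an assumption)."""
    if len(errors) < 2 * window:
        return 0.0
    older = errors[-2 * window:-window]
    recent = errors[-window:]
    return sum(older) / window - sum(recent) / window


def select_goal(error_history, epsilon=0.1, rng=random):
    """Pick a goal from error_history (dict: goal id -> list of errors).

    With probability epsilon a random goal is explored; otherwise the goal
    with the highest interest value (estimated learning progress) is chosen.
    """
    goals = list(error_history)
    if rng.random() < epsilon:
        return rng.choice(goals)
    return max(goals, key=lambda g: learning_progress(error_history[g]))


# Hypothetical error histories: goal "a" has stalled, goal "b" is improving.
history = {
    "a": [1.0] * 10,
    "b": [1.0] * 5 + [0.5] * 5,
}
chosen = select_goal(history, epsilon=0.0)
```

With `epsilon=0.0` the choice is purely greedy, so the improving goal is selected; a non-zero `epsilon` keeps some exploration of goals whose interest value is currently low.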

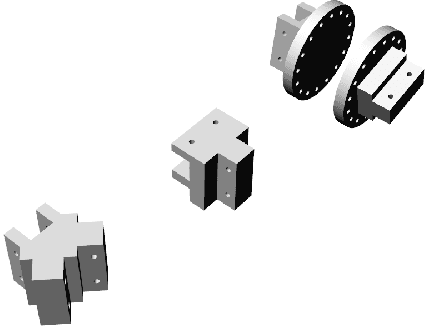

A Customisable Underwater Robot

Jul 20, 2017

Abstract: We present a model of a configurable underwater drone, whose parts are optimised for 3D printing processes. We show how, through the use of printable adapters, several thruster and ballast configurations can be implemented, allowing different maneuvering possibilities. After introducing the model and illustrating a set of possible configurations, we present a functional prototype based on open source hardware and software solutions. The prototype has been successfully tested in several dives in rivers and lakes around Berlin. The reliability of the printed models has been tested only in relatively shallow waters. However, we strongly believe that their availability as freely downloadable models will motivate the general public to build and to test underwater drones, thus speeding up the development of innovative solutions and applications. The models and their documentation will be available for download at the following link: https://adapt.informatik.hu-berlin.de/schillaci/underwater.html.
