Egidio Falotico

Model-based Optimal Control for Rigid-Soft Underactuated Systems

Feb 03, 2026

Abstract:Continuum soft robots are inherently underactuated and subject to intrinsic input constraints, making dynamic control particularly challenging, especially in hybrid rigid-soft robots. While most existing methods focus on quasi-static behaviors, dynamic tasks such as swing-up require accurate exploitation of continuum dynamics. This has led to studies on simple low-order template systems that often fail to capture the complexity of real continuum deformations. Model-based optimal control offers a systematic solution; however, its application to rigid-soft robots is often limited by the computational cost and inaccuracy of numerical differentiation for high-dimensional models. Building on recent advances in the Geometric Variable Strain model that enable analytical derivatives, this work investigates three optimal control strategies for underactuated soft systems (Direct Collocation, Differential Dynamic Programming, and Nonlinear Model Predictive Control) to perform dynamic swing-up tasks. To address stiff continuum dynamics and constrained actuation, implicit integration schemes and warm-start strategies are employed to improve numerical robustness and computational efficiency. The methods are evaluated in simulation on three rigid-soft and high-order soft benchmark systems (the Soft Cart-Pole, the Soft Pendubot, and the Soft Furuta Pendulum), highlighting their performance and computational trade-offs.
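The abstract's point about implicit integration for stiff dynamics can be illustrated on a toy problem. The sketch below is not the paper's integrator or the Geometric Variable Strain model; it assumes a scalar stiff ODE x' = -k x, and the function names and parameter values are hypothetical choices made for illustration. It shows that, at the same step size, an explicit Euler step can diverge while an implicit (backward) Euler step stays bounded.

```python
def explicit_euler_step(x, k, dt):
    # Forward Euler: evaluates the dynamics at the current state.
    return x + dt * (-k * x)


def implicit_euler_step(x, k, dt):
    # Backward Euler: solves x_next = x + dt * (-k * x_next) for x_next.
    # For this linear toy system the solve has a closed form.
    return x / (1.0 + k * dt)


def simulate(step, x0, k, dt, n_steps):
    # Apply the chosen one-step integrator n_steps times.
    x = x0
    for _ in range(n_steps):
        x = step(x, k, dt)
    return x


# Assumed values: stiffness k is large relative to the step size dt.
k, dt, n_steps = 1000.0, 0.01, 20
x_explicit = simulate(explicit_euler_step, 1.0, k, dt, n_steps)
x_implicit = simulate(implicit_euler_step, 1.0, k, dt, n_steps)

print("explicit:", x_explicit)
print("implicit:", x_implicit)
```

With these values the explicit update multiplies the state by 1 - k*dt = -9 at every step, so it grows without bound, while the implicit update multiplies it by 1/11 and decays like the true solution. For a nonlinear model the implicit step would require an iterative solve rather than a closed form.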

The Role of Touch: Towards Optimal Tactile Sensing Distribution in Anthropomorphic Hands for Dexterous In-Hand Manipulation

Sep 18, 2025

Abstract:In-hand manipulation tasks, particularly in human-inspired robotic systems, must rely on distributed tactile sensing to achieve precise control across a wide variety of tasks. However, the optimal configuration of this network of sensors is a complex problem, and while the fingertips are a common choice for placing sensors, the contribution of tactile information from other regions of the hand is often overlooked. This work investigates the impact of tactile feedback from various regions of the fingers and palm in performing in-hand object reorientation tasks. We analyze how sensory feedback from different parts of the hand influences the robustness of deep reinforcement learning control policies and investigate the relationship between object characteristics and optimal sensor placement. We identify which tactile sensing configurations contribute to improving the efficiency and accuracy of manipulation. Our results provide valuable insights for the design and use of anthropomorphic end-effectors with enhanced manipulation capabilities.

HERB: Human-augmented Efficient Reinforcement learning for Bin-packing

Apr 23, 2025

Abstract:Packing objects efficiently is a fundamental problem in logistics, warehouse automation, and robotics. While traditional packing solutions focus on geometric optimization, packing irregular 3D objects presents significant challenges due to variations in shape and stability. Reinforcement Learning (RL) has gained popularity in robotic packing tasks, but training purely from simulation can be inefficient and computationally expensive. In this work, we propose HERB, a human-augmented RL framework for packing irregular objects. We first leverage human demonstrations to learn the best sequence of objects to pack, incorporating latent factors such as space optimization, stability, and object relationships that are difficult to model explicitly. Next, we train a placement algorithm that uses visual information to determine the optimal object positioning inside a packing container. Our approach is validated through extensive performance evaluations, analyzing both packing efficiency and latency. Finally, we demonstrate the real-world feasibility of our method on a robotic system. Experimental results show that our method outperforms geometric and purely RL-based approaches by leveraging human intuition, improving both packing robustness and adaptability. This work highlights the potential of combining human expertise with RL to tackle complex real-world packing challenges in robotic systems.

Adaptive Drift Compensation for Soft Sensorized Finger Using Continual Learning

Mar 18, 2025

Abstract:Strain sensors are gaining popularity in soft robotics for acquiring tactile data due to their flexibility and ease of integration. Tactile sensing plays a critical role in soft grippers, enabling them to safely interact with unstructured environments and precisely detect object properties. However, a significant challenge with these systems is their high non-linearity, time-varying behavior, and long-term signal drift. In this paper, we introduce a continual learning (CL) approach to model a soft finger equipped with piezoelectric-based strain sensors for proprioception. To tackle the aforementioned challenges, we propose an adaptive CL algorithm that integrates a Long Short-Term Memory (LSTM) network with a memory buffer for rehearsal and includes a regularization term to keep the model's decision boundary close to the base signal while adapting to time-varying drift. We conduct nine different experiments, resetting the entire setup each time to demonstrate signal drift. We also benchmark our algorithm against two other methods and conduct an ablation study to assess the impact of different components on the overall performance.
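The memory buffer for rehearsal mentioned in the abstract can be sketched in a few lines. The abstract does not say how the buffer is filled or sampled, so the reservoir-sampling policy below is an assumption, and the class name `RehearsalBuffer` and its methods are hypothetical. The LSTM and the regularization term are not modeled here.

```python
import random


class RehearsalBuffer:
    """Fixed-size memory of past samples (hypothetical helper).

    Uses reservoir sampling, an assumed policy, so that every sample
    seen so far has the same probability of being kept in the buffer.
    """

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, sample):
        self.seen += 1
        if len(self.items) < self.capacity:
            # Buffer not full yet: always store.
            self.items.append(sample)
        else:
            # Replace a stored item with probability capacity / seen.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = sample

    def sample(self, k):
        # Draw up to k stored samples to mix into the next training batch.
        return self.rng.sample(self.items, min(k, len(self.items)))


buffer = RehearsalBuffer(capacity=10)
for t in range(1000):
    buffer.add(t)  # stand-in for a (sensor reading, label) pair
replay = buffer.sample(4)
print(replay)
```

During adaptation to drifting data, a batch would typically combine new samples with the replayed ones so that earlier behavior is not forgotten.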

SoFFT: Spatial Fourier Transform for Modeling Continuum Soft Robots

Feb 24, 2025

Abstract:Continuum soft robots, composed of flexible materials, exhibit theoretically infinite degrees of freedom, enabling notable adaptability in unstructured environments. Cosserat Rod Theory has emerged as a prominent framework for modeling these robots efficiently, representing continuum soft robots as time-varying curves, known as backbones. In this work, we propose viewing the robot's backbone as a signal in space and time, applying the Fourier transform to describe its deformation compactly. This approach unifies existing modeling strategies within the Cosserat Rod Theory framework, offering insights into commonly used heuristic methods. Moreover, the Fourier transform enables the development of a data-driven methodology to experimentally capture the robot's deformation. The proposed approach is validated through numerical simulations and experiments on a real-world prototype, demonstrating a reduction in the degrees of freedom while preserving the accuracy of the deformation representation.
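The idea of treating the backbone as a signal and describing it compactly with a Fourier transform can be illustrated with NumPy. This is a minimal sketch, not the SoFFT formulation: it assumes a one-dimensional, periodic curvature profile sampled along the arc length, and the function `truncate_spectrum` and the synthetic profile are hypothetical.

```python
import numpy as np


def truncate_spectrum(signal, n_modes):
    # Keep only the n_modes lowest-frequency Fourier coefficients
    # and transform back to the spatial domain.
    coeffs = np.fft.rfft(signal)
    kept = np.zeros_like(coeffs)
    kept[:n_modes] = coeffs[:n_modes]
    return np.fft.irfft(kept, n=len(signal))


# Assumed curvature profile along normalized arc length s in [0, 1).
s = np.linspace(0.0, 1.0, 200, endpoint=False)
curvature = 1.5 + 0.8 * np.cos(2 * np.pi * s) + 0.3 * np.sin(4 * np.pi * s)

# 200 samples are summarized by 3 complex coefficients.
approx = truncate_spectrum(curvature, n_modes=3)
max_error = float(np.max(np.abs(curvature - approx)))

# Dropping one more mode removes the 0.3 * sin(4*pi*s) component.
coarse = truncate_spectrum(curvature, n_modes=2)
coarse_error = float(np.max(np.abs(curvature - coarse)))

print(max_error, coarse_error)
```

Because the synthetic profile contains only three frequencies, three modes reproduce it to rounding error, while two modes leave an error equal to the amplitude of the dropped component. Real deformation data would show a more gradual trade-off between the number of retained modes and accuracy.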

Sensorimotor Control Strategies for Tactile Robotics

Jan 16, 2025

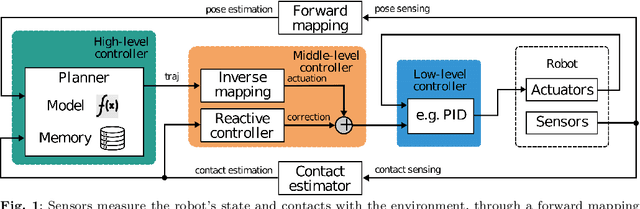

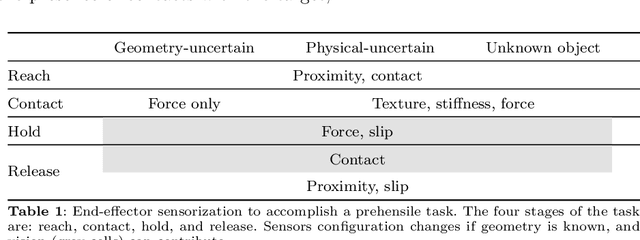

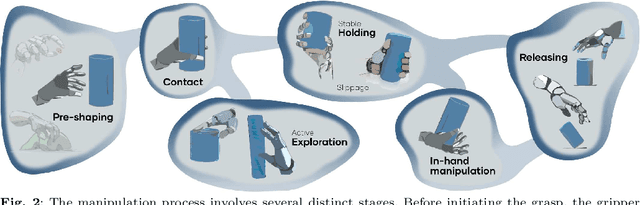

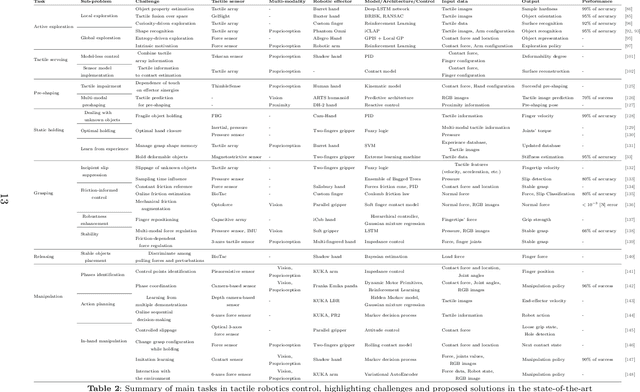

Abstract:How are robots becoming smarter at interacting with their surroundings? Recent advances have reshaped how robots use tactile sensing to perceive and engage with the world. Tactile sensing is a game-changer, allowing robots to embed sensorimotor control strategies to interact with complex environments and skillfully handle heterogeneous objects. Such control frameworks plan contact-driven motions while staying responsive to sudden changes. We review the latest methods for building perception and control systems in tactile robotics while offering practical guidelines for their design and implementation. We also address key challenges to shape the future of intelligent robots.

Towards Interpretable Visuo-Tactile Predictive Models for Soft Robot Interactions

Jul 16, 2024

Abstract:Autonomous systems face the intricate challenge of navigating unpredictable environments and interacting with external objects. The successful integration of robotic agents into real-world situations hinges on their perception capabilities, which involve amalgamating world models and predictive skills. Effective perception models build upon the fusion of various sensory modalities to probe the surroundings. Deep learning applied to raw sensory modalities offers a viable option. However, learning-based perceptive representations become difficult to interpret. This challenge is particularly pronounced in soft robots, where the compliance of structures and materials makes prediction even harder. Our work addresses this complexity by harnessing a generative model to construct a multi-modal perception model for soft robots and to leverage proprioceptive and visual information to anticipate and interpret contact interactions with external objects. A suite of tools to interpret the perception model is furnished, shedding light on the fusion and prediction processes across multiple sensory inputs after the learning phase. We will delve into the outlooks of the perception model and its implications for control purposes.

Rod models in continuum and soft robot control: a review

Jul 08, 2024

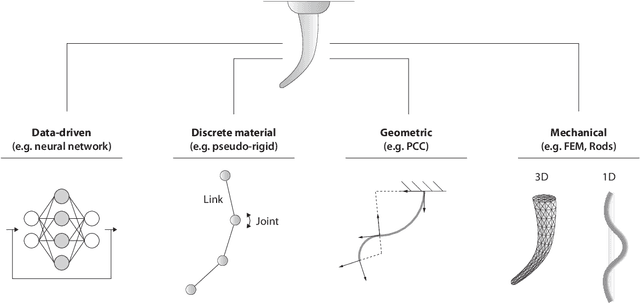

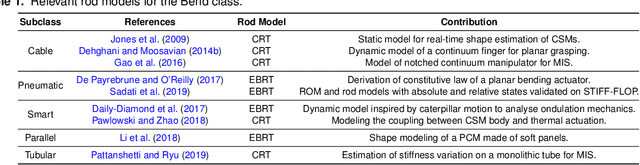

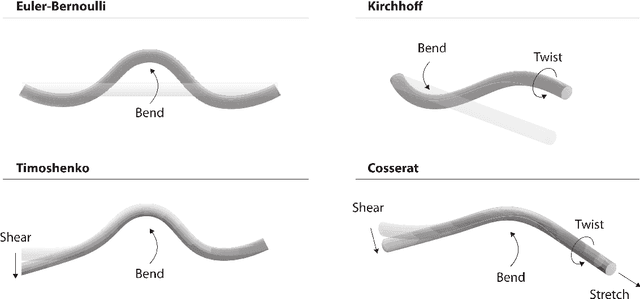

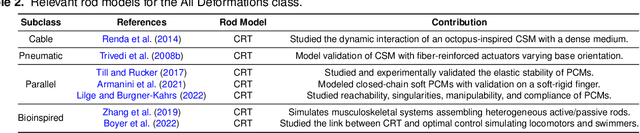

Abstract:Continuum and soft robots can positively impact diverse sectors, from biomedical applications to marine and space exploration, thanks to their potential to adaptively interact with unstructured environments. However, the complex mechanics exhibited by these robots pose diverse challenges in modeling and control. Reduced order continuum mechanical models based on rod theories have emerged as a promising framework, striking a balance between accurately capturing deformations of slender bodies and computational efficiency. This review paper explores rod-based models and control strategies for continuum and soft robots. In particular, it summarizes the mathematical background underlying the four main rod theories applied in soft robotics. Then, it categorizes the literature on rod models applied to continuum and soft robots based on deformation classes, actuation technology, or robot type. Finally, it reviews recent model-based and learning-based control strategies leveraging rod models. The comprehensive review includes a critical discussion of the trends, advantages, limits, and possible future developments of rod models. This paper could guide researchers intending to simulate and control new soft robots and provide feedback to the design and manufacturing community.

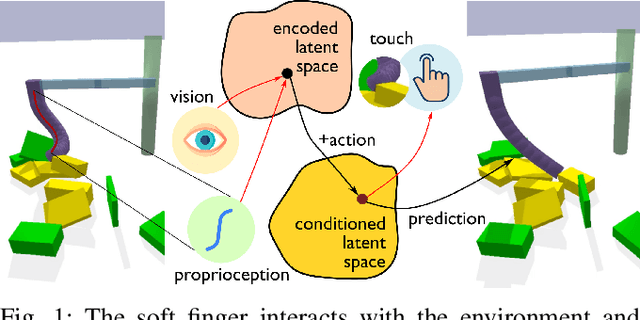

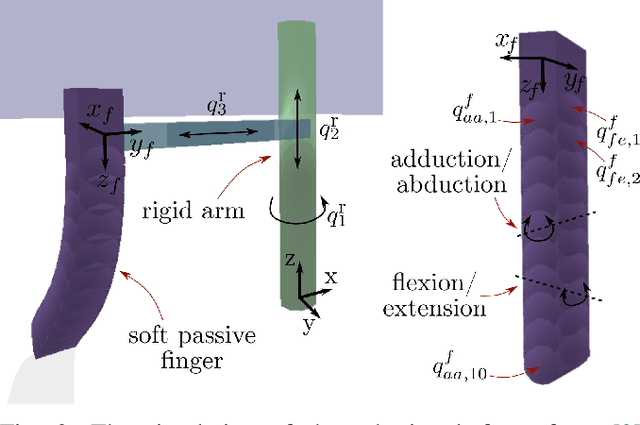

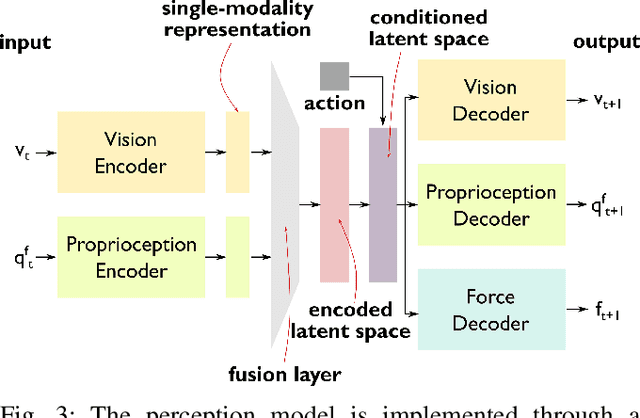

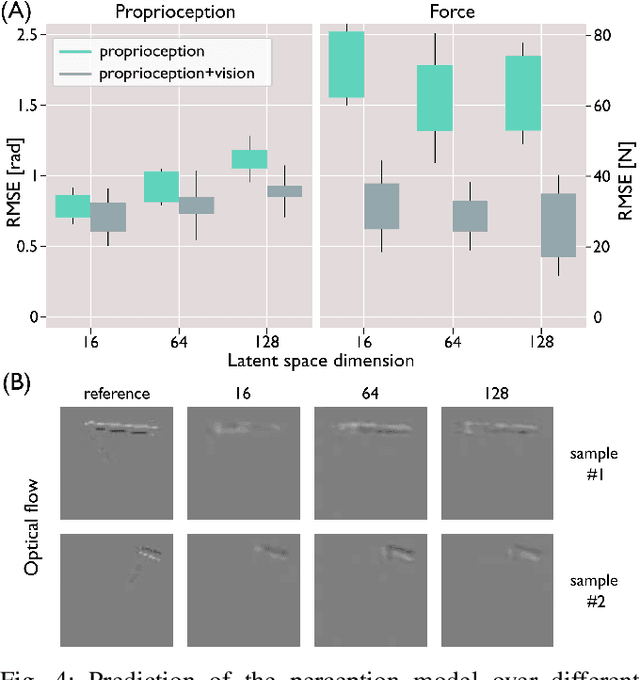

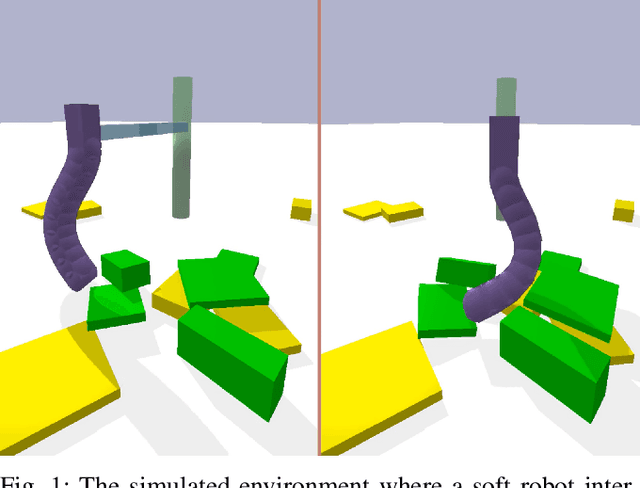

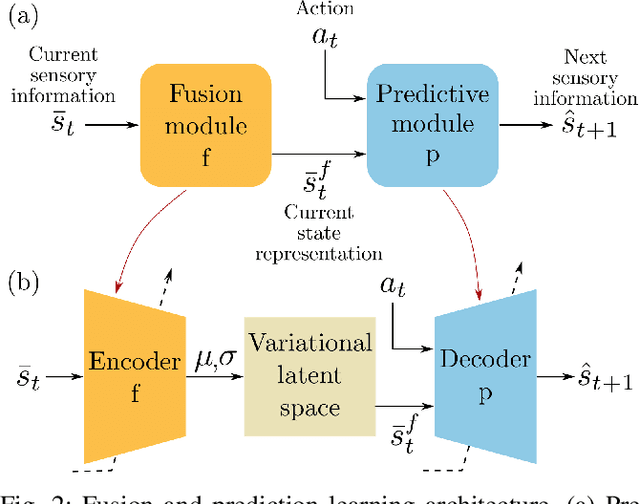

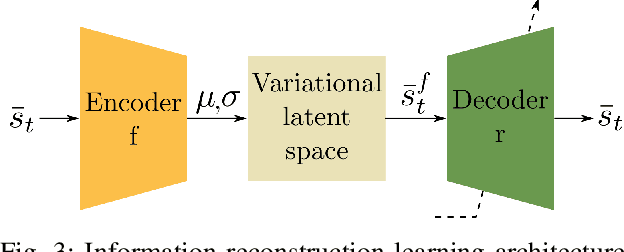

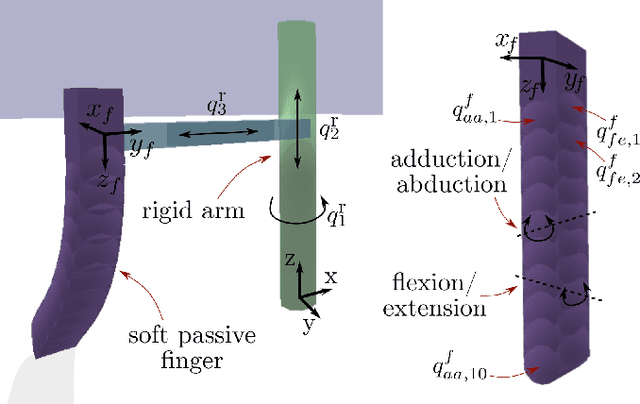

Multi-modal perception for soft robotic interactions using generative models

Apr 05, 2024

Abstract:Perception is essential for the active interaction of physical agents with the external environment. The integration of multiple sensory modalities, such as touch and vision, enhances this perceptual process, creating a more comprehensive and robust understanding of the world. Such fusion is particularly useful for highly deformable bodies such as soft robots. Developing a compact, yet comprehensive state representation from multi-sensory inputs can pave the way for the development of complex control strategies. This paper introduces a perception model that harmonizes data from diverse modalities to build a holistic state representation and assimilate essential information. The model relies on the causality between sensory input and robotic actions, employing a generative model to efficiently compress fused information and predict the next observation. We present, for the first time, a study on how touch can be predicted from vision and proprioception on soft robots, the importance of the cross-modal generation and why this is essential for soft robotic interactions in unstructured environments.

Continual Policy Distillation of Reinforcement Learning-based Controllers for Soft Robotic In-Hand Manipulation

Apr 05, 2024

Abstract:Dexterous manipulation, often facilitated by multi-fingered robotic hands, has strong potential impact on real-world applications. Soft robotic hands, due to their compliant nature, offer flexibility and adaptability during object grasping and manipulation. Yet, these benefits come with challenges, particularly in developing control for finger coordination. Reinforcement Learning (RL) can be employed to train object-specific in-hand manipulation policies, but this limits adaptability and generalizability. We introduce a Continual Policy Distillation (CPD) framework to acquire a versatile controller for in-hand manipulation, rotating objects of different shapes and sizes within a four-fingered soft gripper. The framework leverages Policy Distillation (PD) to transfer knowledge from expert policies to a continually evolving student policy network. Exemplar-based rehearsal methods are then integrated to mitigate catastrophic forgetting and enhance generalization. The performance of the CPD framework over various replay strategies demonstrates its effectiveness in consolidating knowledge from multiple experts and achieving versatile and adaptive behaviours for in-hand manipulation tasks.
