Tomoaki Nakamura

Generative Emergent Communication: Large Language Model is a Collective World Model

Dec 31, 2024

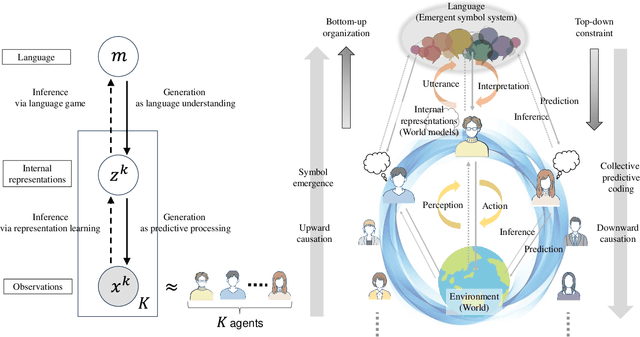

Abstract: This study proposes a unifying theoretical framework called generative emergent communication (generative EmCom) that bridges emergent communication, world models, and large language models (LLMs) through the lens of collective predictive coding (CPC). The proposed framework formalizes the emergence of language and symbol systems through decentralized Bayesian inference across multiple agents, extending beyond conventional discriminative model-based approaches to emergent communication. This study makes the following two key contributions: First, we propose generative EmCom as a novel framework for understanding emergent communication, demonstrating how communication emergence in multi-agent reinforcement learning (MARL) can be derived from control as inference while clarifying its relationship to conventional discriminative approaches. Second, we propose a mathematical formulation showing the interpretation of LLMs as collective world models that integrate multiple agents' experiences through CPC. The framework provides a unified theoretical foundation for understanding how shared symbol systems emerge through collective predictive coding processes, bridging individual cognitive development and societal language evolution. Through mathematical formulations and discussion of prior work, we demonstrate how this framework explains fundamental aspects of language emergence and offers practical insights for understanding LLMs and developing sophisticated AI systems for improving human-AI interaction and multi-agent systems.

Unsupervised Work Behavior Pattern Extraction Based on Hierarchical Probabilistic Model

May 16, 2024

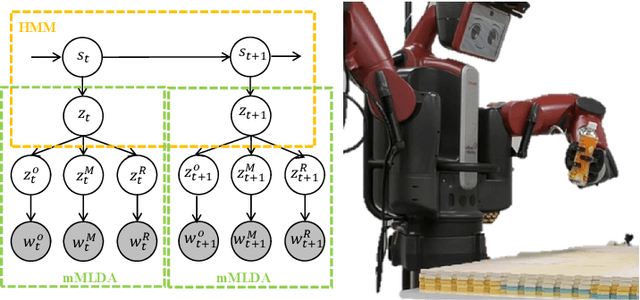

Abstract: Evolving consumer demands and market trends have led businesses to increasingly embrace a production approach that prioritizes flexibility and customization. Consequently, factory workers must engage in tasks that are more complex than before, and productivity depends on each worker's skill in assembling products. Analyzing the behavior of a worker is therefore crucial for work improvement. However, manual analysis is time-consuming and does not provide quick and accurate feedback. Machine learning methods have been applied to automate the analysis; however, most of these methods require labeled data for training. To this end, we extend the Gaussian process hidden semi-Markov model (GP-HSMM) to enable the rapid and automated analysis of worker behavior without pre-training. The model does not require labeled data and can automatically and accurately segment continuous motions into motion classes. The proposed model is a probabilistic model that hierarchically connects GP-HSMM and HSMM, enabling the extraction of behavioral patterns with different granularities. Furthermore, it mutually infers the parameters between the GP-HSMM and HSMM, resulting in accurate motion pattern extraction. We applied the proposed method to motion data in which workers assembled products at an actual production site. The accuracy of behavior pattern extraction was evaluated using normalized Levenshtein distance (NLD); the smaller the NLD, the more accurate the pattern extraction. The NLD of motion patterns captured by the GP-HSMM and HSMM layers in our proposed method was 0.50 and 0.33, respectively, both the smallest among the compared baseline methods.
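To make the evaluation metric concrete, the following is a minimal sketch of a normalized Levenshtein distance between two label sequences. The abstract does not state how the distance is normalized, so dividing by the length of the longer sequence is an assumption here, and the function names and example labels are hypothetical rather than taken from the paper.

```python
def levenshtein(a, b):
    """Edit distance between two sequences (insert, delete, substitute cost 1)."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, x in enumerate(a, 1):
        curr = [i]  # distance from a[:i] to the empty prefix of b
        for j, y in enumerate(b, 1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # delete x
                            curr[j - 1] + 1,      # insert y
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def normalized_levenshtein(a, b):
    """Assumed normalization: edit distance divided by the longer length."""
    longest = max(len(a), len(b))
    if longest == 0:
        return 0.0  # two empty sequences are identical
    return levenshtein(a, b) / longest


# Hypothetical ground-truth and estimated motion-class label sequences.
truth = [0, 0, 1, 1, 2, 2]
estimate = [0, 0, 1, 2, 2, 2]
nld = normalized_levenshtein(truth, estimate)  # one substitution over six labels
```

Under this normalization the value lies between 0 (identical sequences) and 1, which matches the abstract's statement that smaller values indicate more accurate extraction.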

Control as Probabilistic Inference as an Emergent Communication Mechanism in Multi-Agent Reinforcement Learning

Jul 11, 2023

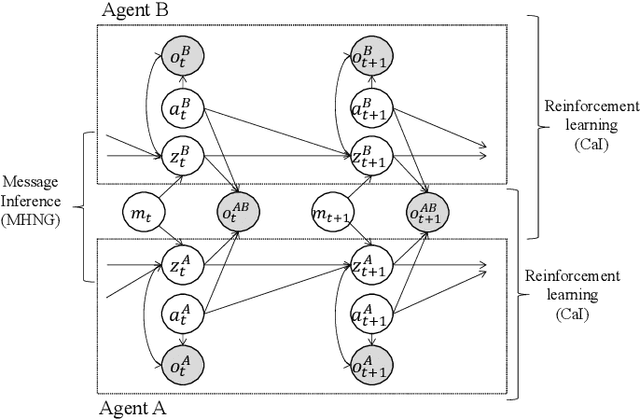

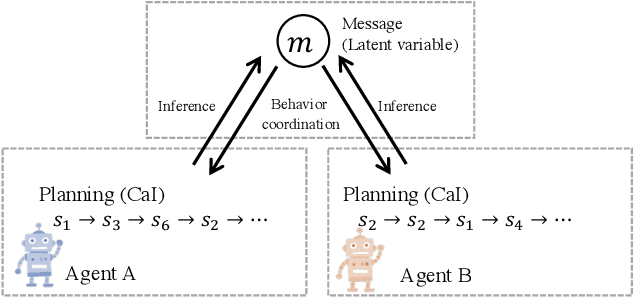

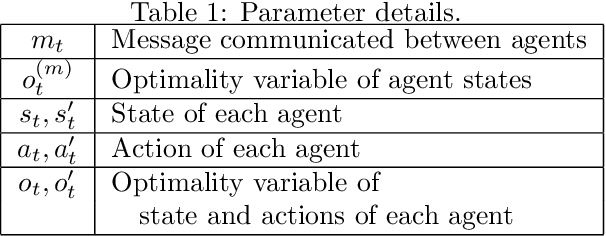

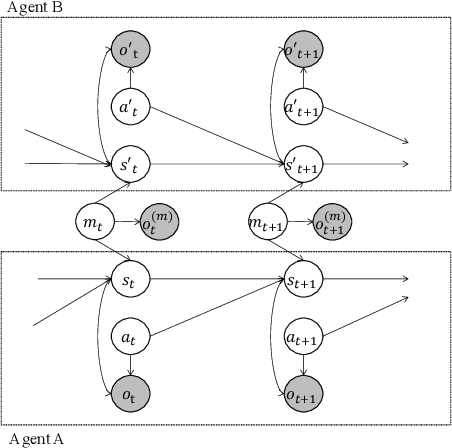

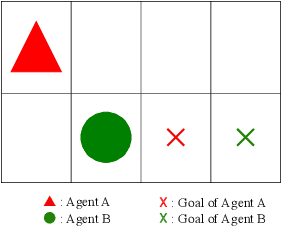

Abstract: This paper proposes a generative probabilistic model integrating emergent communication and multi-agent reinforcement learning. The agents plan their actions by probabilistic inference, called control as inference, and communicate using messages that are latent variables estimated from the planned actions. Through these messages, each agent can send information about its own actions and obtain information about the actions of the other agent. The agents then change their actions according to the estimated messages to achieve cooperative tasks. This inference of messages can be considered communication, and the procedure can be formulated as the Metropolis-Hastings naming game. Through experiments in a grid world environment, we show that the proposed probabilistic generative model (PGM) can infer meaningful messages to achieve the cooperative task.

World Models and Predictive Coding for Cognitive and Developmental Robotics: Frontiers and Challenges

Jan 14, 2023

Abstract: Creating autonomous robots that can actively explore the environment, acquire knowledge and learn skills continuously is the ultimate achievement envisioned in cognitive and developmental robotics. Their learning processes should be based on interactions with their physical and social world in the manner of human learning and cognitive development. Based on this context, in this paper, we focus on the two concepts of world models and predictive coding. Recently, world models have attracted renewed attention as a topic of considerable interest in artificial intelligence. Cognitive systems learn world models to better predict future sensory observations and optimize their policies, i.e., controllers. Alternatively, in neuroscience, predictive coding proposes that the brain continuously predicts its inputs and adapts to model its own dynamics and control behavior in its environment. Both ideas may be considered as underpinning the cognitive development of robots and humans capable of continual or lifelong learning. Although many studies have been conducted on predictive coding in cognitive robotics and neurorobotics, the relationship between world model-based approaches in AI and predictive coding in robotics has rarely been discussed. Therefore, in this paper, we clarify the definitions, relationships, and status of current research on these topics, as well as missing pieces of world models and predictive coding in conjunction with crucially related concepts such as the free-energy principle and active inference in the context of cognitive and developmental robotics. Furthermore, we outline the frontiers and challenges involved in world models and predictive coding toward the further integration of AI and robotics, as well as the creation of robots with real cognitive and developmental capabilities in the future.

Whole brain Probabilistic Generative Model toward Realizing Cognitive Architecture for Developmental Robots

Mar 15, 2021

Abstract: Building a humanlike integrative artificial cognitive system, that is, an artificial general intelligence, is one of the goals in artificial intelligence and developmental robotics. Furthermore, a computational model that enables an artificial cognitive system to achieve cognitive development will be an excellent reference for brain and cognitive science. This paper describes the development of a cognitive architecture using probabilistic generative models (PGMs) to fully mirror the human cognitive system. The integrative model is called a whole-brain PGM (WB-PGM). It is both brain-inspired and PGM-based. In this paper, the process of building the WB-PGM and learning from the human brain to build cognitive architectures is described.

Neuro-SERKET: Development of Integrative Cognitive System through the Composition of Deep Probabilistic Generative Models

Oct 20, 2019

Abstract: This paper describes a framework for the development of an integrative cognitive system based on probabilistic generative models (PGMs) called Neuro-SERKET. Neuro-SERKET is an extension of SERKET, which can compose elemental PGMs developed in a distributed manner and provide a scheme that allows the composed PGMs to learn throughout the system in an unsupervised way. In addition to the head-to-tail connection supported by SERKET, Neuro-SERKET supports tail-to-tail and head-to-head connections, as well as neural network-based modules, i.e., deep generative models. As an example of a Neuro-SERKET application, an integrative model was developed by composing a variational autoencoder (VAE), a Gaussian mixture model (GMM), latent Dirichlet allocation (LDA), and automatic speech recognition (ASR). The model is called VAE+GMM+LDA+ASR. The performance of VAE+GMM+LDA+ASR and the validity of Neuro-SERKET were demonstrated through a multimodal categorization task using image data and a speech signal of numerical digits.

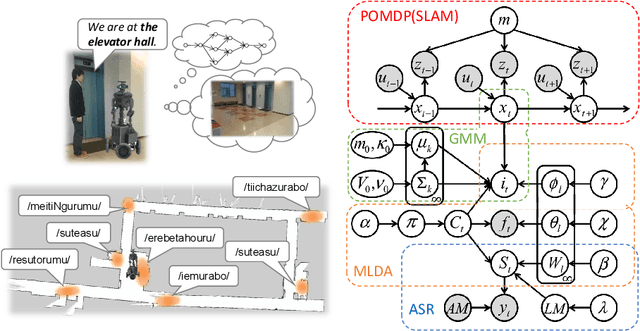

SERKET: An Architecture for Connecting Stochastic Models to Realize a Large-Scale Cognitive Model

Dec 06, 2017

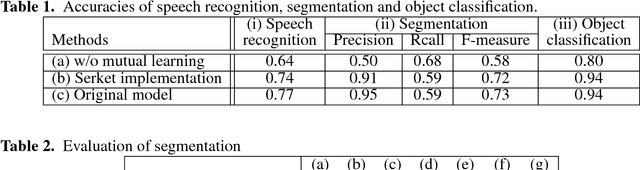

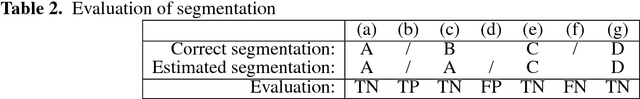

Abstract: To realize human-like robot intelligence, a large-scale cognitive architecture is required for robots to understand the environment through the variety of sensors with which they are equipped. In this paper, we propose a novel framework named Serket that makes it easy to construct a large-scale generative model and perform its inference by connecting sub-modules, allowing robots to acquire various capabilities through interaction with their environments and others. We consider that large-scale cognitive models can be constructed by connecting smaller fundamental models hierarchically while maintaining their programmatic independence. Moreover, connected modules are dependent on each other, and their parameters must be optimized as a whole. Conventionally, the equations for parameter estimation have to be derived and implemented for each model, which becomes harder as the model grows in scale. To solve these problems, we propose a method for parameter estimation that communicates minimal parameters between modules while maintaining their programmatic independence. Serket therefore makes it easy to construct large-scale models and estimate their parameters via the connection of modules. Experimental results demonstrated that the model can be constructed by connecting modules, that the parameters can be optimized as a whole, and that the results are comparable with the original models that we have proposed.

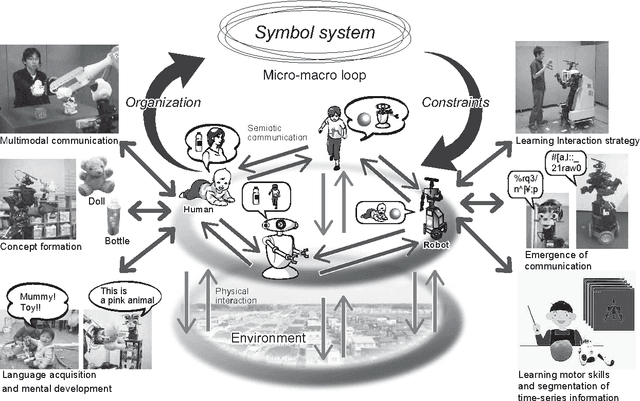

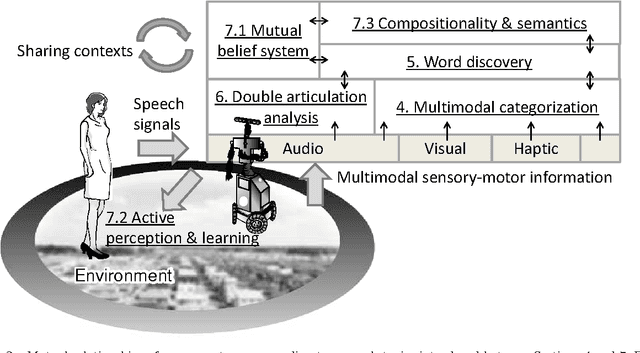

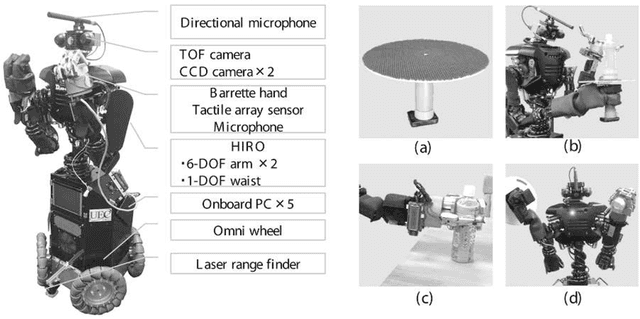

Symbol Emergence in Robotics: A Survey

Sep 29, 2015

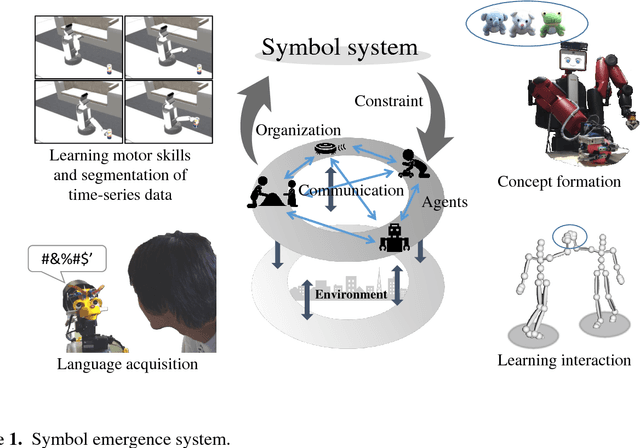

Abstract: Humans can learn the use of language through physical interaction with their environment and semiotic communication with other people. It is very important to obtain a computational understanding of how humans can form a symbol system and obtain semiotic skills through their autonomous mental development. Recently, many studies have been conducted on the construction of robotic systems and machine-learning methods that can learn the use of language through embodied multimodal interaction with their environment and other systems. Understanding human social interactions and developing a robot that can smoothly communicate with human users in the long term are crucially important and require an understanding of the dynamics of symbol systems. The embodied cognition and social interaction of participants gradually change a symbol system in a constructive manner. In this paper, we introduce a field of research called symbol emergence in robotics (SER). SER is a constructive approach towards an emergent symbol system. The emergent symbol system is socially self-organized through both semiotic communications and physical interactions with autonomous cognitive developmental agents, i.e., humans and developmental robots. Specifically, we describe some state-of-the-art research topics concerning SER, e.g., multimodal categorization, word discovery, and double articulation analysis, that enable a robot to obtain words and their embodied meanings from raw sensory-motor information, including visual information, haptic information, auditory information, and acoustic speech signals, in a totally unsupervised manner. Finally, we suggest future directions of research in SER.
