Oliver Breitwieser

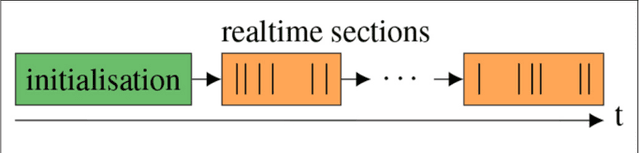

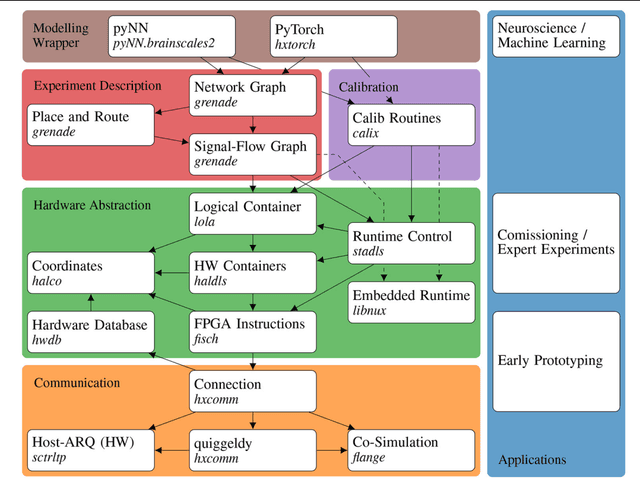

A Scalable Approach to Modeling on Accelerated Neuromorphic Hardware

Mar 21, 2022

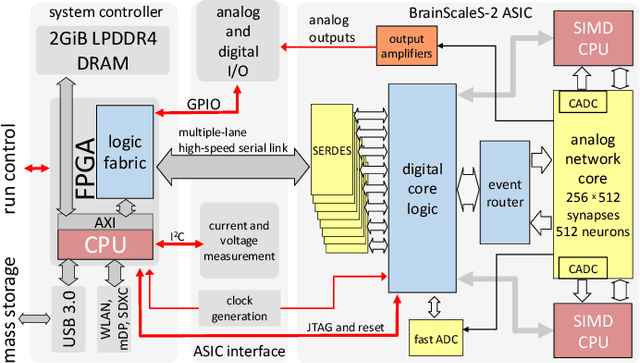

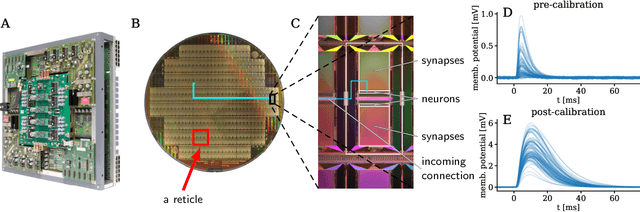

Abstract: Neuromorphic systems open up opportunities to enlarge the explorative space for computational research. However, it is often challenging to unite efficiency and usability. This work presents the software aspects of this endeavor for the BrainScaleS-2 system, a hybrid accelerated neuromorphic hardware architecture based on physical modeling. We introduce key aspects of the BrainScaleS-2 Operating System: experiment workflow, API layering, software design, and platform operation. We present use cases to discuss and derive requirements for the software and showcase the implementation. The focus lies on novel system and software features such as multi-compartmental neurons, fast re-configuration for hardware-in-the-loop training, applications for the embedded processors, the non-spiking operation mode, interactive platform access, and sustainable hardware/software co-development. Finally, we discuss further developments in terms of hardware scale-up, system usability and efficiency.

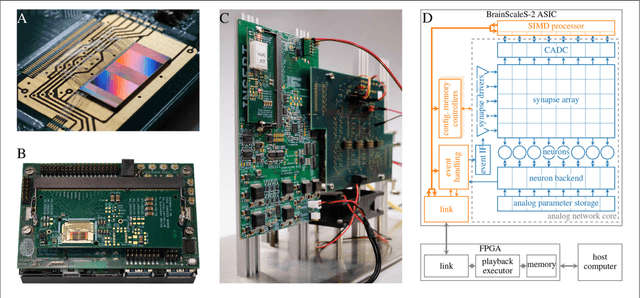

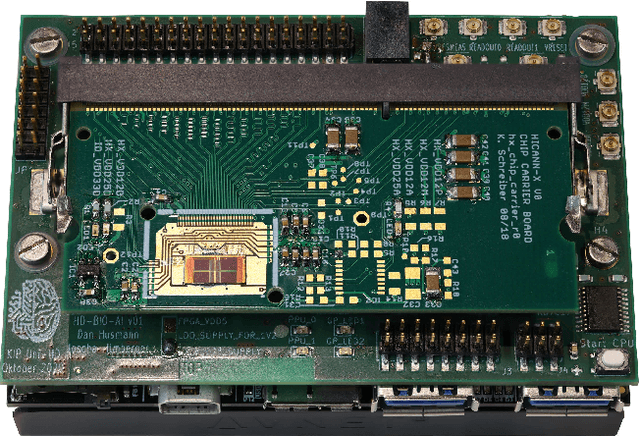

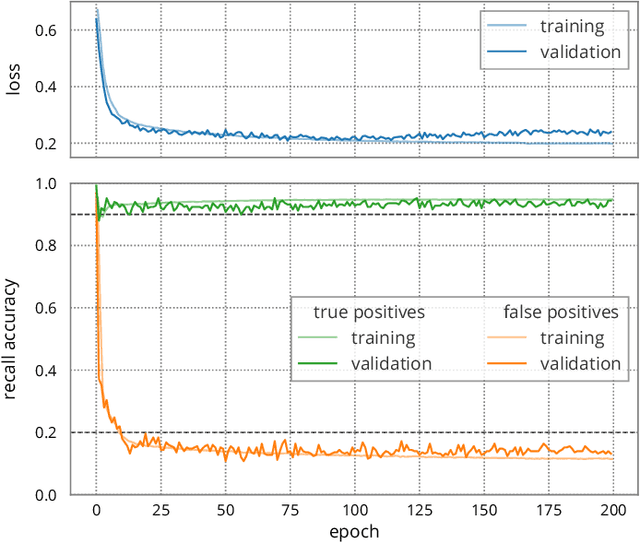

Demonstrating Analog Inference on the BrainScaleS-2 Mobile System

Mar 29, 2021

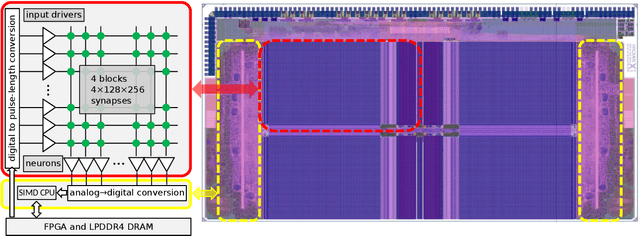

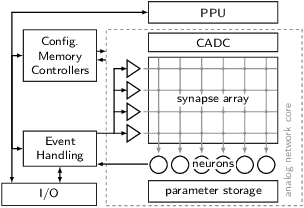

Abstract: We present the BrainScaleS-2 mobile system as a compact analog inference engine based on the BrainScaleS-2 ASIC and demonstrate its capabilities at classifying a medical electrocardiogram dataset. The analog network core of the ASIC is utilized to perform the multiply-accumulate operations of a convolutional deep neural network. We measure a total energy consumption of 192 µJ for the ASIC and achieve a classification time of 276 µs per electrocardiographic patient sample. Patients with atrial fibrillation are correctly identified with a detection rate of 93.7(7)% at 14.0(10)% false positives. The system is directly applicable to edge inference applications due to its small size, power envelope and flexible I/O capabilities. Possible future applications can furthermore combine conventional machine learning layers with online learning in spiking neural networks on a single BrainScaleS-2 ASIC. The system has successfully participated in, and proven to operate reliably in, the independently judged competition "Pilotinnovationswettbewerb 'Energieeffizientes KI-System'" of the German Federal Ministry of Education and Research (BMBF).

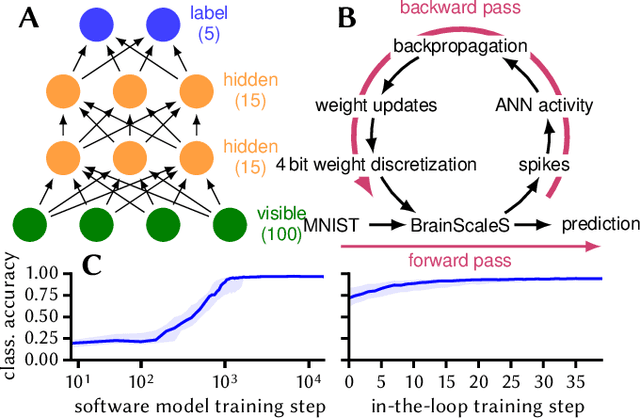

Inference with Artificial Neural Networks on Analog Neuromorphic Hardware

Jul 01, 2020

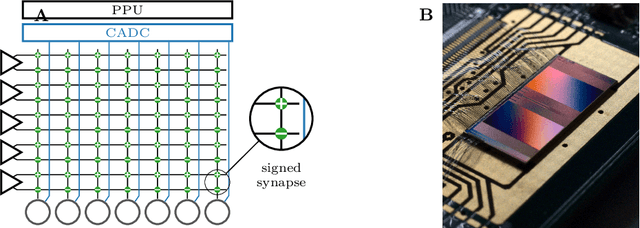

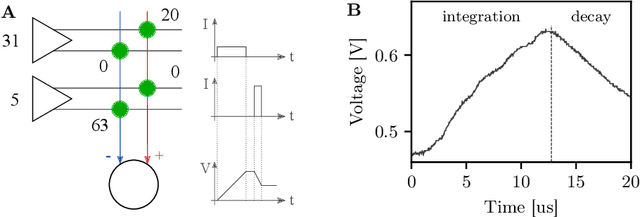

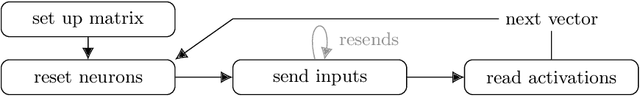

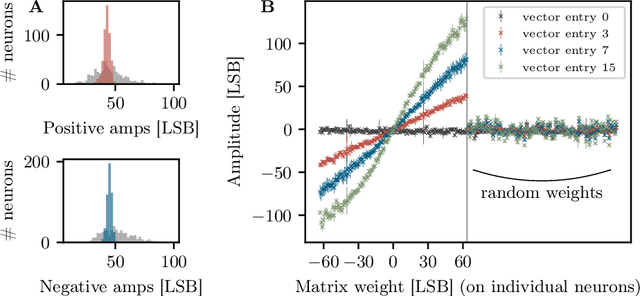

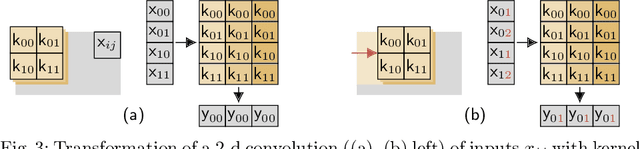

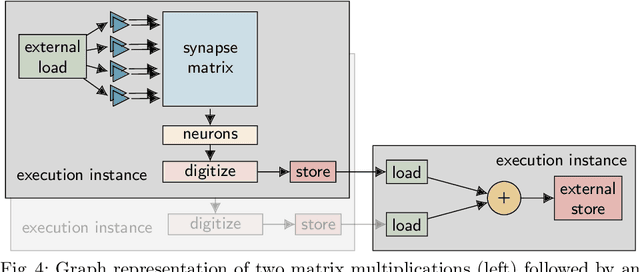

Abstract: The neuromorphic BrainScaleS-2 ASIC comprises mixed-signal neurons and synapse circuits as well as two versatile digital microprocessors. Primarily designed to emulate spiking neural networks, the system can also operate in a vector-matrix multiplication and accumulation mode for artificial neural networks. Analog multiplication is carried out in the synapse circuits, while the results are accumulated on the neurons' membrane capacitors. Designed as an analog, in-memory computing device, it promises high energy efficiency. Fixed-pattern noise and trial-to-trial variations, however, require the implemented networks to cope with a certain level of perturbations. Further limitations are imposed by the digital resolution of the input values (5 bit), matrix weights (6 bit) and resulting neuron activations (8 bit). In this paper, we discuss BrainScaleS-2 as an analog inference accelerator and present calibration as well as optimization strategies, highlighting the advantages of training with hardware in the loop. Among other benchmarks, we classify the MNIST handwritten digits dataset using a two-dimensional convolution and two dense layers. We reach 98.0% test accuracy, closely matching the performance of the same network evaluated in software.
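
The resolution limits named in this abstract can be illustrated with a small software model of a quantized vector-matrix multiplication. This is only a sketch, not the hardware's actual behavior: the value ranges (unsigned 5-bit inputs, 6-bit weight magnitude with a separate sign, signed 8-bit activations), the `gain` factor and the Gaussian noise term are assumptions made for illustration, and `analog_vmm` is a hypothetical name.

```python
import random

# Assumed ranges; the abstract only states the bit widths.
INPUT_MAX = 2**5 - 1           # 5-bit inputs, assumed unsigned: 0..31
WEIGHT_MAX = 2**6 - 1          # 6-bit weights, assumed magnitude plus sign: -63..63
ACT_MIN, ACT_MAX = -128, 127   # 8-bit activations, assumed signed


def clip_round(value, lo, hi):
    """Round to the nearest integer and saturate to [lo, hi]."""
    return max(lo, min(hi, int(round(value))))


def analog_vmm(inputs, weights, gain=0.01, noise_std=0.0, rng=None):
    """Software model of a quantized multiply-accumulate.

    weights[i][j] connects input i to output j. `gain` (hypothetical)
    scales the accumulated sum into the activation range, and
    `noise_std` (hypothetical) adds trial-to-trial Gaussian noise.
    """
    rng = rng or random.Random(0)
    x = [clip_round(v, 0, INPUT_MAX) for v in inputs]
    outputs = []
    for column in zip(*weights):
        acc = sum(
            xi * clip_round(w, -WEIGHT_MAX, WEIGHT_MAX)
            for xi, w in zip(x, column)
        )
        acc = gain * acc
        if noise_std > 0.0:
            acc += rng.gauss(0.0, noise_std)
        # The readout saturates at the activation resolution.
        outputs.append(clip_round(acc, ACT_MIN, ACT_MAX))
    return outputs


if __name__ == "__main__":
    w = [[63, -63], [10, 10], [-5, 20]]
    print(analog_vmm([31, 0, 10], w))            # scaled result
    print(analog_vmm([31, 0, 10], w, gain=1.0))  # saturated result
```

Training with such a model in the forward pass is one way to make a network tolerate clipping and noise, which is the motivation the abstract gives for hardware-in-the-loop training.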

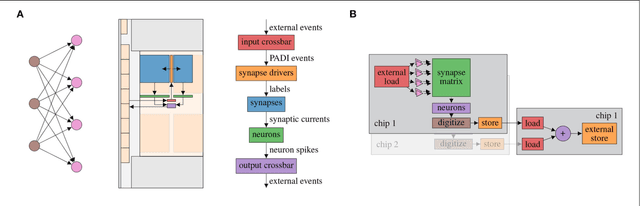

hxtorch: PyTorch for BrainScaleS-2 -- Perceptrons on Analog Neuromorphic Hardware

Jul 01, 2020

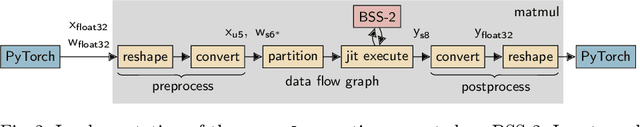

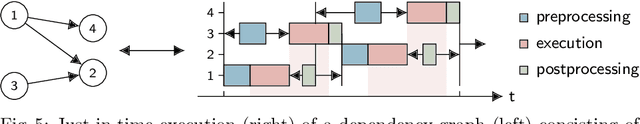

Abstract: We present software facilitating the usage of the BrainScaleS-2 analog neuromorphic hardware system as an inference accelerator for artificial neural networks. The accelerator hardware is transparently integrated into the PyTorch machine learning framework using its extension interface. In particular, we provide accelerator support for vector-matrix multiplications and convolutions; corresponding software-based autograd functionality is provided for hardware-in-the-loop training. Automatic partitioning of neural networks onto one or multiple accelerator chips is supported. We analyze implementation runtime overhead during training as well as inference, provide measurements for existing setups and evaluate the results in terms of the accelerator hardware design limitations. As an application of the introduced framework, we present a model that classifies activities of daily living with smartphone sensor data.
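
The idea of partitioning a layer onto fixed-size accelerator arrays can be sketched as tiling a weight matrix and summing the partial results. This is not the hxtorch implementation or API; `partition`, `tiled_vmm`, `max_rows` and `max_cols` are hypothetical names, and the tile size stands in for whatever the hardware array actually provides.

```python
def partition(n_rows, n_cols, max_rows, max_cols):
    """Split an n_rows x n_cols matrix into tiles of at most
    max_rows x max_cols; returns (row_range, col_range) pairs."""
    tiles = []
    for r in range(0, n_rows, max_rows):
        for c in range(0, n_cols, max_cols):
            tiles.append((
                range(r, min(r + max_rows, n_rows)),
                range(c, min(c + max_cols, n_cols)),
            ))
    return tiles


def tiled_vmm(x, weights, max_rows, max_cols):
    """Compute x @ weights tile by tile.

    Each tile represents one run on a (hypothetical) fixed-size array;
    partial sums from tiles sharing the same output columns are added
    in software.
    """
    n_rows, n_cols = len(weights), len(weights[0])
    result = [0] * n_cols
    for rows, cols in partition(n_rows, n_cols, max_rows, max_cols):
        for j in cols:
            result[j] += sum(x[i] * weights[i][j] for i in rows)
    return result


if __name__ == "__main__":
    x = [1, 2, 3]
    w = [[1, 2], [3, 4], [5, 6]]
    print(tiled_vmm(x, w, max_rows=2, max_cols=1))
```

In this sketch the tiles are independent, so they could be run sequentially on one chip or distributed over several.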

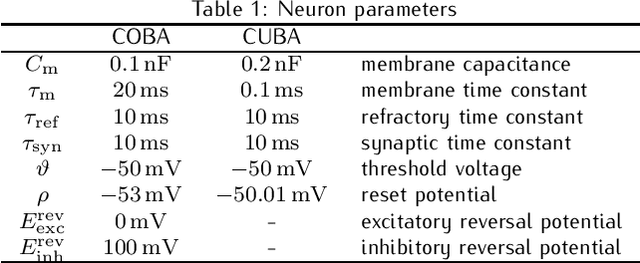

Versatile emulation of spiking neural networks on an accelerated neuromorphic substrate

Dec 30, 2019

Abstract: We present first experimental results on the novel BrainScaleS-2 neuromorphic architecture based on an analog neuro-synaptic core and augmented by embedded microprocessors for complex plasticity and experiment control. The high acceleration factor of 1000 compared to biological dynamics enables the execution of computationally expensive tasks, by allowing the fast emulation of long-duration experiments or rapid iteration over many consecutive trials. The flexibility of our architecture is demonstrated in a suite of five distinct experiments, which emphasize different aspects of the BrainScaleS-2 system.

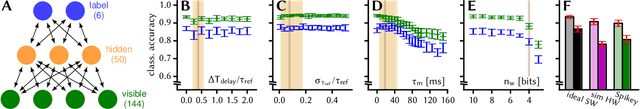

Fast and deep neuromorphic learning with time-to-first-spike coding

Dec 24, 2019

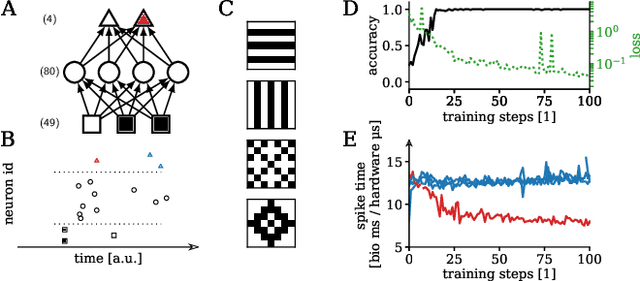

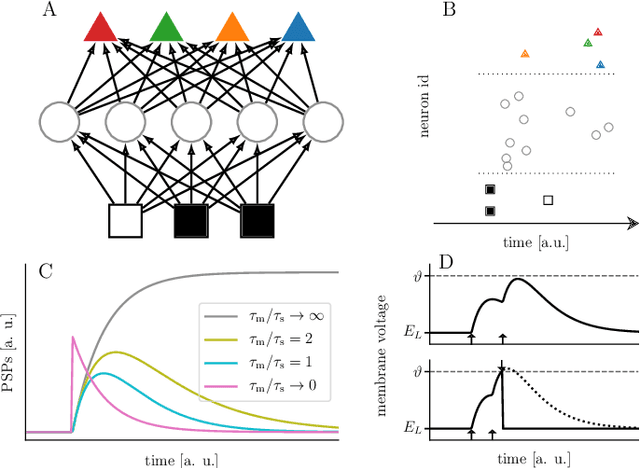

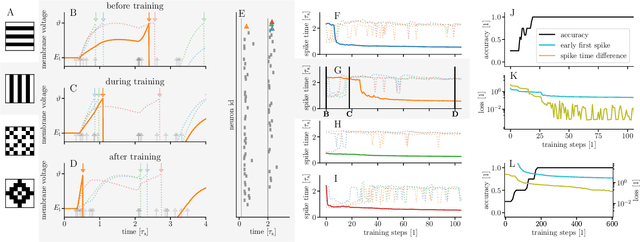

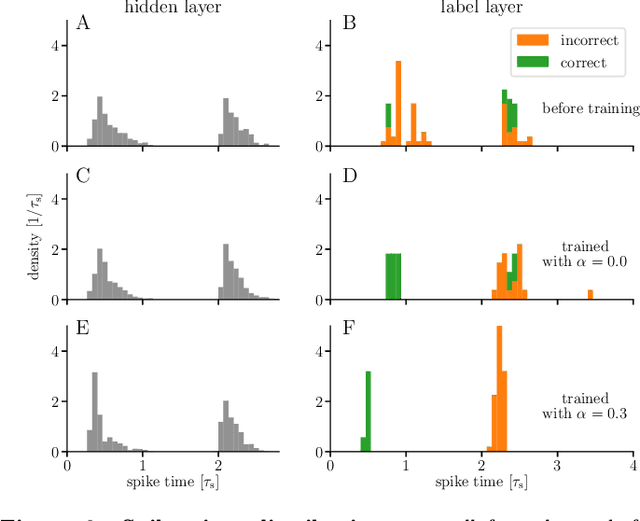

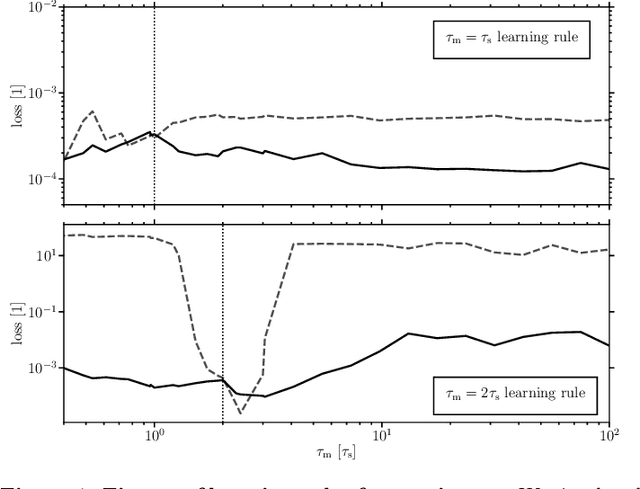

Abstract: For a biological agent operating under environmental pressure, energy consumption and reaction times are of critical importance. Similarly, engineered systems also strive for short time-to-solution and low energy-to-solution characteristics. At the level of neuronal implementation, this implies achieving the desired results with as few and as early spikes as possible. In the time-to-first-spike coding framework, both of these goals are inherently emerging features of learning. Here, we describe a rigorous derivation of error-backpropagation-based learning for hierarchical networks of leaky integrate-and-fire neurons. We explicitly address two issues that are relevant for both biological plausibility and applicability to neuromorphic substrates by incorporating dynamics with finite time constants and by optimizing the backward pass with respect to substrate variability. This narrows the gap between previous models of first-spike-time learning and biological neuronal dynamics, thereby also enabling fast and energy-efficient inference on analog neuromorphic devices that inherit these dynamics from their biological archetypes, which we demonstrate on two generations of the BrainScaleS analog neuromorphic architecture.
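
The quantity at the center of time-to-first-spike coding, the first threshold crossing of a leaky integrate-and-fire neuron, can be sketched numerically. The paper derives spike times analytically; the forward-Euler simulation below is a swapped-in illustration, and all parameter values (time constants, threshold, step size, zero resting potential, current-based exponential synapses) are assumptions.

```python
def first_spike_time(input_times, weights, tau_m=10.0, tau_s=5.0,
                     threshold=1.0, dt=0.01, t_max=100.0):
    """Forward-Euler simulation of one LIF neuron with exponential
    current synapses; returns the first threshold crossing time,
    or None if the neuron stays silent until t_max.

    Dynamics (resting potential 0, arbitrary units):
        tau_m * dv/dt = -v + i_syn
        tau_s * di_syn/dt = -i_syn, plus a jump of w at each input spike
    """
    events = sorted(zip(input_times, weights))
    next_event = 0
    v = 0.0
    i_syn = 0.0
    for step in range(int(t_max / dt)):
        t = step * dt
        # Deliver all input spikes that have arrived by time t.
        while next_event < len(events) and events[next_event][0] <= t:
            i_syn += events[next_event][1]
            next_event += 1
        v += dt * (-v + i_syn) / tau_m
        i_syn -= dt * i_syn / tau_s
        if v >= threshold:
            return t + dt
    return None


if __name__ == "__main__":
    for w in (3.0, 5.0, 8.0):
        print(w, first_spike_time([0.0], [w]))
```

With these parameters a single input of weight `w` drives the membrane to at most `0.25 * w`, so weights below 4 produce no spike, and larger weights produce earlier spikes. This dependence of spike time on weight is what a learning rule in this coding scheme acts on.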

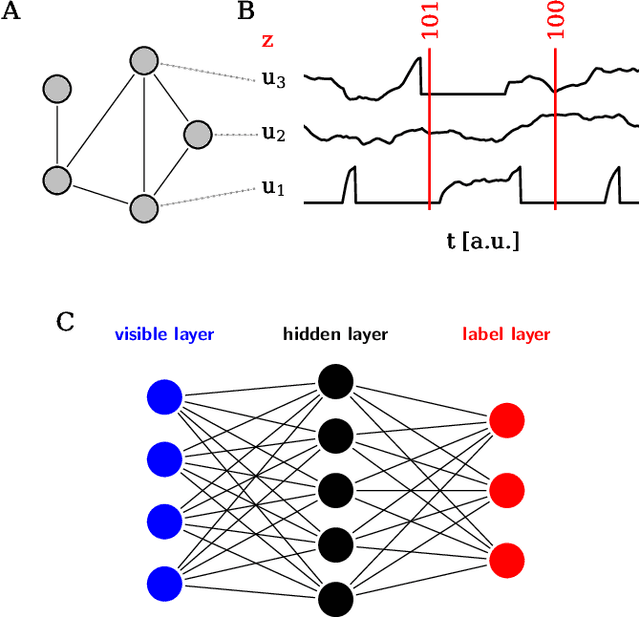

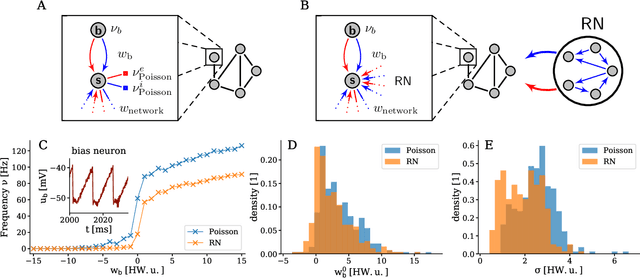

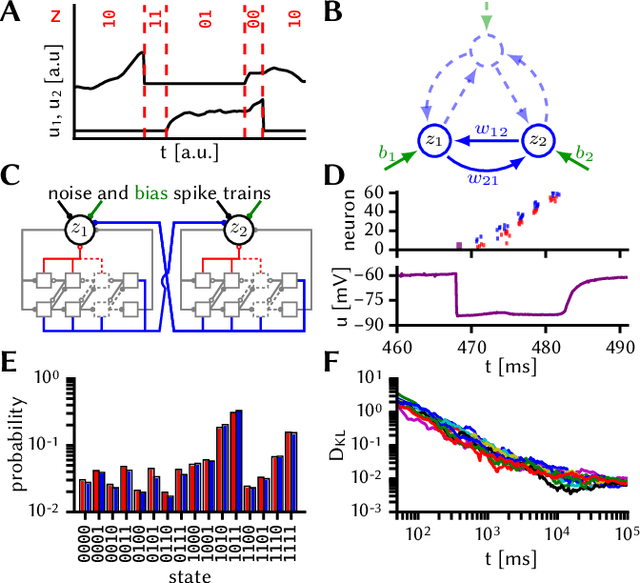

Stochasticity from function - why the Bayesian brain may need no noise

Sep 21, 2018

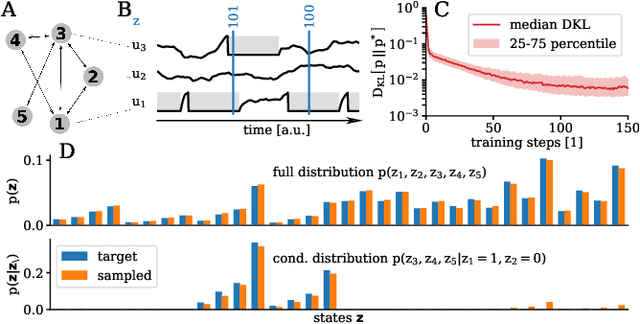

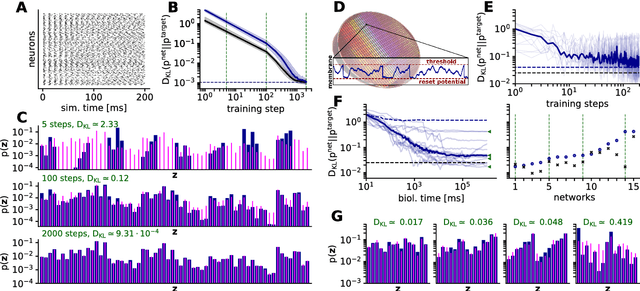

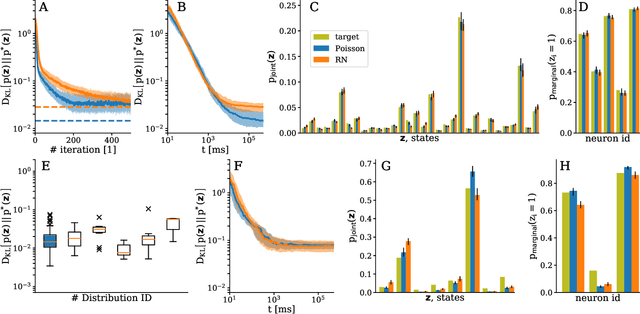

Abstract: An increasing body of evidence suggests that the trial-to-trial variability of spiking activity in the brain is not mere noise, but rather the reflection of a sampling-based encoding scheme for probabilistic computing. Since the precise statistical properties of neural activity are important in this context, many models assume an ad-hoc source of well-behaved, explicit noise, either on the input or on the output side of single neuron dynamics, most often assuming an independent Poisson process in either case. However, these assumptions are somewhat problematic: neighboring neurons tend to share receptive fields, rendering both their input and their output correlated; at the same time, neurons are known to behave largely deterministically, as a function of their membrane potential and conductance. We suggest that spiking neural networks may, in fact, have no need for noise to perform sampling-based Bayesian inference. We study analytically the effect of auto- and cross-correlations in functionally Bayesian spiking networks and demonstrate how their effect translates to synaptic interaction strengths, rendering them controllable through synaptic plasticity. This allows even small ensembles of interconnected deterministic spiking networks to simultaneously and co-dependently shape their output activity through learning, enabling them to perform complex Bayesian computation without any need for noise, which we demonstrate in silico, both in classical simulation and in neuromorphic emulation. These results close a gap between the abstract models and the biology of functionally Bayesian spiking networks, effectively reducing the architectural constraints imposed on physical neural substrates required to perform probabilistic computing, be they biological or artificial.

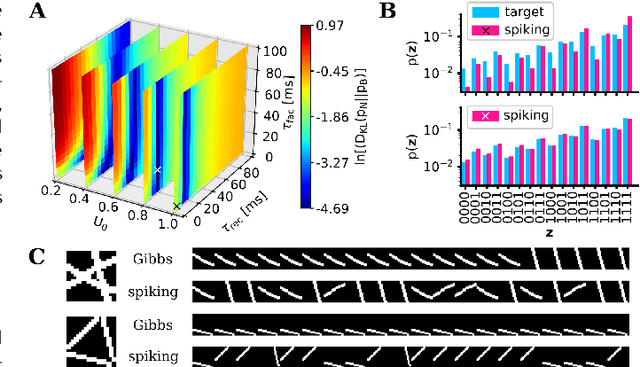

Generative models on accelerated neuromorphic hardware

Jul 11, 2018

Abstract: The traditional von Neumann computer architecture faces serious obstacles, both in terms of miniaturization and in terms of heat production, with increasing performance. Artificial neural (neuromorphic) substrates represent an alternative approach to tackle this challenge. A special subset of these systems follow the principle of "physical modeling" as they directly use the physical properties of the underlying substrate to realize computation with analog components. While these systems are potentially faster and/or more energy efficient than conventional computers, they require robust models that can cope with their inherent limitations in terms of controllability and range of parameters. A natural source of inspiration for robust models is neuroscience as the brain faces similar challenges. It has been recently suggested that sampling with the spiking dynamics of neurons is potentially suitable both as a generative and a discriminative model for artificial neural substrates. In this work we present the implementation of sampling with leaky integrate-and-fire neurons on the BrainScaleS physical model system. We prove the sampling property of the network and demonstrate its applicability to high-dimensional datasets. The required stochasticity is provided by a spiking random network on the same substrate. This allows the system to run in a self-contained fashion without external stochastic input from the host environment. The implementation provides a basis as a building block in large-scale biologically relevant emulations, as a fast approximate sampler or as a framework to realize on-chip learning on (future generations of) accelerated spiking neuromorphic hardware. Our work contributes to the development of robust computation on physical model systems.
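
The abstract model that such spiking samplers approximate can be sketched as Gibbs sampling over binary units in a Boltzmann distribution. This is the classical software counterpart, not the spiking implementation described in the paper: there are no leaky integrate-and-fire dynamics here, the pseudo-random generator replaces the on-chip random network, and the function names and example weights are hypothetical.

```python
import math
import random


def gibbs_sample(weights, biases, n_steps, seed=0):
    """Systematic-scan Gibbs sampling of binary states z with
    p(z) proportional to exp(sum_k b_k z_k + 1/2 sum_{k!=j} W_kj z_k z_j).
    Returns the relative frequency of each visited state."""
    rng = random.Random(seed)
    n = len(biases)
    z = [0] * n
    counts = {}
    for _ in range(n_steps):
        for k in range(n):
            # Conditional activation probability is a logistic function
            # of the unit's total input.
            u = biases[k] + sum(weights[k][j] * z[j]
                                for j in range(n) if j != k)
            z[k] = 1 if rng.random() < 1.0 / (1.0 + math.exp(-u)) else 0
        state = tuple(z)
        counts[state] = counts.get(state, 0) + 1
    return {s: c / n_steps for s, c in counts.items()}


def exact_distribution(weights, biases):
    """Enumerate all states; only feasible for a handful of units."""
    n = len(biases)
    unnorm = {}
    for idx in range(2 ** n):
        z = [(idx >> k) & 1 for k in range(n)]
        log_p = sum(biases[k] * z[k] for k in range(n))
        log_p += 0.5 * sum(weights[k][j] * z[k] * z[j]
                           for k in range(n) for j in range(n) if j != k)
        unnorm[tuple(z)] = math.exp(log_p)
    total = sum(unnorm.values())
    return {s: p / total for s, p in unnorm.items()}


if __name__ == "__main__":
    W = [[0.0, 1.0], [1.0, 0.0]]  # symmetric coupling, hypothetical values
    b = [-0.5, -0.5]
    print(gibbs_sample(W, b, 20000))
    print(exact_distribution(W, b))
```

Comparing sampled state frequencies against the enumerated target distribution, as done here, is a common way to check a sampler on small networks.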

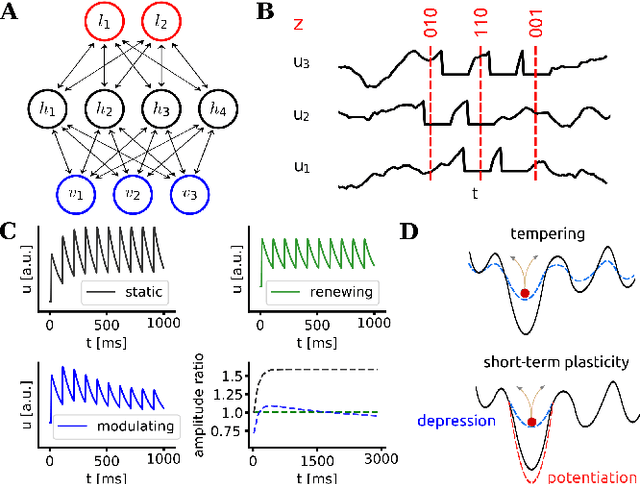

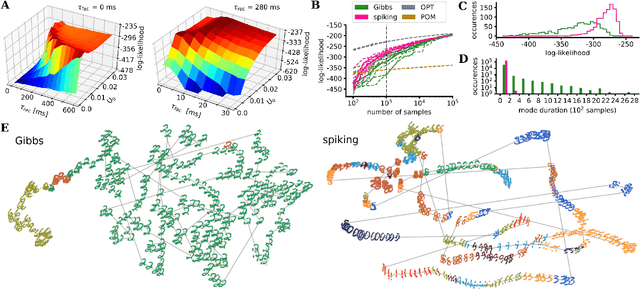

Spiking neurons with short-term synaptic plasticity form superior generative networks

Oct 10, 2017

Abstract: Spiking networks that perform probabilistic inference have been proposed both as models of cortical computation and as candidates for solving problems in machine learning. However, the evidence for spike-based computation being in any way superior to non-spiking alternatives remains scarce. We propose that short-term plasticity can provide spiking networks with distinct computational advantages compared to their classical counterparts. In this work, we use networks of leaky integrate-and-fire neurons that are trained to perform both discriminative and generative tasks in their forward and backward information processing paths, respectively. During training, the energy landscape associated with their dynamics becomes highly diverse, with deep attractor basins separated by high barriers. Classical algorithms solve this problem by employing various tempering techniques, which are both computationally demanding and require global state updates. We demonstrate how similar results can be achieved in spiking networks endowed with local short-term synaptic plasticity. Additionally, we discuss how these networks can even outperform tempering-based approaches when the training data is imbalanced. We thereby show how biologically inspired, local, spike-triggered synaptic dynamics based simply on a limited pool of synaptic resources can allow spiking networks to outperform their non-spiking relatives.

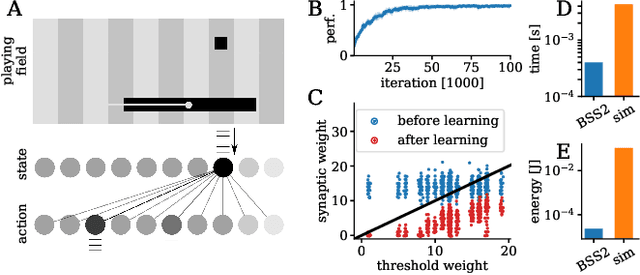

Pattern representation and recognition with accelerated analog neuromorphic systems

Jul 03, 2017

Abstract: Despite being originally inspired by the central nervous system, artificial neural networks have diverged from their biological archetypes as they have been remodeled to fit particular tasks. In this paper, we review several possibilities to reverse map these architectures to biologically more realistic spiking networks with the aim of emulating them on fast, low-power neuromorphic hardware. Since many of these devices employ analog components, which cannot be perfectly controlled, finding ways to compensate for the resulting effects represents a key challenge. Here, we discuss three different strategies to address this problem: the addition of auxiliary network components for stabilizing activity, the utilization of inherently robust architectures and a training method for hardware-emulated networks that functions without perfect knowledge of the system's dynamics and parameters. For all three scenarios, we corroborate our theoretical considerations with experimental results on accelerated analog neuromorphic platforms.

* accepted at ISCAS 2017