Johannes Schemmel

Kirchhoff-Institute for Physics, Heidelberg, Germany

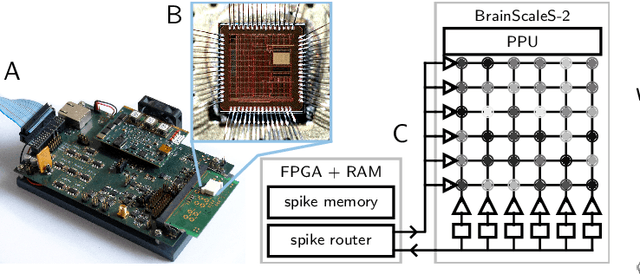

Real-time processing of analog signals on accelerated neuromorphic hardware

Feb 04, 2026

Abstract: Sensory processing with neuromorphic systems typically relies either on event-based sensors or on translating input signals into spikes before presenting them to the neuromorphic processor. Here, we offer an alternative approach: direct analog signal injection eliminates superfluous and power-intensive analog-to-digital and digital-to-analog conversions, making it particularly suitable for efficient near-sensor processing. We demonstrate this by using the accelerated BrainScaleS-2 mixed-signal neuromorphic research platform and interfacing it directly to microphones and a servo-motor-driven actuator. Utilizing BrainScaleS-2's 1000-fold acceleration factor, we employ a spiking neural network to transform interaural time differences into a spatial code and thereby predict the location of sound sources. Our primary contributions are the first demonstrations of direct, continuous-valued sensor data injection into the analog compute units of the BrainScaleS-2 ASIC, and of actuator control using its embedded microprocessors. This enables a fully on-chip processing pipeline: from sensory input handling, via spiking neural network processing, to physical action. We showcase this by programming the system to localize and align a servo motor with the spatial direction of transient noise peaks in real time.
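
The geometry behind turning interaural time differences (ITDs) into a direction can be illustrated with a conventional, non-spiking baseline. The sketch below is not the spiking network from the paper: it estimates the ITD between two microphone signals by brute-force cross-correlation and converts it to an azimuth with the far-field relation sin θ = c·Δt / d. The speed of sound, microphone spacing, and sample rate are illustrative assumptions.

```python
import math
import random

SPEED_OF_SOUND = 343.0  # m/s, assumed (air at roughly 20 °C)
MIC_DISTANCE = 0.2      # m, hypothetical spacing between the two microphones
SAMPLE_RATE = 48_000    # Hz, hypothetical sampling rate


def estimate_itd(left, right, max_lag):
    """Return the lag (in samples) that maximizes the cross-correlation.

    A positive lag means `right` is a delayed copy of `left`, i.e. the
    sound reached the left microphone first.
    """
    best_lag, best_score = 0, -math.inf
    n = len(left)
    for lag in range(-max_lag, max_lag + 1):
        # Only sum over indices where both i and i + lag are valid.
        score = sum(left[i] * right[i + lag]
                    for i in range(max(0, -lag), min(n, n - lag)))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def itd_to_azimuth(itd_seconds):
    """Far-field model sin(theta) = c * ITD / d; returns degrees.

    Positive angles point toward the left microphone in this convention.
    """
    s = SPEED_OF_SOUND * itd_seconds / MIC_DISTANCE
    return math.degrees(math.asin(max(-1.0, min(1.0, s))))


# Usage: a white-noise burst that reaches the right microphone 10 samples late.
rng = random.Random(1)
noise = [rng.gauss(0.0, 1.0) for _ in range(2048)]
delay = 10
left = noise
right = [0.0] * delay + noise[:-delay]
max_lag = math.ceil(MIC_DISTANCE / SPEED_OF_SOUND * SAMPLE_RATE)  # physical limit
lag = estimate_itd(left, right, max_lag)
azimuth = itd_to_azimuth(lag / SAMPLE_RATE)
```

The brute-force search keeps the example readable; for longer signals an FFT-based cross-correlation would be the usual choice.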

Short-reach Optical Communications: A Real-world Task for Neuromorphic Hardware

Dec 04, 2024

Abstract: Spiking neural networks (SNNs) emulated on dedicated neuromorphic accelerators promise energy-efficient signal processing. However, the neuromorphic advantage over traditional algorithms remains to be demonstrated in real-world applications. Here, we describe an intensity-modulation, direct-detection (IM/DD) task that is relevant to high-speed optical communication systems used in data centers. Compared to other machine-learning-inspired benchmarks, the task offers several advantages. First, the dataset is inherently time-dependent, i.e., there is a time dimension that can be natively mapped to the dynamic evolution of SNNs. Second, small-scale SNNs can achieve the target accuracy required by technical communication standards. Third, due to the small scale and the defined target accuracy, the task facilitates the optimization for real-world aspects, such as energy efficiency, resource requirements, and system complexity.
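
To make the IM/DD setting concrete, here is a toy link model; it is not the dataset or channel model used in the paper. PAM-4 symbols set the optical intensity, a short FIR filter stands in for inter-symbol interference acting on the optical field, and a square-law photodiode detects |E|². Because detection happens after the field-domain interference, the received samples depend nonlinearly on neighboring symbols, which is one reason a receiver with memory is needed. The intensity levels, tap values, and Gaussian noise model are all assumptions.

```python
import math
import random

# Hypothetical normalized PAM-4 intensity levels (not taken from the paper).
PAM4_LEVELS = [0.0, 1.0, 2.0, 3.0]
THRESHOLDS = [0.5, 1.5, 2.5]  # midpoints between adjacent levels


def imdd_link(symbols, taps, noise_std, rng):
    """Toy IM/DD channel: field-domain FIR filter, then square-law detection."""
    fields = [math.sqrt(PAM4_LEVELS[s]) for s in symbols]  # field amplitudes
    received = []
    for n in range(len(fields)):
        # Inter-symbol interference acts on the (possibly complex) optical field.
        e = 0j
        for k, h in enumerate(taps):
            if n - k >= 0:
                e += h * fields[n - k]
        # The photodiode only sees the intensity |E|^2, plus additive noise.
        received.append(abs(e) ** 2 + rng.gauss(0.0, noise_std))
    return received


def threshold_detect(samples):
    """Map each received intensity to the nearest PAM-4 symbol index."""
    return [sum(x > t for t in THRESHOLDS) for x in samples]


def symbol_error_rate(n=10_000, taps=(1.0,), noise_std=0.0, seed=0):
    """Transmit n random PAM-4 symbols and count detection errors."""
    rng = random.Random(seed)
    tx = [rng.randrange(4) for _ in range(n)]
    rx = threshold_detect(imdd_link(tx, taps, noise_std, rng))
    return sum(a != b for a, b in zip(tx, rx)) / n
```

With `taps=(1.0,)` and no noise the fixed-threshold detector is error-free; adding a second real tap such as `0.4` already produces errors without any noise, because square-law detection turns the field-domain interference into symbol-dependent intensity offsets.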

Integrating programmable plasticity in experiment descriptions for analog neuromorphic hardware

Dec 04, 2024

Abstract: The study of plasticity in spiking neural networks is an active area of research. However, simulations that involve complex plasticity rules, dense connectivity/high synapse counts, complex neuron morphologies, or extended simulation times can be computationally demanding. The BrainScaleS-2 neuromorphic architecture has been designed to address this challenge by supporting "hybrid" plasticity, which combines the concepts of programmability and inherently parallel emulation. In particular, observables that are expensive in numerical simulation, such as per-synapse correlation measurements, are implemented directly in the synapse circuits. The evaluation of the observables, the decision to perform an update, and the magnitude of an update are all determined by a conventional program that runs simultaneously with the analog neural network. Consequently, these systems can offer a scalable and flexible solution in such cases. While previous work on the platform has already reported on the use of different kinds of plasticity, the descriptions of the spiking neural network experiment (topology and protocol) and of the plasticity algorithm have not been connected. In this work, we introduce an integrated framework for describing spiking neural network experiments and plasticity rules in a unified high-level experiment description language for the BrainScaleS-2 platform and demonstrate its use.
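
The division of labor in hybrid plasticity, with correlation observables measured in parallel and updates computed by a program, can be sketched in plain Python. In this hypothetical example, `accumulate_correlations` stands in for the per-synapse correlation sensors (here: all-to-all exponential STDP traces), and `plasticity_kernel` stands in for the program running alongside the network, which reads the observables and writes back clipped integer weights. The 6-bit weight range, time constant, and learning rate are assumptions; this is neither the BrainScaleS-2 API nor the framework introduced in the paper.

```python
import math

TAU = 20.0   # ms, hypothetical correlation time constant
W_MAX = 63   # assumed 6-bit synaptic weight range [0, 63]


def accumulate_correlations(pre_spikes, post_spikes, tau=TAU):
    """Return (causal, acausal) correlation sums for one synapse.

    For every pre/post pair, exp(-|dt| / tau) is added to the causal sum when
    the presynaptic spike precedes the postsynaptic one, and to the acausal
    sum otherwise (all-to-all pairing, a simplification).
    """
    causal = acausal = 0.0
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            dt = t_post - t_pre
            if dt > 0:
                causal += math.exp(-dt / tau)
            elif dt < 0:
                acausal += math.exp(dt / tau)
    return causal, acausal


def plasticity_kernel(weights, observables, learning_rate=4.0):
    """Program-side update: potentiate on causal, depress on acausal correlation."""
    new_weights = []
    for w, (causal, acausal) in zip(weights, observables):
        dw = learning_rate * (causal - acausal)
        # Round to the integer weight grid and clip to the assumed range.
        new_weights.append(int(min(W_MAX, max(0, round(w + dw)))))
    return new_weights


# Usage: synapse 0 fires just before the neuron, synapse 1 just after it.
post = [10.0, 30.0]
pre_by_synapse = [[8.0, 28.0], [12.0, 32.0]]
obs = [accumulate_correlations(pre, post) for pre in pre_by_synapse]
weights = plasticity_kernel([32, 32], obs)
```

Keeping the observable (correlation sums) separate from the decision logic (the kernel) mirrors the split described above: the rule can be changed freely without touching how correlations are measured.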

Demonstrating the Advantages of Analog Wafer-Scale Neuromorphic Hardware

Dec 03, 2024

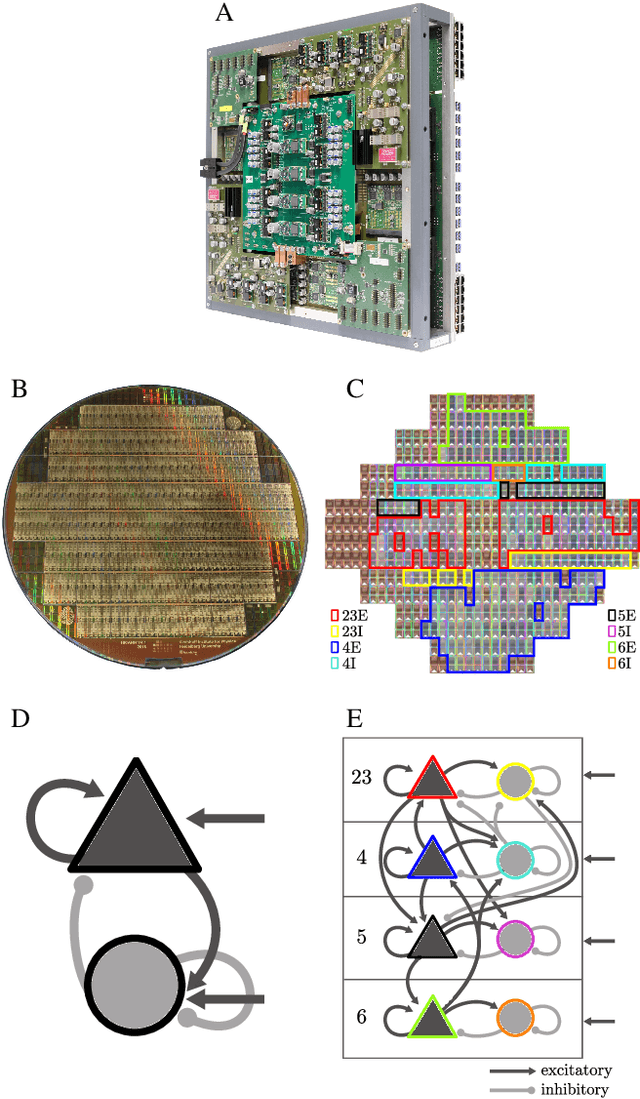

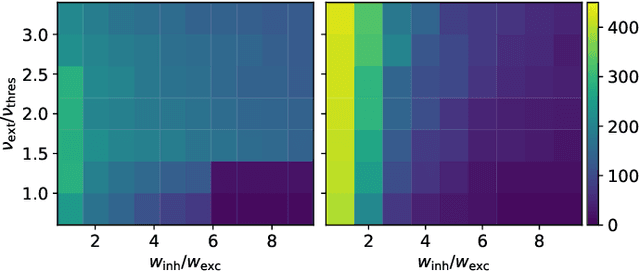

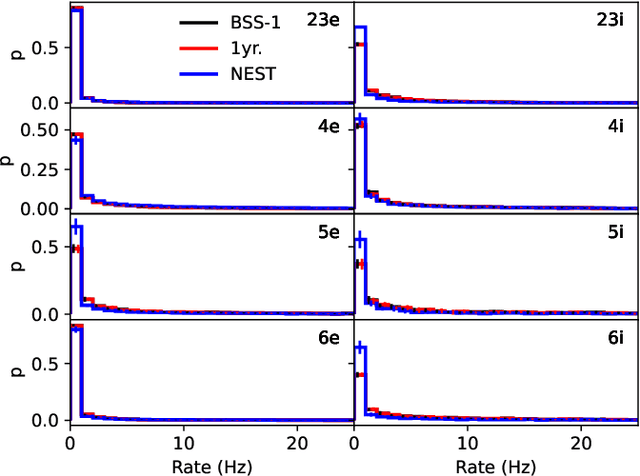

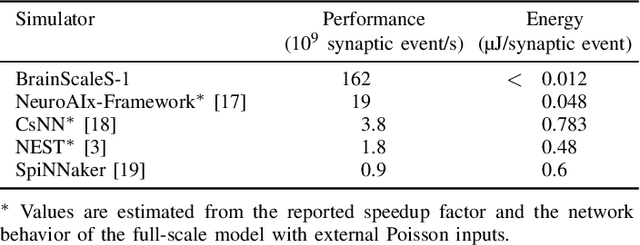

Abstract: As numerical simulations grow in size and complexity, they become increasingly resource-intensive in terms of time and energy. While specialized hardware accelerators often provide order-of-magnitude gains and are state of the art in other scientific fields, their availability and applicability in computational neuroscience are still limited. In this field, neuromorphic accelerators, particularly mixed-signal architectures like the BrainScaleS systems, offer the most significant performance benefits. These systems maintain a constant, accelerated emulation speed independent of network model and size. This is especially beneficial when traditional simulators reach their limits, such as when modeling complex neuron dynamics, incorporating plasticity mechanisms, or running long or repetitive experiments. However, the analog nature of these systems introduces new challenges. In this paper, we demonstrate the capabilities and advantages of the BrainScaleS-1 system and how it can be used in combination with conventional software simulations. We report the emulation time and energy consumption for two biologically inspired networks adapted to the neuromorphic hardware substrate: a balanced random network based on Brunel's model and the cortical microcircuit from Potjans and Diesmann.
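
As a reminder of what a Brunel-style balanced random network looks like, here is a minimal sketch of its fixed in-degree connectivity: excitatory and inhibitory populations in a 4:1 ratio, every neuron receiving a fixed fraction ε of its inputs from each population, and inhibitory weights g times stronger than excitatory ones. The population sizes, ε, g, and J are illustrative assumptions, not the values adapted to BrainScaleS-1 in the paper.

```python
import random


def brunel_connectivity(n_exc=400, epsilon=0.1, j_exc=0.1, g=5.0, seed=0):
    """Return (source, target, weight) tuples with fixed in-degree.

    Neurons 0..n_exc-1 are excitatory; the following n_exc // 4 are
    inhibitory (4:1 ratio). Every neuron receives C_E = epsilon * N_E
    excitatory and C_I = epsilon * N_I inhibitory inputs, drawn without
    replacement and excluding self-connections. Inhibitory weights are -g * J.
    """
    rng = random.Random(seed)
    n_inh = n_exc // 4
    n_total = n_exc + n_inh
    c_exc = round(epsilon * n_exc)
    c_inh = round(epsilon * n_inh)
    synapses = []
    for target in range(n_total):
        exc_pool = [i for i in range(n_exc) if i != target]
        inh_pool = [i for i in range(n_exc, n_total) if i != target]
        for src in rng.sample(exc_pool, c_exc):
            synapses.append((src, target, j_exc))
        for src in rng.sample(inh_pool, c_inh):
            synapses.append((src, target, -g * j_exc))
    return synapses


syn = brunel_connectivity()
```

Summing one neuron's input weights gives ε J (N_E - g N_I) = ε J N_I (4 - g), which is negative for g > 4: the inhibition-dominated regime of Brunel's model.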

Reproduction of AdEx dynamics on neuromorphic hardware through data embedding and simulation-based inference

Dec 03, 2024

Abstract: The development of mechanistic models of physical systems is essential for understanding their behavior and formulating predictions that can be validated experimentally. Calibration of these models, especially for complex systems, requires automated optimization methods due to the impracticality of manual parameter tuning. In this study, we use an autoencoder to automatically extract relevant features from the membrane trace of a complex neuron model emulated on the BrainScaleS-2 neuromorphic system, and subsequently leverage sequential neural posterior estimation (SNPE), a simulation-based inference algorithm, to approximate the posterior distribution of neuron parameters. Our results demonstrate that the autoencoder is able to extract essential features from the observed membrane traces, with which the SNPE algorithm is able to find an approximation of the posterior distribution. This suggests that the combination of an autoencoder with the SNPE algorithm is a promising optimization method for complex systems.

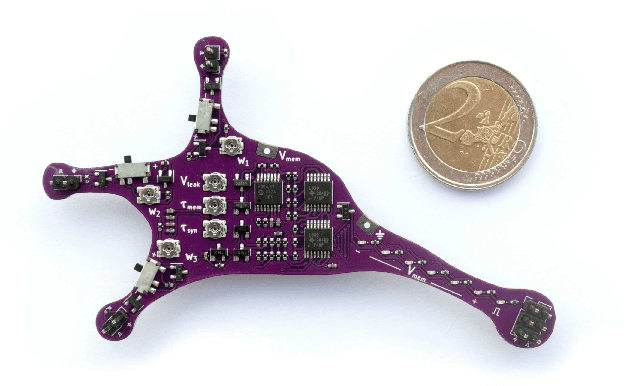

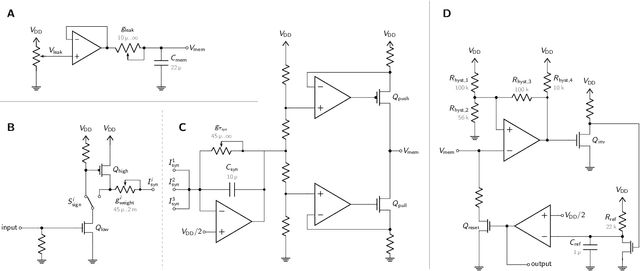

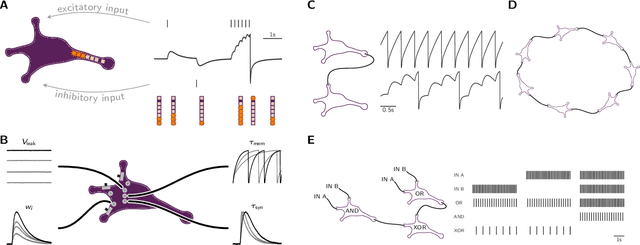

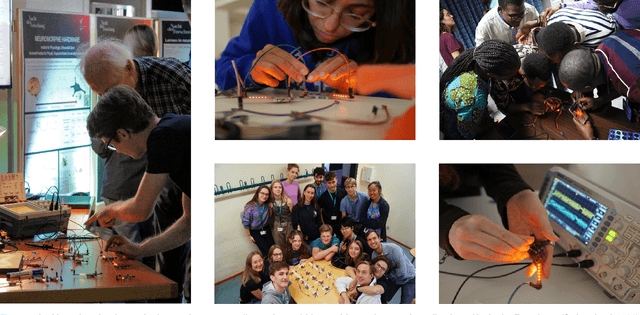

Lu.i -- A low-cost electronic neuron for education and outreach

Apr 25, 2024

Abstract: With an increasing presence of science throughout all parts of society, there is a rising expectation for researchers to effectively communicate their work and, equally, for teachers to discuss contemporary findings in their classrooms. While the community can resort to an established set of teaching aids for the fundamental concepts of most natural sciences, there is a need for similarly illustrative experiments and demonstrators in neuroscience. We therefore introduce Lu.i: a parametrizable electronic implementation of the leaky-integrate-and-fire neuron model in an engaging form factor. These palm-sized neurons can be used to visualize and experience the dynamics of individual cells and small spiking neural networks. When stimulated with real or simulated sensory input, Lu.i demonstrates brain-inspired information processing in the hands of a student. As such, it is actively used at workshops, in classrooms, and for science communication. As a versatile tool for teaching and outreach, Lu.i nurtures the comprehension of neuroscience research and neuromorphic engineering among future generations of scientists and in the general public.
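
The leaky integrate-and-fire dynamics can be written as τ_m dV/dt = -(V - E_L) + R I. For a constant input current, reset to E_L, and no refractory period, the interspike interval has the closed form T = τ_m ln(R I / (R I - (V_th - E_L))), and no spikes occur when R I ≤ V_th - E_L. The sketch below checks this formula against a forward-Euler simulation; all parameter values are illustrative assumptions, not Lu.i's circuit values.

```python
import math

TAU_M = 10e-3   # membrane time constant (s), assumed
E_L = -65e-3    # resting and reset potential (V), assumed
V_TH = -50e-3   # firing threshold (V), assumed
R = 100e6       # membrane resistance (ohm), assumed


def analytic_isi(current):
    """Closed-form ISI for constant input; math.inf below rheobase."""
    drive = R * current
    gap = V_TH - E_L
    if drive <= gap:
        return math.inf
    return TAU_M * math.log(drive / (drive - gap))


def simulated_isi(current, dt=1e-6, duration=0.2):
    """Mean ISI from a forward-Euler LIF simulation with reset to E_L."""
    v = E_L
    spike_times = []
    for step in range(int(duration / dt)):
        v += dt / TAU_M * (-(v - E_L) + R * current)
        if v >= V_TH:
            spike_times.append((step + 1) * dt)
            v = E_L
    if len(spike_times) < 2:
        return math.inf
    return (spike_times[-1] - spike_times[0]) / (len(spike_times) - 1)
```

For example, a 0.3 nA input gives R I = 30 mV against a 15 mV gap, so T = τ_m ln 2 ≈ 6.93 ms, and the simulation agrees up to its time-step resolution.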

jaxsnn: Event-driven Gradient Estimation for Analog Neuromorphic Hardware

Jan 30, 2024

Abstract: Traditional neuromorphic hardware architectures rely on event-driven computation, where the asynchronous transmission of events, such as spikes, triggers local computations within synapses and neurons. While machine learning frameworks are commonly used for gradient-based training, their emphasis on dense data structures poses challenges for processing asynchronous data such as spike trains. This problem is particularly pronounced for typical tensor data structures. In this context, we present a novel library (jaxsnn), built on top of JAX, that departs from conventional machine learning frameworks by providing flexibility in the data structures used and the handling of time, while maintaining Autograd functionality and composability. Our library facilitates the simulation of spiking neural networks and gradient estimation, with a focus on compatibility with time-continuous neuromorphic backends, such as the BrainScaleS-2 system, during the forward pass. This approach opens avenues for more efficient and flexible training of spiking neural networks, bridging the gap between traditional neuromorphic architectures and contemporary machine learning frameworks.
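
To illustrate why event-based representations pair naturally with exact gradients, consider a deliberately simple toy model; it is neither jaxsnn's neuron model nor its API. In a non-leaky integrate-and-fire neuron where input spike i at time t_i switches on a constant current of weight w_i, the membrane is linear in time between input events. The first output spike therefore has the closed form t* = (θ + Σ w_i t_i) / Σ w_i over the inputs that arrived before t*, and differentiating gives ∂t*/∂w_j = (t_j - t*) / Σ w_i. The sketch computes both directly from the event list, without building a dense time-binned tensor.

```python
import math


def first_spike(times, weights, theta):
    """First output spike time of a toy non-leaky IF neuron, plus its gradient.

    Input spike i at time times[i] switches on a constant current weights[i],
    so between input events V(t) = sum_i w_i * (t - t_i) is linear in t.
    Assumes theta > 0. Returns (t_star, d t_star / d w); t_star is math.inf
    if the threshold is never reached.
    """
    order = sorted(range(len(times)), key=lambda i: times[i])
    w_sum = 0.0   # slope of V(t) in the current inter-event interval
    wt_sum = 0.0  # sum of w_i * t_i over inputs received so far
    for pos, i in enumerate(order):
        w_sum += weights[i]
        wt_sum += weights[i] * times[i]
        t_next = times[order[pos + 1]] if pos + 1 < len(order) else math.inf
        if w_sum > 0.0:
            # Solve w_sum * t - wt_sum = theta inside this interval.
            t_star = (theta + wt_sum) / w_sum
            if t_star < t_next:
                grad = [0.0] * len(weights)
                for j in order[: pos + 1]:  # only inputs before t_star matter
                    grad[j] = (times[j] - t_star) / w_sum
                return t_star, grad
    return math.inf, [0.0] * len(weights)


t_star, grad = first_spike([1.0, 2.0, 3.0], [0.5, 1.0, 0.2], theta=1.0)
```

Here the third input arrives after the output spike, so its gradient is exactly zero; the whole computation touches only the three events, whereas a time-stepped simulation would typically store the membrane state at every step to backpropagate.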

Towards Large-scale Network Emulation on Analog Neuromorphic Hardware

Jan 30, 2024

Abstract: We present a novel software feature for the BrainScaleS-2 accelerated neuromorphic platform that facilitates the emulation of partitioned large-scale spiking neural networks. This approach is well suited for many deep spiking neural networks, where the constraint of the largest recurrent subnetwork fitting on the substrate or the limited fan-in of neurons is often not a limitation in practice. We demonstrate the training of two deep spiking neural network models, using the MNIST and EuroSAT datasets, that exceed the physical size constraints of a single-chip BrainScaleS-2 system. The ability to emulate and train networks larger than the substrate provides a pathway for accurate performance evaluation in planned or scaled systems, ultimately advancing the development and understanding of large-scale models and neuromorphic computing architectures.
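
The idea of emulating a network larger than the substrate can be illustrated with a simple, hypothetical partitioning scheme for a feedforward network: each layer is split into chunks that fit a per-run neuron budget, the chunks run one after another, and the recorded output spikes of one layer become the input of the next. The 512-neuron budget is an assumption about single-chip capacity, and `partition_layers` is an illustrative helper, not the BrainScaleS-2 software feature described in the paper.

```python
from dataclasses import dataclass

NEURONS_PER_RUN = 512  # assumed per-chip neuron budget


@dataclass(frozen=True)
class Chunk:
    layer: int  # index of the layer this chunk belongs to
    start: int  # first neuron index within the layer (inclusive)
    stop: int   # last neuron index within the layer (exclusive)


def partition_layers(layer_sizes, capacity=NEURONS_PER_RUN):
    """Split every layer into contiguous chunks of at most `capacity` neurons.

    Chunks are returned in execution order: all chunks of layer 0, then all
    chunks of layer 1, and so on, because layer k needs the complete spike
    output of layer k - 1 as its input.
    """
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    schedule = []
    for layer, size in enumerate(layer_sizes):
        for start in range(0, size, capacity):
            schedule.append(Chunk(layer, start, min(start + capacity, size)))
    return schedule


schedule = partition_layers([1024, 256, 10])
```

The sketch keeps one layer per run for simplicity and ignores the fan-in limit; packing several small consecutive layers into a single run would reduce the number of runs.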

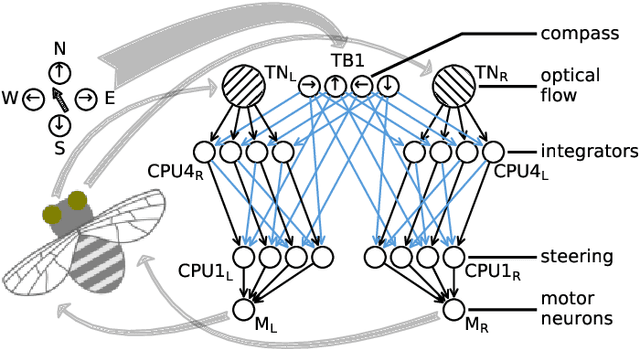

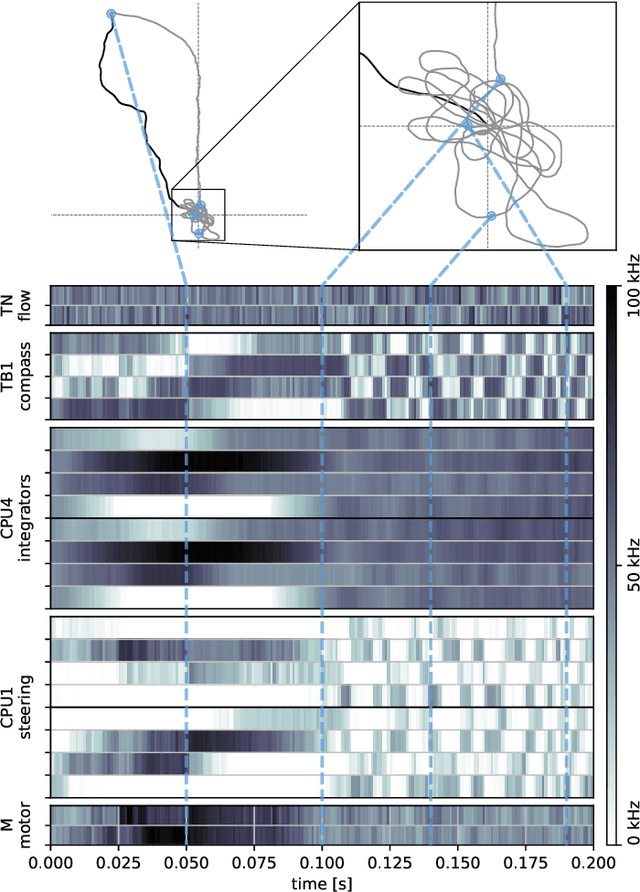

Emulating insect brains for neuromorphic navigation

Dec 31, 2023

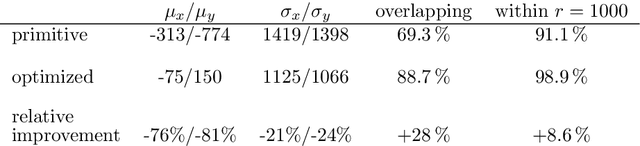

Abstract: Bees display the remarkable ability to return home in a straight line after meandering excursions into their environment. Neurobiological imaging studies have revealed that this capability emerges from a path integration mechanism implemented within the insect's brain. In the present work, we emulate this neural network on the neuromorphic mixed-signal processor BrainScaleS-2 to guide bees, virtually embodied on a digital co-processor, back to their home location after randomly exploring their environment. To realize the underlying neural integrators, we introduce single-neuron spike-based short-term memory cells with axo-axonic synapses. All entities, including environment, sensory organs, brain, actuators, and the virtual body, run autonomously on a single BrainScaleS-2 microchip. The functioning network is fine-tuned for better precision and reliability through an evolution strategy. As BrainScaleS-2 emulates neural processes 1000 times faster than biology, 4800 consecutive bee journeys distributed over 320 generations occur within only half an hour on a single neuromorphic core.
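
Path integration itself can be stated compactly: the animal keeps a running sum of its displacement vectors, and the home vector is the negative of that sum. The sketch below shows this vector-sum view for a random outbound excursion; it is a plain-Python illustration of the computation, not the spiking network with axo-axonic memory cells that the paper emulates. Step length, turning noise, and step count are assumptions.

```python
import math
import random


def random_excursion(n_steps=200, step_length=1.0, turn_std=0.5, seed=0):
    """Return displacement steps (dx, dy) of a meandering outbound walk."""
    rng = random.Random(seed)
    heading = 0.0
    steps = []
    for _ in range(n_steps):
        heading += rng.gauss(0.0, turn_std)  # random turn at every step
        steps.append((step_length * math.cos(heading),
                      step_length * math.sin(heading)))
    return steps


def home_vector(steps):
    """Path integration: negate the running sum of all displacements."""
    x = sum(dx for dx, _ in steps)
    y = sum(dy for _, dy in steps)
    return -x, -y


steps = random_excursion()
hx, hy = home_vector(steps)
distance = math.hypot(hx, hy)
bearing = math.degrees(math.atan2(hy, hx))  # direction of the straight way home
```

For scale, the timing figures above imply that half an hour of wall-clock time at a 1000-fold acceleration corresponds to 1,800,000 s (500 h) of biological time, so each of the 4800 journeys averages at most about 375 s (6.25 min) of biological time, with 4800 / 320 = 15 journeys per generation.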

NeuroBench: Advancing Neuromorphic Computing through Collaborative, Fair and Representative Benchmarking

Apr 15, 2023

Abstract: The field of neuromorphic computing holds great promise in terms of advancing computing efficiency and capabilities by following brain-inspired principles. However, the rich diversity of techniques employed in neuromorphic research has resulted in a lack of clear standards for benchmarking, hindering effective evaluation of the advantages and strengths of neuromorphic methods compared to traditional deep-learning-based methods. This paper presents a collaborative effort, bringing together members from academia and the industry, to define benchmarks for neuromorphic computing: NeuroBench. The goals of NeuroBench are to be a collaborative, fair, and representative benchmark suite developed by the community, for the community. In this paper, we discuss the challenges associated with benchmarking neuromorphic solutions, and outline the key features of NeuroBench. We believe that NeuroBench will be a significant step towards defining standards that can unify the goals of neuromorphic computing and drive its technological progress. Please visit neurobench.ai for the latest updates on the benchmark tasks and metrics.
