Yannik Stradmann

Real-time processing of analog signals on accelerated neuromorphic hardware

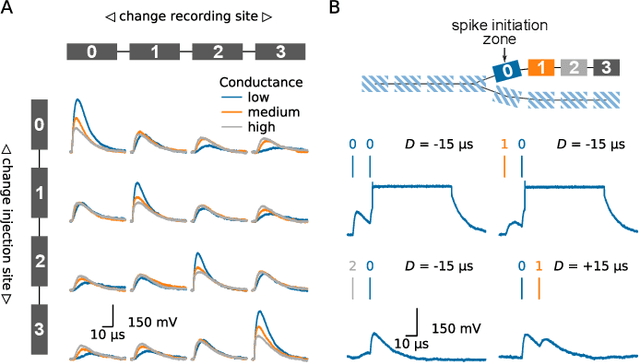

Feb 04, 2026

Abstract:Sensory processing with neuromorphic systems is typically done by using either event-based sensors or translating input signals to spikes before presenting them to the neuromorphic processor. Here, we offer an alternative approach: direct analog signal injection eliminates superfluous and power-intensive analog-to-digital and digital-to-analog conversions, making it particularly suitable for efficient near-sensor processing. We demonstrate this by using the accelerated BrainScaleS-2 mixed-signal neuromorphic research platform and interfacing it directly to microphones and a servo-motor-driven actuator. Utilizing BrainScaleS-2's 1000-fold acceleration factor, we employ a spiking neural network to transform interaural time differences into a spatial code and thereby predict the location of sound sources. Our primary contributions are the first demonstrations of direct, continuous-valued sensor data injection into the analog compute units of the BrainScaleS-2 ASIC, and actuator control using its embedded microprocessors. This enables a fully on-chip processing pipeline, from sensory input handling via spiking neural network processing to physical action. We showcase this by programming the system to localize and align a servo motor with the spatial direction of transient noise peaks in real-time.
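To make the notion of an interaural time difference (ITD) concrete, the following is a minimal software sketch, not the spiking network described in the abstract. It estimates the ITD by brute-force cross-correlation and converts it to an azimuth with the far-field relation sin(θ) = c · ITD / d. The function names, the speed of sound, and the microphone spacing are illustrative assumptions.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 degrees Celsius


def estimate_itd_s(left, right, sample_rate_hz, max_lag):
    """Return the lag (in seconds) that maximizes the cross-correlation.

    A positive result means the right channel lags behind the left one.
    """
    n = len(left)
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for i in range(n):
            j = i + lag
            if 0 <= j < n:
                score += left[i] * right[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / sample_rate_hz


def itd_to_azimuth_deg(itd_s, mic_distance_m):
    """Far-field approximation: sin(theta) = c * ITD / d, clamped to [-1, 1]."""
    s = SPEED_OF_SOUND_M_S * itd_s / mic_distance_m
    s = max(-1.0, min(1.0, s))
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    # Hypothetical test signal: one click, arriving 3 samples later on the right.
    left = [0.0] * 100
    right = [0.0] * 100
    left[50] = 1.0
    right[53] = 1.0
    itd = estimate_itd_s(left, right, sample_rate_hz=48000, max_lag=10)
    print(itd, itd_to_azimuth_deg(itd, mic_distance_m=0.1))
```

The brute-force correlation is quadratic in the search window and is only meant to illustrate the quantity that the network in the paper encodes spatially.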

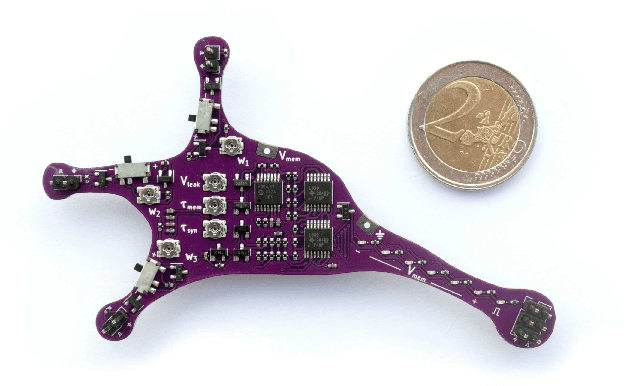

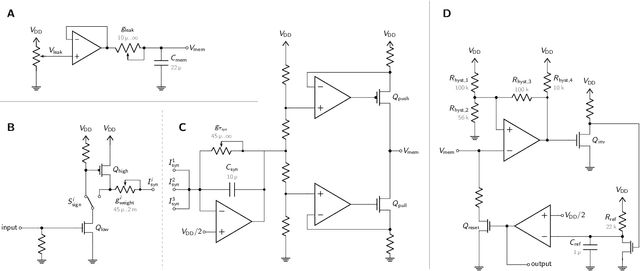

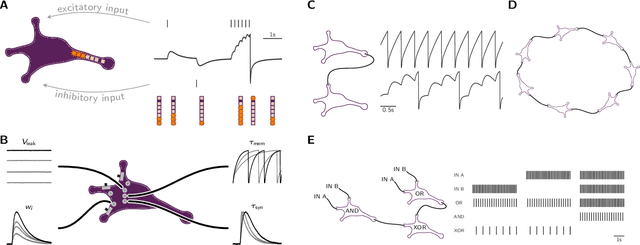

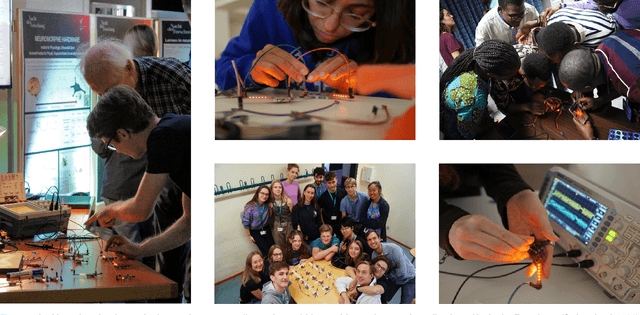

Lu.i -- A low-cost electronic neuron for education and outreach

Apr 25, 2024

Abstract:With an increasing presence of science throughout all parts of society, there is a rising expectation for researchers to effectively communicate their work and, equally, for teachers to discuss contemporary findings in their classrooms. While the community can resort to an established set of teaching aids for the fundamental concepts of most natural sciences, there is a need for similarly illustrative experiments and demonstrators in neuroscience. We therefore introduce Lu.i: a parametrizable electronic implementation of the leaky-integrate-and-fire neuron model in an engaging form factor. These palm-sized neurons can be used to visualize and experience the dynamics of individual cells and small spiking neural networks. When stimulated with real or simulated sensory input, Lu.i demonstrates brain-inspired information processing in the hands of a student. As such, it is actively used at workshops, in classrooms, and for science communication. As a versatile tool for teaching and outreach, Lu.i nurtures the comprehension of neuroscience research and neuromorphic engineering among future generations of scientists and in the general public.
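The leaky-integrate-and-fire model named in the abstract can be sketched in a few lines of software. This is a generic forward-Euler simulation with made-up, dimensionless parameters; it does not reflect the component values or behavior of the Lu.i circuit itself.

```python
def simulate_lif(input_current, dt=1e-4, tau_m=0.01, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0, resistance=1.0):
    """Forward-Euler integration of tau_m * dv/dt = -(v - v_rest) + R * I.

    All parameter values are illustrative assumptions.
    Returns the membrane trace and the list of spike times in seconds.
    """
    v = v_rest
    trace, spike_times = [], []
    for step, i_in in enumerate(input_current):
        dv = (-(v - v_rest) + resistance * i_in) / tau_m
        v += dt * dv
        if v >= v_thresh:
            # Threshold crossing: record a spike and reset the membrane.
            spike_times.append(step * dt)
            v = v_reset
        trace.append(v)
    return trace, spike_times


if __name__ == "__main__":
    # A constant drive above threshold produces regular spiking.
    _, spikes = simulate_lif([1.5] * 1000)
    print(len(spikes), "spikes in 0.1 s")
```

With a constant input whose steady-state value stays below the threshold, the membrane settles without ever spiking, which is the leak term at work.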

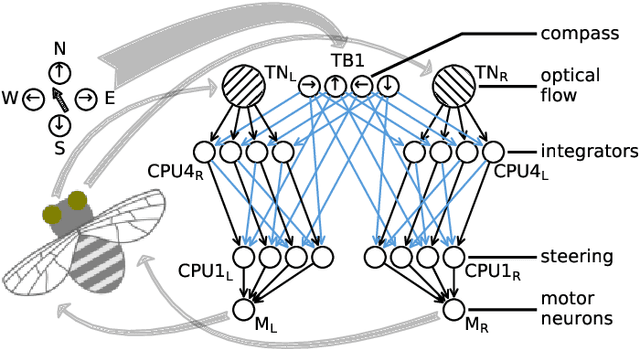

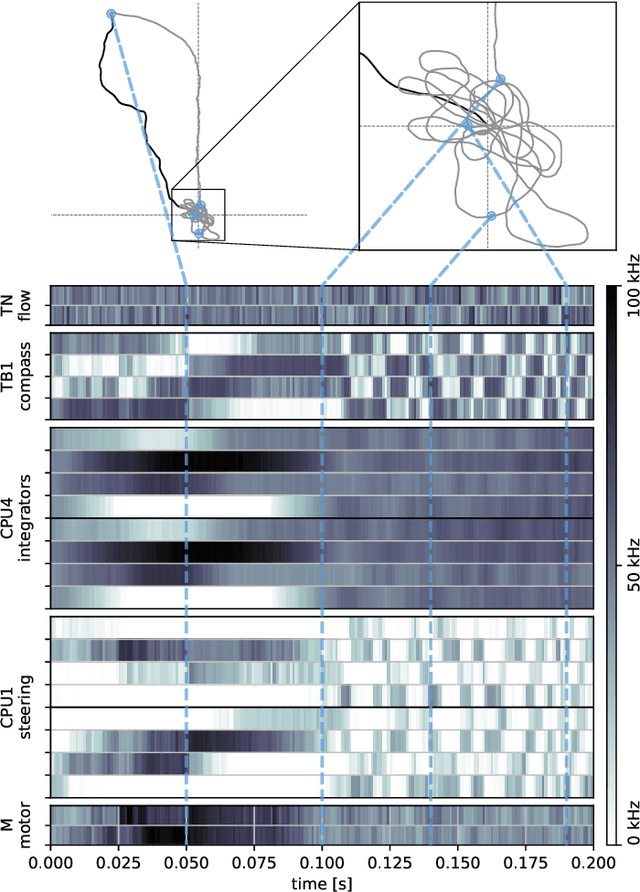

Emulating insect brains for neuromorphic navigation

Dec 31, 2023

Abstract:Bees display the remarkable ability to return home in a straight line after meandering excursions to their environment. Neurobiological imaging studies have revealed that this capability emerges from a path integration mechanism implemented within the insect's brain. In the present work, we emulate this neural network on the neuromorphic mixed-signal processor BrainScaleS-2 to guide bees, virtually embodied on a digital co-processor, back to their home location after randomly exploring their environment. To realize the underlying neural integrators, we introduce single-neuron spike-based short-term memory cells with axo-axonic synapses. All entities, including environment, sensory organs, brain, actuators, and the virtual body, run autonomously on a single BrainScaleS-2 microchip. The functioning network is fine-tuned for better precision and reliability through an evolution strategy. As BrainScaleS-2 emulates neural processes 1000 times faster than biology, 4800 consecutive bee journeys distributed over 320 generations occur within only half an hour on a single neuromorphic core.
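Path integration itself reduces to simple vector arithmetic, which the sketch below illustrates. It is a plain software stand-in under assumed names and units, not the spike-based integrator network emulated on BrainScaleS-2: the agent sums its displacement over an excursion and then points along the negated sum to head home.

```python
import math


def integrate_path(steps):
    """Accumulate (heading_rad, distance) steps into a position relative to home."""
    x = y = 0.0
    for heading_rad, distance in steps:
        x += distance * math.cos(heading_rad)
        y += distance * math.sin(heading_rad)
    return x, y


def home_vector(steps):
    """Return (heading_rad, distance) of the straight line back to the start."""
    x, y = integrate_path(steps)
    return math.atan2(-y, -x), math.hypot(x, y)


if __name__ == "__main__":
    # Hypothetical excursion: one unit east, then one unit north.
    heading, distance = home_vector([(0.0, 1.0), (math.pi / 2, 1.0)])
    print(math.degrees(heading), distance)
```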

A Scalable Approach to Modeling on Accelerated Neuromorphic Hardware

Mar 21, 2022

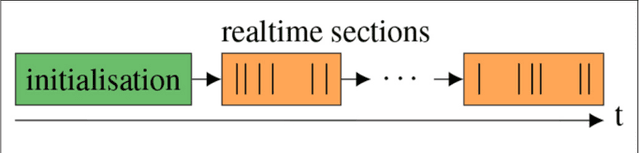

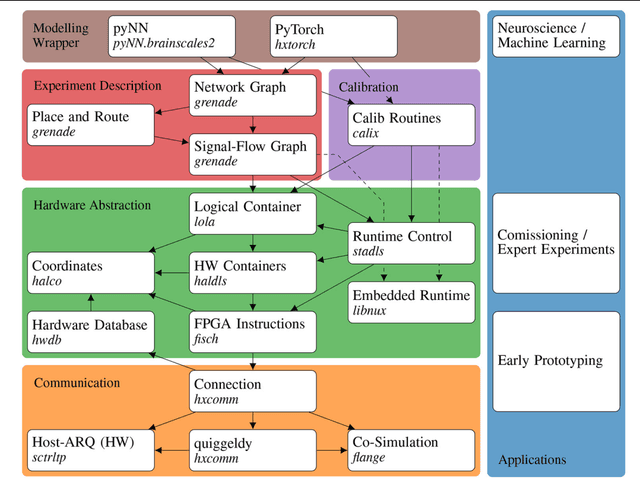

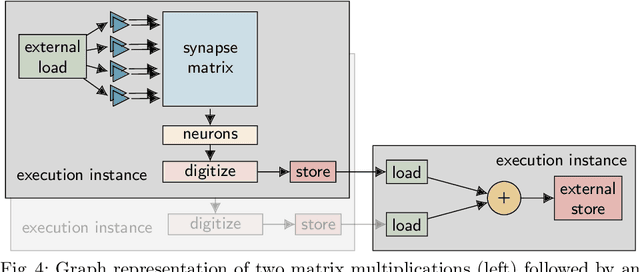

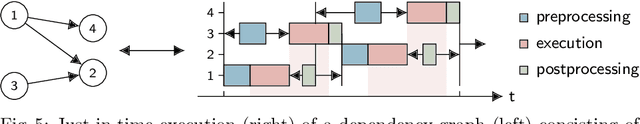

Abstract:Neuromorphic systems open up opportunities to enlarge the explorative space for computational research. However, it is often challenging to unite efficiency and usability. This work presents the software aspects of this endeavor for the BrainScaleS-2 system, a hybrid accelerated neuromorphic hardware architecture based on physical modeling. We introduce key aspects of the BrainScaleS-2 Operating System: experiment workflow, API layering, software design, and platform operation. We present use cases to discuss and derive requirements for the software and showcase the implementation. The focus lies on novel system and software features such as multi-compartmental neurons, fast re-configuration for hardware-in-the-loop training, applications for the embedded processors, the non-spiking operation mode, interactive platform access, and sustainable hardware/software co-development. Finally, we discuss further developments in terms of hardware scale-up, system usability and efficiency.

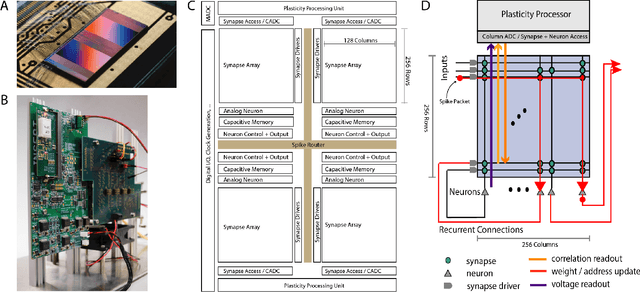

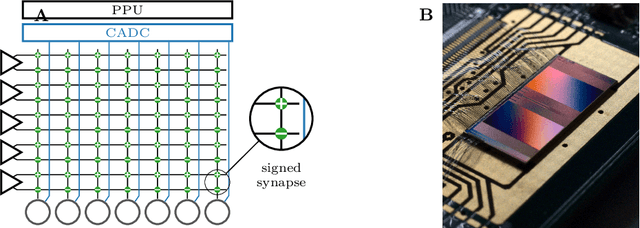

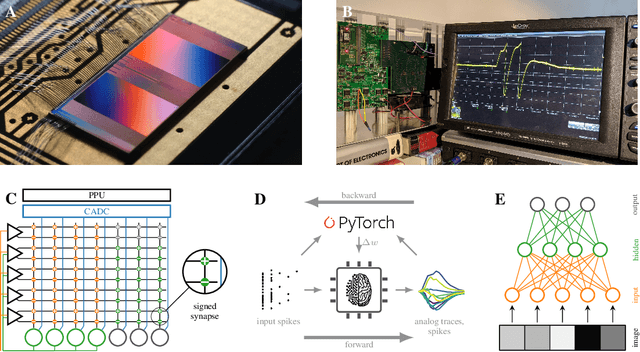

The BrainScaleS-2 accelerated neuromorphic system with hybrid plasticity

Feb 03, 2022

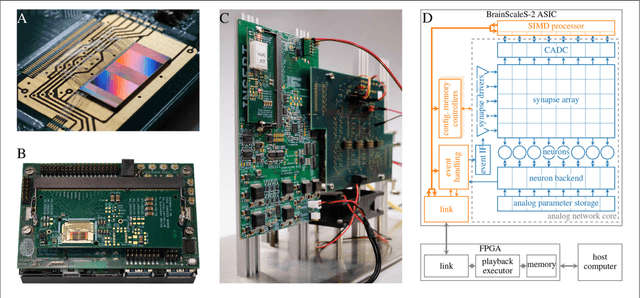

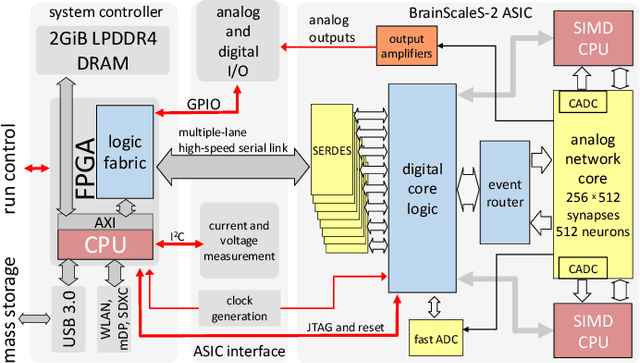

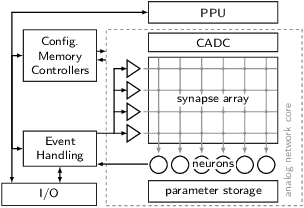

Abstract:Since the beginning of information processing by electronic components, the nervous system has served as a metaphor for the organization of computational primitives. Brain-inspired computing today encompasses a class of approaches ranging from using novel nano-devices for computation to research into large-scale neuromorphic architectures, such as TrueNorth, SpiNNaker, BrainScaleS, Tianjic, and Loihi. While implementation details differ, spiking neural networks - sometimes referred to as the third generation of neural networks - are the common abstraction used to model computation with such systems. Here we describe the second generation of the BrainScaleS neuromorphic architecture, emphasizing applications enabled by this architecture. It combines a custom analog accelerator core supporting the accelerated physical emulation of bio-inspired spiking neural network primitives with a tightly coupled digital processor and a digital event-routing network.

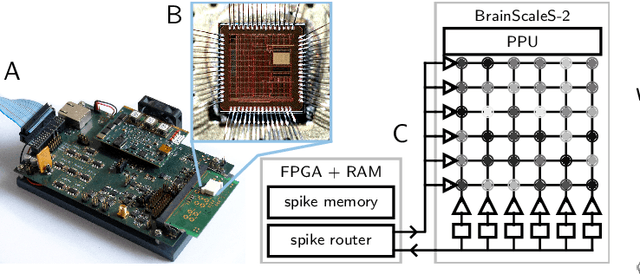

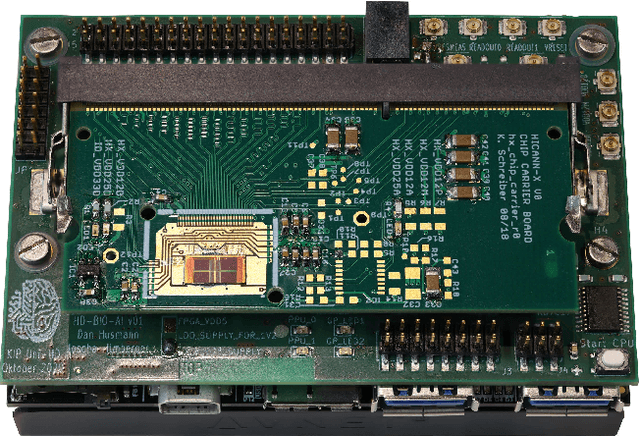

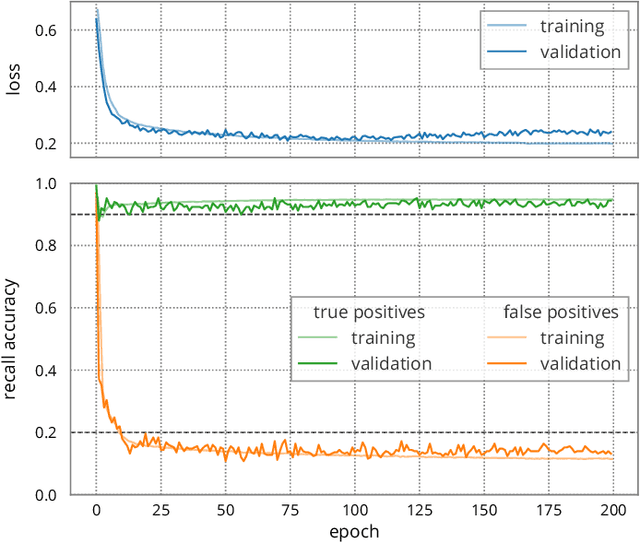

Demonstrating Analog Inference on the BrainScaleS-2 Mobile System

Mar 29, 2021

Abstract:We present the BrainScaleS-2 mobile system as a compact analog inference engine based on the BrainScaleS-2 ASIC and demonstrate its capabilities at classifying a medical electrocardiogram dataset. The analog network core of the ASIC is utilized to perform the multiply-accumulate operations of a convolutional deep neural network. We measure a total energy consumption of 192 µJ for the ASIC and achieve a classification time of 276 µs per electrocardiographic patient sample. Patients with atrial fibrillation are correctly identified with a detection rate of 93.7(7)% at 14.0(10)% false positives. The system is directly applicable to edge inference applications due to its small size, power envelope and flexible I/O capabilities. Possible future applications can furthermore combine conventional machine learning layers with online-learning in spiking neural networks on a single BrainScaleS-2 ASIC. The system has successfully participated in, and proven to operate reliably in, the independently judged competition "Pilotinnovationswettbewerb 'Energieeffizientes KI-System'" of the German Federal Ministry of Education and Research (BMBF).

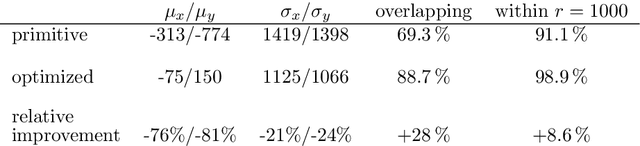

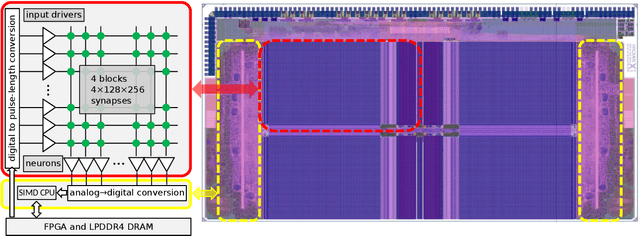

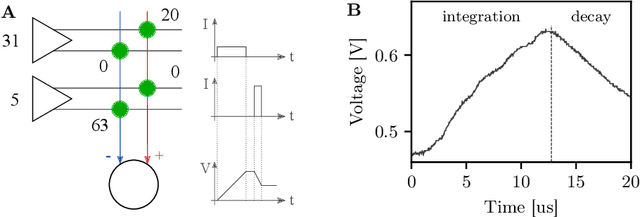

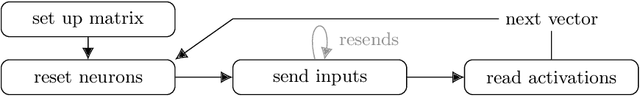

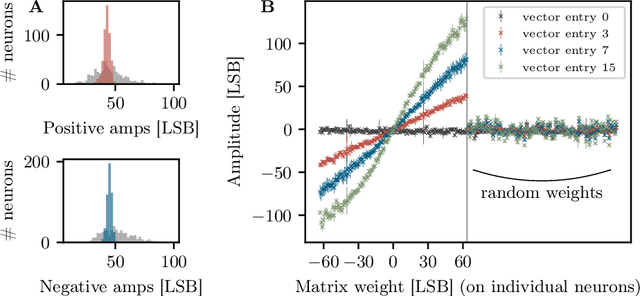

Inference with Artificial Neural Networks on Analog Neuromorphic Hardware

Jul 01, 2020

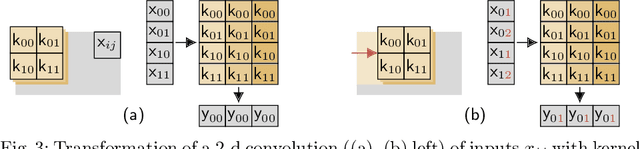

Abstract:The neuromorphic BrainScaleS-2 ASIC comprises mixed-signal neurons and synapse circuits as well as two versatile digital microprocessors. Primarily designed to emulate spiking neural networks, the system can also operate in a vector-matrix multiplication and accumulation mode for artificial neural networks. Analog multiplication is carried out in the synapse circuits, while the results are accumulated on the neurons' membrane capacitors. Designed as an analog, in-memory computing device, it promises high energy efficiency. Fixed-pattern noise and trial-to-trial variations, however, require the implemented networks to cope with a certain level of perturbations. Further limitations are imposed by the digital resolution of the input values (5 bit), matrix weights (6 bit) and resulting neuron activations (8 bit). In this paper, we discuss BrainScaleS-2 as an analog inference accelerator and present calibration as well as optimization strategies, highlighting the advantages of training with hardware in the loop. Among other benchmarks, we classify the MNIST handwritten digits dataset using a two-dimensional convolution and two dense layers. We reach 98.0% test accuracy, closely matching the performance of the same network evaluated in software.
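The effect of the stated resolutions (5-bit inputs, 6-bit weights, 8-bit activations) can be mimicked in software with a quantized vector-matrix product. The sketch below is a rough model under assumptions that are not taken from the paper: inputs are treated as unsigned (0 to 31), weights as signed magnitudes (-63 to 63), outputs as signed 8-bit values, and `gain` is a hypothetical scaling factor standing in for the analog readout. Noise and fixed-pattern variations are not modeled.

```python
def clip_round(value, low, high):
    """Round to the nearest integer and saturate to [low, high]."""
    return max(low, min(high, int(round(value))))


def quantized_matvec(inputs, weights, gain=1.0 / 64):
    """Multiply a quantized input vector with a quantized weight matrix.

    inputs:  list of length R, quantized to an assumed 5-bit unsigned range
    weights: R x C nested list, quantized to an assumed signed 6-bit magnitude
    gain:    hypothetical readout scaling applied before the 8-bit saturation
    """
    q_in = [clip_round(x, 0, 31) for x in inputs]
    q_w = [[clip_round(w, -63, 63) for w in row] for row in weights]
    outputs = []
    for col in range(len(q_w[0])):
        acc = sum(q_in[row] * q_w[row][col] for row in range(len(q_in)))
        outputs.append(clip_round(acc * gain, -128, 127))
    return outputs


if __name__ == "__main__":
    print(quantized_matvec([31, 10], [[63, -63], [0, 10]]))
```

Large accumulated sums saturate at the output range, which is one reason training with the hardware (or a model of it) in the loop can help networks stay within the usable dynamic range.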

hxtorch: PyTorch for BrainScaleS-2 -- Perceptrons on Analog Neuromorphic Hardware

Jul 01, 2020

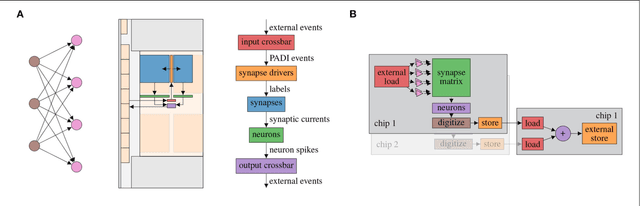

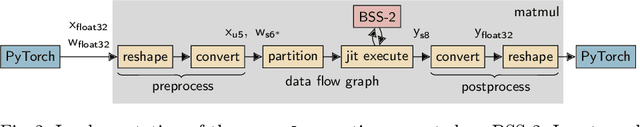

Abstract:We present software facilitating the usage of the BrainScaleS-2 analog neuromorphic hardware system as an inference accelerator for artificial neural networks. The accelerator hardware is transparently integrated into the PyTorch machine learning framework using its extension interface. In particular, we provide accelerator support for vector-matrix multiplications and convolutions; corresponding software-based autograd functionality is provided for hardware-in-the-loop training. Automatic partitioning of neural networks onto one or multiple accelerator chips is supported. We analyze implementation runtime overhead during training as well as inference, provide measurements for existing setups and evaluate the results in terms of the accelerator hardware design limitations. As an application of the introduced framework, we present a model that classifies activities of daily living with smartphone sensor data.

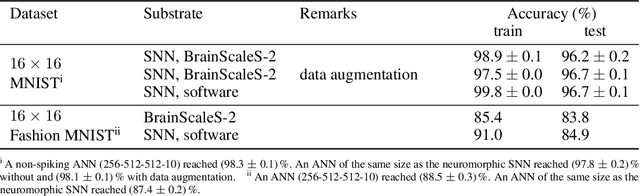

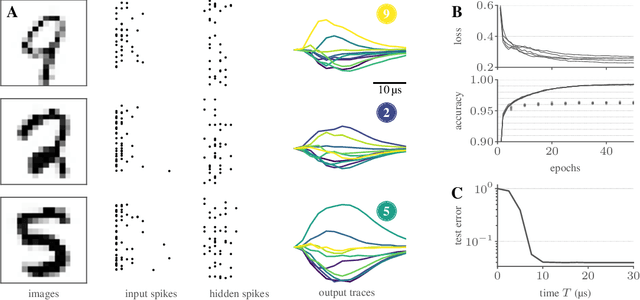

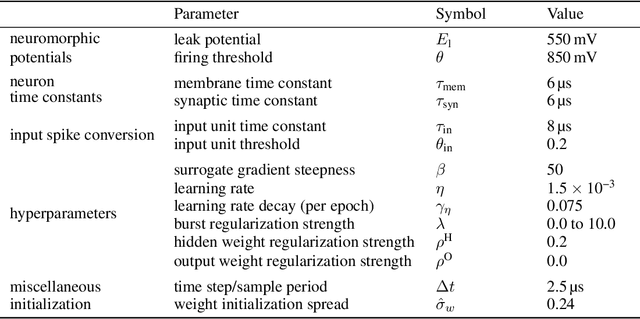

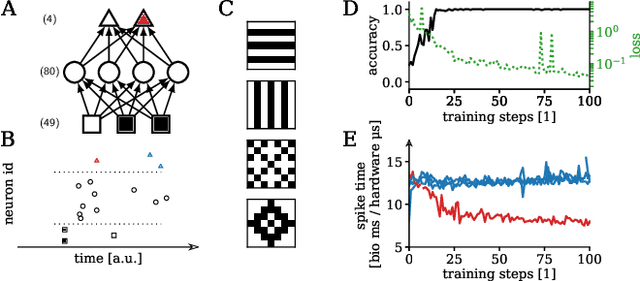

Training spiking multi-layer networks with surrogate gradients on an analog neuromorphic substrate

Jun 12, 2020

Abstract:Spiking neural networks are nature's solution for parallel information processing with high temporal precision at a low metabolic energy cost. To that end, biological neurons integrate inputs as an analog sum and communicate their outputs digitally as spikes, i.e., sparse binary events in time. These architectural principles can be mirrored effectively in analog neuromorphic hardware. Nevertheless, training spiking neural networks with sparse activity on hardware devices remains a major challenge. Primarily this is due to the lack of suitable training methods that take into account device-specific imperfections and operate at the level of individual spikes instead of firing rates. To tackle this issue, we developed a hardware-in-the-loop strategy to train multi-layer spiking networks using surrogate gradients on the analog BrainScaleS-2 chip. Specifically, we used the hardware to compute the forward pass of the network, while the backward pass was computed in software. We evaluated our approach on downscaled 16x16 versions of the MNIST and the fashion MNIST datasets in which spike latencies encoded pixel intensities. The analog neuromorphic substrate closely matched the performance of equivalently sized networks implemented in software. It is capable of processing 70 k patterns per second with a power consumption of less than 300 mW. Added activity regularization resulted in sparse network activity with about 20 spikes per input, at little to no reduction in classification performance. Thus, overall, our work demonstrates low-energy spiking network processing on an analog neuromorphic substrate and sets several new benchmarks for hardware systems in terms of classification accuracy, processing speed, and efficiency. Importantly, our work emphasizes the value of hardware-in-the-loop training and paves the way toward energy-efficient information processing on non-von-Neumann architectures.
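The core idea of a surrogate gradient can be shown without any framework: the forward pass keeps the hard threshold, while the backward pass substitutes a smooth function for its derivative, which is zero almost everywhere. The sketch below uses the derivative of a fast sigmoid as the surrogate; this particular shape and the steepness `beta` are assumptions chosen for illustration, and the abstract does not specify which surrogate the authors used.

```python
def spike_forward(v, threshold=1.0):
    """Hard threshold used in the forward pass: 1.0 if the neuron spikes."""
    return 1.0 if v >= threshold else 0.0


def spike_surrogate_grad(v, threshold=1.0, beta=10.0):
    """Smooth stand-in for d(spike)/dv, used only in the backward pass.

    Assumed form: 1 / (beta * |v - threshold| + 1)^2, peaking at the threshold.
    """
    return 1.0 / (beta * abs(v - threshold) + 1.0) ** 2


if __name__ == "__main__":
    for v in (0.5, 0.9, 1.0, 1.1):
        print(v, spike_forward(v), spike_surrogate_grad(v))
```

In a hardware-in-the-loop setting as described above, the forward values would come from measurements on the chip, and only the surrogate derivative would be evaluated in software.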

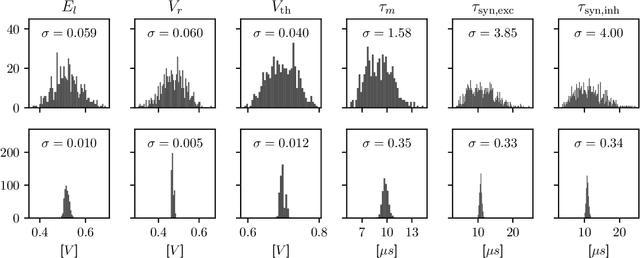

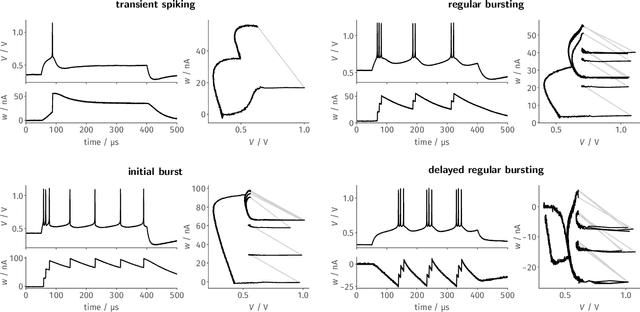

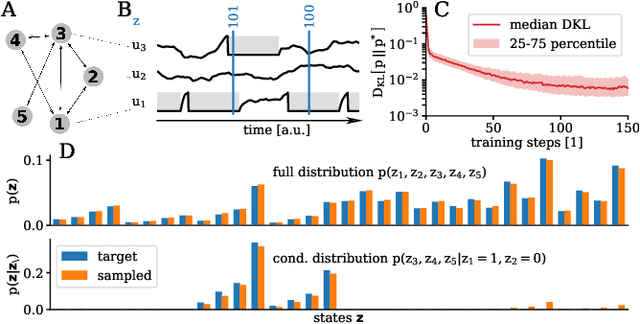

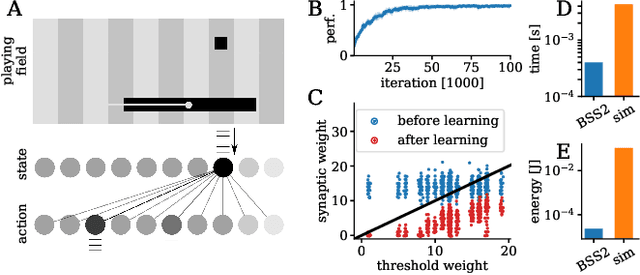

Versatile emulation of spiking neural networks on an accelerated neuromorphic substrate

Dec 30, 2019

Abstract:We present first experimental results on the novel BrainScaleS-2 neuromorphic architecture based on an analog neuro-synaptic core and augmented by embedded microprocessors for complex plasticity and experiment control. The high acceleration factor of 1000 compared to biological dynamics enables the execution of computationally expensive tasks, by allowing the fast emulation of long-duration experiments or rapid iteration over many consecutive trials. The flexibility of our architecture is demonstrated in a suite of five distinct experiments, which emphasize different aspects of the BrainScaleS-2 system.
