Timo C. Wunderlich

SpiNNaker2: A Large-Scale Neuromorphic System for Event-Based and Asynchronous Machine Learning

Jan 09, 2024

Abstract: The joint progress of artificial neural networks (ANNs) and domain specific hardware accelerators such as GPUs and TPUs took over many domains of machine learning research. This development is accompanied by a rapid growth of the required computational demands for larger models and more data. Concurrently, emerging properties of foundation models such as in-context learning drive new opportunities for machine learning applications. However, the computational cost of such applications is a limiting factor of the technology in data centers, and more importantly in mobile devices and edge systems. To mediate the energy footprint and non-trivial latency of contemporary systems, neuromorphic computing systems deeply integrate computational principles of neurobiological systems by leveraging low-power analog and digital technologies. SpiNNaker2 is a digital neuromorphic chip developed for scalable machine learning. The event-based and asynchronous design of SpiNNaker2 allows the composition of large-scale systems involving thousands of chips. This work features the operating principles of SpiNNaker2 systems, outlining the prototype of novel machine learning applications. These applications range from ANNs over bio-inspired spiking neural networks to generalized event-based neural networks. With the successful development and deployment of SpiNNaker2, we aim to facilitate the advancement of event-based and asynchronous algorithms for future generations of machine learning systems.

EventProp: Backpropagation for Exact Gradients in Spiking Neural Networks

Sep 21, 2020

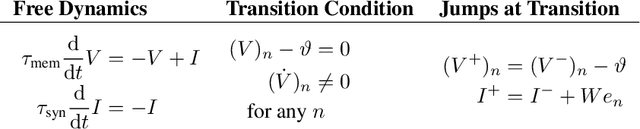

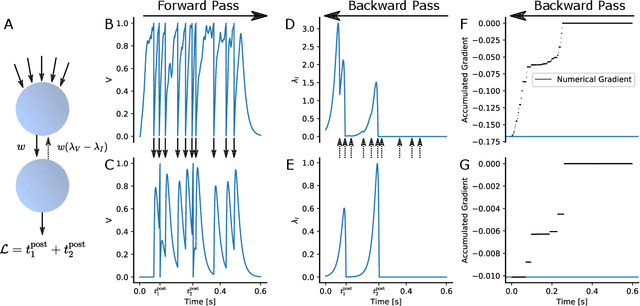

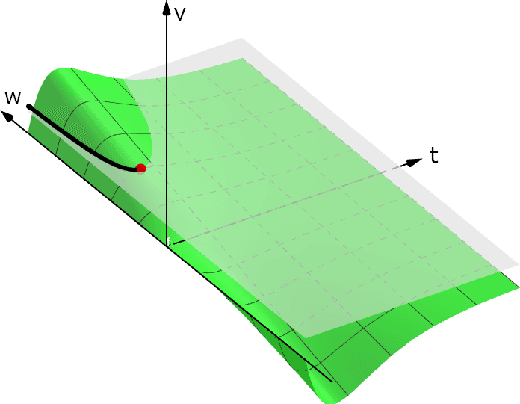

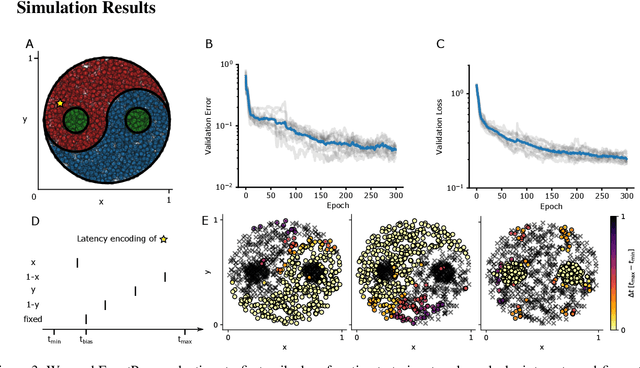

Abstract: We derive the backpropagation algorithm for spiking neural networks composed of leaky integrate-and-fire neurons operating in continuous time. This algorithm, EventProp, computes the exact gradient of an arbitrary loss function of spike times and membrane potentials by backpropagating errors in time. For the first time, by leveraging methods from optimal control theory, we are able to backpropagate errors through spike discontinuities without approximations or smoothing operations. As errors are backpropagated in an event-based manner (at spike times), EventProp requires storing state variables only at these times, providing favorable memory requirements. EventProp can be applied to spiking networks with arbitrary connectivity, including recurrent, convolutional and deep feed-forward architectures. While we consider the leaky integrate-and-fire neuron model in this work, our methodology to derive the gradient can be applied to other spiking neuron models. We demonstrate learning using gradients computed via EventProp in a deep spiking network using an event-based simulator and a non-linearly separable dataset encoded using spike time latencies. Our work supports the rigorous study of gradient-based methods to train spiking neural networks while providing insights toward the development of learning algorithms in neuromorphic hardware.
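For readers unfamiliar with the neuron model named in this abstract, the following is a minimal sketch of a single leaky integrate-and-fire neuron that records its spike times. It is not EventProp and not the paper's event-based simulator: the forward-Euler time stepping, the constant input current, the function name `simulate_lif`, and all parameter values are assumptions chosen for illustration only.

```python
def simulate_lif(input_current, tau_mem=20e-3, v_thresh=1.0,
                 v_reset=0.0, dt=1e-4, t_end=0.1):
    """Integrate dV/dt = (-V + I) / tau_mem with forward Euler.

    Hypothetical helper for illustration: whenever the membrane
    potential V reaches v_thresh, the time is recorded as a spike
    and V is reset to v_reset.
    """
    v = 0.0
    spike_times = []
    steps = int(round(t_end / dt))
    for step in range(steps):
        # Leaky integration toward the (assumed constant) input current.
        v += dt * (-v + input_current) / tau_mem
        if v >= v_thresh:
            # The discontinuity at threshold is the "spike event".
            spike_times.append((step + 1) * dt)
            v = v_reset
    return spike_times


# A suprathreshold input produces a regular spike train;
# a subthreshold input (for example 0.5) produces none.
spikes = simulate_lif(input_current=1.5)
print(len(spikes), spikes[:2])
```

The returned list contains only the spike times, which are the points where an event-based method such as the one described above would need stored state.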

hxtorch: PyTorch for BrainScaleS-2 -- Perceptrons on Analog Neuromorphic Hardware

Jul 01, 2020

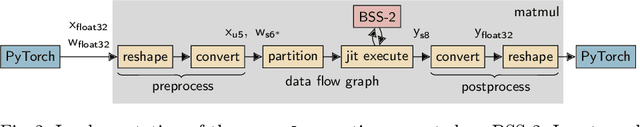

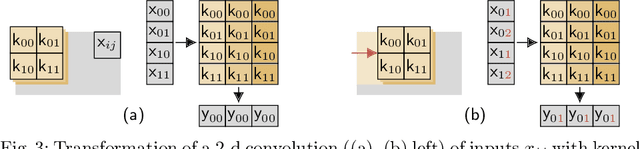

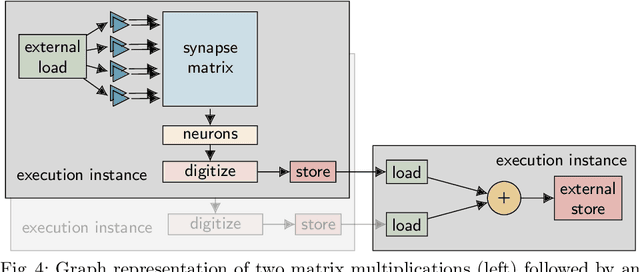

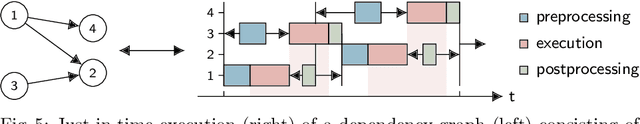

Abstract: We present software facilitating the usage of the BrainScaleS-2 analog neuromorphic hardware system as an inference accelerator for artificial neural networks. The accelerator hardware is transparently integrated into the PyTorch machine learning framework using its extension interface. In particular, we provide accelerator support for vector-matrix multiplications and convolutions; corresponding software-based autograd functionality is provided for hardware-in-the-loop training. Automatic partitioning of neural networks onto one or multiple accelerator chips is supported. We analyze implementation runtime overhead during training as well as inference, provide measurements for existing setups and evaluate the results in terms of the accelerator hardware design limitations. As an application of the introduced framework, we present a model that classifies activities of daily living with smartphone sensor data.

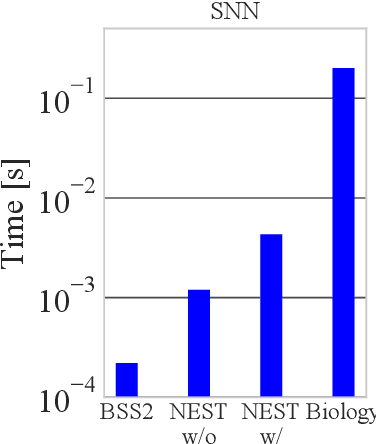

Versatile emulation of spiking neural networks on an accelerated neuromorphic substrate

Dec 30, 2019

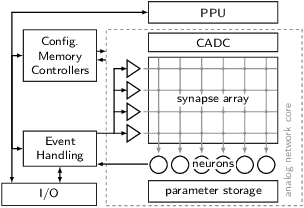

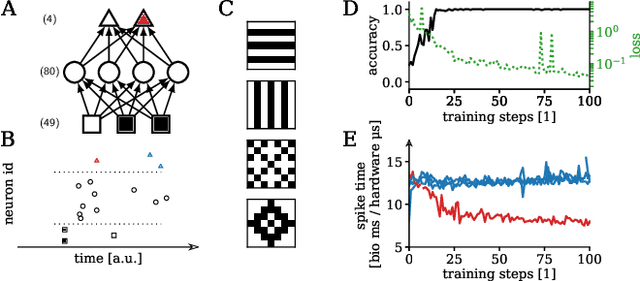

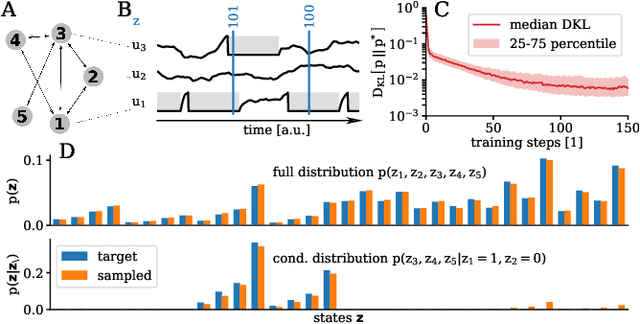

Abstract: We present first experimental results on the novel BrainScaleS-2 neuromorphic architecture based on an analog neuro-synaptic core and augmented by embedded microprocessors for complex plasticity and experiment control. The high acceleration factor of 1000 compared to biological dynamics enables the execution of computationally expensive tasks, by allowing the fast emulation of long-duration experiments or rapid iteration over many consecutive trials. The flexibility of our architecture is demonstrated in a suite of five distinct experiments, which emphasize different aspects of the BrainScaleS-2 system.
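To make the acceleration factor concrete, here is a small arithmetic sketch. It assumes an idealized emulation in which wall-clock time is simply biological time divided by the factor of 1000 stated in the abstract; configuration and data-transfer overhead are ignored, and the function name `wall_clock_seconds` is hypothetical.

```python
ACCELERATION_FACTOR = 1000  # stated in the abstract


def wall_clock_seconds(biological_seconds, acceleration=ACCELERATION_FACTOR):
    """Idealized emulation time, ignoring any setup or I/O overhead."""
    return biological_seconds / acceleration


# One hour of biological time corresponds to a few seconds of emulation.
one_hour = wall_clock_seconds(3600.0)
print(one_hour)
```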

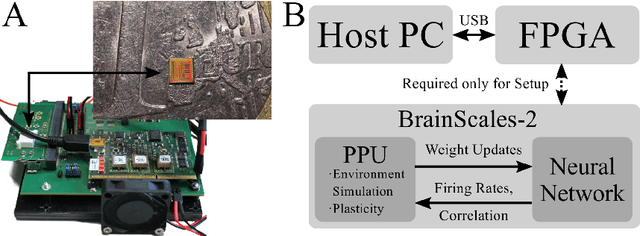

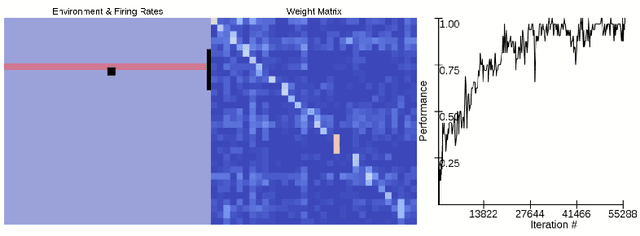

Brain-Inspired Hardware for Artificial Intelligence: Accelerated Learning in a Physical-Model Spiking Neural Network

Oct 01, 2019

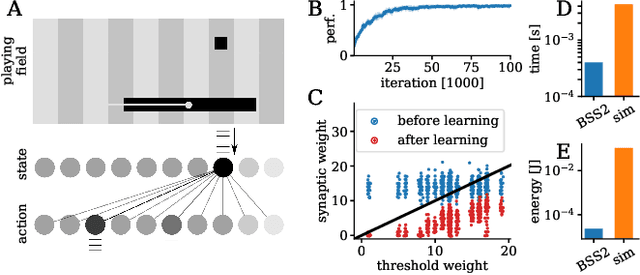

Abstract: Future developments in artificial intelligence will profit from the existence of novel, non-traditional substrates for brain-inspired computing. Neuromorphic computers aim to provide such a substrate that reproduces the brain's capabilities in terms of adaptive, low-power information processing. We present results from a prototype chip of the BrainScaleS-2 mixed-signal neuromorphic system that adopts a physical-model approach with a 1000-fold acceleration of spiking neural network dynamics relative to biological real time. Using the embedded plasticity processor, we both simulate the Pong arcade video game and implement a local plasticity rule that enables reinforcement learning, allowing the on-chip neural network to learn to play the game. The experiment demonstrates key aspects of the employed approach, such as accelerated and flexible learning, high energy efficiency and resilience to noise.
