Eric Müller

Kirchhoff-Institute for Physics, Heidelberg, Germany

Integrating programmable plasticity in experiment descriptions for analog neuromorphic hardware

Dec 04, 2024

Abstract: The study of plasticity in spiking neural networks is an active area of research. However, simulations that involve complex plasticity rules, dense connectivity/high synapse counts, complex neuron morphologies, or extended simulation times can be computationally demanding. The BrainScaleS-2 neuromorphic architecture has been designed to address this challenge by supporting "hybrid" plasticity, which combines the concepts of programmability and inherently parallel emulation. In particular, observables that are expensive in numerical simulation, such as per-synapse correlation measurements, are implemented directly in the synapse circuits. The evaluation of the observables, the decision to perform an update, and the magnitude of an update are all handled by a conventional program that runs simultaneously with the analog neural network. Consequently, these systems can offer a scalable and flexible solution in such cases. While previous work on the platform has already reported on the use of different kinds of plasticity, the descriptions of the spiking neural network experiment topology and protocol have not been connected to the description of the plasticity algorithm. In this work, we introduce an integrated framework for describing spiking neural network experiments and plasticity rules in a unified high-level experiment description language for the BrainScaleS-2 platform and demonstrate its use.
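The division of labour described in this abstract (correlation observables accumulated in the synapse circuits, with the evaluation, update decision, and update magnitude computed in a conventional program) can be illustrated with a minimal sketch in plain Python. Nothing here is the BrainScaleS-2 API: the function name, the learning rate, the threshold, and the 6-bit weight range are assumptions chosen purely for illustration.

```python
# Hypothetical illustration of a "hybrid" plasticity step; not the BrainScaleS-2 API.
W_MAX = 63  # assumed 6-bit weight range, for illustration only


def plasticity_step(weights, causal, acausal, eta=0.5, threshold=1.0):
    """Return updated weights given per-synapse correlation readouts.

    weights, causal and acausal are equally long lists, one entry per synapse.
    causal/acausal stand in for the correlation observables that the
    synapse circuits would accumulate in parallel.
    """
    updated = []
    for w, c_plus, c_minus in zip(weights, causal, acausal):
        delta = c_plus - c_minus               # evaluate the observable
        if abs(delta) >= threshold:            # decide whether to update
            w = w + eta * delta                # magnitude of the update
        w = int(round(w))
        updated.append(max(0, min(W_MAX, w)))  # clip to the assumed weight range
    return updated


weights = [10, 62, 5]
causal = [5.0, 6.0, 0.2]
acausal = [1.0, 0.0, 0.0]
new_weights = plasticity_step(weights, causal, acausal)
print(new_weights)
```

In this toy rule, the first synapse is potentiated, the second saturates at the assumed maximum weight, and the third is left unchanged because its correlation difference stays below the threshold.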

Short-reach Optical Communications: A Real-world Task for Neuromorphic Hardware

Dec 04, 2024

Abstract: Spiking neural networks (SNNs) emulated on dedicated neuromorphic accelerators promise to offer energy-efficient signal processing. However, the neuromorphic advantage over traditional algorithms still remains to be demonstrated in real-world applications. Here, we describe an intensity-modulation, direct-detection (IM/DD) task that is relevant to high-speed optical communication systems used in data centers. Compared to other machine learning-inspired benchmarks, the task offers several advantages. First, the dataset is inherently time-dependent, i.e., there is a time dimension that can be natively mapped to the dynamic evolution of SNNs. Second, small-scale SNNs can achieve the target accuracy required by technical communication standards. Third, due to the small scale and the defined target accuracy, the task facilitates the optimization for real-world aspects, such as energy efficiency, resource requirements, and system complexity.

Demonstrating the Advantages of Analog Wafer-Scale Neuromorphic Hardware

Dec 03, 2024

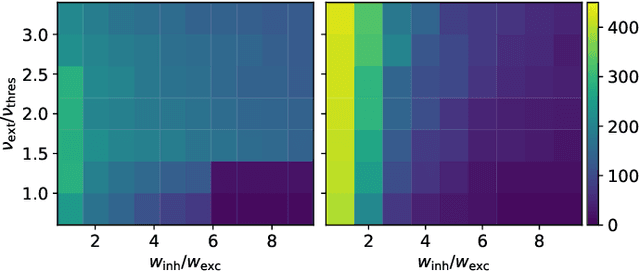

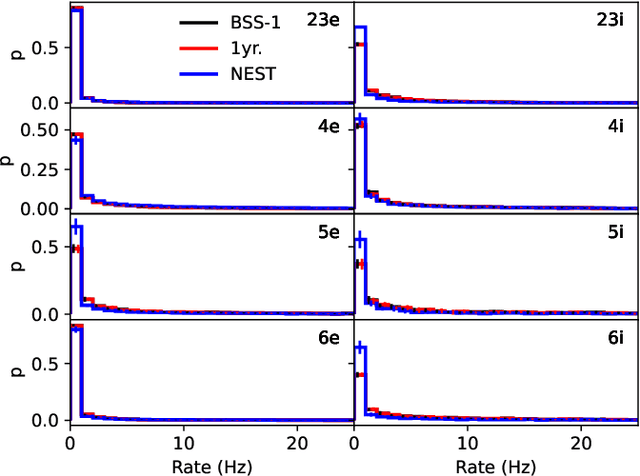

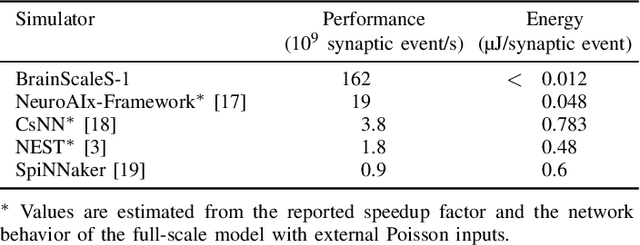

Abstract: As numerical simulations grow in size and complexity, they become increasingly resource-intensive in terms of time and energy. While specialized hardware accelerators often provide order-of-magnitude gains and are state of the art in other scientific fields, their availability and applicability in computational neuroscience is still limited. In this field, neuromorphic accelerators, particularly mixed-signal architectures like the BrainScaleS systems, offer the most significant performance benefits. These systems maintain a constant, accelerated emulation speed independent of network model and size. This is especially beneficial when traditional simulators reach their limits, such as when modeling complex neuron dynamics, incorporating plasticity mechanisms, or running long or repetitive experiments. However, the analog nature of these systems introduces new challenges. In this paper, we demonstrate the capabilities and advantages of the BrainScaleS-1 system and how it can be used in combination with conventional software simulations. We report the emulation time and energy consumption for two biologically inspired networks adapted to the neuromorphic hardware substrate: a balanced random network based on Brunel and the cortical microcircuit from Potjans and Diesmann.

Reproduction of AdEx dynamics on neuromorphic hardware through data embedding and simulation-based inference

Dec 03, 2024

Abstract: The development of mechanistic models of physical systems is essential for understanding their behavior and formulating predictions that can be validated experimentally. Calibration of these models, especially for complex systems, requires automated optimization methods due to the impracticality of manual parameter tuning. In this study, we use an autoencoder to automatically extract relevant features from the membrane trace of a complex neuron model emulated on the BrainScaleS-2 neuromorphic system, and subsequently leverage sequential neural posterior estimation (SNPE), a simulation-based inference algorithm, to approximate the posterior distribution of neuron parameters. Our results demonstrate that the autoencoder is able to extract essential features from the observed membrane traces, with which the SNPE algorithm is able to find an approximation of the posterior distribution. This suggests that the combination of an autoencoder with the SNPE algorithm is a promising optimization method for complex systems.

Towards Large-scale Network Emulation on Analog Neuromorphic Hardware

Jan 30, 2024

Abstract: We present a novel software feature for the BrainScaleS-2 accelerated neuromorphic platform that facilitates the emulation of partitioned large-scale spiking neural networks. This approach is well suited for many deep spiking neural networks, where the constraint of the largest recurrent subnetwork fitting on the substrate or the limited fan-in of neurons is often not a limitation in practice. We demonstrate the training of two deep spiking neural network models, using the MNIST and EuroSAT datasets, that exceed the physical size constraints of a single-chip BrainScaleS-2 system. The ability to emulate and train networks larger than the substrate provides a pathway for accurate performance evaluation in planned or scaled systems, ultimately advancing the development and understanding of large-scale models and neuromorphic computing architectures.
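The idea of partitioning a network that is larger than the substrate can be sketched as follows. This is not the software feature the abstract refers to: the function name, the layer sizes, and the per-run capacity of 512 neurons are hypothetical, and the sketch assumes that a feed-forward layer can simply be cut into consecutive chunks that are emulated one after another.

```python
def partition_layer(num_neurons, capacity):
    """Split neuron indices 0..num_neurons-1 into consecutive chunks of at most `capacity`."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    return [range(start, min(start + capacity, num_neurons))
            for start in range(0, num_neurons, capacity)]


# Layer sizes and capacity are made-up values for illustration.
layer_sizes = [1200, 256, 10]
capacity = 512  # assumed number of neurons available per run

schedule = [partition_layer(n, capacity) for n in layer_sizes]
runs_per_layer = [len(parts) for parts in schedule]
print(runs_per_layer)
```

Under these assumptions, only the first layer exceeds the capacity and needs several runs; the spikes recorded from one run would then serve as input to the partitions of the following layer.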

jaxsnn: Event-driven Gradient Estimation for Analog Neuromorphic Hardware

Jan 30, 2024

Abstract: Traditional neuromorphic hardware architectures rely on event-driven computation, where the asynchronous transmission of events, such as spikes, triggers local computations within synapses and neurons. While machine learning frameworks are commonly used for gradient-based training, their emphasis on dense data structures poses challenges for processing asynchronous data such as spike trains. This problem is particularly pronounced for typical tensor data structures. In this context, we present a novel library (jaxsnn) built on top of JAX, that departs from conventional machine learning frameworks by providing flexibility in the data structures used and the handling of time, while maintaining Autograd functionality and composability. Our library facilitates the simulation of spiking neural networks and gradient estimation, with a focus on compatibility with time-continuous neuromorphic backends, such as the BrainScaleS-2 system, during the forward pass. This approach opens avenues for more efficient and flexible training of spiking neural networks, bridging the gap between traditional neuromorphic architectures and contemporary machine learning frameworks.
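To make the contrast between event-based and dense representations concrete, the following sketch rasterizes a short list of spike events onto a dense time grid and compares the number of stored values. It uses plain Python lists and does not use jaxsnn or JAX; the helper name, the integer microsecond time base, and the example spike times are assumptions for illustration.

```python
def events_to_dense(events, num_neurons, t_max_us, dt_us):
    """Rasterize (time_us, neuron) spike events onto a dense [steps][neurons] 0/1 grid."""
    steps = t_max_us // dt_us
    grid = [[0] * num_neurons for _ in range(steps)]
    for t_us, neuron in events:
        step = min(t_us // dt_us, steps - 1)  # time bin that contains the spike
        grid[step][neuron] = 1
    return grid


# (spike time in microseconds, neuron index); made-up example data
events = [(1200, 0), (4000, 2), (7100, 1)]
dense = events_to_dense(events, num_neurons=3, t_max_us=10000, dt_us=100)

dense_entries = len(dense) * len(dense[0])  # values stored by the dense grid
event_entries = 2 * len(events)             # values stored by the event list
print(dense_entries, event_entries)
```

The dense grid also quantizes spike times to the bin width, whereas the event list keeps them at full resolution, which is the property that matters for time-continuous backends.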

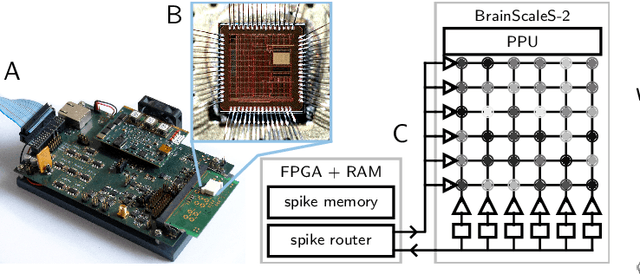

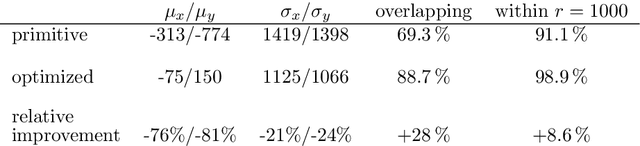

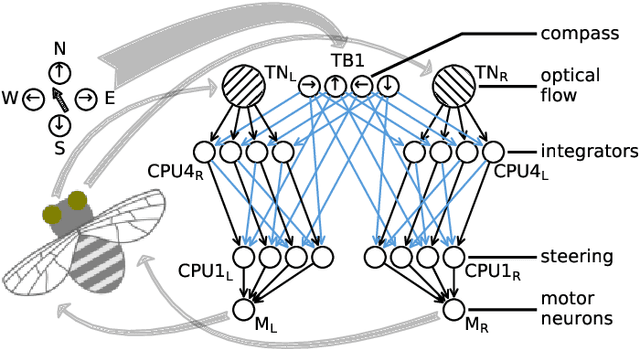

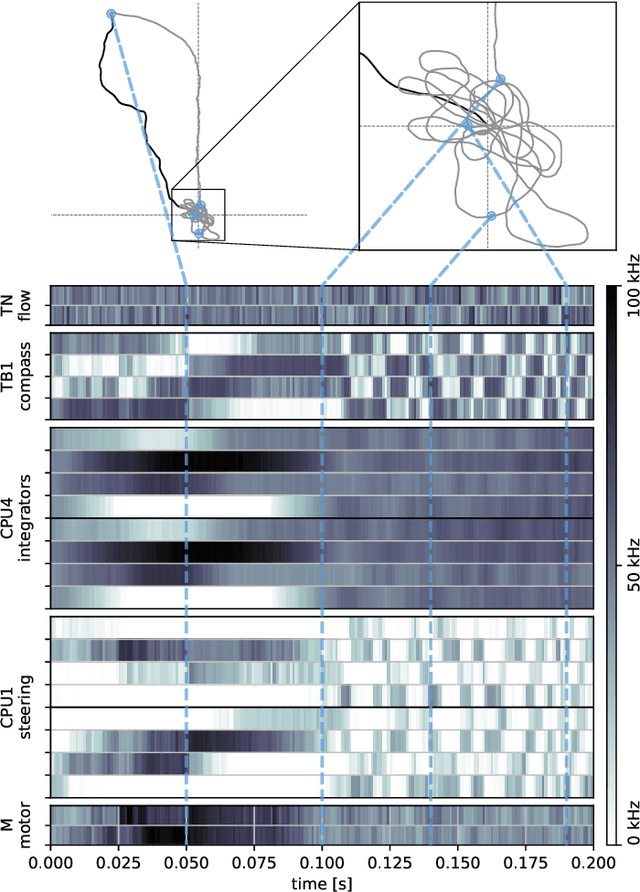

Emulating insect brains for neuromorphic navigation

Dec 31, 2023

Abstract: Bees display the remarkable ability to return home in a straight line after meandering excursions to their environment. Neurobiological imaging studies have revealed that this capability emerges from a path integration mechanism implemented within the insect's brain. In the present work, we emulate this neural network on the neuromorphic mixed-signal processor BrainScaleS-2 to guide bees, virtually embodied on a digital co-processor, back to their home location after randomly exploring their environment. To realize the underlying neural integrators, we introduce single-neuron spike-based short-term memory cells with axo-axonic synapses. All entities, including environment, sensory organs, brain, actuators, and the virtual body, run autonomously on a single BrainScaleS-2 microchip. The functioning network is fine-tuned for better precision and reliability through an evolution strategy. As BrainScaleS-2 emulates neural processes 1000 times faster than biology, 4800 consecutive bee journeys distributed over 320 generations occur within only half an hour on a single neuromorphic core.
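The figures quoted at the end of this abstract can be related to each other with a short calculation. The sketch assumes that "half an hour" means exactly 30 minutes and that all of it is emulation time, so the per-journey values are upper bounds; the variable names are illustrative.

```python
speedup = 1000           # emulation speed relative to biology (from the abstract)
journeys = 4800          # consecutive bee journeys (from the abstract)
generations = 320        # generations of the evolution strategy (from the abstract)
wall_clock_s = 30 * 60   # "half an hour", taken as exactly 30 min (assumption)

journeys_per_generation = journeys // generations
wall_clock_per_journey_s = wall_clock_s / journeys
bio_time_per_journey_s = wall_clock_per_journey_s * speedup
bio_time_total_h = wall_clock_s * speedup / 3600

print(journeys_per_generation)   # 15
print(wall_clock_per_journey_s)  # 0.375
print(bio_time_per_journey_s)    # 375.0
print(bio_time_total_h)          # 500.0
```

Under these assumptions, each generation comprises 15 journeys, and the half hour of wall-clock time corresponds to roughly 500 hours in the biological time domain.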

Simulation-based Inference for Model Parameterization on Analog Neuromorphic Hardware

Mar 28, 2023

Abstract: The BrainScaleS-2 (BSS-2) system implements physical models of neurons as well as synapses and aims for an energy-efficient and fast emulation of biological neurons. When replicating neuroscientific experiment results, a major challenge is finding suitable model parameters. This study investigates the suitability of the sequential neural posterior estimation (SNPE) algorithm for parameterizing a multi-compartmental neuron model emulated on the BSS-2 analog neuromorphic hardware system. In contrast to other optimization methods such as genetic algorithms or stochastic searches, the SNPE algorithm belongs to the class of approximate Bayesian computing (ABC) methods and estimates the posterior distribution of the model parameters; access to the posterior allows classifying the confidence in parameter estimations and unveiling correlation between model parameters. In previous applications, the SNPE algorithm showed a higher computational efficiency than traditional ABC methods. For our multi-compartmental model, we show that the approximated posterior is in agreement with experimental observations and that the identified correlation between parameters is in agreement with theoretical expectations. Furthermore, we show that the algorithm can deal with high-dimensional observations and parameter spaces. These results suggest that the SNPE algorithm is a promising approach for automating the parameterization of complex models, especially when dealing with characteristic properties of analog neuromorphic substrates, such as trial-to-trial variations or limited parameter ranges.
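For readers unfamiliar with approximate Bayesian methods, the sketch below shows plain rejection ABC, i.e. one of the traditional ABC methods the abstract contrasts SNPE with; it is not SNPE and does not involve the BSS-2 hardware. The toy simulator, the uniform prior, the tolerance, and all names are assumptions for illustration.

```python
import random


def simulate(theta, rng):
    """Toy stochastic model: the observation is the parameter plus Gaussian noise."""
    return theta + rng.gauss(0.0, 0.5)


def rejection_abc(observed, num_draws, epsilon, rng):
    """Keep prior draws whose simulated observation lies within epsilon of the data."""
    accepted = []
    for _ in range(num_draws):
        theta = rng.uniform(0.0, 10.0)  # draw from a uniform prior on [0, 10]
        if abs(simulate(theta, rng) - observed) < epsilon:
            accepted.append(theta)
    return accepted


rng = random.Random(0)  # fixed seed for reproducibility
posterior_samples = rejection_abc(observed=4.0, num_draws=20000, epsilon=0.2, rng=rng)
posterior_mean = sum(posterior_samples) / len(posterior_samples)
print(len(posterior_samples), round(posterior_mean, 2))
```

Only a few percent of the draws are accepted here, which hints at why rejection-based schemes become expensive when every simulation is costly; the accepted samples nevertheless approximate a full posterior rather than a single best-fit value.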

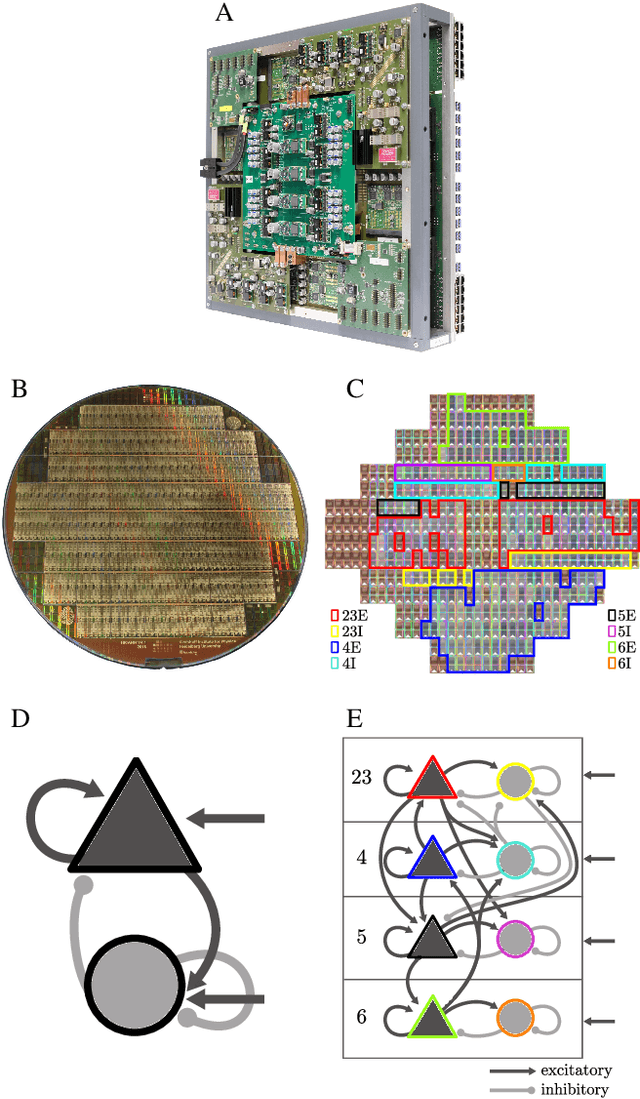

From Clean Room to Machine Room: Commissioning of the First-Generation BrainScaleS Wafer-Scale Neuromorphic System

Mar 22, 2023

Abstract: The first generation of BrainScaleS, also referred to as BrainScaleS-1, is a neuromorphic system for emulating large-scale networks of spiking neurons. Following a "physical modeling" principle, its VLSI circuits are designed to emulate the dynamics of biological examples: analog circuits implement neurons and synapses with time constants that arise from their electronic components' intrinsic properties. It operates in continuous time, with dynamics typically matching an acceleration factor of 10000 compared to the biological regime. A fault-tolerant design allows it to achieve wafer-scale integration despite unavoidable analog variability and component failures. In this paper, we present the commissioning process of a BrainScaleS-1 wafer module, providing a short description of the system's physical components, illustrating the steps taken during its assembly and the measures taken to operate it. Furthermore, we reflect on the system's development process and the lessons learned, and conclude with a demonstration of its functionality by emulating a wafer-scale synchronous firing chain, the largest spiking network emulation run with analog components and individual synapses to date.

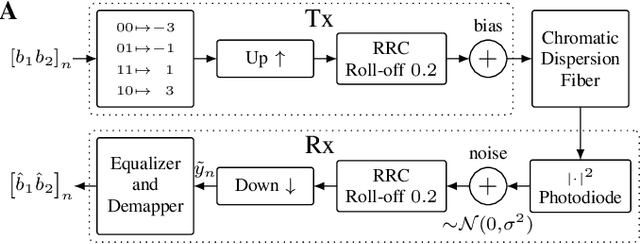

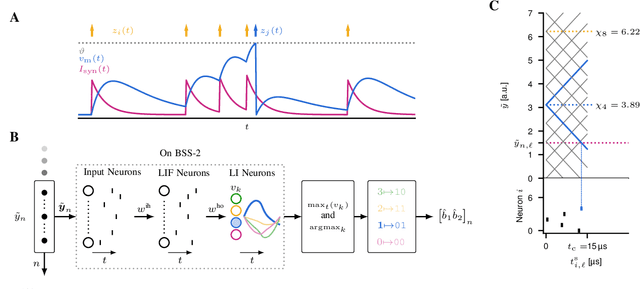

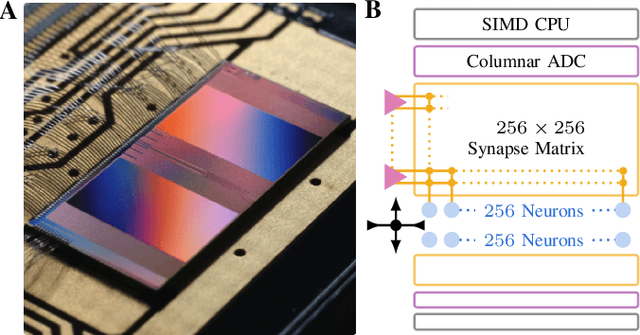

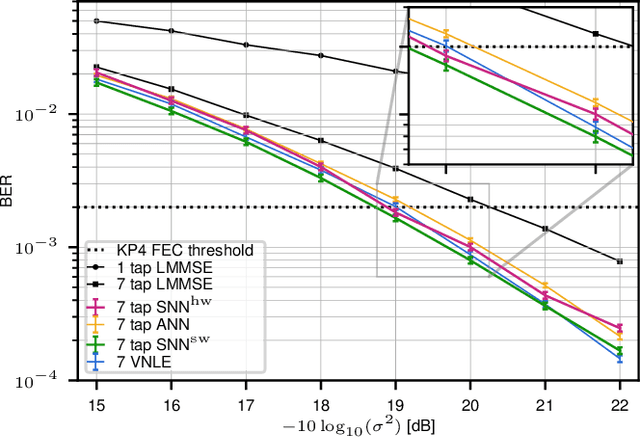

Spiking Neural Network Nonlinear Demapping on Neuromorphic Hardware for IM/DD Optical Communication

Feb 28, 2023

Abstract: Neuromorphic computing implementing spiking neural networks (SNN) is a promising technology for reducing the footprint of optical transceivers, as required by the fast-paced growth of data center traffic. In this work, an SNN nonlinear demapper is designed and evaluated on a simulated intensity-modulation direct-detection link with chromatic dispersion. The SNN demapper is implemented in software and on the analog neuromorphic hardware system BrainScaleS-2 (BSS-2). For comparison, linear equalization (LE), Volterra nonlinear equalization (VNLE), and nonlinear demapping by an artificial neural network (ANN) implemented in software are considered. At a pre-forward error correction bit error rate of 2e-3, the software SNN outperforms LE by 1.5 dB, VNLE by 0.3 dB and the ANN by 0.5 dB. The hardware penalty of the SNN on BSS-2 is only 0.2 dB, i.e., also on hardware, the SNN performs better than all software implementations of the reference approaches. Hence, this work demonstrates that SNN demappers implemented on electrical analog hardware can realize powerful and accurate signal processing fulfilling the strict requirements of optical communications.
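The claim that the hardware SNN still outperforms all software references follows from simple dB arithmetic, sketched below. The sketch assumes that the quoted gains and the hardware penalty refer to the same quantity at the stated bit error rate and combine additively in dB; the dictionary and function names are illustrative.

```python
# Gains of the software SNN over each reference, in dB (from the abstract).
software_gain_db = {"LE": 1.5, "VNLE": 0.3, "ANN": 0.5}
hardware_penalty_db = 0.2  # SNN on BSS-2 relative to the software SNN (from the abstract)

# Remaining gain of the hardware SNN, assuming dB values simply subtract.
hardware_gain_db = {name: round(gain - hardware_penalty_db, 1)
                    for name, gain in software_gain_db.items()}


def db_to_power_ratio(db):
    """Convert a value in dB to a linear power ratio."""
    return 10 ** (db / 10)


for name, gain in hardware_gain_db.items():
    print(name, gain, round(db_to_power_ratio(gain), 3))
```

All three remaining gains are positive (1.3 dB, 0.1 dB, and 0.3 dB), which is consistent with the abstract's statement, although the margin over VNLE is small.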
