Philipp Spilger

Integrating programmable plasticity in experiment descriptions for analog neuromorphic hardware

Dec 04, 2024

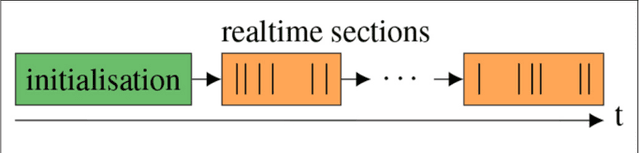

Abstract: The study of plasticity in spiking neural networks is an active area of research. However, simulations that involve complex plasticity rules, dense connectivity/high synapse counts, complex neuron morphologies, or extended simulation times can be computationally demanding. The BrainScaleS-2 neuromorphic architecture has been designed to address this challenge by supporting "hybrid" plasticity, which combines the concepts of programmability and inherently parallel emulation. In particular, observables that are expensive in numerical simulation, such as per-synapse correlation measurements, are implemented directly in the synapse circuits. The evaluation of the observables, the decision to perform an update, and the magnitude of an update are all handled by a conventional program that runs concurrently with the analog neural network. Consequently, these systems can offer a scalable and flexible solution in such cases. While previous work on the platform has already reported on the use of different kinds of plasticity, the description of the spiking neural network experiment (topology and protocol) and the description of the plasticity algorithm have not been connected. In this work, we introduce an integrated framework for describing spiking neural network experiments and plasticity rules in a unified high-level experiment description language for the BrainScaleS-2 platform and demonstrate its use.
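The division of labour described in this abstract can be illustrated with a minimal Python sketch. It is not the BrainScaleS-2 API or the framework introduced in the paper: the `Synapse` class, `plasticity_step`, the 6-bit weight range, the threshold, and the learning rate are all hypothetical stand-ins. The `correlation` field plays the role of the observable accumulated in the synapse circuit, and the function plays the role of the conventional program that evaluates it, decides whether to update, and sets the update magnitude.

```python
from dataclasses import dataclass
from typing import List

W_MAX = 63  # assumption: 6-bit digital synaptic weights (0..63)


@dataclass
class Synapse:
    weight: int          # digital weight stored in the synapse
    correlation: float   # stand-in for the analog correlation observable


def plasticity_step(synapses: List[Synapse],
                    threshold: float = 0.5,
                    learning_rate: float = 4.0) -> List[Synapse]:
    """One invocation of a hypothetical plasticity program."""
    for syn in synapses:
        # Evaluate the observable and decide whether to update at all.
        if abs(syn.correlation) >= threshold:
            # The magnitude of the update is computed in software.
            delta = round(learning_rate * syn.correlation)
            # Clamp to the representable weight range.
            syn.weight = max(0, min(W_MAX, syn.weight + delta))
        # Reset the accumulated observable for the next interval.
        syn.correlation = 0.0
    return synapses


synapses = [Synapse(10, 1.0), Synapse(10, 0.1), Synapse(62, 1.0), Synapse(2, -1.0)]
plasticity_step(synapses)
print([s.weight for s in synapses])  # [14, 10, 63, 0]
```

In this sketch only the cheap per-synapse arithmetic runs in the program; the costly part, accumulating the correlation, is assumed to have happened elsewhere, which mirrors the "hybrid" split the abstract describes.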

jaxsnn: Event-driven Gradient Estimation for Analog Neuromorphic Hardware

Jan 30, 2024

Abstract: Traditional neuromorphic hardware architectures rely on event-driven computation, where the asynchronous transmission of events, such as spikes, triggers local computations within synapses and neurons. While machine learning frameworks are commonly used for gradient-based training, their emphasis on dense data structures poses challenges for processing asynchronous data such as spike trains. This problem is particularly pronounced for typical tensor data structures. In this context, we present a novel library (jaxsnn) built on top of JAX, that departs from conventional machine learning frameworks by providing flexibility in the data structures used and the handling of time, while maintaining Autograd functionality and composability. Our library facilitates the simulation of spiking neural networks and gradient estimation, with a focus on compatibility with time-continuous neuromorphic backends, such as the BrainScaleS-2 system, during the forward pass. This approach opens avenues for more efficient and flexible training of spiking neural networks, bridging the gap between traditional neuromorphic architectures and contemporary machine learning frameworks.

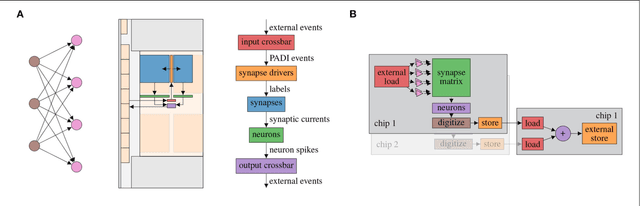

Towards Large-scale Network Emulation on Analog Neuromorphic Hardware

Jan 30, 2024

Abstract: We present a novel software feature for the BrainScaleS-2 accelerated neuromorphic platform that facilitates the emulation of partitioned large-scale spiking neural networks. This approach is well suited for many deep spiking neural networks, where the constraint of the largest recurrent subnetwork fitting on the substrate or the limited fan-in of neurons is often not a limitation in practice. We demonstrate the training of two deep spiking neural network models, using the MNIST and EuroSAT datasets, that exceed the physical size constraints of a single-chip BrainScaleS-2 system. The ability to emulate and train networks larger than the substrate provides a pathway for accurate performance evaluation in planned or scaled systems, ultimately advancing the development and understanding of large-scale models and neuromorphic computing architectures.

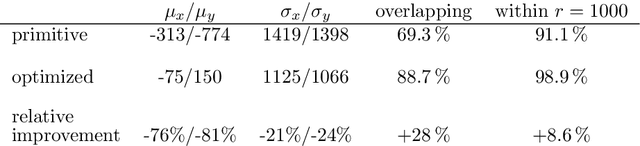

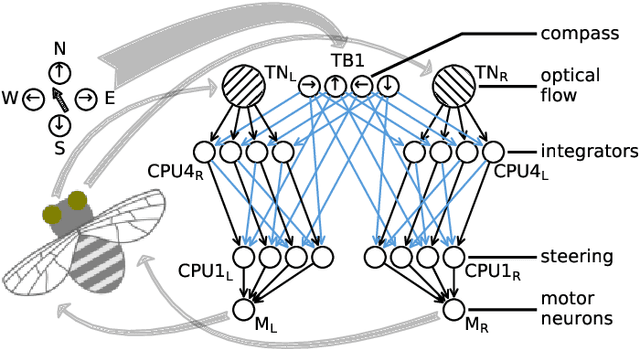

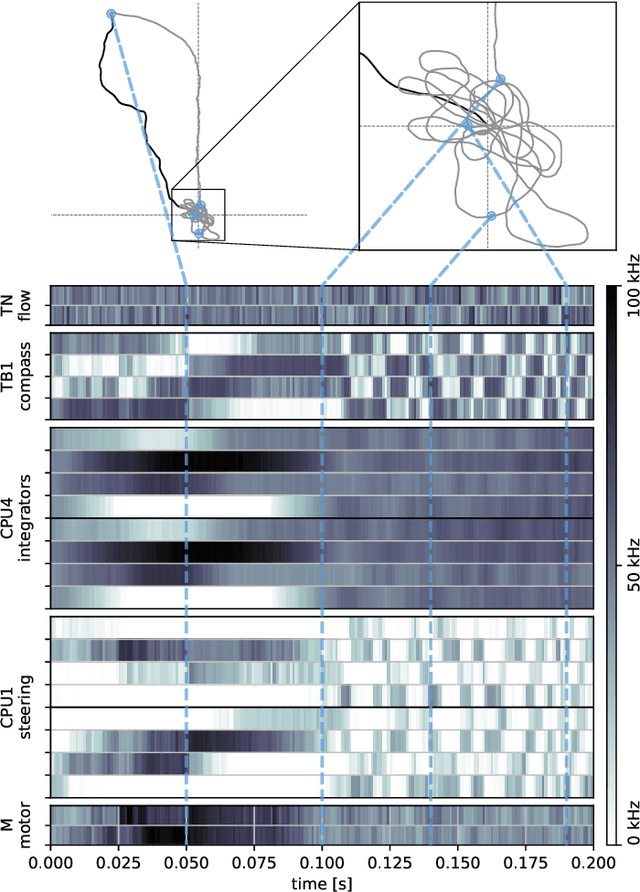

Emulating insect brains for neuromorphic navigation

Dec 31, 2023

Abstract: Bees display the remarkable ability to return home in a straight line after meandering excursions into their environment. Neurobiological imaging studies have revealed that this capability emerges from a path integration mechanism implemented within the insect's brain. In the present work, we emulate this neural network on the neuromorphic mixed-signal processor BrainScaleS-2 to guide bees, virtually embodied on a digital co-processor, back to their home location after randomly exploring their environment. To realize the underlying neural integrators, we introduce single-neuron spike-based short-term memory cells with axo-axonic synapses. All entities, including environment, sensory organs, brain, actuators, and the virtual body, run autonomously on a single BrainScaleS-2 microchip. The functioning network is fine-tuned for better precision and reliability through an evolution strategy. As BrainScaleS-2 emulates neural processes 1000 times faster than biology, 4800 consecutive bee journeys distributed over 320 generations occur within only half an hour on a single neuromorphic core.
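The figures in the last sentence of this abstract can be related with a short calculation. The sketch below uses only the numbers quoted there; the variable names are illustrative, and it assumes the half hour is spent entirely on journeys, so the per-journey values are upper bounds if part of that time goes to the evolution strategy or other overhead.

```python
SPEEDUP = 1000            # emulation speed relative to biology (from the abstract)
TOTAL_JOURNEYS = 4800     # consecutive bee journeys (from the abstract)
GENERATIONS = 320         # generations of the evolution strategy (from the abstract)
WALL_CLOCK_S = 30 * 60    # "half an hour" in seconds

# Journeys evaluated per generation.
journeys_per_generation = TOTAL_JOURNEYS // GENERATIONS

# Wall-clock budget per journey, assuming no overhead between journeys.
wall_clock_per_journey_s = WALL_CLOCK_S / TOTAL_JOURNEYS

# Equivalent biological time covered by one journey at the quoted speed-up.
bio_time_per_journey_s = wall_clock_per_journey_s * SPEEDUP

print(journeys_per_generation)    # 15
print(wall_clock_per_journey_s)   # 0.375
print(bio_time_per_journey_s)     # 375.0
```

Under these assumptions each journey takes at most 0.375 s of wall-clock time, which corresponds to at most about six minutes of biological time.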

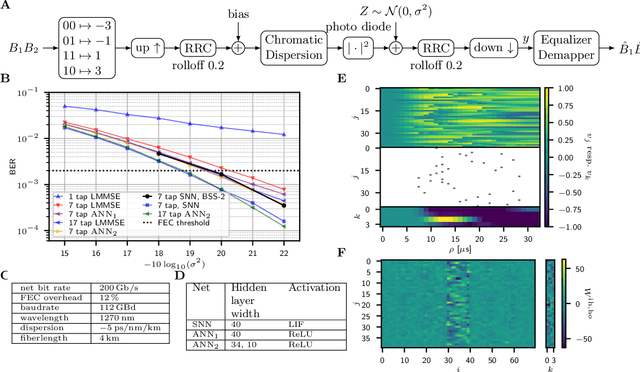

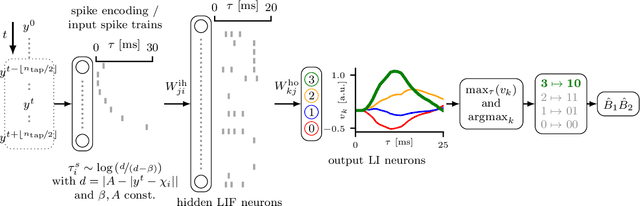

Spiking Neural Network Nonlinear Demapping on Neuromorphic Hardware for IM/DD Optical Communication

Feb 28, 2023

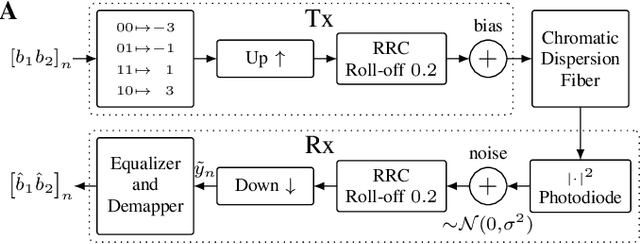

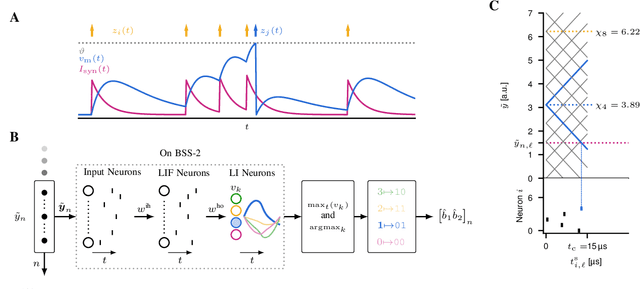

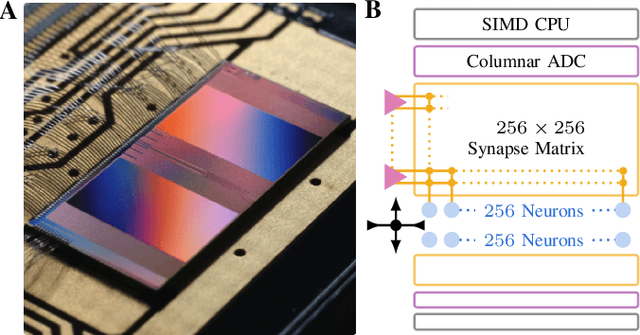

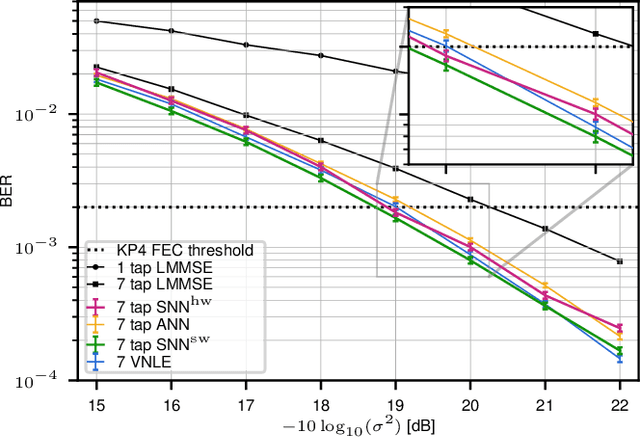

Abstract: Neuromorphic computing implementing spiking neural networks (SNN) is a promising technology for reducing the footprint of optical transceivers, as required by the fast-paced growth of data center traffic. In this work, an SNN nonlinear demapper is designed and evaluated on a simulated intensity-modulation direct-detection link with chromatic dispersion. The SNN demapper is implemented in software and on the analog neuromorphic hardware system BrainScaleS-2 (BSS-2). For comparison, linear equalization (LE), Volterra nonlinear equalization (VNLE), and nonlinear demapping by an artificial neural network (ANN) implemented in software are considered. At a pre-forward error correction bit error rate of 2e-3, the software SNN outperforms LE by 1.5 dB, VNLE by 0.3 dB and the ANN by 0.5 dB. The hardware penalty of the SNN on BSS-2 is only 0.2 dB, i.e., also on hardware, the SNN performs better than all software implementations of the reference approaches. Hence, this work demonstrates that SNN demappers implemented on electrical analog hardware can realize powerful and accurate signal processing fulfilling the strict requirements of optical communications.

hxtorch.snn: Machine-learning-inspired Spiking Neural Network Modeling on BrainScaleS-2

Dec 23, 2022

Abstract: Neuromorphic systems require user-friendly software to support the design and optimization of experiments. In this work, we address this need by presenting our development of a machine learning-based modeling framework for the BrainScaleS-2 neuromorphic system. This work represents an improvement over previous efforts, which either focused on the matrix-multiplication mode of BrainScaleS-2 or lacked full automation. Our framework, called hxtorch.snn, enables the hardware-in-the-loop training of spiking neural networks within PyTorch, including support for automatic differentiation in a fully automated hardware experiment workflow. In addition, hxtorch.snn facilitates seamless transitions between emulating on hardware and simulating in software. We demonstrate the capabilities of hxtorch.snn on a classification task using the Yin-Yang dataset, employing a gradient-based approach with surrogate gradients and densely sampled membrane observations from the BrainScaleS-2 hardware system.
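The surrogate-gradient idea mentioned in this abstract can be sketched in plain Python. This is not the hxtorch.snn API and not necessarily the surrogate used in the paper: the function names, the threshold, and the SuperSpike-style surrogate with steepness `beta` are assumptions chosen for illustration. The forward pass emits a hard threshold spike, whose true derivative is zero almost everywhere; the backward pass substitutes a smooth function of the (sampled) membrane potential.

```python
def spike_forward(v: float, threshold: float = 1.0) -> float:
    """Forward pass: emit a spike (1.0) if the membrane reaches threshold."""
    return 1.0 if v >= threshold else 0.0


def spike_surrogate_grad(v: float, threshold: float = 1.0,
                         beta: float = 10.0) -> float:
    """Backward pass: SuperSpike-style surrogate for d(spike)/dv.

    Peaks at 1.0 when v equals the threshold and decays with distance from it.
    """
    return 1.0 / (1.0 + beta * abs(v - threshold)) ** 2


# Membrane values standing in for sampled hardware observations.
for v in (0.5, 1.0, 1.5):
    print(v, spike_forward(v), spike_surrogate_grad(v))
```

In a hardware-in-the-loop setting, the forward values would come from the recorded membrane traces and spikes, while a function like `spike_surrogate_grad` would only be used when propagating gradients in software.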

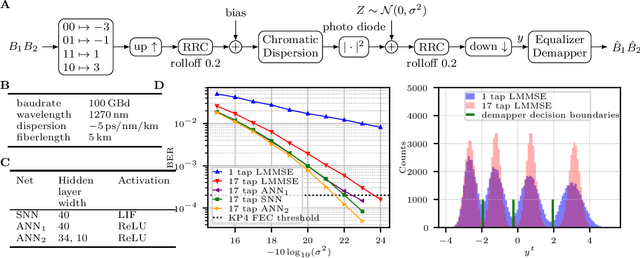

Spiking Neural Network Equalization on Neuromorphic Hardware for IM/DD Optical Communication

Jun 01, 2022

Abstract: A spiking neural network (SNN) non-linear equalizer model is implemented on the mixed-signal neuromorphic hardware system BrainScaleS-2 and evaluated for an IM/DD link. A BER of 2e-3 is achieved with a hardware penalty of less than 1 dB, outperforming numeric linear equalization.

Spiking Neural Network Equalization for IM/DD Optical Communication

May 09, 2022

Abstract: A spiking neural network (SNN) equalizer model suitable for electronic neuromorphic hardware is designed for an IM/DD link. The SNN achieves the same bit-error-rate as an artificial neural network, outperforming linear equalization.

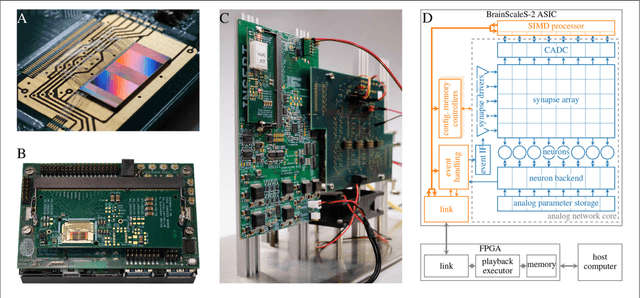

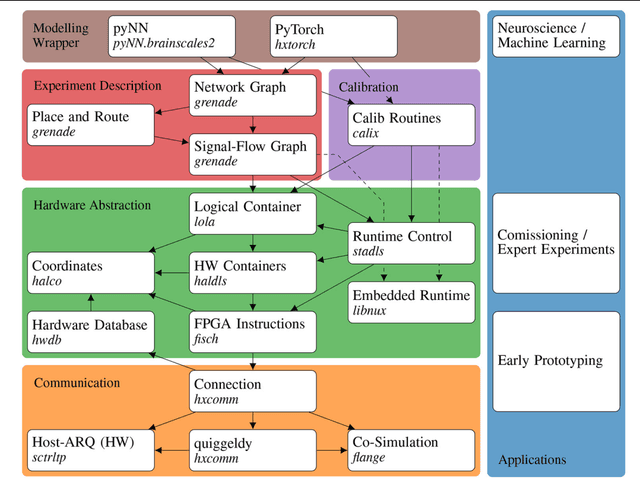

A Scalable Approach to Modeling on Accelerated Neuromorphic Hardware

Mar 21, 2022

Abstract: Neuromorphic systems open up opportunities to enlarge the explorative space for computational research. However, it is often challenging to unite efficiency and usability. This work presents the software aspects of this endeavor for the BrainScaleS-2 system, a hybrid accelerated neuromorphic hardware architecture based on physical modeling. We introduce key aspects of the BrainScaleS-2 Operating System: experiment workflow, API layering, software design, and platform operation. We present use cases to discuss and derive requirements for the software and showcase the implementation. The focus lies on novel system and software features such as multi-compartmental neurons, fast re-configuration for hardware-in-the-loop training, applications for the embedded processors, the non-spiking operation mode, interactive platform access, and sustainable hardware/software co-development. Finally, we discuss further developments in terms of hardware scale-up, system usability and efficiency.

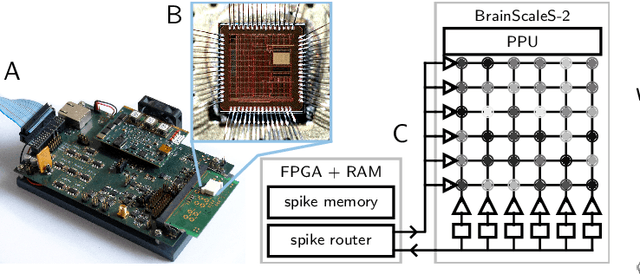

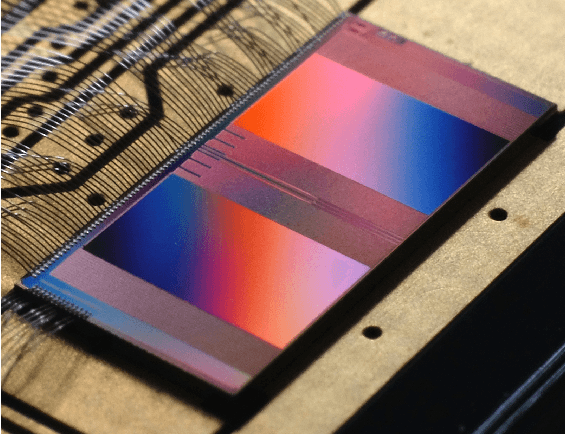

Demonstrating Analog Inference on the BrainScaleS-2 Mobile System

Mar 29, 2021

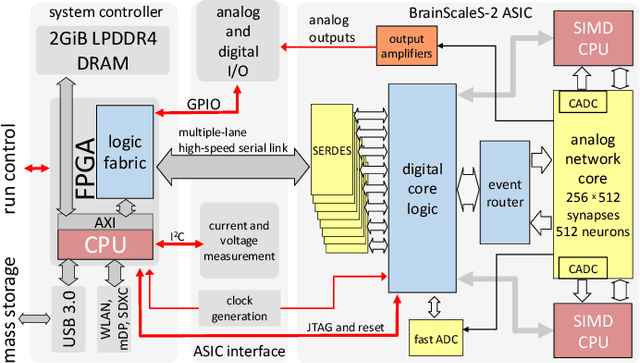

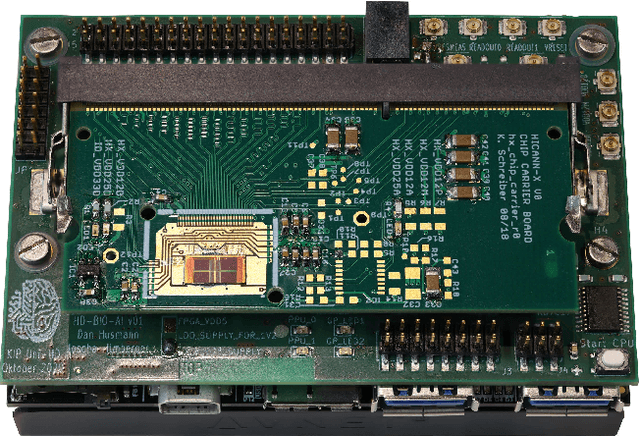

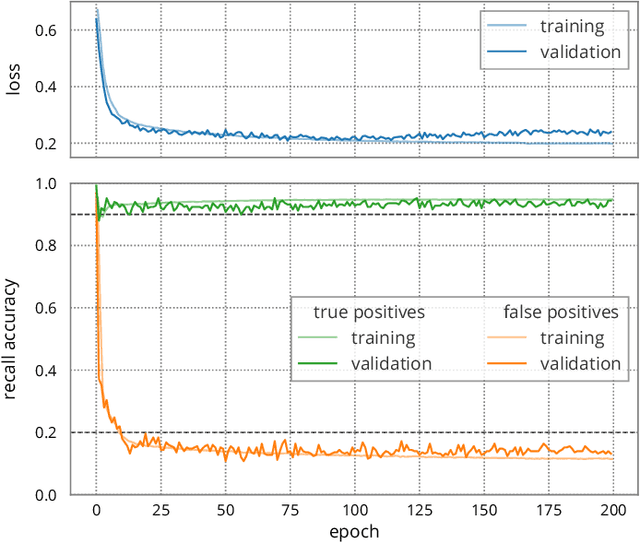

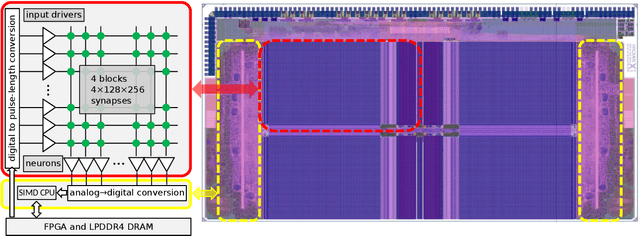

Abstract: We present the BrainScaleS-2 mobile system as a compact analog inference engine based on the BrainScaleS-2 ASIC and demonstrate its capabilities at classifying a medical electrocardiogram dataset. The analog network core of the ASIC is utilized to perform the multiply-accumulate operations of a convolutional deep neural network. We measure a total energy consumption of 192 µJ for the ASIC and achieve a classification time of 276 µs per electrocardiographic patient sample. Patients with atrial fibrillation are correctly identified with a detection rate of 93.7(7)% at 14.0(10)% false positives. The system is directly applicable to edge inference applications due to its small size, power envelope and flexible I/O capabilities. Possible future applications can furthermore combine conventional machine learning layers with online-learning in spiking neural networks on a single BrainScaleS-2 ASIC. The system has successfully participated and proven to operate reliably in the independently judged competition "Pilotinnovationswettbewerb 'Energieeffizientes KI-System'" of the German Federal Ministry of Education and Research (BMBF).
