Dominik Dold

Energy efficiency analysis of Spiking Neural Networks for space applications

May 16, 2025

Abstract:While the exponential growth of the space sector and new operative concepts ask for higher spacecraft autonomy, the development of AI-assisted space systems has so far been hindered by the low availability of power and energy typical of space applications. In this context, Spiking Neural Networks (SNN) are highly attractive because of their theoretically superior energy efficiency, which stems from the inherently sparse activity induced by neurons communicating by means of binary spikes. Nevertheless, the ability of SNN to reach such efficiency on real world tasks is still to be demonstrated in practice. To evaluate the feasibility of utilizing SNN onboard spacecraft, this work presents a numerical analysis and comparison of different SNN techniques applied to scene classification for the EuroSAT dataset. Such tasks are of primary importance for space applications and constitute a valuable test case given the abundance of competitive methods available to establish a benchmark. Particular emphasis is placed on models based on temporal coding, where crucial information is encoded in the timing of neuron spikes. These models promise even greater efficiency of resulting networks, as they maximize the sparsity properties inherent in SNN. A reliable metric capable of comparing different architectures in a hardware-agnostic way is developed to establish a clear theoretical dependence between architecture parameters and the energy consumption that can be expected onboard the spacecraft. The potential of this novel method and its flexibility to describe specific hardware platforms is demonstrated by its application to predicting the energy consumption of a BrainChip Akida AKD1000 neuromorphic processor.

Causal pieces: analysing and improving spiking neural networks piece by piece

Apr 18, 2025

Abstract:We introduce a novel concept for spiking neural networks (SNNs) derived from the idea of "linear pieces" used to analyse the expressiveness and trainability of artificial neural networks (ANNs). We prove that the input domain of SNNs decomposes into distinct causal regions where its output spike times are locally Lipschitz continuous with respect to the input spike times and network parameters. The number of such regions - which we call "causal pieces" - is a measure of the approximation capabilities of SNNs. In particular, we demonstrate in simulation that parameter initialisations which yield a high number of causal pieces on the training set strongly correlate with SNN training success. Moreover, we find that feedforward SNNs with purely positive weights exhibit a surprisingly high number of causal pieces, allowing them to achieve competitive performance levels on benchmark tasks. We believe that causal pieces are not only a powerful and principled tool for improving SNNs, but might also open up new ways of comparing SNNs and ANNs in the future.

Continuous Design and Reprogramming of Totimorphic Structures for Space Applications

Nov 22, 2024

Abstract:Recently, a class of mechanical lattices with reconfigurable, zero-stiffness structures has been proposed, called Totimorphic structures. In this work, we introduce a computational framework that allows continuous reprogramming of a Totimorphic lattice's effective properties, such as mechanical and optical properties, via continuous geometric changes alone. Our approach is differentiable and guarantees valid Totimorphic lattice configurations throughout the optimisation process, thus providing not only specific configurations with desired properties but also trajectories through configuration space connecting them. It enables re-programmable structures where actuators are controlled via automatic differentiation on an objective-dependent cost function, altering the lattice structure at all times to achieve a given objective - which is interchangeable to achieve different functionalities. Our main interest lies in deep space applications where harsh, extreme, and resource-constrained environments demand solutions that offer flexibility, resource efficiency, and autonomy. We illustrate our framework through two proofs of concept: a re-programmable metamaterial as well as a space telescope mirror with adjustable focal length, both made from Totimorphic structures. The introduced framework is easily adjustable to a variety of Totimorphic designs and objectives, providing a light-weight model for endowing physical prototypes of Totimorphic structures with autonomous self-configuration and self-repair capabilities.

Towards Large-scale Network Emulation on Analog Neuromorphic Hardware

Jan 30, 2024

Abstract:We present a novel software feature for the BrainScaleS-2 accelerated neuromorphic platform that facilitates the emulation of partitioned large-scale spiking neural networks. This approach is well suited for many deep spiking neural networks, where the constraint of the largest recurrent subnetwork fitting on the substrate or the limited fan-in of neurons is often not a limitation in practice. We demonstrate the training of two deep spiking neural network models, using the MNIST and EuroSAT datasets, that exceed the physical size constraints of a single-chip BrainScaleS-2 system. The ability to emulate and train networks larger than the substrate provides a pathway for accurate performance evaluation in planned or scaled systems, ultimately advancing the development and understanding of large-scale models and neuromorphic computing architectures.

Differentiable graph-structured models for inverse design of lattice materials

Apr 11, 2023

Abstract:Materials possessing flexible physico-chemical properties that adapt on-demand to the hostile environmental conditions of deep space will become essential in defining the future of space exploration. A promising venue for inspiration towards the design of environment-specific materials is in the intricate micro-architectures and lattice geometry found throughout nature. However, the immense design space covered by such irregular topologies is challenging to probe analytically. For this reason, most synthetic lattice materials have to date been based on periodic architectures instead. Here, we propose a computational approach using a graph representation for both regular and irregular lattice materials. Our method uses differentiable message passing algorithms to calculate mechanical properties, and therefore allows using automatic differentiation to adjust both the geometric structure and attributes of individual lattice elements to design materials with desired properties. The introduced methodology is applicable to any system representable as a heterogeneous graph, including other types of materials.

Detection, Explanation and Filtering of Cyber Attacks Combining Symbolic and Sub-Symbolic Methods

Dec 23, 2022

Abstract:Machine learning (ML) on graph-structured data has recently received deepened interest in the context of intrusion detection in the cybersecurity domain. Due to the increasing amounts of data generated by monitoring tools as well as more and more sophisticated attacks, these ML methods are gaining traction. Knowledge graphs and their corresponding learning techniques such as Graph Neural Networks (GNNs), with their ability to seamlessly integrate data from multiple domains using human-understandable vocabularies, are finding application in the cybersecurity domain. However, similar to other connectionist models, GNNs lack transparency in their decision making. This is especially important as there tends to be a high number of false positive alerts in the cybersecurity domain, such that triage needs to be done by domain experts, requiring a lot of manpower. Therefore, we are addressing Explainable AI (XAI) for GNNs to enhance trust management by exploring combining symbolic and sub-symbolic methods in the area of cybersecurity that incorporate domain knowledge. We experimented with this approach by generating explanations in an industrial demonstrator system. The proposed method is shown to produce intuitive explanations for alerts for a diverse range of scenarios. Not only do the explanations provide deeper insights into the alerts, but they also lead to a reduction of false positive alerts by 66% and by 93% when including the fidelity metric.

Selected Trends in Artificial Intelligence for Space Applications

Dec 17, 2022

Abstract:The development and adoption of artificial intelligence (AI) technologies in space applications is growing quickly as the consensus increases on the potential benefits introduced. As more and more aerospace engineers are becoming aware of new trends in AI, traditional approaches are revisited to consider the applications of emerging AI technologies. Already at the time of writing, the scope of AI-related activities across academia, the aerospace industry and space agencies is so wide that an in-depth review would not fit in these pages. In this chapter we focus instead on two main emerging trends we believe capture the most relevant and exciting activities in the field: differentiable intelligence and on-board machine learning. Differentiable intelligence, in a nutshell, refers to works making extensive use of automatic differentiation frameworks to learn the parameters of machine learning or related models. Onboard machine learning considers the problem of moving inference, as well as learning, onboard. Within these fields, we discuss a few selected projects originating from the European Space Agency's (ESA) Advanced Concepts Team (ACT), giving priority to advanced topics going beyond the transposition of established AI techniques and practices to the space domain.

Neuromorphic Computing and Sensing in Space

Dec 17, 2022

Abstract:The term "neuromorphic" refers to systems that closely resemble the architecture and/or the dynamics of biological neural networks. Typical examples are novel computer chips designed to mimic the architecture of a biological brain, or sensors that get inspiration from, e.g., the visual or olfactory systems in insects and mammals to acquire information about the environment. This approach is not without ambition as it promises to enable engineered devices able to reproduce the level of performance observed in biological organisms -- the main immediate advantage being the efficient use of scarce resources, which translates into low power requirements. The emphasis on low power and energy efficiency of neuromorphic devices is a perfect match for space applications. Spacecraft -- especially miniaturized ones -- have strict energy constraints as they need to operate in an environment which is scarce in resources and extremely hostile. In this work we present an overview of early attempts made to study a neuromorphic approach in a space context at the European Space Agency's (ESA) Advanced Concepts Team (ACT).

Neuro-symbolic computing with spiking neural networks

Aug 04, 2022

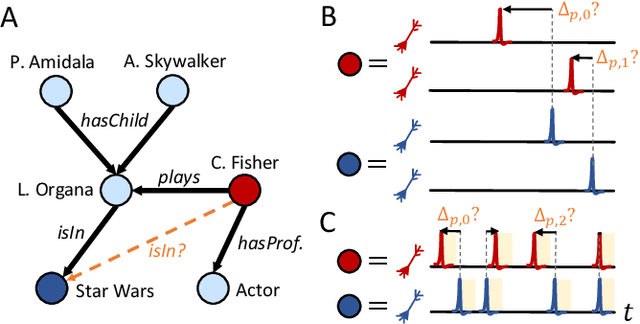

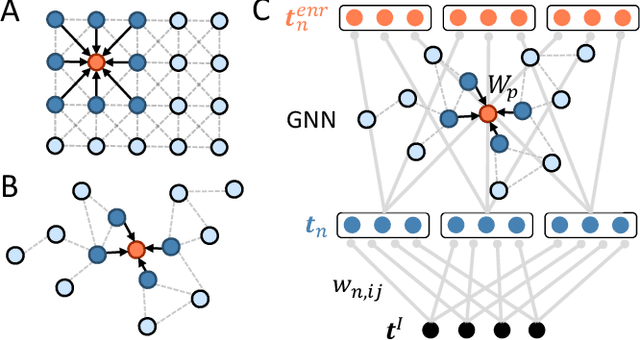

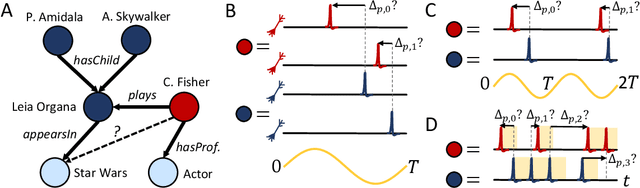

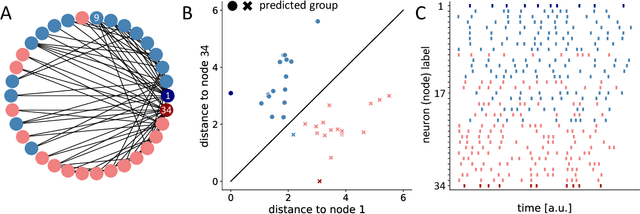

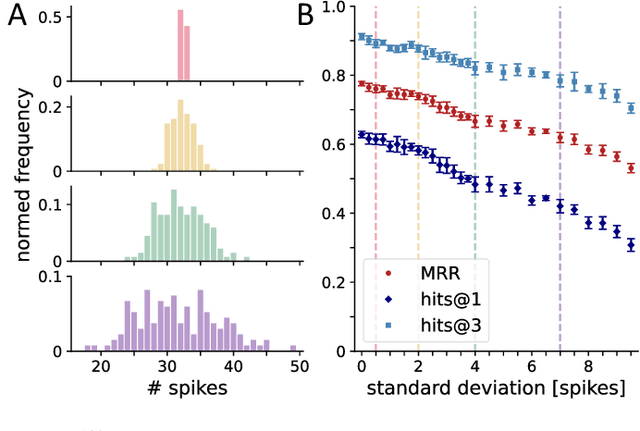

Abstract:Knowledge graphs are an expressive and widely used data structure due to their ability to integrate data from different domains in a sensible and machine-readable way. Thus, they can be used to model a variety of systems such as molecules and social networks. However, it still remains an open question how symbolic reasoning could be realized in spiking systems and, therefore, how spiking neural networks could be applied to such graph data. Here, we extend previous work on spike-based graph algorithms by demonstrating how symbolic and multi-relational information can be encoded using spiking neurons, allowing reasoning over symbolic structures like knowledge graphs with spiking neural networks. The introduced framework is enabled by combining the graph embedding paradigm and the recent progress in training spiking neural networks using error backpropagation. The presented methods are applicable to a variety of spiking neuron models and can be trained end-to-end in combination with other differentiable network architectures, which we demonstrate by implementing a spiking relational graph neural network.

Relational representation learning with spike trains

May 18, 2022

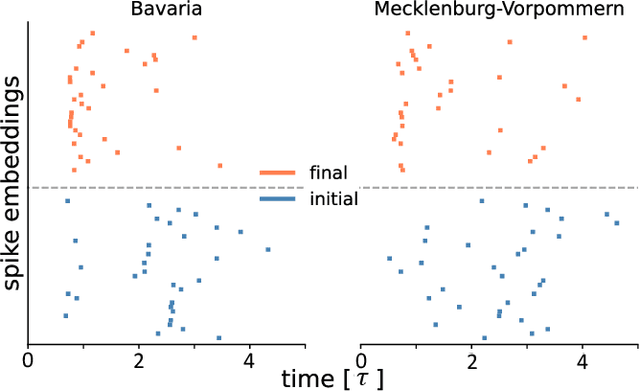

Abstract:Relational representation learning has lately received an increase in interest due to its flexibility in modeling a variety of systems like interacting particles, materials and industrial projects for, e.g., the design of spacecraft. A prominent method for dealing with relational data are knowledge graph embedding algorithms, where entities and relations of a knowledge graph are mapped to a low-dimensional vector space while preserving its semantic structure. Recently, a graph embedding method has been proposed that maps graph elements to the temporal domain of spiking neural networks. However, it relies on encoding graph elements through populations of neurons that only spike once. Here, we present a model that allows us to learn spike train-based embeddings of knowledge graphs, requiring only one neuron per graph element by fully utilizing the temporal domain of spike patterns. This coding scheme can be implemented with arbitrary spiking neuron models as long as gradients with respect to spike times can be calculated, which we demonstrate for the integrate-and-fire neuron model. In general, the presented results show how relational knowledge can be integrated into spike-based systems, opening up the possibility of merging event-based computing and relational data to build powerful and energy efficient artificial intelligence applications and reasoning systems.
