Stephan Grimm

Detection, Explanation and Filtering of Cyber Attacks Combining Symbolic and Sub-Symbolic Methods

Dec 23, 2022

Abstract: Machine learning (ML) on graph-structured data has recently received increased interest in the context of intrusion detection in the cybersecurity domain. Due to the increasing amounts of data generated by monitoring tools, as well as increasingly sophisticated attacks, these ML methods are gaining traction. Knowledge graphs and their corresponding learning techniques, such as Graph Neural Networks (GNNs), with their ability to seamlessly integrate data from multiple domains using human-understandable vocabularies, are finding application in the cybersecurity domain. However, similar to other connectionist models, GNNs lack transparency in their decision making. This is especially important because there tends to be a high number of false positive alerts in the cybersecurity domain, so that triage needs to be done by domain experts, which requires a lot of manpower. Therefore, we address Explainable AI (XAI) for GNNs to enhance trust management by exploring the combination of symbolic and sub-symbolic methods that incorporate domain knowledge in the area of cybersecurity. We experimented with this approach by generating explanations in an industrial demonstrator system. The proposed method is shown to produce intuitive explanations for alerts across a diverse range of scenarios. Not only do the explanations provide deeper insights into the alerts, but they also lead to a reduction of false positive alerts by 66%, and by 93% when including the fidelity metric.

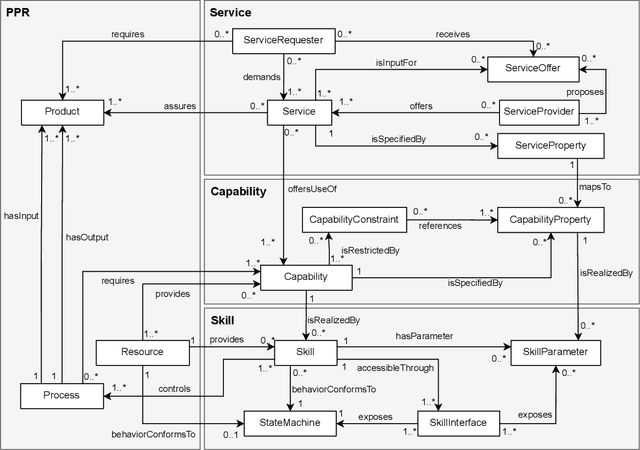

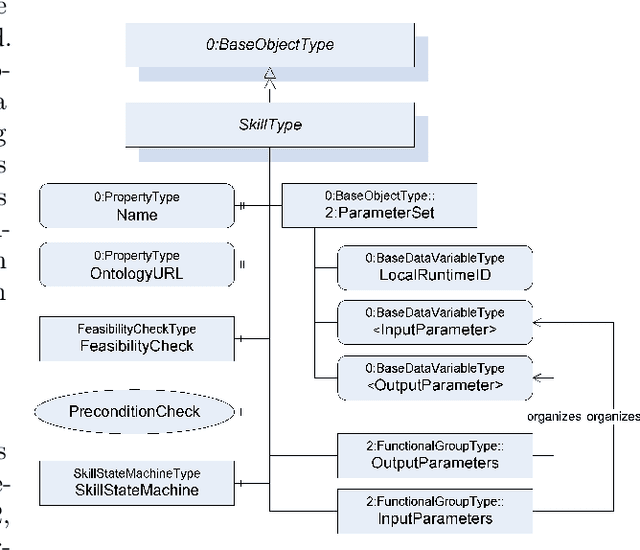

A Reference Model for Common Understanding of Capabilities and Skills in Manufacturing

Sep 15, 2022

Abstract: In manufacturing, many use cases of Industry 4.0 require vendor-neutral and machine-readable information models to describe, implement and execute resource functions. Such models have been researched under the terms capabilities and skills. Standardization of such models is required but not yet available. This paper presents a reference model developed jointly by members of various organizations in a working group of the Plattform Industrie 4.0. The model covers definitions of the most important aspects of capabilities and skills and can be seen as a basis for further standardization efforts.

Combining Sub-Symbolic and Symbolic Methods for Explainability

Dec 03, 2021

Abstract: Similarly to other connectionist models, Graph Neural Networks (GNNs) lack transparency in their decision making. A number of sub-symbolic approaches have been developed to provide insights into the GNN decision-making process. These are important first steps on the way to explainability, but the generated explanations are often hard to understand for users who are not AI experts. To overcome this problem, we introduce a conceptual approach combining sub-symbolic and symbolic methods for human-centric explanations that incorporate domain knowledge and causality. We furthermore introduce the notion of fidelity as a metric for evaluating how close the explanation is to the GNN's internal decision-making process. The evaluation with a chemical dataset and ontology shows the explanatory value and reliability of our method.

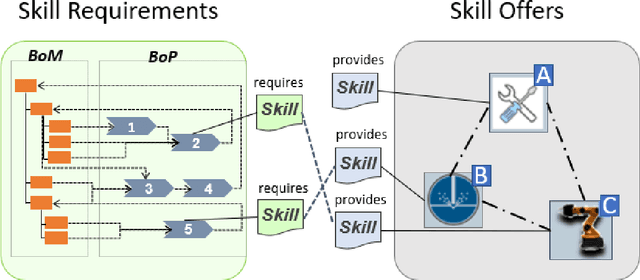

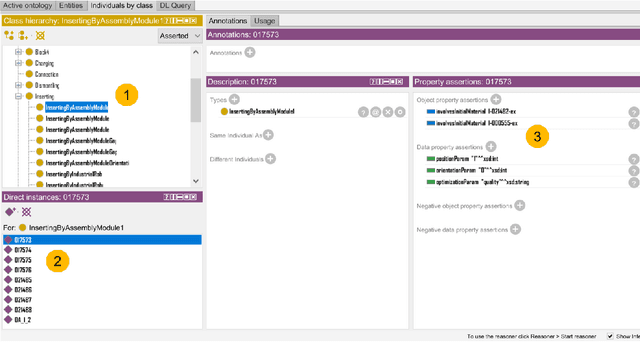

Ontology-Based Skill Description Learning for Flexible Production Systems

Nov 25, 2021

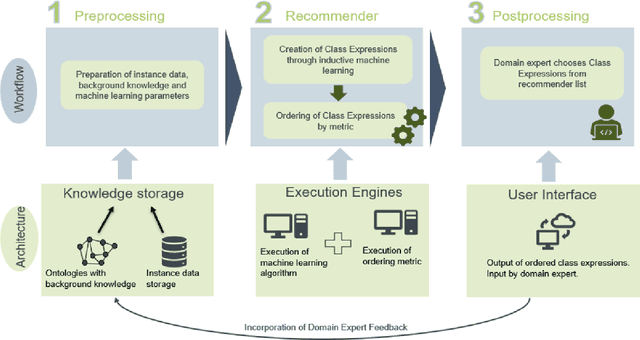

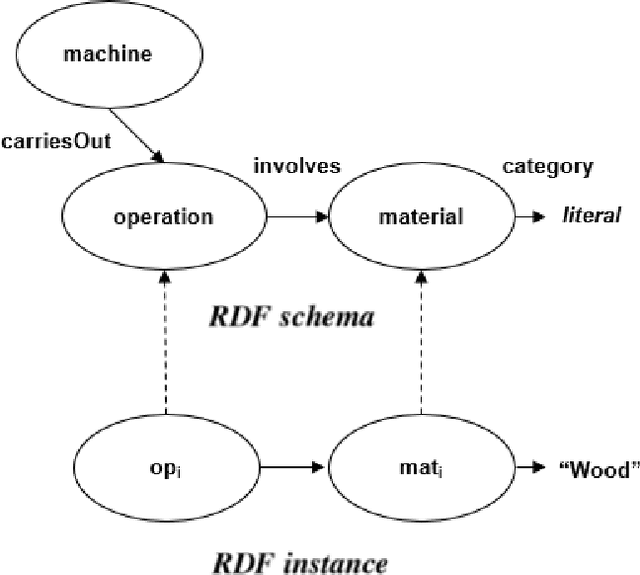

Abstract: The increasing importance of resource-efficient production means that manufacturing companies have to create a more dynamic production environment, with flexible manufacturing machines and processes. To fully utilize this potential of dynamic manufacturing through automatic production planning, formal skill descriptions of the machines are essential. However, generating those skill descriptions manually is labor-intensive and requires extensive domain knowledge. This contribution introduces an ontology-based, semi-automatic skill description system that uses production logs and industrial ontologies through inductive logic programming, and evaluates the benefits and drawbacks of the proposed solution.
