Martin Ringsquandl

Wiki-TabNER: Advancing Table Interpretation Through Named Entity Recognition

Mar 07, 2024

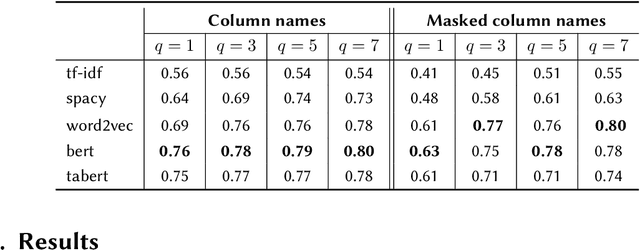

Abstract: Web tables contain a large amount of valuable knowledge and have inspired tabular language models aimed at tackling table interpretation (TI) tasks. In this paper, we analyse a widely used benchmark dataset for evaluation of TI tasks, particularly focusing on the entity linking task. Our analysis reveals that this dataset is overly simplified, potentially reducing its effectiveness for thorough evaluation and failing to accurately represent tables as they appear in the real world. To overcome this drawback, we construct and annotate a new, more challenging dataset. In addition to introducing the new dataset, we also introduce a novel problem aimed at addressing the entity linking task: named entity recognition within cells. Finally, we propose a prompting framework for evaluating the newly developed large language models (LLMs) on this novel TI task. We conduct experiments on prompting LLMs under various settings, where we use both random and similarity-based selection to choose the examples presented to the models. Our ablation study helps us gain insights into the impact of the few-shot examples. Additionally, we perform qualitative analysis to gain insights into the challenges encountered by the models and to understand the limitations of the proposed dataset.

Adversarial Attacks on Tables with Entity Swap

Sep 15, 2023

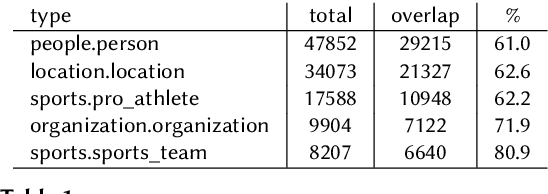

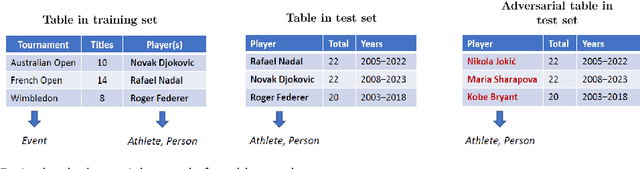

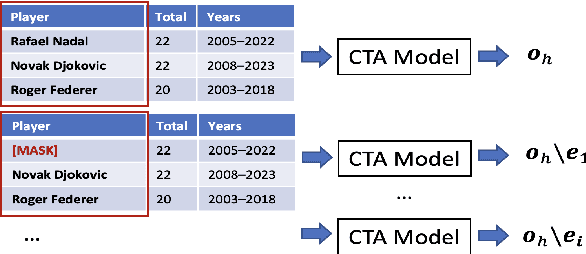

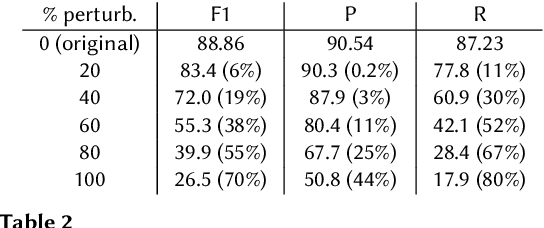

Abstract: The capabilities of large language models (LLMs) have been successfully applied in the context of table representation learning. The recently proposed tabular language models have reported state-of-the-art results across various tasks for table interpretation. However, a closer look into the datasets commonly used for evaluation reveals an entity leakage from the train set into the test set. Motivated by this observation, we explore adversarial attacks that represent a more realistic inference setup. Adversarial attacks on text have been shown to greatly affect the performance of LLMs, but currently, there are no attacks targeting tabular language models. In this paper, we propose an evasive entity-swap attack for the column type annotation (CTA) task. Our CTA attack is the first black-box attack on tables, where we employ a similarity-based sampling strategy to generate adversarial examples. The experimental results show that the proposed attack generates up to a 70% drop in performance.
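As a rough illustration of what an entity swap with similarity-based sampling could look like, the sketch below replaces a fraction of the cells in one column with same-type entities that do not already appear in it, ranked by cosine similarity to the original cell. The abstract does not specify these details, so everything here is an assumption: the function names `cosine` and `entity_swap`, the `swap_fraction` and `most_similar` parameters, the toy embeddings, and the choice to swap the first cells in the column are all hypothetical and not the authors' implementation.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def entity_swap(column, candidates, embeddings, swap_fraction=0.5, most_similar=False):
    """Return a copy of `column` with some cells swapped for unseen candidates.

    Hypothetical sketch: `candidates` are entities assumed to share the column's
    type, and `embeddings` maps every entity name to a vector.
    """
    n_swap = math.ceil(len(column) * swap_fraction)
    swapped = list(column)
    used = set(column)  # never insert an entity that is already in the column
    for i in range(n_swap):
        pool = [c for c in candidates if c not in used]
        if not pool:
            break  # no unseen candidates left
        original = column[i]

        def similarity(c):
            return cosine(embeddings[original], embeddings[c])

        # Assumption: pick either the closest or the farthest candidate.
        choice = max(pool, key=similarity) if most_similar else min(pool, key=similarity)
        swapped[i] = choice
        used.add(choice)
    return swapped


# Toy example with made-up 2-d embeddings.
toy_embeddings = {
    "Berlin": [1.0, 0.0],
    "Paris": [0.9, 0.1],
    "Munich": [0.95, 0.05],
    "Tokyo": [0.0, 1.0],
}
adversarial_column = entity_swap(["Berlin", "Paris"], ["Munich", "Tokyo"], toy_embeddings)
```

The attack is black-box in the sense that the sketch only edits the input column and never queries model gradients; measuring the resulting drop would require running a CTA model on the original and the swapped table.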

Active Learning with Tabular Language Models

Nov 08, 2022

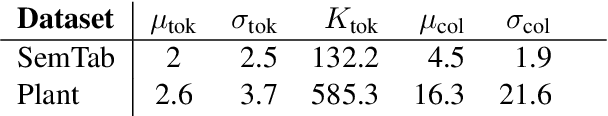

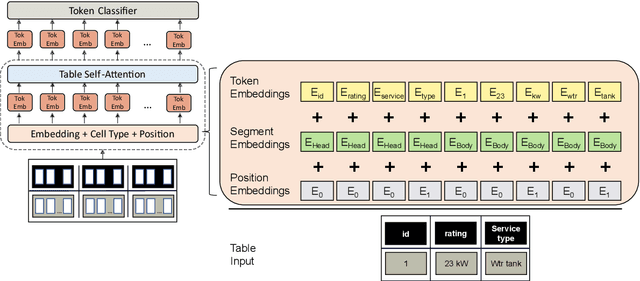

Abstract: Despite recent advancements in tabular language model research, real-world applications are still challenging. In industry, there is an abundance of tables found in spreadsheets, but acquisition of substantial amounts of labels is expensive, since only experts can annotate the often highly technical and domain-specific tables. Active learning could potentially reduce labeling costs; however, so far there are no works related to active learning in conjunction with tabular language models. In this paper, we investigate different acquisition functions in a real-world industrial tabular language model use case for sub-cell named entity recognition. Our results show that cell-level acquisition functions with built-in diversity can significantly reduce the labeling effort, while enforced table diversity is detrimental. We further see open fundamental questions concerning computational efficiency and the perspective of human annotators.
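To make the idea of a cell-level acquisition function concrete, here is a minimal sketch of generic uncertainty sampling: each unlabeled cell is scored by the entropy of its predicted label distribution, and the highest-scoring cells are sent for annotation. This is a standard active learning baseline, not necessarily one of the functions evaluated in the paper, and it does not model the built-in diversity the abstract mentions. The names `entropy` and `select_cells`, the `(table_id, row, col)` key layout, and the probabilities are assumptions made for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_cells(cell_probs, budget):
    """Return the `budget` cells whose predictions are most uncertain.

    `cell_probs` maps a hypothetical (table_id, row, col) key to the model's
    predicted class probabilities for that cell.
    """
    ranked = sorted(cell_probs, key=lambda key: entropy(cell_probs[key]), reverse=True)
    return ranked[:budget]


# Toy predictions for three cells from two tables.
toy_predictions = {
    ("t1", 0, 0): [0.5, 0.5],   # maximally uncertain
    ("t1", 0, 1): [0.9, 0.1],   # fairly confident
    ("t2", 1, 0): [1.0, 0.0],   # fully confident
}
to_annotate = select_cells(toy_predictions, budget=2)
```

Ranking individual cells rather than whole tables lets the annotation budget concentrate on the cells the model is least sure about, regardless of which table they come from.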

Named Entity Recognition in Industrial Tables using Tabular Language Models

Sep 29, 2022

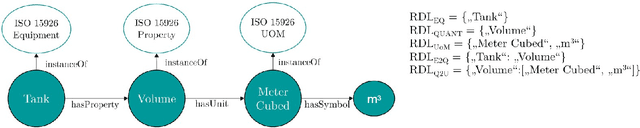

Abstract: Specialized transformer-based models for encoding tabular data have gained interest in academia. Although tabular data is omnipresent in industry, applications of table transformers are still missing. In this paper, we study how these models can be applied to an industrial Named Entity Recognition (NER) problem where the entities are mentioned in tabular-structured spreadsheets. The highly technical nature of spreadsheets as well as the lack of labeled data present major challenges for fine-tuning transformer-based models. Therefore, we develop a dedicated table data augmentation strategy based on available domain-specific knowledge graphs. We show that this boosts performance in our low-resource scenario considerably. Further, we investigate the benefits of tabular structure as inductive bias compared to tables as linearized sequences. Our experiments confirm that a table transformer outperforms other baselines and that its tabular inductive bias is vital for convergence of transformer-based models.

Combining Sub-Symbolic and Symbolic Methods for Explainability

Dec 03, 2021

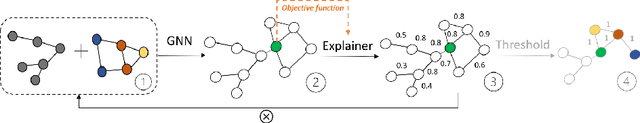

Abstract: Like other connectionist models, Graph Neural Networks (GNNs) lack transparency in their decision-making. A number of sub-symbolic approaches have been developed to provide insights into the GNN decision-making process. These are important first steps on the way to explainability, but the generated explanations are often hard to understand for users who are not AI experts. To overcome this problem, we introduce a conceptual approach combining sub-symbolic and symbolic methods for human-centric explanations that incorporate domain knowledge and causality. We furthermore introduce the notion of fidelity as a metric for evaluating how close the explanation is to the GNN's internal decision-making process. The evaluation with a chemical dataset and ontology shows the explanatory value and reliability of our method.

Demystifying Graph Neural Network Explanations

Nov 25, 2021

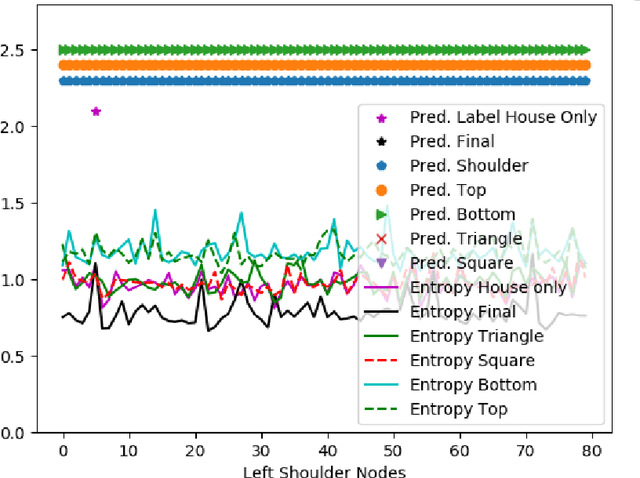

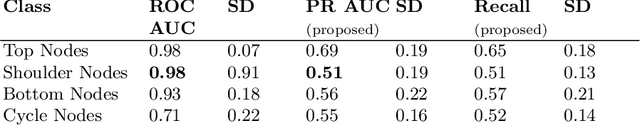

Abstract: Graph neural networks (GNNs) are quickly becoming the standard approach for learning on graph-structured data across several domains, but they lack transparency in their decision-making. Several perturbation-based approaches have been developed to provide insights into the decision-making process of GNNs. As this is an early research area, the methods and data used to evaluate the generated explanations lack maturity. We explore these existing approaches and identify common pitfalls in three main areas: (1) synthetic data generation process, (2) evaluation metrics, and (3) the final presentation of the explanation. For this purpose, we perform an empirical study to explore these pitfalls along with their unintended consequences and propose remedies to mitigate their effects.

Generating Table Vector Representations

Oct 28, 2021

Abstract: High-quality Web tables are rich sources of information that can be used to populate Knowledge Graphs (KG). The focus of this paper is an evaluation of methods for table-to-class annotation, which is a sub-task of Table Interpretation (TI). We provide a formal definition for table classification as a machine learning task. We propose an experimental setup and evaluate 5 fundamentally different approaches to find the best method for generating vector table representations. Our findings indicate that although transfer learning methods achieve a high F1 score on the table classification task, dedicated table encoding models are a promising direction as they appear to capture richer semantics.

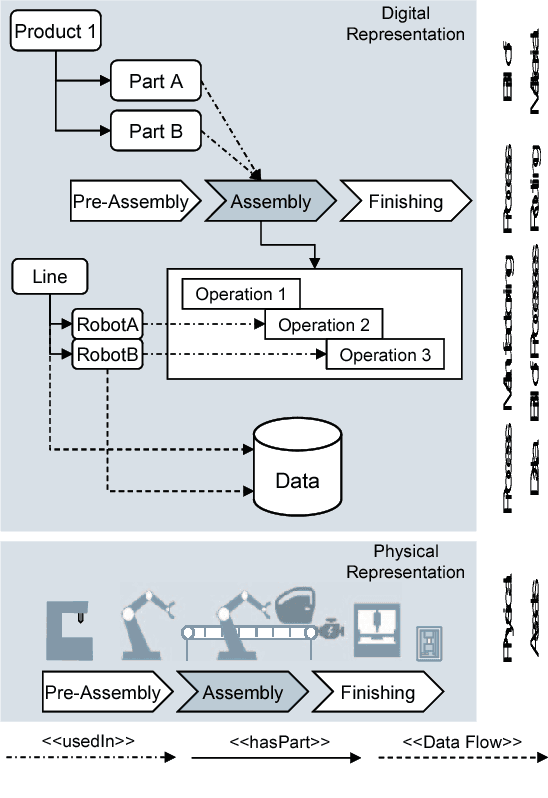

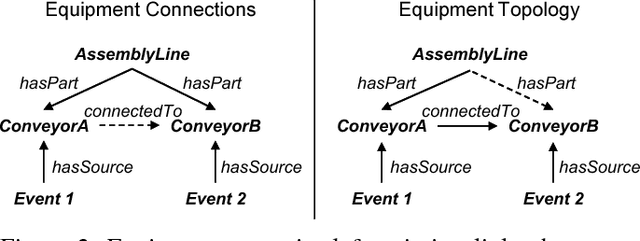

On Event-Driven Knowledge Graph Completion in Digital Factories

Sep 08, 2021

Abstract: Smart factories are equipped with machines that can sense their manufacturing environments, interact with each other, and control production processes. Smooth operation of such factories requires that the machines and the engineering personnel who conduct their monitoring and diagnostics share detailed common industrial knowledge about the factory, e.g., in the form of knowledge graphs. Creation and maintenance of such knowledge is expensive and requires automation. In this work, we show how machine learning that is specifically tailored towards industrial applications can help in knowledge graph completion. In particular, we show how knowledge completion can benefit from event logs that are common in smart factories. We evaluate this on the knowledge graph from a real-world-inspired smart factory with encouraging results.

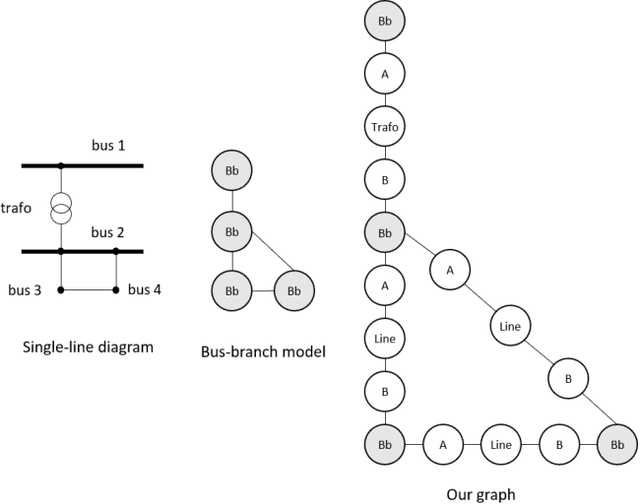

Power to the Relational Inductive Bias: Graph Neural Networks in Electrical Power Grids

Sep 08, 2021

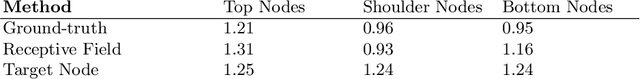

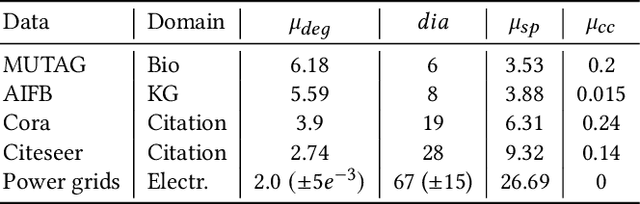

Abstract: The application of graph neural networks (GNNs) to the domain of electrical power grids has high potential impact on smart grid monitoring. Even though there is a natural correspondence of power flow to message-passing in GNNs, their performance on power grids is not well understood. We argue that there is a gap between GNN research driven by benchmarks which contain graphs that differ from power grids in several important aspects. Additionally, inductive learning of GNNs across multiple power grid topologies has not been explored with real-world data. We address this gap by means of (i) defining power grid graph datasets in inductive settings, (ii) an exploratory analysis of graph properties, and (iii) an empirical study of the concrete learning task of state estimation on real-world power grids. Our results show that GNNs are more robust to noise with up to 400% lower error compared to baselines. Furthermore, due to the unique properties of electrical grids, we do not observe the well-known over-smoothing phenomenon of GNNs and find the best performing models to be exceptionally deep with up to 13 layers. This is in stark contrast to existing benchmark datasets where the consensus is that 2 to 3 layer GNNs perform best. Our results demonstrate that a key challenge in this domain is to effectively handle long-range dependence.

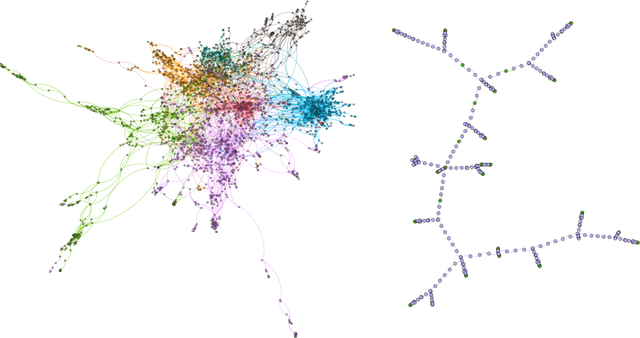

Neural Multi-Hop Reasoning With Logical Rules on Biomedical Knowledge Graphs

Mar 18, 2021

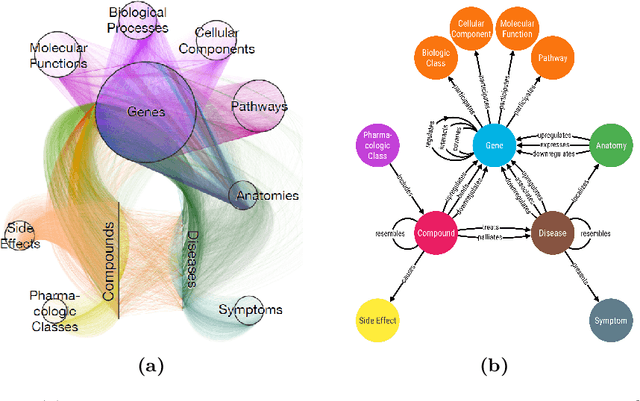

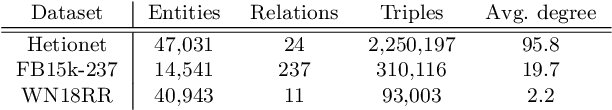

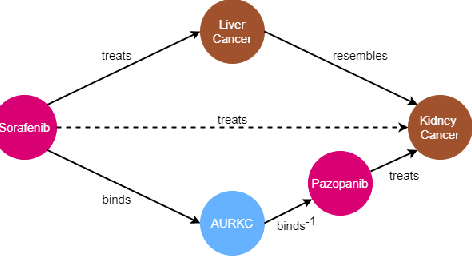

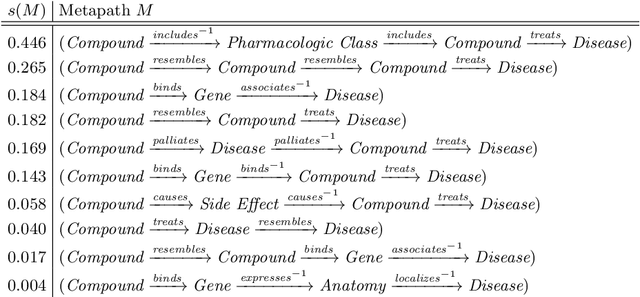

Abstract: Biomedical knowledge graphs permit an integrative computational approach to reasoning about biological systems. The nature of biological data leads to a graph structure that differs from those typically encountered in benchmarking datasets. To understand the implications this may have on the performance of reasoning algorithms, we conduct an empirical study based on the real-world task of drug repurposing. We formulate this task as a link prediction problem where both compounds and diseases correspond to entities in a knowledge graph. To overcome apparent weaknesses of existing algorithms, we propose a new method, PoLo, that combines policy-guided walks based on reinforcement learning with logical rules. These rules are integrated into the algorithm by using a novel reward function. We apply our method to Hetionet, which integrates biomedical information from 29 prominent bioinformatics databases. Our experiments show that our approach outperforms several state-of-the-art methods for link prediction while providing interpretability.
