Marcel Hildebrandt

A Knowledge Graph Perspective on Supply Chain Resilience

May 15, 2023

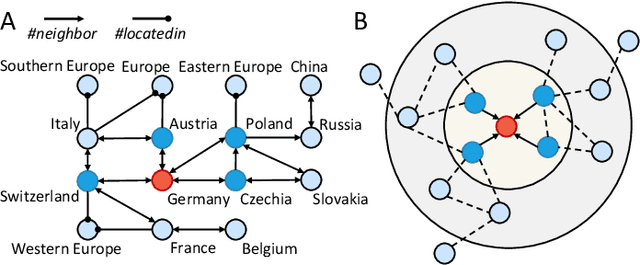

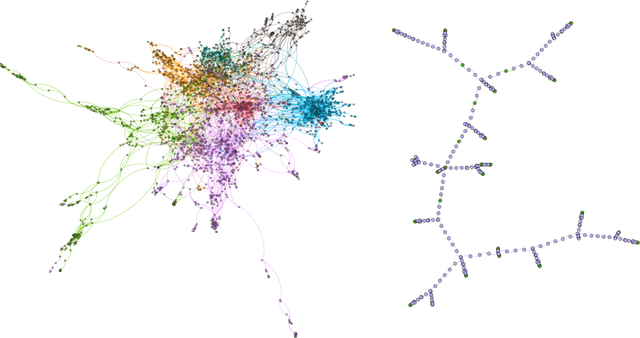

Abstract: Global crises and regulatory developments require increased supply chain transparency and resilience. Companies need not only to react to a dynamic environment but also to act proactively and implement measures that prevent production delays and reduce risks in their supply chains. However, information about supply chains, especially at the deeper levels, is often opaque and incomplete, making it difficult to obtain precise predictions about prospective risks. By connecting different data sources, we model the supply network as a knowledge graph and achieve transparency up to tier-3 suppliers. To predict missing information in the graph, we apply state-of-the-art knowledge graph completion methods and attain a mean reciprocal rank of 0.4377 with the best model. Further, we apply graph analysis algorithms to identify critical entities in the supply network, supporting supply chain managers in automated risk identification.
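For readers unfamiliar with the metric quoted in this abstract, the following is a minimal sketch of how mean reciprocal rank is commonly computed for link prediction. The function name and the example ranks are hypothetical and are not taken from the paper.

```python
def mean_reciprocal_rank(ranks):
    """Average of 1/rank over all test queries.

    Each rank is the 1-based position of the correct entity in the
    model's sorted candidate list for one test query.
    """
    if not ranks:
        raise ValueError("ranks must be non-empty")
    return sum(1.0 / r for r in ranks) / len(ranks)


# Hypothetical ranks for three test triples (illustrative only).
example_ranks = [1, 2, 4]
mrr = mean_reciprocal_rank(example_ranks)
print(f"MRR = {mrr:.4f}")
```

A value of 1.0 would mean the correct entity is always ranked first; lower values indicate that correct answers tend to appear further down the candidate list.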

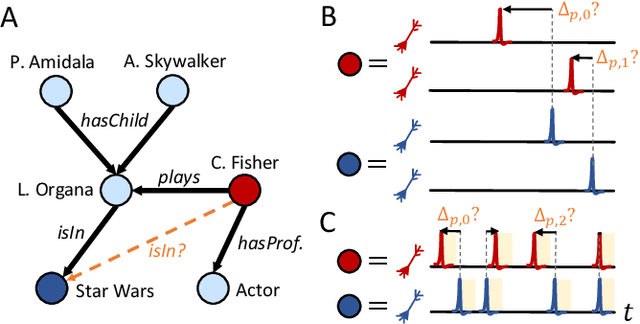

Neuro-symbolic computing with spiking neural networks

Aug 04, 2022

Abstract: Knowledge graphs are an expressive and widely used data structure due to their ability to integrate data from different domains in a sensible and machine-readable way. Thus, they can be used to model a variety of systems such as molecules and social networks. However, it remains an open question how symbolic reasoning could be realized in spiking systems and, therefore, how spiking neural networks could be applied to such graph data. Here, we extend previous work on spike-based graph algorithms by demonstrating how symbolic and multi-relational information can be encoded using spiking neurons, allowing reasoning over symbolic structures like knowledge graphs with spiking neural networks. The introduced framework is enabled by combining the graph embedding paradigm and the recent progress in training spiking neural networks using error backpropagation. The presented methods are applicable to a variety of spiking neuron models and can be trained end-to-end in combination with other differentiable network architectures, which we demonstrate by implementing a spiking relational graph neural network.

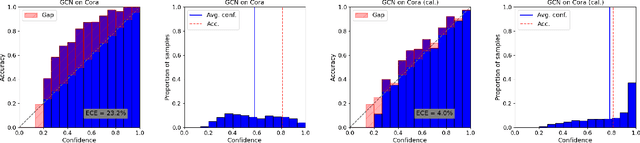

On Calibration of Graph Neural Networks for Node Classification

Jun 03, 2022

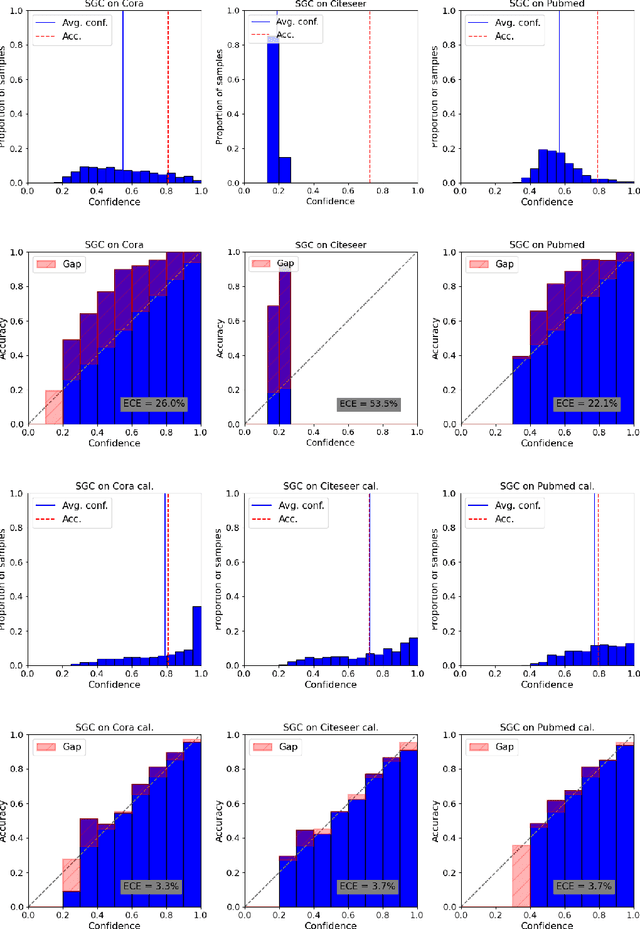

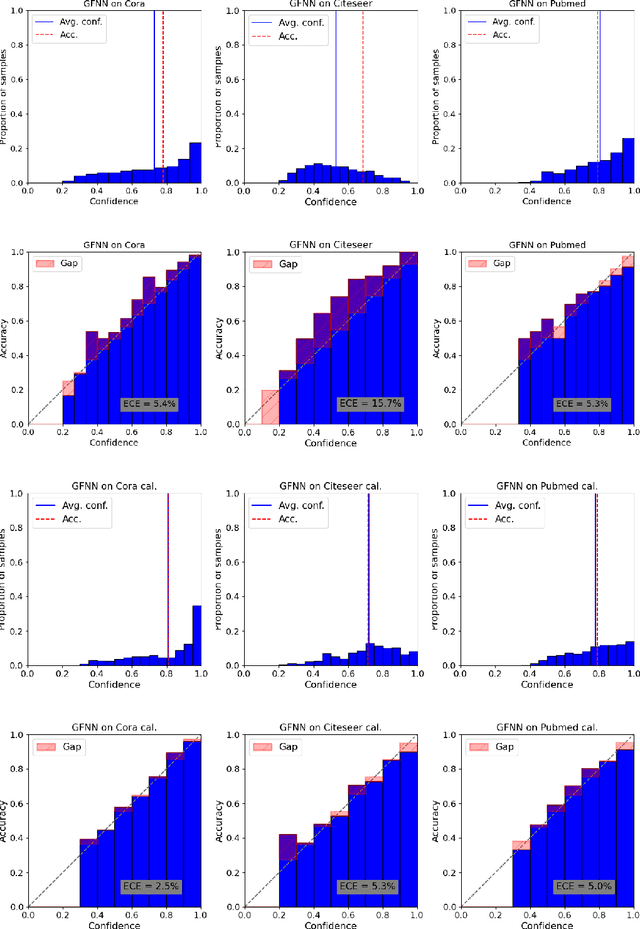

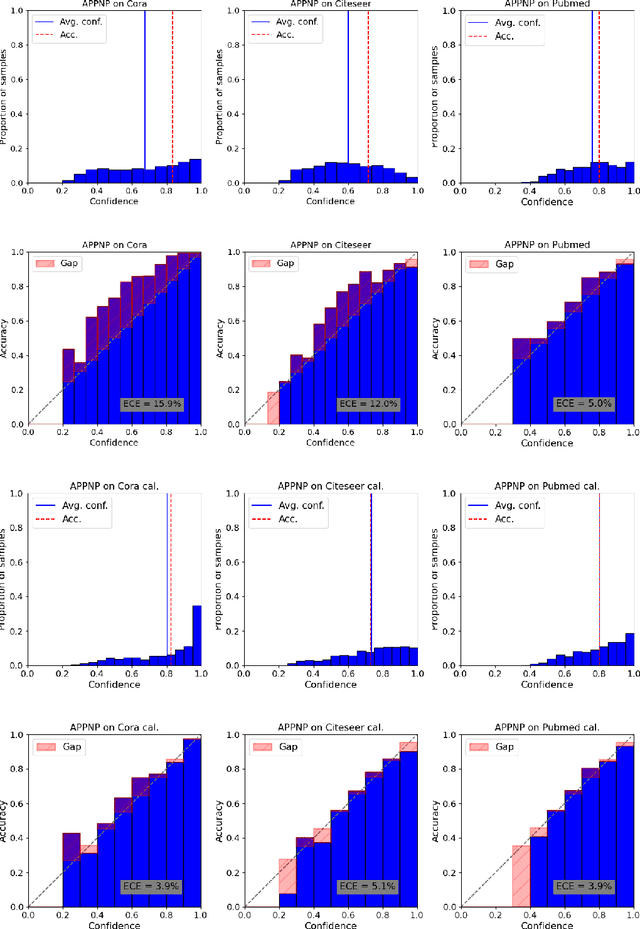

Abstract: Graphs can model real-world, complex systems by representing entities and their interactions in terms of nodes and edges. To better exploit the graph structure, graph neural networks have been developed, which learn entity and edge embeddings for tasks such as node classification and link prediction. These models achieve good performance with respect to accuracy, but the confidence scores associated with the predictions might not be calibrated. That is, the scores might not reflect the ground-truth probabilities of the predicted events, which would be especially important for safety-critical applications. Even though graph neural networks are used for a wide range of tasks, their calibration has not yet been sufficiently explored. We investigate the calibration of graph neural networks for node classification, study the effect of existing post-processing calibration methods, and analyze the influence of model capacity, graph density, and a new loss function on calibration. Further, we propose a topology-aware calibration method that takes the neighboring nodes into account and yields improved calibration compared to baseline methods.
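To make the notion of calibration in this abstract concrete, here is a minimal sketch of the expected calibration error (ECE), a widely used way to quantify the gap between confidence and accuracy. The abstract does not say which metric the paper reports, so treating ECE as the measure is an assumption; the function name, bin count, and example values are hypothetical.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between accuracy and mean confidence per bin.

    confidences: predicted probability of the chosen class, in [0, 1].
    correct:     booleans, True where the prediction matched the label.
    """
    if not confidences or len(confidences) != len(correct):
        raise ValueError("inputs must be non-empty and of equal length")

    # Assign every prediction to an equal-width confidence bin.
    bins = [[] for _ in range(n_bins)]
    for conf, hit in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, hit))

    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, h in members if h) / len(members)
        ece += len(members) / total * abs(accuracy - avg_conf)
    return ece


# Hypothetical node predictions: 90% confident, but only 3 of 4 correct.
ece = expected_calibration_error([0.9, 0.9, 0.9, 0.9],
                                 [True, True, True, False])
print(f"ECE = {ece:.3f}")
```

A perfectly calibrated model would score 0; the overconfident toy predictions above leave a gap between the stated confidence and the observed accuracy.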

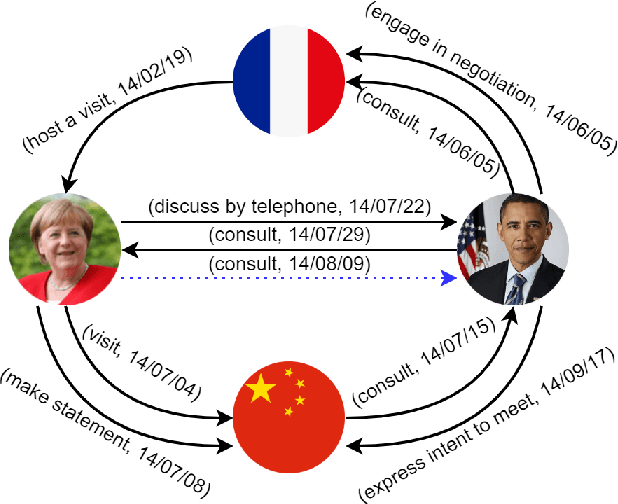

TLogic: Temporal Logical Rules for Explainable Link Forecasting on Temporal Knowledge Graphs

Dec 15, 2021

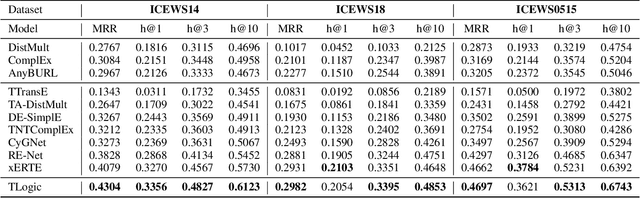

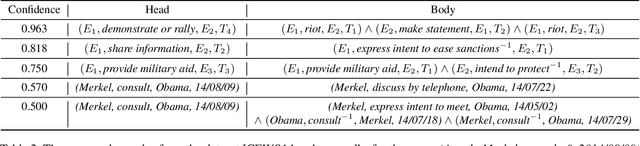

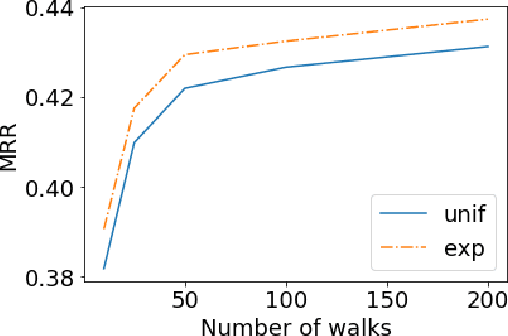

Abstract: Conventional static knowledge graphs model entities in relational data as nodes, connected by edges of specific relation types. However, information and knowledge evolve continuously, and temporal dynamics emerge, which are expected to influence future situations. In temporal knowledge graphs, time information is integrated into the graph by equipping each edge with a timestamp or a time range. Embedding-based methods have been introduced for link prediction on temporal knowledge graphs, but they mostly lack explainability and comprehensible reasoning chains. In particular, they are usually not designed to deal with link forecasting, that is, event prediction involving future timestamps. We address the task of link forecasting on temporal knowledge graphs and introduce TLogic, an explainable framework that is based on temporal logical rules extracted via temporal random walks. We compare TLogic with state-of-the-art baselines on three benchmark datasets and show better overall performance while our method also provides explanations that preserve time consistency. Furthermore, in contrast to most state-of-the-art embedding-based methods, TLogic works well in the inductive setting where already learned rules are transferred to related datasets with a common vocabulary.
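The idea of a temporal random walk mentioned in this abstract can be illustrated with a simplified sketch. It is not TLogic's actual sampling procedure: it only assumes that edges are (subject, relation, object, timestamp) quadruples and that a walk moves backward in time, so timestamps never increase along the walk. All entity names, relation names, and timestamps below are hypothetical.

```python
import random


def temporal_random_walk(quads, start, length, rng):
    """Sample a walk whose edge timestamps never increase.

    quads: iterable of (subject, relation, object, timestamp) tuples.
    Returns the list of traversed quadruples (possibly shorter than
    `length` if no time-consistent edge is available).
    """
    outgoing = {}
    for s, r, o, t in quads:
        outgoing.setdefault(s, []).append((r, o, t))

    walk, node, max_time = [], start, float("inf")
    for _ in range(length):
        # Only edges at or before the previous timestamp are admissible.
        candidates = [e for e in outgoing.get(node, []) if e[2] <= max_time]
        if not candidates:
            break
        relation, neighbor, timestamp = rng.choice(candidates)
        walk.append((node, relation, neighbor, timestamp))
        node, max_time = neighbor, timestamp
    return walk


# Hypothetical temporal knowledge graph.
quads = [
    ("A", "consults", "B", 5),
    ("B", "visits", "C", 3),
    ("B", "visits", "D", 7),   # too late to follow an edge at time 5
    ("C", "negotiates", "A", 1),
]
walk = temporal_random_walk(quads, "A", 3, random.Random(0))
print(walk)
```

In this toy graph only one time-consistent path exists from "A", so the walk is the same for any seed; on a real graph, many such walks would be sampled.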

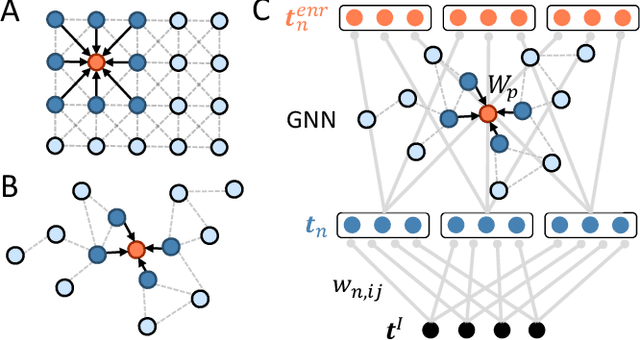

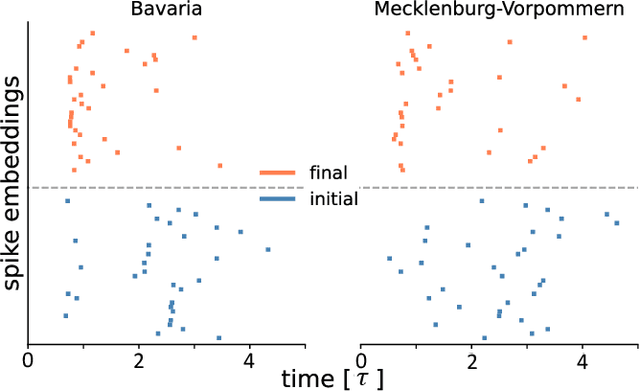

Learning through structure: towards deep neuromorphic knowledge graph embeddings

Sep 21, 2021

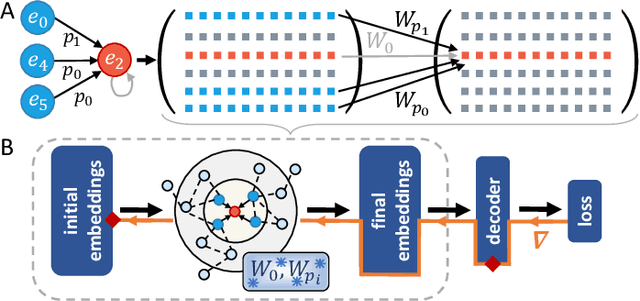

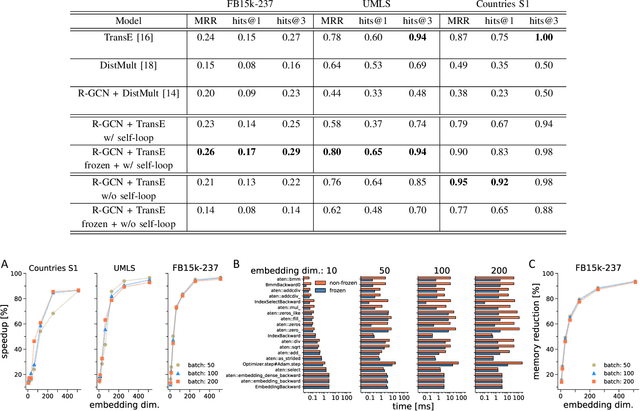

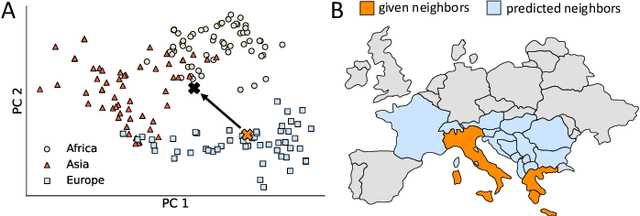

Abstract: Computing latent representations for graph-structured data is a ubiquitous learning task in many industrial and academic applications ranging from molecule synthesis to social network analysis and recommender systems. Knowledge graphs are among the most popular and widely used data representations related to the Semantic Web. Next to structuring factual knowledge in a machine-readable format, knowledge graphs serve as the backbone of many artificial intelligence applications and allow the ingestion of context information into various learning algorithms. Graph neural networks attempt to encode graph structures in low-dimensional vector spaces via a message passing heuristic between neighboring nodes. In recent years, a multitude of different graph neural network architectures has demonstrated groundbreaking performance in many learning tasks. In this work, we propose a strategy to map deep graph learning architectures for knowledge graph reasoning to neuromorphic architectures. Based on the insight that randomly initialized and untrained (i.e., frozen) graph neural networks are able to preserve local graph structures, we compose a frozen neural network with shallow knowledge graph embedding models. We experimentally show that already on conventional computing hardware, this leads to a significant speedup and memory reduction while maintaining a competitive performance level. Moreover, we extend the frozen architecture to spiking neural networks, introducing a novel, event-based and highly sparse knowledge graph embedding algorithm that is suitable for implementation in neuromorphic hardware.
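The message passing heuristic referred to in this abstract can be sketched in a few lines. The example below performs one round of mean aggregation over an undirected graph with no learned weights; it is an illustrative simplification, not the architecture proposed in the paper, and the node names and feature vectors are hypothetical.

```python
def message_passing_step(features, edges):
    """One round of mean aggregation over neighbors (plus the node itself).

    features: dict mapping node -> list of floats (all the same length).
    edges:    iterable of undirected (u, v) pairs.
    """
    neighbors = {node: [] for node in features}
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)

    updated = {}
    for node, own in features.items():
        messages = [features[n] for n in neighbors[node]] + [own]
        updated[node] = [
            sum(m[i] for m in messages) / len(messages)
            for i in range(len(own))
        ]
    return updated


# Hypothetical three-node path graph a - b - c.
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
edges = [("a", "b"), ("b", "c")]
updated = message_passing_step(features, edges)
print(updated)
```

Even without training, each updated vector now mixes in information from the node's neighborhood, which gives some intuition for why untrained networks can still reflect local graph structure.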

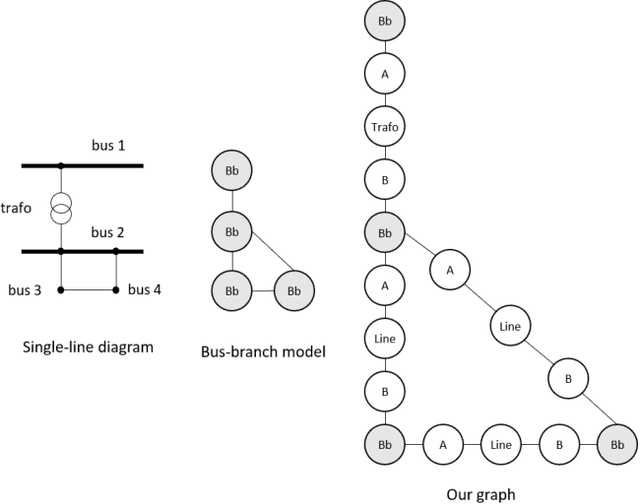

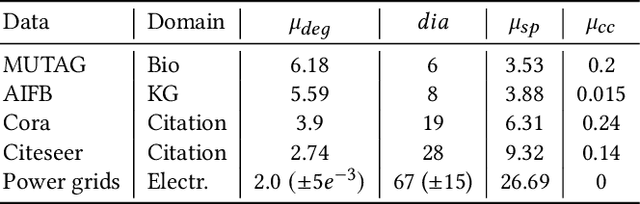

Power to the Relational Inductive Bias: Graph Neural Networks in Electrical Power Grids

Sep 08, 2021

Abstract: The application of graph neural networks (GNNs) to the domain of electrical power grids has high potential impact on smart grid monitoring. Even though there is a natural correspondence of power flow to message-passing in GNNs, their performance on power grids is not well understood. We argue that there is a gap between GNN research, which is driven by benchmarks containing graphs that differ from power grids in several important aspects, and its application to power grids. Additionally, inductive learning of GNNs across multiple power grid topologies has not been explored with real-world data. We address this gap by means of (i) defining power grid graph datasets in inductive settings, (ii) an exploratory analysis of graph properties, and (iii) an empirical study of the concrete learning task of state estimation on real-world power grids. Our results show that GNNs are more robust to noise with up to 400% lower error compared to baselines. Furthermore, due to the unique properties of electrical grids, we do not observe the well-known over-smoothing phenomenon of GNNs and find the best-performing models to be exceptionally deep with up to 13 layers. This is in stark contrast to existing benchmark datasets where the consensus is that 2- to 3-layer GNNs perform best. Our results demonstrate that a key challenge in this domain is to effectively handle long-range dependence.

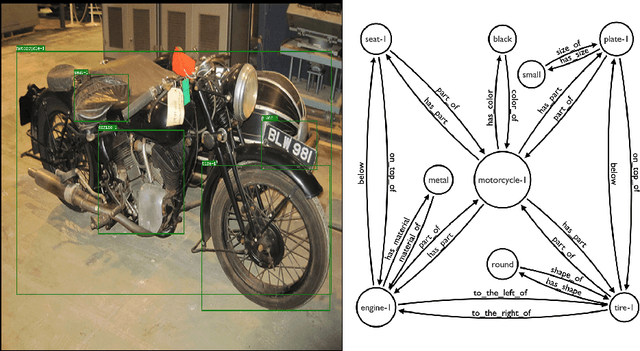

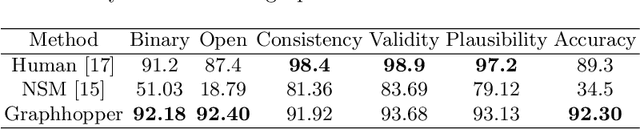

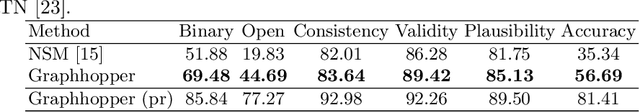

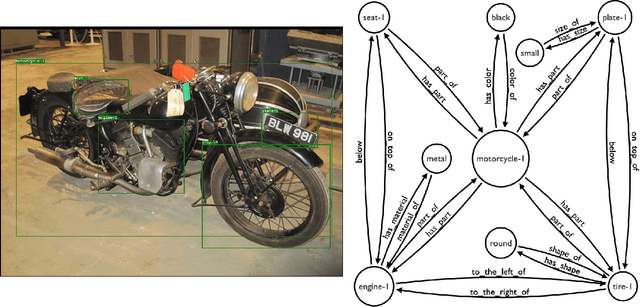

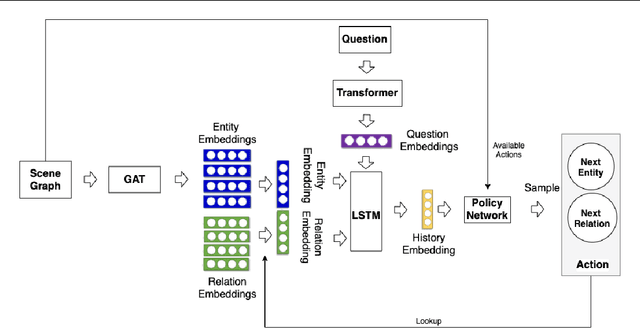

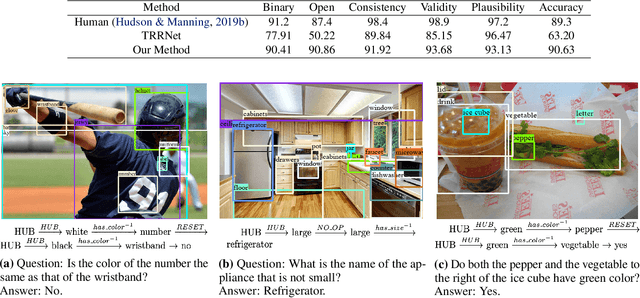

Graphhopper: Multi-Hop Scene Graph Reasoning for Visual Question Answering

Jul 13, 2021

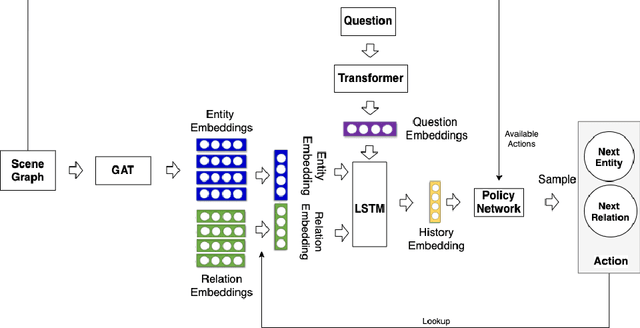

Abstract: Visual Question Answering (VQA) is concerned with answering free-form questions about an image. Since it requires a deep semantic and linguistic understanding of the question and the ability to associate it with various objects that are present in the image, it is an ambitious task and requires multi-modal reasoning from both computer vision and natural language processing. We propose Graphhopper, a novel method that approaches the task by integrating knowledge graph reasoning, computer vision, and natural language processing techniques. Concretely, our method performs context-driven, sequential reasoning based on the scene entities and their semantic and spatial relationships. As a first step, we derive a scene graph that describes the objects in the image, as well as their attributes and their mutual relationships. Subsequently, a reinforcement learning agent is trained to autonomously navigate in a multi-hop manner over the extracted scene graph to generate reasoning paths, which are the basis for deriving answers. We conduct an experimental study on the challenging dataset GQA, based on both manually curated and automatically generated scene graphs. Our results show that we keep up with human performance on manually curated scene graphs. Moreover, we find that Graphhopper outperforms another state-of-the-art scene graph reasoning model on both manually curated and automatically generated scene graphs by a significant margin.

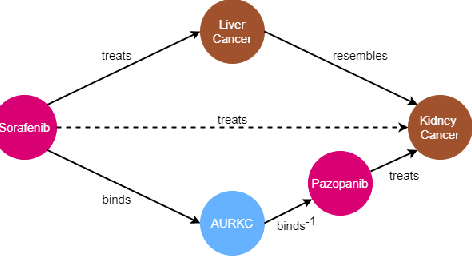

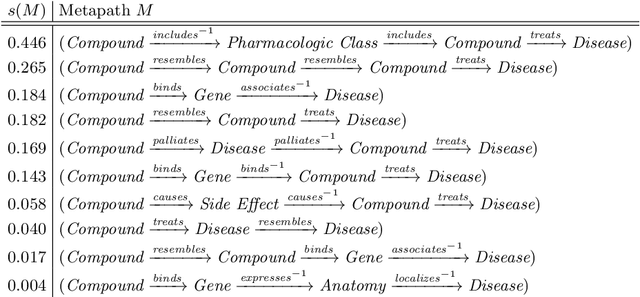

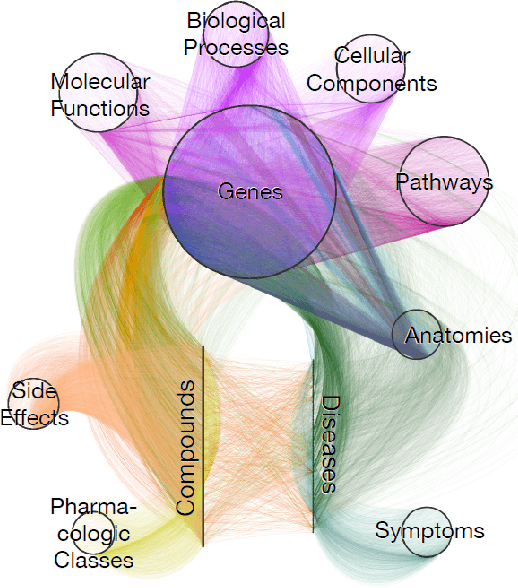

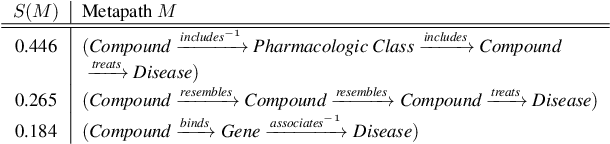

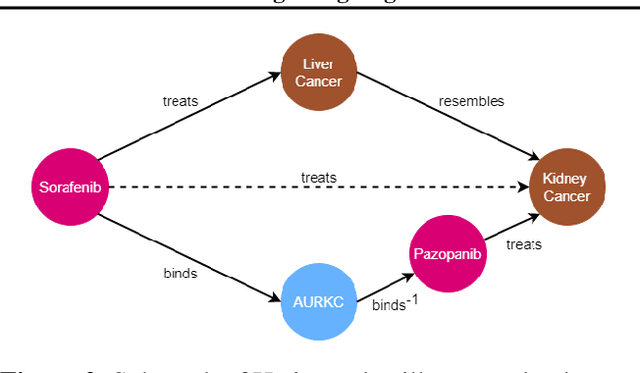

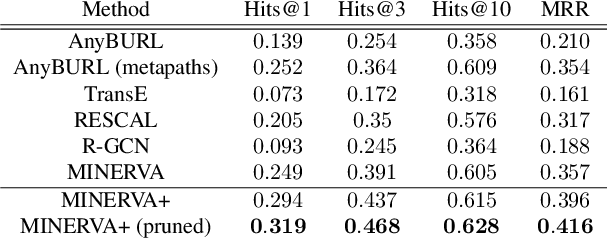

Neural Multi-Hop Reasoning With Logical Rules on Biomedical Knowledge Graphs

Mar 18, 2021

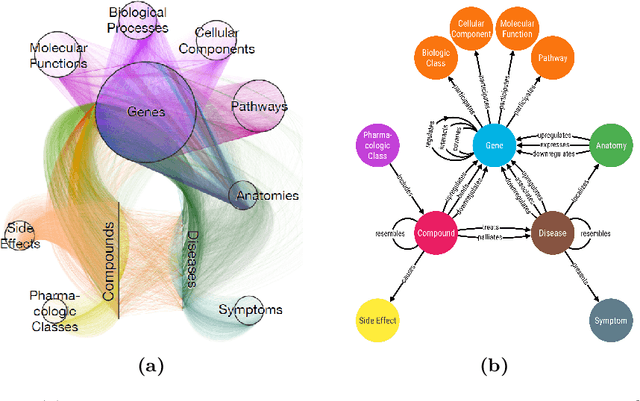

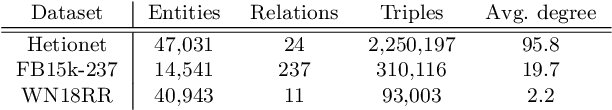

Abstract: Biomedical knowledge graphs permit an integrative computational approach to reasoning about biological systems. The nature of biological data leads to a graph structure that differs from those typically encountered in benchmarking datasets. To understand the implications this may have on the performance of reasoning algorithms, we conduct an empirical study based on the real-world task of drug repurposing. We formulate this task as a link prediction problem where both compounds and diseases correspond to entities in a knowledge graph. To overcome apparent weaknesses of existing algorithms, we propose a new method, PoLo, that combines policy-guided walks based on reinforcement learning with logical rules. These rules are integrated into the algorithm by using a novel reward function. We apply our method to Hetionet, which integrates biomedical information from 29 prominent bioinformatics databases. Our experiments show that our approach outperforms several state-of-the-art methods for link prediction while providing interpretability.

Integrating Logical Rules Into Neural Multi-Hop Reasoning for Drug Repurposing

Jul 10, 2020

Abstract: The graph structure of biomedical data differs from that in typical knowledge graph benchmark tasks. A particular property of biomedical data is the presence of long-range dependencies, which can be captured by patterns described as logical rules. We propose a novel method that combines these rules with a neural multi-hop reasoning approach that uses reinforcement learning. We conduct an empirical study based on the real-world task of drug repurposing by formulating this task as a link prediction problem. We apply our method to the biomedical knowledge graph Hetionet and show that our approach outperforms several baseline methods.

Scene Graph Reasoning for Visual Question Answering

Jul 02, 2020

Abstract: Visual question answering is concerned with answering free-form questions about an image. Since it requires a deep linguistic understanding of the question and the ability to associate it with various objects that are present in the image, it is an ambitious task and requires techniques from both computer vision and natural language processing. We propose a novel method that approaches the task by performing context-driven, sequential reasoning based on the objects and their semantic and spatial relationships present in the scene. As a first step, we derive a scene graph that describes the objects in the image, as well as their attributes and their mutual relationships. A reinforcement learning agent then learns to autonomously navigate over the extracted scene graph to generate paths, which are the basis for deriving answers. We conduct a first experimental study on the challenging GQA dataset with manually curated scene graphs, where our method almost reaches the level of human performance.
