Andreas Grübl

Kirchhoff-Institute for Physics, Heidelberg, Germany

Demonstrating the Advantages of Analog Wafer-Scale Neuromorphic Hardware

Dec 03, 2024

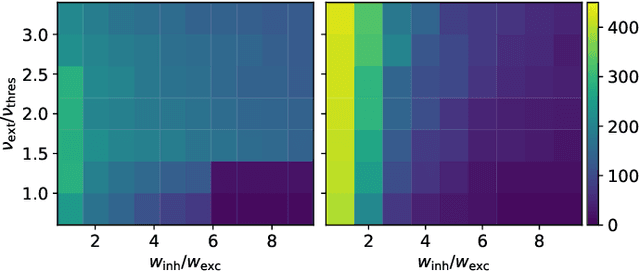

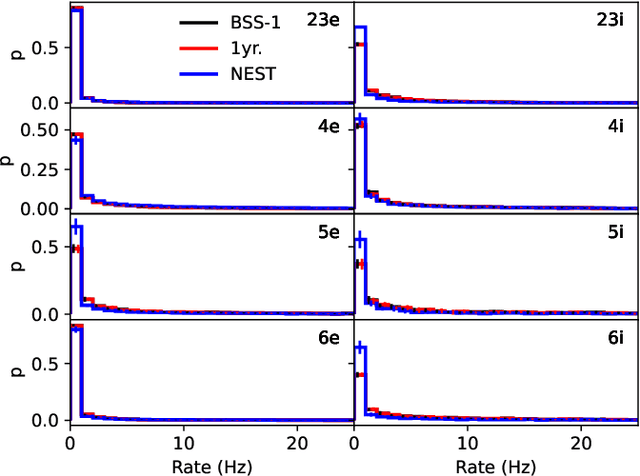

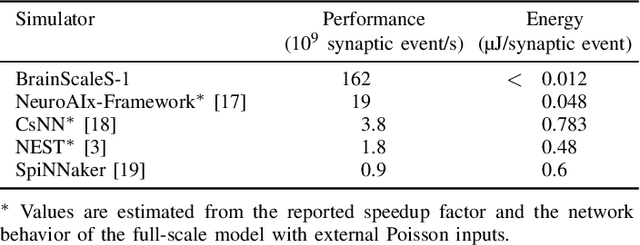

Abstract: As numerical simulations grow in size and complexity, they become increasingly resource-intensive in terms of time and energy. While specialized hardware accelerators often provide order-of-magnitude gains and are state of the art in other scientific fields, their availability and applicability in computational neuroscience is still limited. In this field, neuromorphic accelerators, particularly mixed-signal architectures like the BrainScaleS systems, offer the most significant performance benefits. These systems maintain a constant, accelerated emulation speed independent of network model and size. This is especially beneficial when traditional simulators reach their limits, such as when modeling complex neuron dynamics, incorporating plasticity mechanisms, or running long or repetitive experiments. However, the analog nature of these systems introduces new challenges. In this paper, we demonstrate the capabilities and advantages of the BrainScaleS-1 system and how it can be used in combination with conventional software simulations. We report the emulation time and energy consumption for two biologically inspired networks adapted to the neuromorphic hardware substrate: a balanced random network based on Brunel and the cortical microcircuit from Potjans and Diesmann.

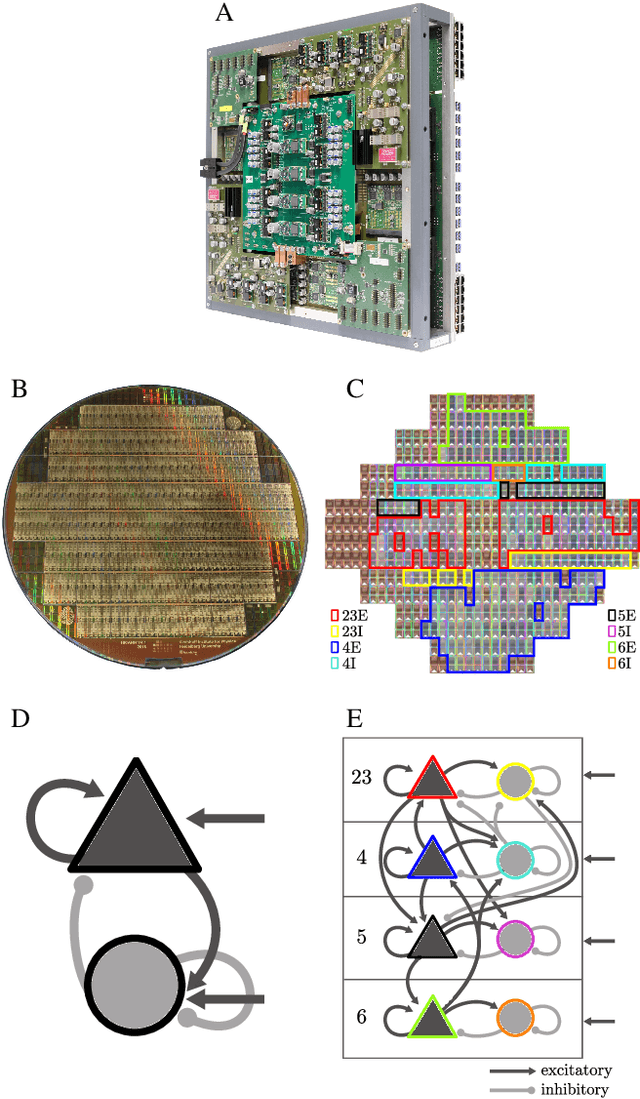

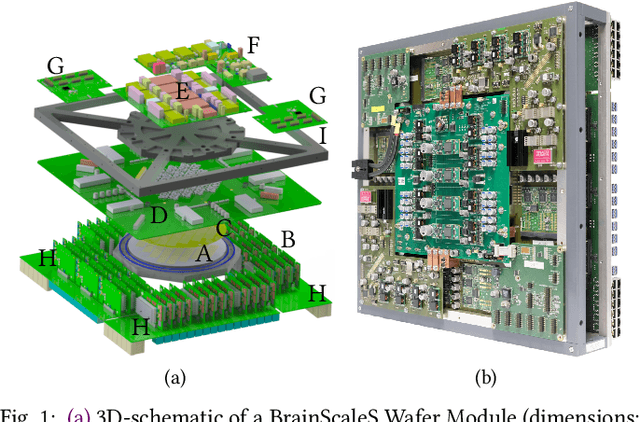

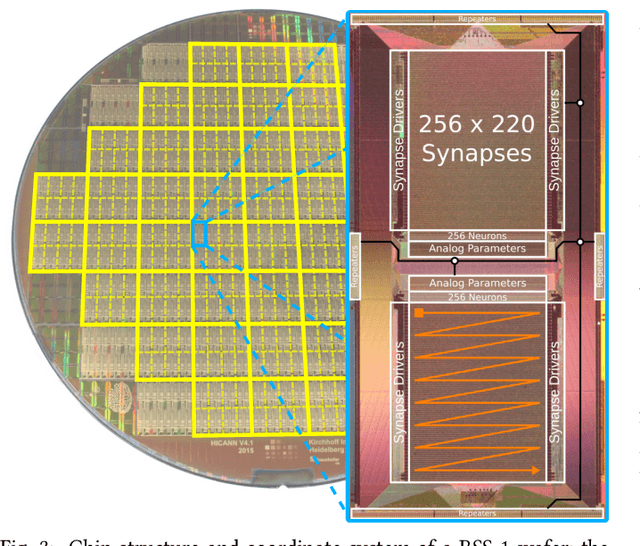

From Clean Room to Machine Room: Commissioning of the First-Generation BrainScaleS Wafer-Scale Neuromorphic System

Mar 22, 2023

Abstract: The first generation of BrainScaleS, also referred to as BrainScaleS-1, is a neuromorphic system for emulating large-scale networks of spiking neurons. Following a "physical modeling" principle, its VLSI circuits are designed to emulate the dynamics of biological examples: analog circuits implement neurons and synapses with time constants that arise from their electronic components' intrinsic properties. It operates in continuous time, with dynamics typically matching an acceleration factor of 10000 compared to the biological regime. A fault-tolerant design allows it to achieve wafer-scale integration despite unavoidable analog variability and component failures. In this paper, we present the commissioning process of a BrainScaleS-1 wafer module, providing a short description of the system's physical components and illustrating the steps taken during its assembly and the measures taken to operate it. Furthermore, we reflect on the system's development process and the lessons learned, and conclude with a demonstration of its functionality by emulating a wafer-scale synchronous firing chain, the largest spiking network emulation run with analog components and individual synapses to date.
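To make the acceleration factor concrete, the following minimal sketch converts a biological duration into the wall-clock time an accelerated emulation would take. The function name `wall_clock_seconds` is hypothetical and not part of any BrainScaleS software; the sketch only assumes the factor of 10000 stated in the abstract and ignores configuration and data-transfer overhead.

```python
def wall_clock_seconds(biological_seconds: float, acceleration: float = 10_000.0) -> float:
    """Return the emulation wall-clock time for a given biological duration.

    Assumes a constant acceleration factor (10000 is the typical value quoted
    for BrainScaleS-1) and no overhead for configuration or I/O.
    """
    if acceleration <= 0:
        raise ValueError("acceleration must be positive")
    return biological_seconds / acceleration


# One hour of biological time at the default factor of 10000.
one_hour = wall_clock_seconds(3600.0)
```

Under these assumptions, one hour of biological time corresponds to roughly 0.36 s of emulation time.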

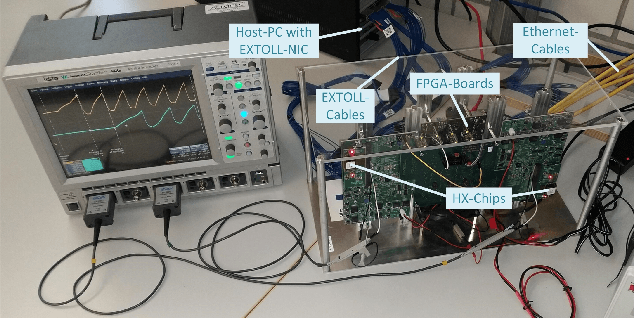

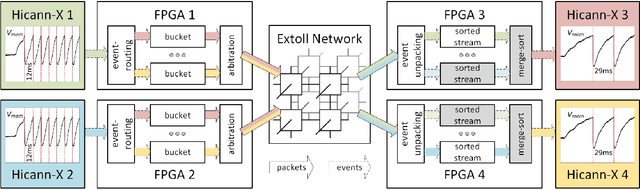

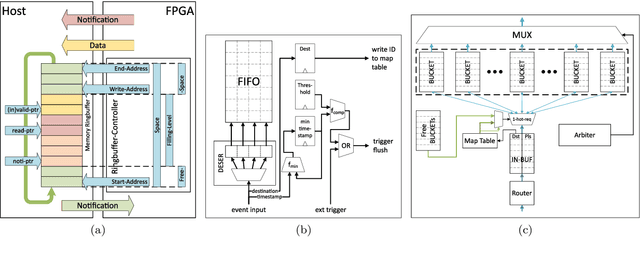

Demonstrating BrainScaleS-2 Inter-Chip Pulse-Communication using EXTOLL

Mar 03, 2022

Abstract: The BrainScaleS-2 (BSS-2) Neuromorphic Computing System currently consists of multiple single-chip setups, which are connected to a compute cluster via Gigabit-Ethernet network technology. This is convenient for small experiments, where the neural networks fit into a single chip. When modeling networks of larger size, neurons have to be connected across chip boundaries. We implement these connections for BSS-2 using the EXTOLL networking technology. This provides high bandwidths and low latencies, as well as high message rates. Here, we describe the targeted pulse-routing implementation and the required extensions to the BSS-2 software stack. We also demonstrate feed-forward pulse-routing on BSS-2 using a scaled-down version without temporal merging.

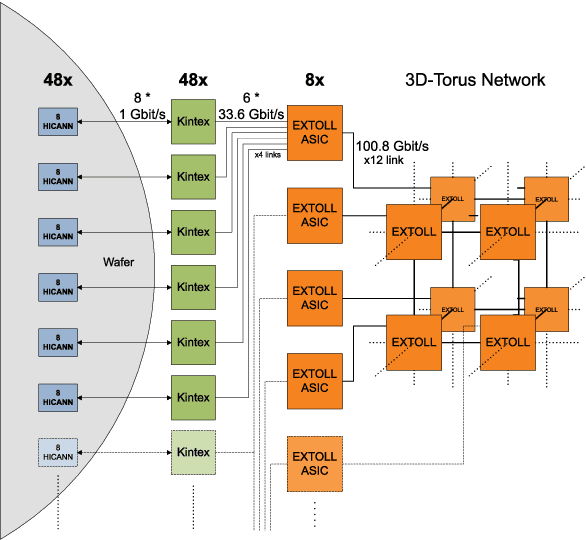

BrainScaleS Large Scale Spike Communication using Extoll

Dec 14, 2021

Abstract: The BrainScaleS Neuromorphic Computing System is currently connected to a compute cluster via Gigabit-Ethernet network technology. This is convenient for the currently used experiment mode, where neuronal networks cover at most one wafer module. When modelling networks of larger size, for example a full-sized cortical microcircuit model, neurons must be connected across wafer modules to form larger networks. This can be done using the Extoll networking technology, which provides high bandwidth and low latencies, as well as a low-overhead packet protocol format.

Inference with Artificial Neural Networks on Analog Neuromorphic Hardware

Jul 01, 2020

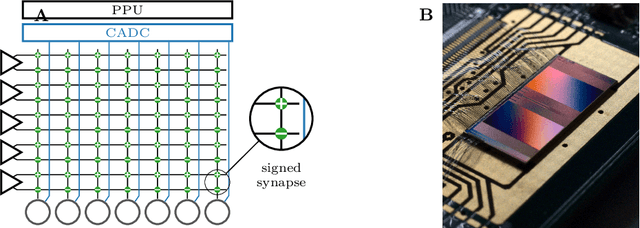

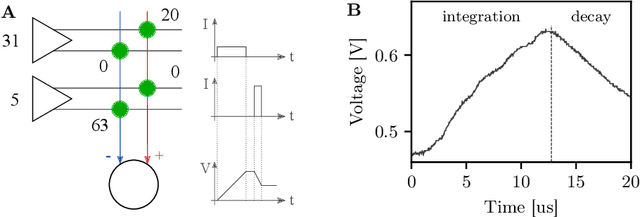

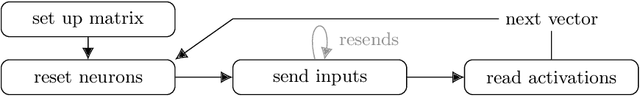

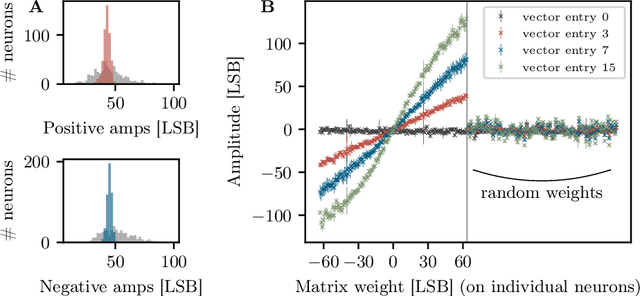

Abstract: The neuromorphic BrainScaleS-2 ASIC comprises mixed-signal neurons and synapse circuits as well as two versatile digital microprocessors. Primarily designed to emulate spiking neural networks, the system can also operate in a vector-matrix multiplication and accumulation mode for artificial neural networks. Analog multiplication is carried out in the synapse circuits, while the results are accumulated on the neurons' membrane capacitors. Designed as an analog, in-memory computing device, it promises high energy efficiency. Fixed-pattern noise and trial-to-trial variations, however, require the implemented networks to cope with a certain level of perturbations. Further limitations are imposed by the digital resolution of the input values (5 bit), matrix weights (6 bit) and resulting neuron activations (8 bit). In this paper, we discuss BrainScaleS-2 as an analog inference accelerator and present calibration as well as optimization strategies, highlighting the advantages of training with hardware in the loop. Among other benchmarks, we classify the MNIST handwritten digits dataset using a two-dimensional convolution and two dense layers. We reach 98.0% test accuracy, closely matching the performance of the same network evaluated in software.
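The resolution limits mentioned in the abstract can be illustrated with a small software model of a quantized vector-matrix multiplication. This is only a sketch under stated assumptions: inputs are treated as unsigned 5-bit integers, weights as signed 6-bit integers, and outputs as signed 8-bit integers with saturation. The signedness conventions, the `gain` parameter, and the function names are assumptions made for illustration; the model is noise-free and does not represent the chip's actual interface.

```python
import numpy as np


def quantize(values, bits, signed):
    """Round to the nearest integer and saturate to the given bit width."""
    if signed:
        lo, hi = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    else:
        lo, hi = 0, 2 ** bits - 1
    return np.clip(np.rint(values), lo, hi).astype(np.int64)


def quantized_vmm(inputs, weights, gain=1.0):
    """Idealized multiply-accumulate with 5-bit inputs, 6-bit weights, 8-bit outputs.

    `gain` is a hypothetical scaling factor applied before the output is
    quantized; analog noise and fixed-pattern variations are not modeled.
    """
    x = quantize(inputs, bits=5, signed=False)   # assumed range 0..31
    w = quantize(weights, bits=6, signed=True)   # assumed range -32..31
    accumulated = x @ w                          # ideal accumulation
    return quantize(gain * accumulated, bits=8, signed=True)  # range -128..127


x = np.array([31, 0, 10])
w = np.array([[1, -2], [5, 5], [3, 40]])  # 40 exceeds the weight range and saturates
y = quantized_vmm(x, w)
```

In this example the second output column saturates at the upper limit of the 8-bit range, which shows why networks may need to be trained or rescaled with the limited dynamic range in mind.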

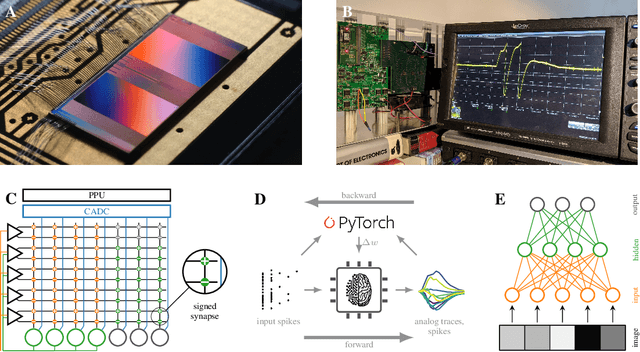

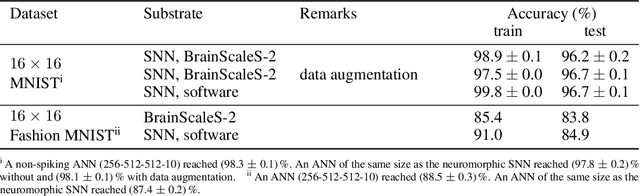

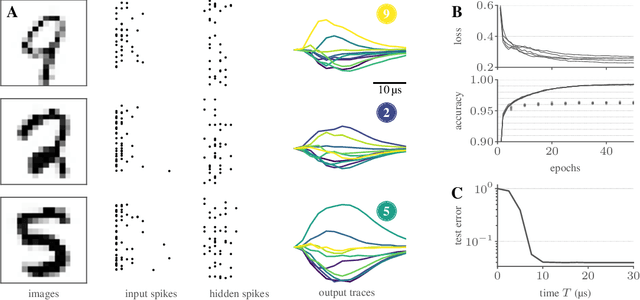

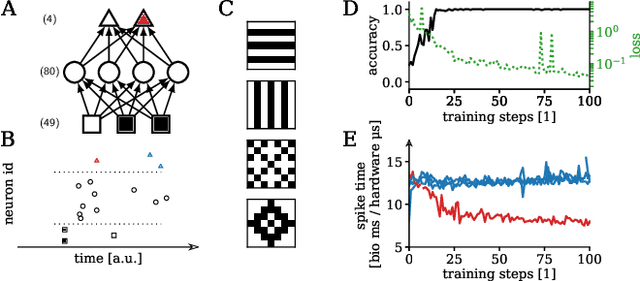

Training spiking multi-layer networks with surrogate gradients on an analog neuromorphic substrate

Jun 12, 2020

Abstract: Spiking neural networks are nature's solution for parallel information processing with high temporal precision at a low metabolic energy cost. To that end, biological neurons integrate inputs as an analog sum and communicate their outputs digitally as spikes, i.e., sparse binary events in time. These architectural principles can be mirrored effectively in analog neuromorphic hardware. Nevertheless, training spiking neural networks with sparse activity on hardware devices remains a major challenge. Primarily this is due to the lack of suitable training methods that take into account device-specific imperfections and operate at the level of individual spikes instead of firing rates. To tackle this issue, we developed a hardware-in-the-loop strategy to train multi-layer spiking networks using surrogate gradients on the analog BrainScaleS-2 chip. Specifically, we used the hardware to compute the forward pass of the network, while the backward pass was computed in software. We evaluated our approach on downscaled 16x16 versions of the MNIST and the Fashion-MNIST datasets in which spike latencies encoded pixel intensities. The analog neuromorphic substrate closely matched the performance of equivalently sized networks implemented in software. It is capable of processing 70 k patterns per second with a power consumption of less than 300 mW. Added activity regularization resulted in sparse network activity with about 20 spikes per input, at little to no reduction in classification performance. Thus, overall, our work demonstrates low-energy spiking network processing on an analog neuromorphic substrate and sets several new benchmarks for hardware systems in terms of classification accuracy, processing speed, and efficiency. Importantly, our work emphasizes the value of hardware-in-the-loop training and paves the way toward energy-efficient information processing on non-von-Neumann architectures.
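The core idea of surrogate gradients can be sketched in a few lines: the forward pass uses a non-differentiable threshold to emit spikes, while the backward pass substitutes a smooth function for the derivative of that threshold. The sketch below is an illustration only. The abstract does not specify the surrogate function, so the fast-sigmoid-style derivative, the steepness `beta`, the threshold value, and the function names are all assumptions; in the setup described above the forward pass is computed on hardware, whereas here a Heaviside step stands in for it.

```python
import numpy as np


def spike_forward(v, threshold=1.0):
    """Forward pass: emit a spike (1.0) wherever the membrane value reaches threshold."""
    return (np.asarray(v, dtype=float) >= threshold).astype(float)


def surrogate_grad(v, threshold=1.0, beta=10.0):
    """Backward pass: smooth stand-in for the derivative of the spike threshold.

    Uses 1 / (1 + beta * |v - threshold|)^2, one common choice of surrogate;
    this choice and the value of `beta` are assumptions made for illustration.
    """
    v = np.asarray(v, dtype=float)
    return 1.0 / (1.0 + beta * np.abs(v - threshold)) ** 2


v = np.array([0.5, 1.0, 1.5])
spikes = spike_forward(v)   # binary events used in the forward pass
grads = surrogate_grad(v)   # largest at threshold, decaying with distance from it
```

The surrogate peaks at the threshold and falls off on both sides, so neurons close to spiking receive the strongest learning signal even though the true derivative of the step function is zero almost everywhere.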

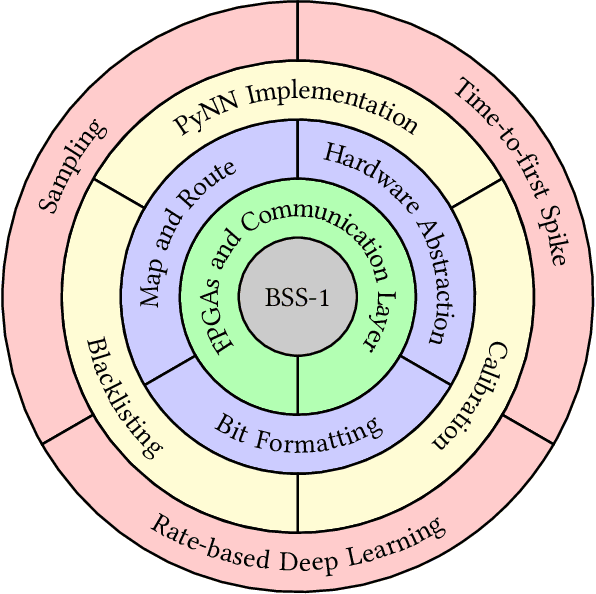

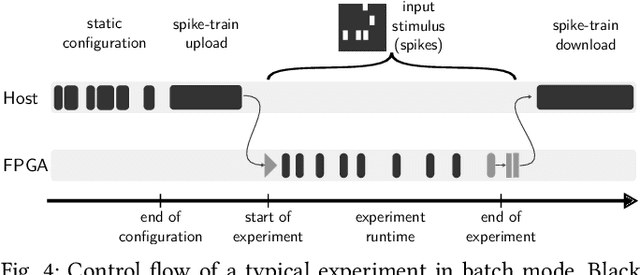

The Operating System of the Neuromorphic BrainScaleS-1 System

Mar 30, 2020

Abstract: BrainScaleS-1 is a wafer-scale mixed-signal accelerated neuromorphic system targeted at research in the fields of computational neuroscience and beyond-von-Neumann computing. The BrainScaleS Operating System (BrainScaleS OS) is a software stack that lets users emulate networks described in the high-level network description language PyNN with minimal knowledge of the system. At the same time, expert usage is facilitated by allowing users to hook into the system at any depth of the stack. We present operation and development methodologies implemented for the BrainScaleS-1 neuromorphic architecture and walk through the individual components of BrainScaleS OS constituting the software stack for BrainScaleS-1 platform operation.

Verification and Design Methods for the BrainScaleS Neuromorphic Hardware System

Mar 25, 2020

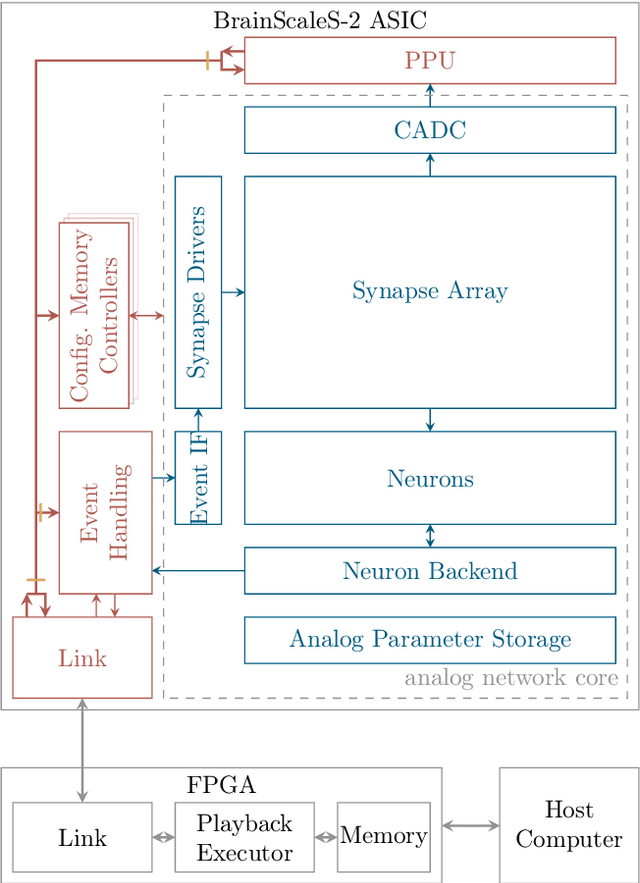

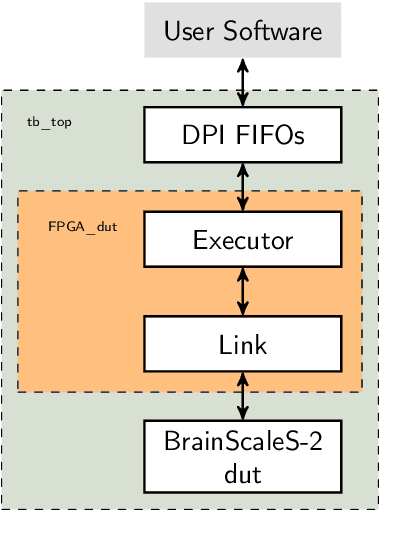

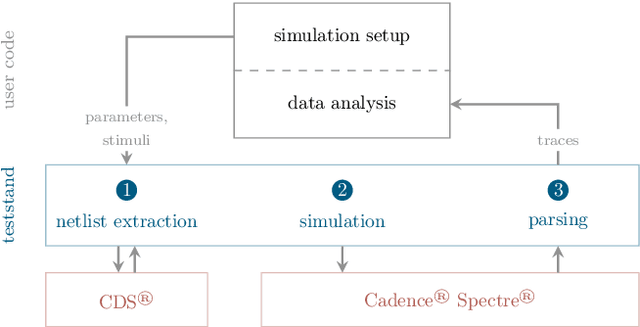

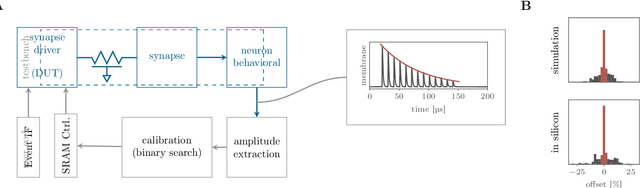

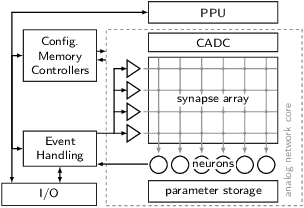

Abstract: This paper presents verification and implementation methods that have been developed for the design of the BrainScaleS-2 65 nm ASICs. The second-generation BrainScaleS chips are mixed-signal devices with tight coupling between full-custom analog neuromorphic circuits and two general-purpose microprocessors (PPU) with SIMD extension for on-chip learning and plasticity. Simulation methods for automated analysis and pre-tapeout calibration of the highly parameterizable analog neuron and synapse circuits and for hardware-software co-development of the digital logic and software stack are presented. Accelerated operation of neuromorphic circuits and highly parallel digital data buses between the full-custom neuromorphic part and the PPU require custom methodologies to close the digital signal timing at the interfaces. Novel extensions to the standard digital physical implementation design flow are highlighted. We present early results from the first full-size BrainScaleS-2 ASIC containing 512 neurons and 130K synapses, demonstrating the successful application of these methods. An application example illustrates the full functionality of the BrainScaleS-2 hybrid plasticity architecture.

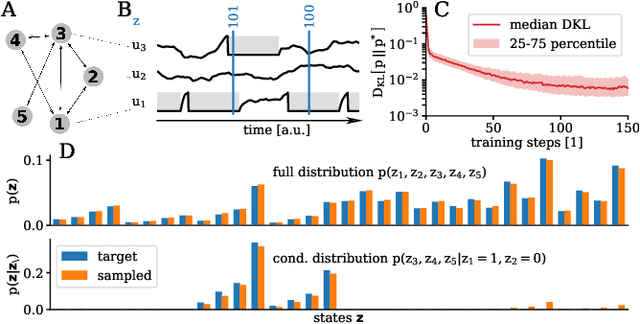

Versatile emulation of spiking neural networks on an accelerated neuromorphic substrate

Dec 30, 2019

Abstract: We present first experimental results on the novel BrainScaleS-2 neuromorphic architecture based on an analog neuro-synaptic core and augmented by embedded microprocessors for complex plasticity and experiment control. The high acceleration factor of 1000 compared to biological dynamics enables the execution of computationally expensive tasks by allowing the fast emulation of long-duration experiments or rapid iteration over many consecutive trials. The flexibility of our architecture is demonstrated in a suite of five distinct experiments, which emphasize different aspects of the BrainScaleS-2 system.

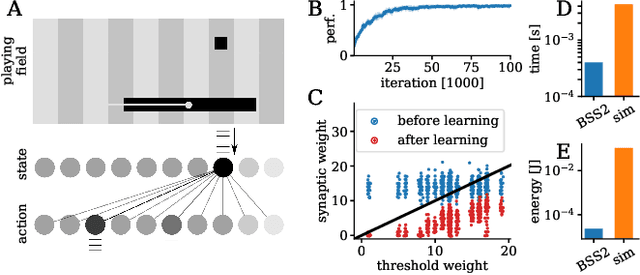

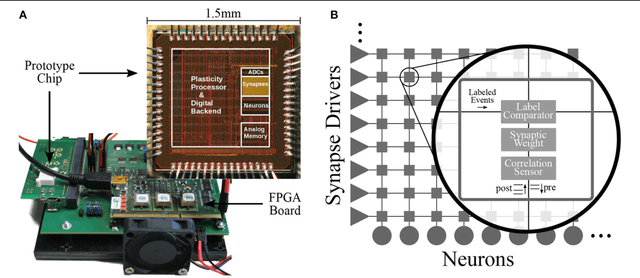

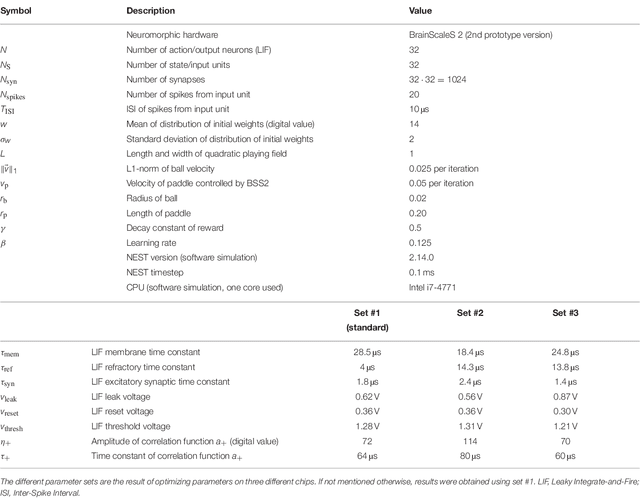

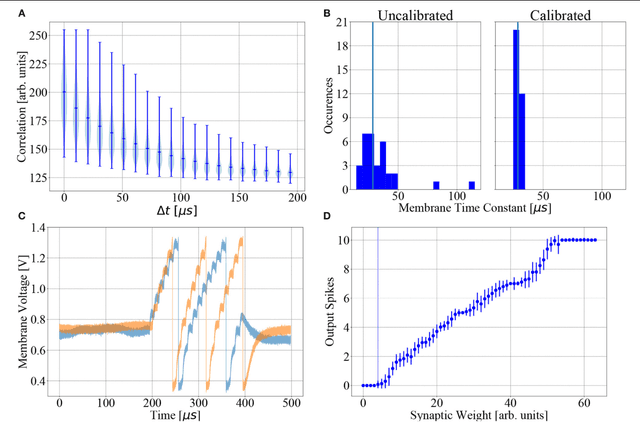

Demonstrating Advantages of Neuromorphic Computation: A Pilot Study

Nov 29, 2018

Abstract: Neuromorphic devices represent an attempt to mimic aspects of the brain's architecture and dynamics with the aim of replicating its hallmark functional capabilities in terms of computational power, robust learning and energy efficiency. We employ a single-chip prototype of the BrainScaleS-2 neuromorphic system to implement a proof-of-concept demonstration of reward-modulated spike-timing-dependent plasticity in a spiking network that learns to play the Pong video game by smooth pursuit. This system combines an electronic mixed-signal substrate for emulating neuron and synapse dynamics with an embedded digital processor for on-chip learning, which in this work also serves to simulate the virtual environment and learning agent. The analog emulation of neuronal membrane dynamics enables a 1000-fold acceleration with respect to biological real-time, with the entire chip operating on a power budget of 57 mW. Compared to an equivalent simulation using state-of-the-art software, the on-chip emulation is at least one order of magnitude faster and three orders of magnitude more energy-efficient. We demonstrate how on-chip learning can mitigate the effects of fixed-pattern noise, which is unavoidable in analog substrates, while making use of temporal variability for action exploration. Learning compensates for imperfections of the physical substrate, as manifested in neuronal parameter variability, by adapting synaptic weights to match the respective excitability of individual neurons.
