Anna Schroeder

An Efficient Quantum Classifier Based on Hamiltonian Representations

Apr 13, 2025

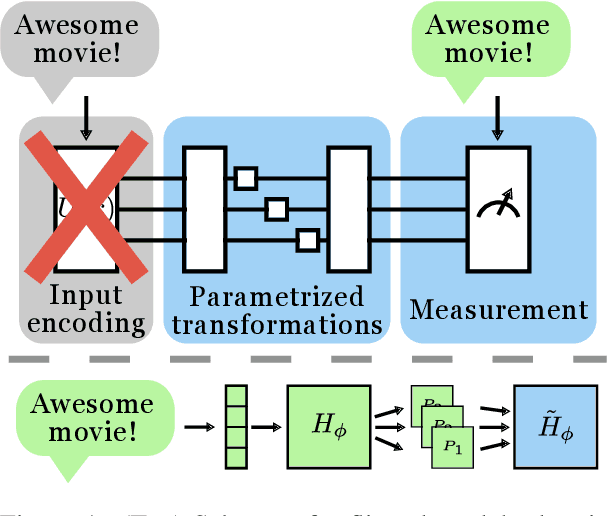

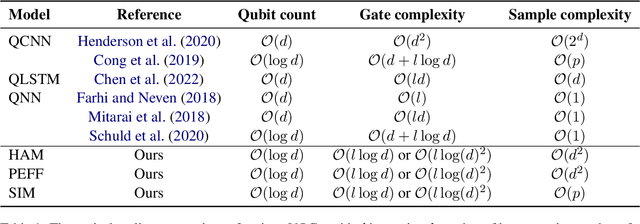

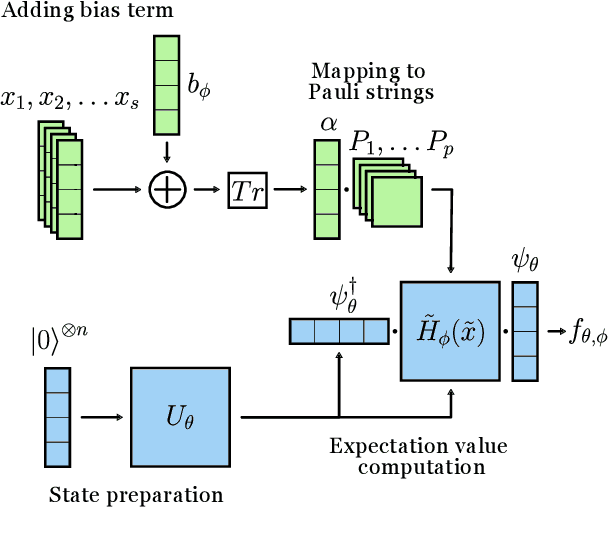

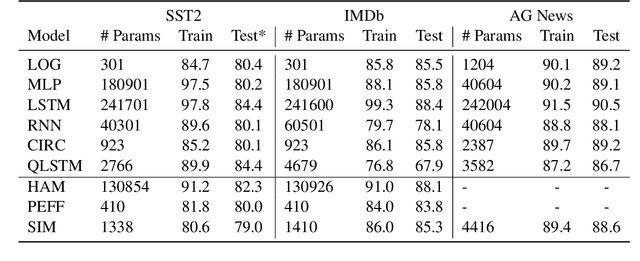

Abstract: Quantum machine learning (QML) is a discipline that seeks to transfer the advantages of quantum computing to data-driven tasks. However, many studies rely on toy datasets or heavy feature reduction, raising concerns about their scalability. Progress is further hindered by hardware limitations and the significant costs of encoding dense vector representations on quantum devices. To address these challenges, we propose an efficient approach called the Hamiltonian classifier, which circumvents the costs associated with data encoding by mapping inputs to a finite set of Pauli strings and computing predictions as their expectation values. In addition, we introduce two classifier variants with different scaling in terms of parameters and sample complexity. We evaluate our approach on text and image classification tasks against well-established classical and quantum models. The Hamiltonian classifier delivers performance comparable to or better than these methods. Notably, our method achieves logarithmic complexity in both qubits and quantum gates, making it well-suited for large-scale, real-world applications. We make our implementation available on GitHub.
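As a rough illustration of the idea stated in the abstract (a prediction computed as the expectation value of a weighted sum of Pauli strings), the following NumPy sketch may help. It is not the authors' implementation: the Pauli strings, the coefficients, the state, and the helper names `pauli_string_matrix`, `hamiltonian`, and `expectation` are all assumptions made for this example, and the way the paper maps an input to coefficients is not specified here.

```python
import numpy as np

# Single-qubit Pauli matrices.
PAULIS = {
    "I": np.eye(2, dtype=complex),
    "X": np.array([[0, 1], [1, 0]], dtype=complex),
    "Y": np.array([[0, -1j], [1j, 0]], dtype=complex),
    "Z": np.array([[1, 0], [0, -1]], dtype=complex),
}


def pauli_string_matrix(label):
    """Dense matrix of a Pauli string such as 'ZI' (tensor product, left to right)."""
    mat = np.array([[1.0 + 0j]])
    for ch in label:
        mat = np.kron(mat, PAULIS[ch])
    return mat


def hamiltonian(coeffs, labels):
    """Weighted sum of Pauli strings: H = sum_j c_j * P_j."""
    return sum(c * pauli_string_matrix(l) for c, l in zip(coeffs, labels))


def expectation(state, observable):
    """Real part of <state| observable |state> for a normalized state vector."""
    return float(np.real(np.vdot(state, observable @ state)))


# Hypothetical input-dependent coefficients over a fixed set of Pauli strings.
labels = ["ZI", "IZ", "XX"]
coeffs = [0.5, -0.25, 1.0]

# |00> as a stand-in for the (assumed) prepared state.
state = np.zeros(4, dtype=complex)
state[0] = 1.0

score = expectation(state, hamiltonian(coeffs, labels))
```

In this toy setting the sign of `score` could serve as a binary prediction. A dense matrix is used only for readability; it scales exponentially with the number of qubits, unlike the approach the abstract describes.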

Pattern representation and recognition with accelerated analog neuromorphic systems

Jul 03, 2017

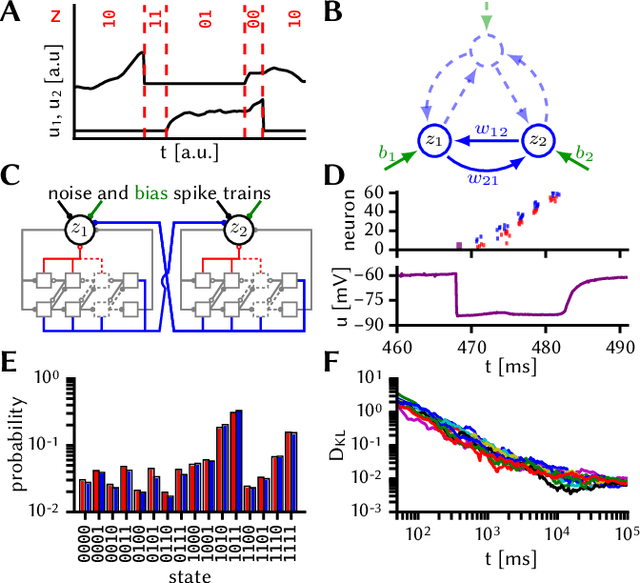

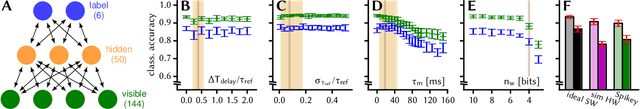

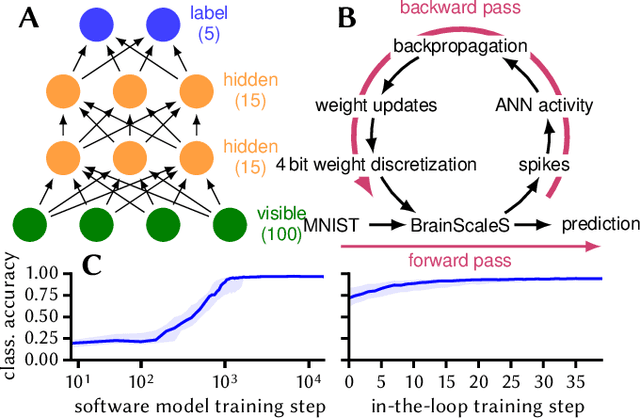

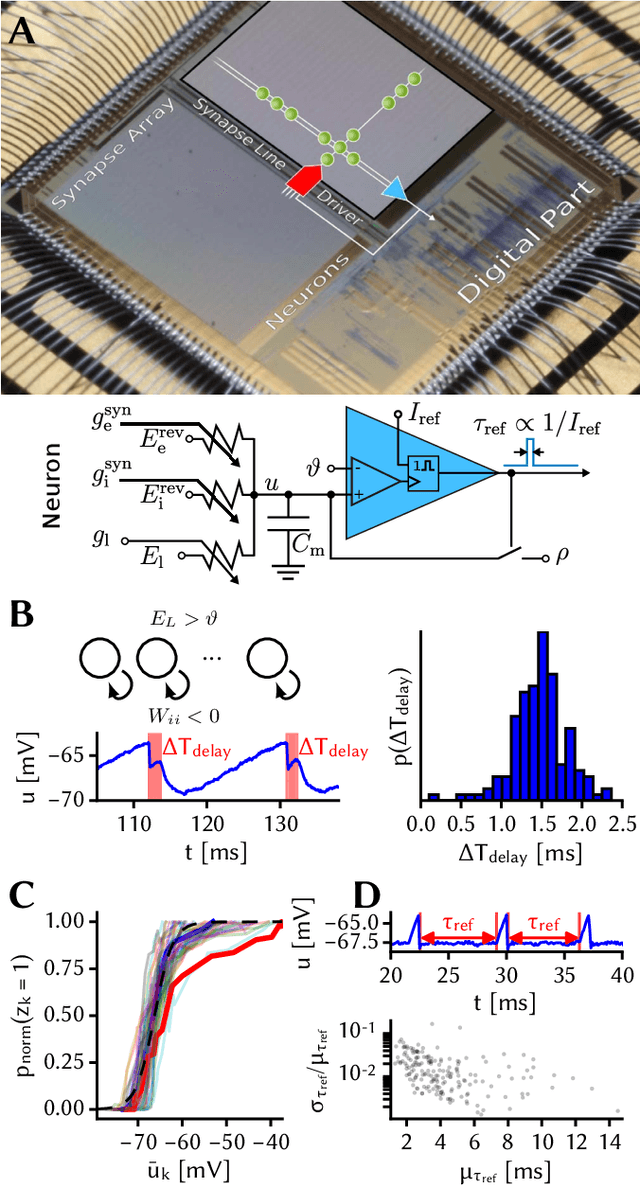

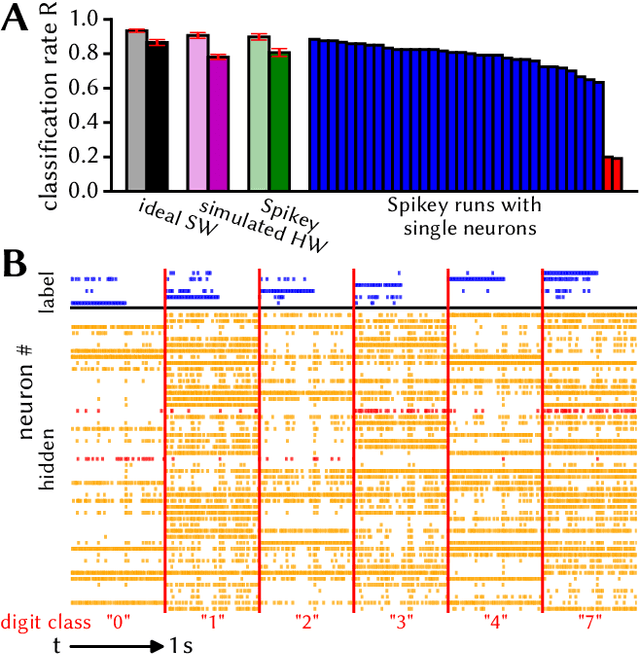

Abstract: Despite being originally inspired by the central nervous system, artificial neural networks have diverged from their biological archetypes as they have been remodeled to fit particular tasks. In this paper, we review several possibilities to reverse map these architectures to biologically more realistic spiking networks with the aim of emulating them on fast, low-power neuromorphic hardware. Since many of these devices employ analog components, which cannot be perfectly controlled, finding ways to compensate for the resulting effects represents a key challenge. Here, we discuss three different strategies to address this problem: the addition of auxiliary network components for stabilizing activity, the utilization of inherently robust architectures, and a training method for hardware-emulated networks that functions without perfect knowledge of the system's dynamics and parameters. For all three scenarios, we corroborate our theoretical considerations with experimental results on accelerated analog neuromorphic platforms.

* accepted at ISCAS 2017

Robustness from structure: Inference with hierarchical spiking networks on analog neuromorphic hardware

Mar 12, 2017

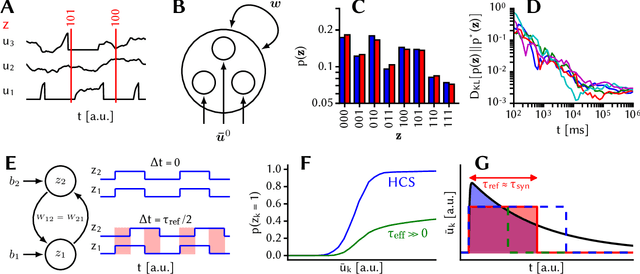

Abstract: How spiking networks are able to perform probabilistic inference is an intriguing question, not only for understanding information processing in the brain, but also for transferring these computational principles to neuromorphic silicon circuits. A number of computationally powerful spiking network models have been proposed, but most of them have only been tested, under ideal conditions, in software simulations. Any implementation in an analog, physical system, be it in vivo or in silico, will generally lead to distorted dynamics due to the physical properties of the underlying substrate. In this paper, we discuss several such distortive effects that are difficult or impossible to remove by classical calibration routines or parameter training. We then argue that hierarchical networks of leaky integrate-and-fire neurons can offer the required robustness for physical implementation and demonstrate this with both software simulations and emulation on an accelerated analog neuromorphic device.

* accepted at IJCNN 2017
