Nikos G. Tsagarakis

A High-Force Gripper with Embedded Multimodal Sensing for Powerful and Perception Driven Grasping

Apr 07, 2025

Abstract: Modern humanoid robots have shown promising potential for executing various tasks that involve grasping and manipulating objects with their end-effectors. Nevertheless, in most cases these grasping and manipulation actions involve low to moderate payloads and interaction forces. This is due to limitations of the end-effectors, which often cannot match the payload the arm can carry and hence limit the payload that can be grasped and manipulated. In addition, grippers usually do not embed adequate perception in their hardware, so grasping actions are mainly driven by perception sensors installed elsewhere on the robot body, which are frequently affected by occlusions caused by arm motions during the execution of grasping and manipulation tasks. To address these issues, we developed a modular high-grasping-force gripper equipped with embedded multimodal perception. The proposed gripper can generate a grasping force of 110 N in a compact implementation. This high grasping force capability is combined with embedded multimodal sensing, which includes an eye-in-hand camera, a Time-of-Flight (ToF) distance sensor, an Inertial Measurement Unit (IMU) and an omnidirectional microphone, enabling perception-driven grasping functionalities. We extensively evaluated the grasping force capacity of the gripper by introducing novel payload evaluation metrics that are a function of the robot arm's dynamic motion and the gripper's thermal state. We also evaluated the embedded multimodal sensing by performing perception-guided enhanced grasping operations.

* 8 pages, 15 figures
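
As a rough illustration of why grip force bounds the graspable payload, and why arm motion matters, the sketch below uses a generic Coulomb-friction model of a two-contact parallel grasp: the object is held if 2·μ·F ≥ m·(g + a), where F is the normal grip force at each finger and a is the upward acceleration imposed by the arm. This is not the payload metric introduced in the paper (which also accounts for the gripper's thermal state); treating the 110 N figure as the per-finger normal force, the friction coefficient μ = 0.5 and the acceleration values are all assumptions.

```python
G = 9.81  # gravitational acceleration [m/s^2]


def max_payload_kg(grip_force_n: float, mu: float, accel_up: float = 0.0) -> float:
    """Largest mass [kg] held by friction at two opposing finger contacts.

    Generic Coulomb-friction model (an assumption, not the paper's metric):
    each of the two contacts can supply up to mu * grip_force_n of tangential
    force, and together they must balance m * (G + accel_up).
    """
    if grip_force_n < 0 or mu < 0:
        raise ValueError("grip force and friction coefficient must be non-negative")
    denom = G + accel_up
    if denom <= 0:
        raise ValueError("model assumes accel_up > -G")
    return 2.0 * mu * grip_force_n / denom


if __name__ == "__main__":
    # 110 N grip force from the abstract; mu = 0.5 is an assumed value.
    for a in (0.0, 2.0, 5.0):
        print(f"a = {a:.1f} m/s^2 -> max payload {max_payload_kg(110.0, 0.5, a):.2f} kg")
```

Under these assumptions the estimate is about 11.2 kg with the arm at rest, falling to about 9.3 kg and 7.4 kg at 2 and 5 m/s² of upward acceleration, which is the kind of motion dependence a dynamic payload metric has to capture.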

Task-Driven Computational Framework for Simultaneously Optimizing Design and Mounted Pose of Modular Reconfigurable Manipulators

May 03, 2024

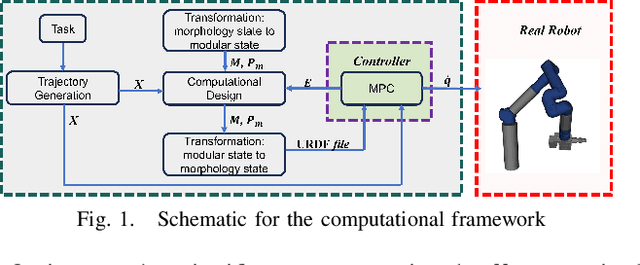

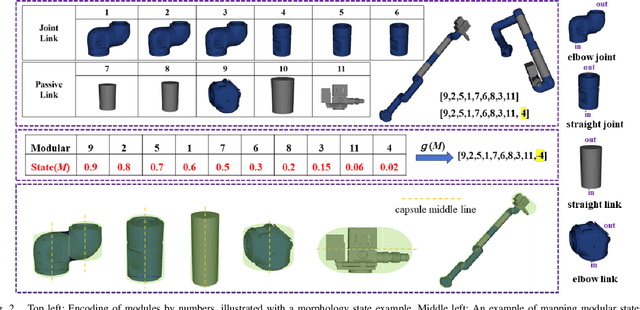

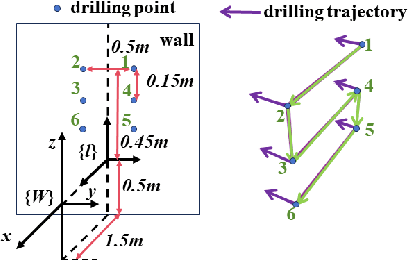

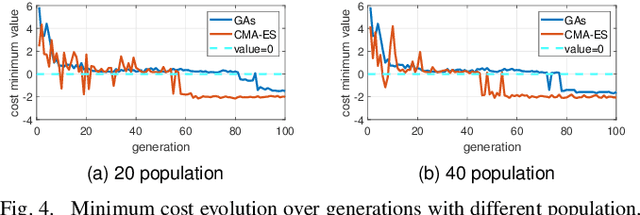

Abstract: Modular reconfigurable manipulators can be adapted quickly to different application environments and tailored to the specific requirements of each task. Task performance depends significantly on the manipulator's mounted pose and morphology, which calls for methodologies that select a modular robot configuration and mounted pose meeting the specific task requirements and required performance. Morphological changes in modular robots can be derived through a discrete optimization process involving the selective addition or removal of modules. In contrast, the mounted pose is adjusted within a continuous space, allowing smooth and precise changes in both orientation and position. This work introduces a computational framework that simultaneously optimizes the mounted pose and morphology of modular manipulators. At the core of the work is a mapping function that implicitly captures the morphological state of manipulators in a continuous space. This transformation function unifies the optimization of mounted pose and morphology within a single continuous space. Furthermore, our optimization framework incorporates an array of performance metrics, such as minimum joint effort and maximum manipulability, as well as trajectory execution error and physical and safety constraints. To highlight our method's benefits, we compare it with previous methods that framed this problem as a combinatorial optimization problem, and we demonstrate its practicality in selecting the modular robot configuration for a drilling task with the CONCERT modular robotic platform.

Design and Validation of a Multi-Arm Relocatable Manipulator for Space Applications

Jan 24, 2023

Abstract: This work presents the computational design and validation of the Multi-Arm Relocatable Manipulator (MARM), a three-limb robot for space applications, with particular reference to the MIRROR (Multi-arm Installation Robot for Readying ORUs and Reflectors) use-case scenario proposed by the European Space Agency. A holistic computational design and validation pipeline is proposed, with the aim of comparing different limb designs and ensuring that valid limb candidates enable MARM to perform the required complex loco-manipulation tasks. Motivated by the task complexity in terms of kinematic reachability, (self-)collision avoidance, contact wrench limits, and motor torque limits affecting Earth experiments, this work leverages multiple state-of-the-art planning and control approaches to aid robot design and validation. These include sampling-based planning on manifolds, non-linear trajectory optimization, and quadratic programs for constrained inverse dynamics computations. Finally, we present the resulting MARM design and conduct preliminary hardware validation tests through a set of lab experiments.

Prototyping fast and agile motions for legged robots with Horizon

Jun 17, 2022

Abstract: For legged robots to perform agile, highly dynamic and contact-rich motions, whole-body trajectories must be computed for complex under-actuated systems subject to non-linear dynamics. In this work, we present hands-on applications of Horizon, a novel open-source framework for trajectory optimization tailored to robotic systems, which provides a collection of tools that simplify dynamic motion generation. Horizon has been tested on a broad range of behaviours involving several robotic platforms. We introduce its building blocks and describe the complete procedure for generating three complex motions using its intuitive and straightforward API.

Loco-Manipulation Planning for Legged Robots: Offline and Online Strategies

May 20, 2022

Abstract: Deploying robots in realistic environments requires the capability to plan and refine loco-manipulation trajectories on the fly to avoid unexpected interactions with a dynamic environment. This extended abstract presents a pipeline that plans a global configuration-space trajectory offline using a randomized strategy and refines it locally online in response to changes in the dynamic environment and the robot state. The offline planner plans directly in contact space and additionally searches for feasible whole-body configurations compatible with the sampled contact states. The planned trajectory, consisting of a discrete set of contacts and configurations, can be seen as a graph and refined online during execution of the global trajectory. The online refinement is carried out by a graph optimization planner that exploits visual information; it acts locally on the initial global plan to account for possible changes in the environment. While the offline planner is completed work, tested on the humanoid COMAN+, the online local planner is still a work in progress, which has been tested on a reduced model of the CENTAURO robot to avoid dynamic and static obstacles interfering with a wheeled motion task. Both the COMAN+ and CENTAURO robots were designed at the Italian Institute of Technology (IIT).

Agile Actions with a Centaur-Type Humanoid: A Decoupled Approach

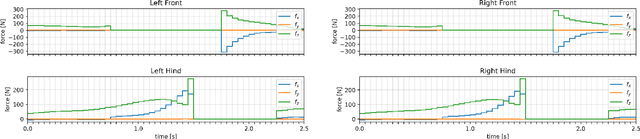

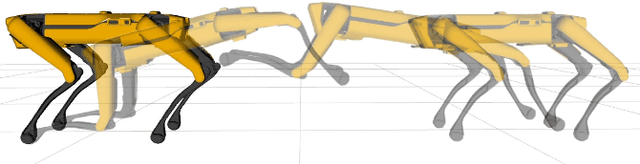

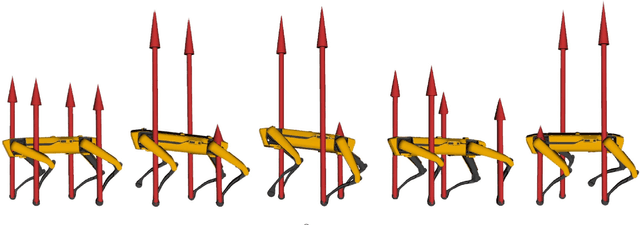

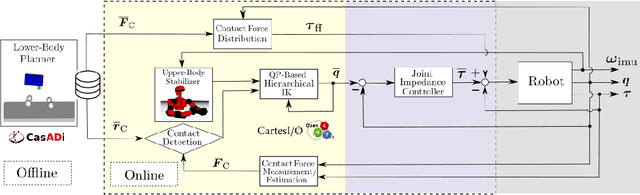

Mar 12, 2021

Abstract: The kinematic features of a centaur-type humanoid platform, combined with powerful actuation, enable experimentation with a variety of agile and dynamic motions. However, the higher number of degrees of freedom and the increased weight of the system, compared to bipedal and quadrupedal counterparts, pose significant research challenges in terms of computational load and real-world implementation. To this end, this work presents a control architecture for performing agile actions on torque-controlled platforms which, for computational reasons, decouples offline optimal control planning of lower-body primitives, based on a template kinematic model, from online control of the upper-body motion to maintain balance. Three stabilizing strategies are presented and their performance is compared on two types of simulated jumps, while experimental validation is performed on a half-squat jump with the CENTAURO robot.

Curved patch mapping and tracking for irregular terrain modeling: Application to bipedal robot foot placement

Apr 05, 2020

Abstract: Legged robots need to make contact with irregular surfaces when operating in unstructured natural terrain. Representing and perceiving these areas to reason about potential contacts between a robot and its surrounding environment is still largely an open problem. This paper introduces a new framework to model and map local rough terrain surfaces for tasks such as bipedal robot foot placement. The system operates in real time on data from an RGB-D sensor and an IMU. We introduce a set of parametrized patch models and an algorithm to fit them to the environment. Potential contacts are identified as bounded curved patches of approximately the same size as the robot's foot sole. The fitting algorithm includes sparse seed point sampling, point cloud neighborhood search, and patch fitting and validation. We also present a mapping and tracking system in which patches are maintained in a local spatial map around the robot as it moves. A bio-inspired sampling algorithm is introduced for finding salient contacts. We include a dense volumetric fusion layer for spatiotemporal tracking, which uses multiple depth measurements to reconstruct a local point cloud. We present experimental results on a mini-biped robot that performs foot placements on rocks using a 3D foothold perception system built on the developed patch mapping and tracking framework.

* arXiv admin note: text overlap with arXiv:1612.06164
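
The patch fitting and validation step can be illustrated with a generic least-squares fit of a paraboloid patch z = a·x² + b·xy + c·y² + d·x + e·y + f to a neighbourhood of points. This is a minimal sketch, assuming the points are already expressed in a local frame whose z axis roughly follows the surface normal; the paper's actual parametrized patch models and validation criteria are not reproduced here, and the 5 mm RMS acceptance threshold in `is_valid_patch` is a hypothetical choice.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def _features(x: float, y: float) -> List[float]:
    # Basis for z = a*x^2 + b*x*y + c*y^2 + d*x + e*y + f
    return [x * x, x * y, y * y, x, y, 1.0]


def _solve(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve m @ p = v by Gaussian elimination with partial pivoting."""
    n = len(v)
    aug = [row[:] + [v[i]] for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("degenerate point set: not enough spread to fit a patch")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    p = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = aug[r][n] - sum(aug[r][k] * p[k] for k in range(r + 1, n))
        p[r] = s / aug[r][r]
    return p


def fit_paraboloid(points: Sequence[Point]) -> List[float]:
    """Least-squares coefficients [a, b, c, d, e, f] via the normal equations."""
    if len(points) < 6:
        raise ValueError("need at least 6 points for 6 coefficients")
    ata = [[0.0] * 6 for _ in range(6)]
    atz = [0.0] * 6
    for x, y, z in points:
        phi = _features(x, y)
        for i in range(6):
            atz[i] += phi[i] * z
            for j in range(6):
                ata[i][j] += phi[i] * phi[j]
    return _solve(ata, atz)


def rms_residual(coeffs: Sequence[float], points: Sequence[Point]) -> float:
    errs = [z - sum(c * f for c, f in zip(coeffs, _features(x, y))) for x, y, z in points]
    return math.sqrt(sum(e * e for e in errs) / len(errs))


def is_valid_patch(points: Sequence[Point], max_rms: float = 0.005) -> bool:
    # Hypothetical validation rule: accept the patch if the fit error is small (5 mm default).
    return rms_residual(fit_paraboloid(points), points) <= max_rms


if __name__ == "__main__":
    # Synthetic foot-sized neighbourhood (10 cm x 10 cm grid) on a gently curved surface.
    pts = [(x * 0.01, y * 0.01, 0.5 * (x * 0.01) ** 2 - 0.2 * (y * 0.01) ** 2 + 0.03)
           for x in range(-5, 6) for y in range(-5, 6)]
    print([round(c, 4) for c in fit_paraboloid(pts)], is_valid_patch(pts))
```

Solving the normal equations keeps the sketch dependency-free; a production implementation would more likely use a QR- or SVD-based least-squares solver for better numerical conditioning.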

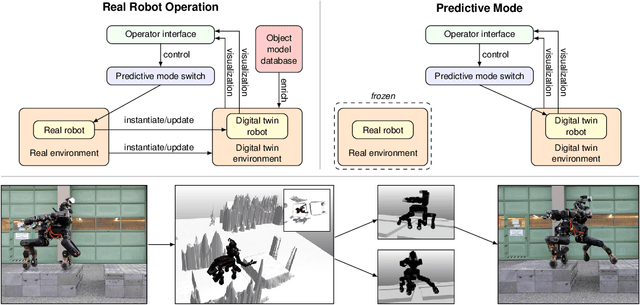

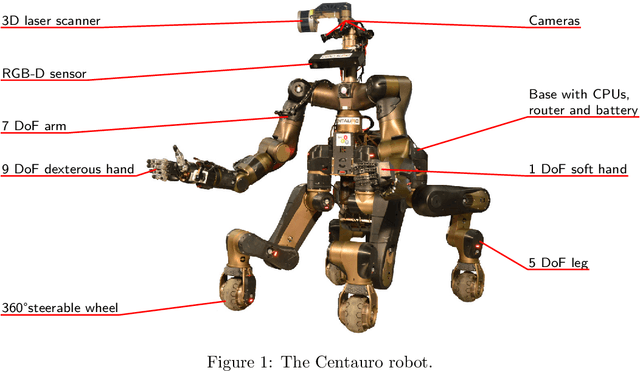

Flexible Disaster Response of Tomorrow -- Final Presentation and Evaluation of the CENTAURO System

Sep 19, 2019

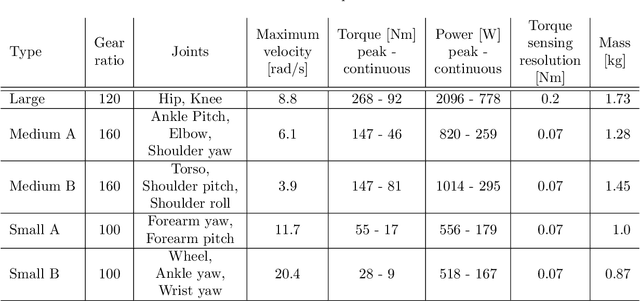

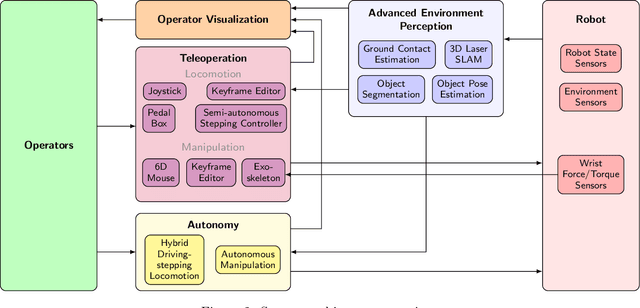

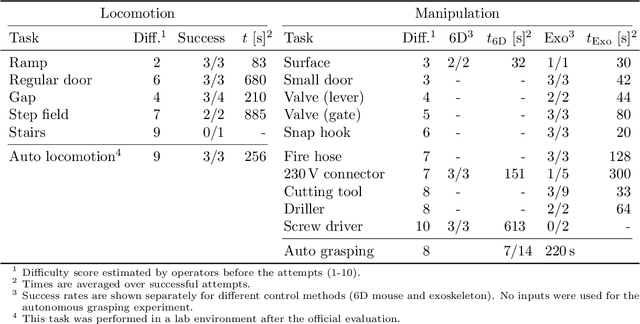

Abstract: Mobile manipulation robots have high potential to support rescue forces in disaster-response missions. Despite the difficulties imposed by real-world scenarios, robots are promising for performing mission tasks from a safe distance. In the CENTAURO project, we developed a disaster-response system consisting of the highly flexible Centauro robot and suitable control interfaces, including an immersive telepresence suit and support-operator controls at different levels of autonomy. In this article, we give an overview of the final CENTAURO system. In particular, we explain several high-level design decisions and how they were derived from the requirements and extensive experience of Kerntechnische Hilfsdienst GmbH, Karlsruhe, Germany (KHG). We focus on recently integrated components and report on a systematic evaluation that demonstrated system capabilities and revealed valuable insights.

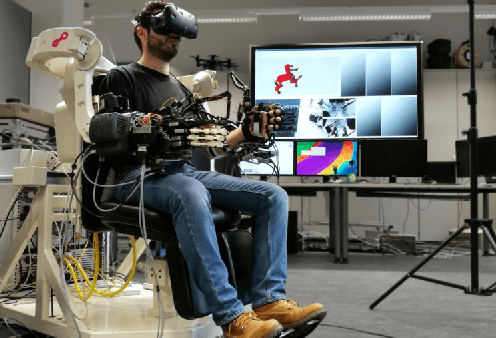

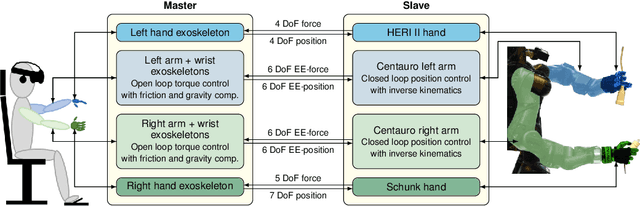

Remote Mobile Manipulation with the Centauro Robot: Full-body Telepresence and Autonomous Operator Assistance

Aug 05, 2019

Abstract: Solving mobile manipulation tasks in inaccessible and dangerous environments is an important application of robots to support humans. Example domains are the construction and maintenance of manned and unmanned stations on the moon and other planets. Suitable platforms require flexible and robust hardware, a locomotion approach that allows navigating a wide variety of terrains, dexterous manipulation capabilities, and corresponding user interfaces. We present the CENTAURO system, which has been designed for these requirements and consists of the Centauro robot and a set of advanced operator interfaces with complementary strengths, enabling the system to solve a wide range of realistic mobile manipulation tasks. The robot has a centaur-like body plan and is driven by torque-controlled compliant actuators. Four articulated legs ending in steerable wheels allow for omnidirectional driving as well as for stepping. An anthropomorphic upper body with two arms ending in five-finger hands enables human-like manipulation. The robot perceives its environment through a suite of multimodal sensors. The resulting platform is more complex than most known systems, which puts the focus on a suitable operator interface. An operator can control the robot through a telepresence suit, which allows flexibly solving a large variety of mobile manipulation tasks. Locomotion and manipulation functionalities at different levels of autonomy support the operation. The proposed user interfaces enable solving a wide variety of tasks without prior task-specific training. The integrated system is evaluated in numerous teleoperated experiments, which are described along with lessons learned.

V2CNet: A Deep Learning Framework to Translate Videos to Commands for Robotic Manipulation

Mar 23, 2019

Abstract: We propose V2CNet, a new deep learning framework to automatically translate demonstration videos into commands that can be directly used in robotic applications. V2CNet has two branches and aims to understand the demonstration video in a fine-grained manner. The first branch has an encoder-decoder architecture that encodes the visual features and sequentially generates the output words as a command, while the second branch uses a Temporal Convolutional Network (TCN) to learn the fine-grained actions. By jointly training both branches, the network is able to model the sequential information of the command while effectively encoding the fine-grained actions. Experimental results on our new large-scale dataset show that V2CNet outperforms recent state-of-the-art methods by a substantial margin, and its output can be applied in real robotic applications. The source code and trained models will be made available.
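
The TCN branch is built from temporal convolutions. As a generic illustration of that building block (not V2CNet's actual layer configuration, which the abstract does not specify), the sketch below implements a single-channel dilated causal 1-D convolution: the output at time t depends only on inputs at t, t - d, t - 2d, ..., so stacking layers with growing dilation d enlarges the temporal receptive field without looking at future frames. The kernel weights and the dilation schedule are made-up values.

```python
from typing import List, Sequence


def causal_dilated_conv1d(x: Sequence[float], weights: Sequence[float], dilation: int = 1) -> List[float]:
    """y[t] = sum_k weights[k] * x[t - k*dilation], zero-padded for t - k*dilation < 0."""
    if dilation < 1:
        raise ValueError("dilation must be >= 1")
    y = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation
            if idx >= 0:  # causal: only current and past samples contribute
                acc += w * x[idx]
        y.append(acc)
    return y


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Number of input steps seen by one output after stacking the given layers."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


if __name__ == "__main__":
    h = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]  # unit impulse at t = 0
    for d in (1, 2, 4):  # hypothetical dilation schedule
        h = causal_dilated_conv1d(h, weights=[0.5, 0.5], dilation=d)
    print(h)
    print(receptive_field(kernel_size=2, dilations=[1, 2, 4]))
```

Applying three layers with kernel size 2 and dilations 1, 2 and 4 to a unit impulse spreads it evenly over all 8 frames (each output 0.125), matching a receptive field of 1 + (2 - 1)(1 + 2 + 4) = 8 steps.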
