Diego Rodriguez

Dexterity Inc

A Self-Supervised Framework for Space Object Behaviour Characterisation

Apr 08, 2025

Abstract:Foundation Models, pre-trained on large unlabelled datasets before task-specific fine-tuning, are increasingly being applied to specialised domains. Recent examples include ClimaX for climate and Clay for satellite Earth observation, but a Foundation Model for Space Object Behavioural Analysis has not yet been developed. As orbital populations grow, automated methods for characterising space object behaviour are crucial for space safety. We present a Space Safety and Sustainability Foundation Model focusing on space object behavioural analysis using light curves (LCs). We implemented a Perceiver-Variational Autoencoder (VAE) architecture, pre-trained with self-supervised reconstruction and masked reconstruction on 227,000 LCs from the MMT-9 observatory. The VAE enables anomaly detection, motion prediction, and LC generation. We fine-tuned the model for anomaly detection and motion prediction using two independent LC simulators (CASSANDRA and GRIAL respectively), using CAD models of boxwing, Sentinel-3, SMOS, and Starlink platforms. Our pre-trained model achieved a reconstruction error of 0.01%, identifying potentially anomalous light curves through reconstruction difficulty. After fine-tuning, the model scored 88% and 82% accuracy, with ROC AUC scores of 0.90 and 0.95, in anomaly detection and motion mode prediction (sun-pointing, spin, etc.) respectively. Analysis of high-confidence anomaly predictions on real data revealed distinct patterns including characteristic object profiles and satellite glinting. Here, we demonstrate how self-supervised learning can simultaneously enable anomaly detection, motion prediction, and synthetic data generation from rich representations learned in pre-training. Our work therefore supports space safety and sustainability through automated monitoring and simulation capabilities.
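The idea of flagging light curves by reconstruction difficulty can be illustrated with a minimal sketch. This is not the paper's model: the function names, the mean-squared-error metric, the threshold, and the toy values below are all assumptions chosen for illustration, and the reconstructions are hard-coded rather than produced by an autoencoder.

```python
from statistics import mean

def reconstruction_error(original, reconstructed):
    """Mean squared error between a light curve and its reconstruction."""
    if len(original) != len(reconstructed):
        raise ValueError("sequences must have equal length")
    return mean((o - r) ** 2 for o, r in zip(original, reconstructed))

def flag_anomalies(errors, threshold):
    """Return indices of light curves whose error exceeds the threshold."""
    return [i for i, e in enumerate(errors) if e > threshold]

# Toy (observed, reconstructed) pairs; the values are made up.
pairs = [
    ([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]),  # reconstructed exactly
    ([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]),  # poorly reconstructed
]
errors = [reconstruction_error(o, r) for o, r in pairs]

# The threshold is a hypothetical value; in practice it would be tuned.
anomalous = flag_anomalies(errors, threshold=0.5)
```

Here only the second curve is flagged, because its reconstruction deviates strongly from the observation.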

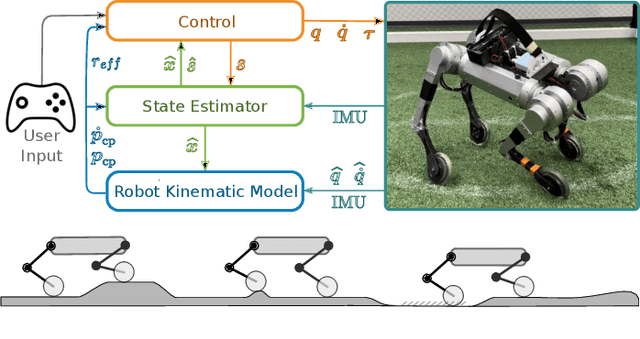

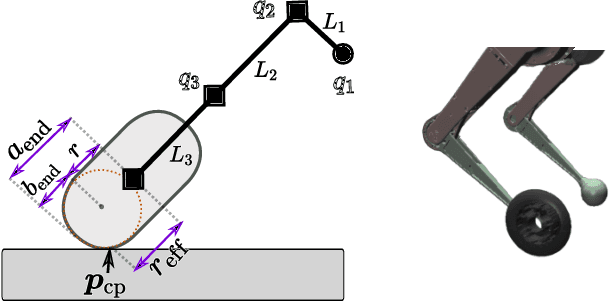

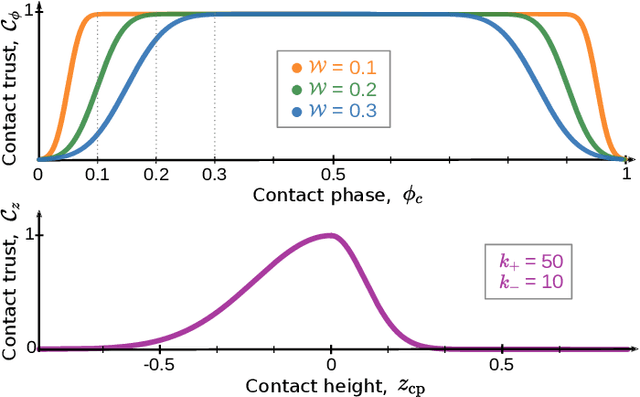

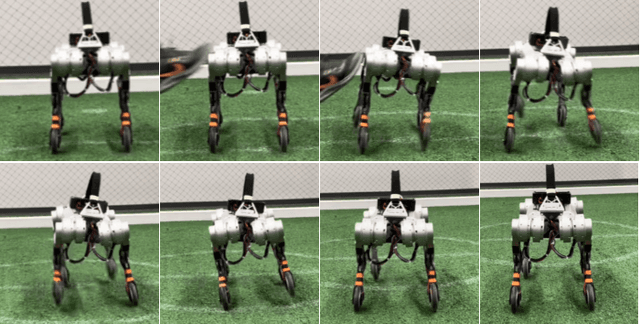

State Estimation for Hybrid Locomotion of Driving-Stepping Quadrupeds

Nov 21, 2022

Abstract:Fast and versatile locomotion can be achieved with wheeled quadruped robots that drive quickly on flat terrain, but are also able to overcome challenging terrain by adapting their body pose and by making steps. In this paper, we present a state estimation approach for four-legged robots with non-steerable wheels that enables hybrid driving-stepping locomotion capabilities. We formulate a Kalman Filter (KF) for state estimation that integrates driven wheels into the filter equations and estimates the robot state (position and velocity) as well as the contribution of driving with wheels to the above state. Our estimation approach allows us to use the control framework of the Mini Cheetah quadruped robot with minor modifications. We tested our approach on this robot that we augmented with actively driven wheels in simulation and in the real world. The experimental results are available at https://www.ais.uni-bonn.de/%7Ehosseini/se-dsq .
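As a rough illustration of how a wheel velocity can enter a Kalman filter, the sketch below runs a scalar filter on position only, using the wheel speed as a control input in the prediction step. It is a textbook simplification under assumed names and noise values, not the formulation used in the paper, which estimates a richer state.

```python
def kf_predict(x, p, wheel_velocity, dt, process_noise):
    """Propagate the position estimate using the wheel velocity as input."""
    x_pred = x + wheel_velocity * dt
    p_pred = p + process_noise
    return x_pred, p_pred

def kf_update(x, p, measurement, measurement_noise):
    """Correct the predicted position with a direct position measurement."""
    gain = p / (p + measurement_noise)
    x_new = x + gain * (measurement - x)
    p_new = (1.0 - gain) * p
    return x_new, p_new

# Hypothetical numbers: start at 0 m with variance 1, drive at 1 m/s for 1 s.
x, p = kf_predict(0.0, 1.0, wheel_velocity=1.0, dt=1.0, process_noise=0.0)

# A (made-up) position measurement of 2 m with variance 1 pulls the estimate up.
x, p = kf_update(x, p, measurement=2.0, measurement_noise=1.0)
```

With equal prediction and measurement variances, the gain is 0.5 and the estimate lands halfway between the predicted and measured positions.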

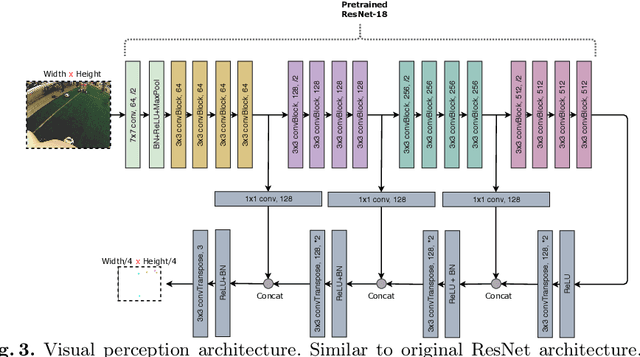

Mapless Humanoid Navigation Using Learned Latent Dynamics

Aug 09, 2021

Abstract:In this paper, we propose a novel Deep Reinforcement Learning approach to address the mapless navigation problem, in which the locomotion actions of a humanoid robot are taken online based on the knowledge encoded in learned models. Planning happens by generating open-loop trajectories in a learned latent space that captures the dynamics of the environment. Our planner considers visual (RGB images) and non-visual observations (e.g., attitude estimations). This gives the agent awareness not only of the scenario, but also of its own state. In addition, we incorporate a termination likelihood predictor model as an auxiliary loss function of the control policy, which enables the agent to anticipate terminal states of success and failure. In this manner, the sample efficiency of the approach for episodic tasks is increased. Our model is evaluated on the NimbRo-OP2X humanoid robot that navigates in scenes avoiding collisions efficiently in simulation and with the real hardware.

DeepWalk: Omnidirectional Bipedal Gait by Deep Reinforcement Learning

Jun 01, 2021

Abstract:Bipedal walking is one of the most difficult but exciting challenges in robotics. The difficulties arise from the complexity of high-dimensional dynamics, sensing and actuation limitations combined with real-time and computational constraints. Deep Reinforcement Learning (DRL) holds the promise to address these issues by fully exploiting the robot dynamics with minimal craftsmanship. In this paper, we propose a novel DRL approach that enables an agent to learn omnidirectional locomotion for humanoid (bipedal) robots. Notably, the locomotion behaviors are accomplished by a single control policy (a single neural network). We achieve this by introducing a new curriculum learning method that gradually increases the task difficulty by scheduling target velocities. In addition, our method does not require reference motions, which facilitates its application to robots with different kinematics and reduces the overall complexity. Finally, different strategies for sim-to-real transfer are presented which allow us to transfer the learned policy to a real humanoid robot.
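One simple way to schedule target velocities in a curriculum is to widen the range of commanded speeds as training progresses. The sketch below assumes a linear schedule; the function names, the speed limits, and the linear form are hypothetical and are not taken from the paper.

```python
import random

def max_target_speed(step, total_steps, v_start=0.1, v_end=0.6):
    """Linearly grow the largest allowed target speed (m/s) over training."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return v_start + frac * (v_end - v_start)

def sample_target_velocity(step, total_steps, rng):
    """Draw a target velocity from the range allowed at this training step."""
    limit = max_target_speed(step, total_steps)
    return rng.uniform(-limit, limit)

rng = random.Random(0)  # fixed seed so the sketch is repeatable
early = sample_target_velocity(step=0, total_steps=1000, rng=rng)
late = sample_target_velocity(step=1000, total_steps=1000, rng=rng)
```

Early in training the sampled commands stay within a narrow band around zero, and the band reaches its full width only at the end of the schedule.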

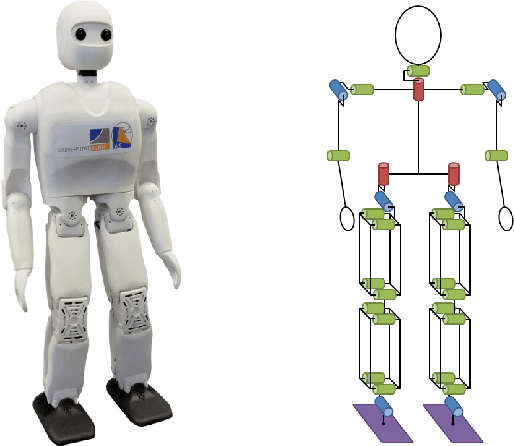

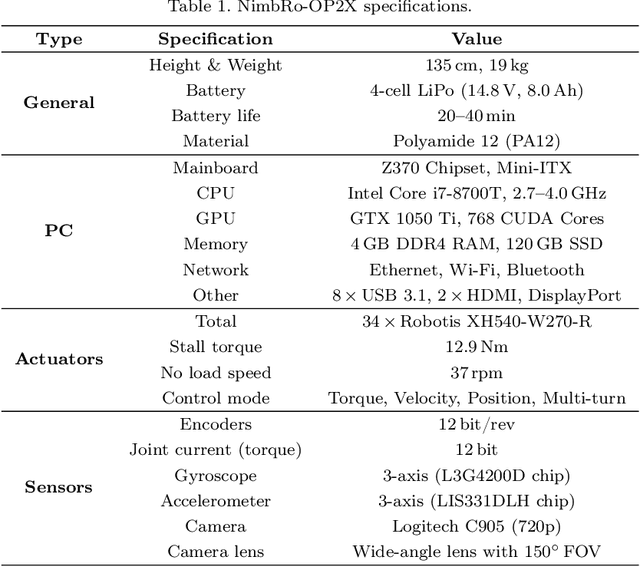

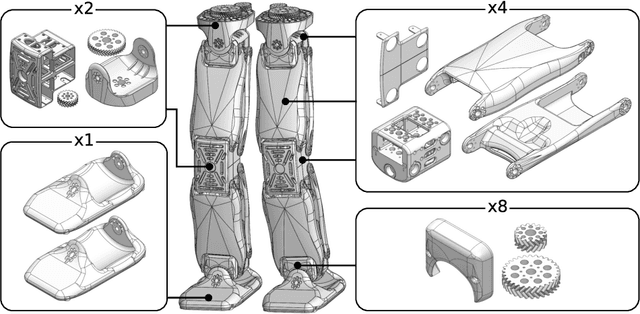

NimbRo-OP2X: Affordable Adult-sized 3D-printed Open-Source Humanoid Robot for Research

Oct 19, 2020

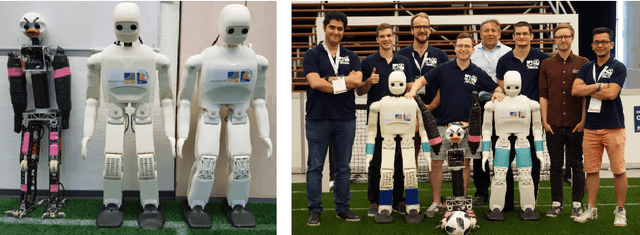

Abstract:For several years, high development and production costs of humanoid robots restricted researchers interested in working in the field. To overcome this problem, several research groups have opted to work with simulated or smaller robots, whose acquisition costs are significantly lower. However, due to scale differences and imperfect simulation replicability, results may not be directly reproducible on real, adult-sized robots. In this paper, we present the NimbRo-OP2X, a capable and affordable adult-sized humanoid platform aiming to significantly lower the entry barrier for humanoid robot research. With a height of 135 cm and weight of only 19 kg, the robot can interact in an unmodified, human environment without special safety equipment. Modularity in hardware and software allows this platform enough flexibility to operate in different scenarios and applications with minimal effort. The robot is equipped with an on-board computer with GPU, which enables the implementation of state-of-the-art approaches for object detection and human perception demanded by areas such as manipulation and human-robot interaction. Finally, the capabilities of the NimbRo-OP2X, especially in terms of locomotion stability and visual perception, are evaluated. This includes the performance at RoboCup 2018, where NimbRo-OP2X won all possible awards in the AdultSize class.

Category-Level 3D Non-Rigid Registration from Single-View RGB Images

Aug 17, 2020

Abstract:In this paper, we propose a novel approach to solve the 3D non-rigid registration problem from RGB images using Convolutional Neural Networks (CNNs). Our objective is to find a deformation field (typically used for transferring knowledge between instances, e.g., grasping skills) that warps a given 3D canonical model into a novel instance observed by a single-view RGB image. This is done by training a CNN that infers a deformation field for the visible parts of the canonical model and by employing a learned shape (latent) space for inferring the deformations of the occluded parts. As a result of the registration, the observed model is reconstructed. Because our method does not need depth information, it can register objects that are typically hard to perceive with RGB-D sensors, e.g., with transparent or shiny surfaces. Even without depth data, our approach outperforms the Coherent Point Drift (CPD) registration method for the evaluated object categories.

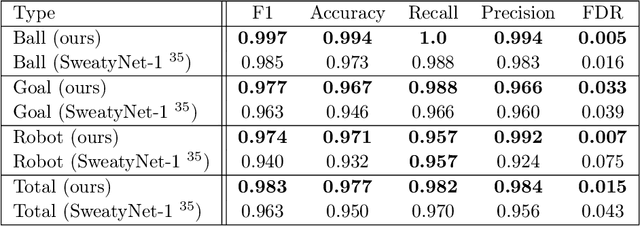

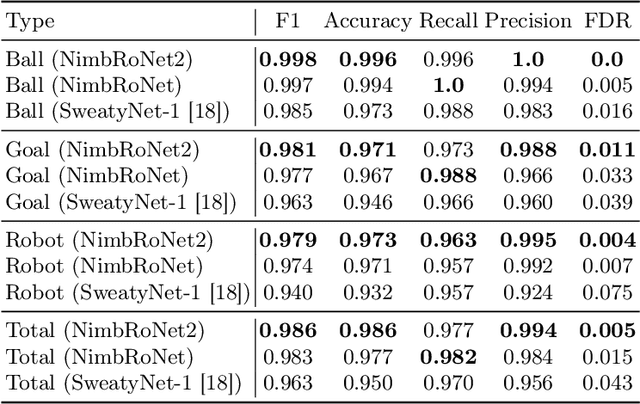

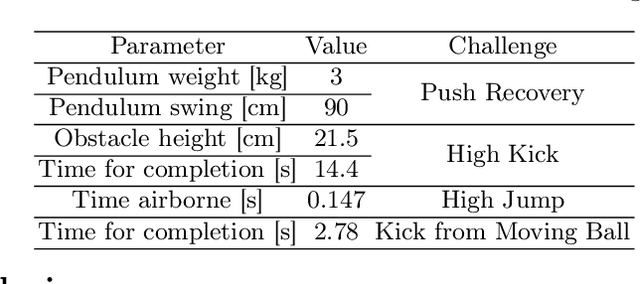

RoboCup 2019 AdultSize Winner NimbRo: Deep Learning Perception, In-Walk Kick, Push Recovery, and Team Play Capabilities

Dec 17, 2019

Abstract:Individual and team capabilities are challenged every year by rule changes and the increasing performance of the soccer teams at RoboCup Humanoid League. For RoboCup 2019 in the AdultSize class, the number of players (2 vs. 2 games) and the field dimensions were increased, which demanded team coordination and robust visual perception and localization modules. In this paper, we present the latest developments that led team NimbRo to win the soccer tournament, drop-in games, technical challenges and the Best Humanoid Award of the RoboCup Humanoid League 2019 in Sydney. These developments include a deep learning vision system, in-walk kicks, step-based push-recovery, and team play strategies.

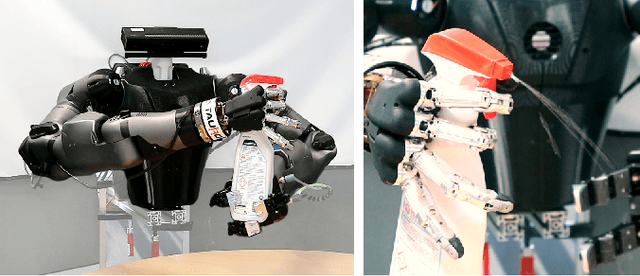

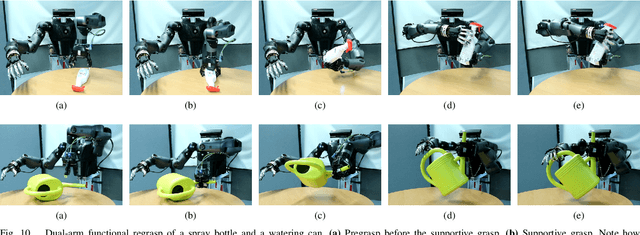

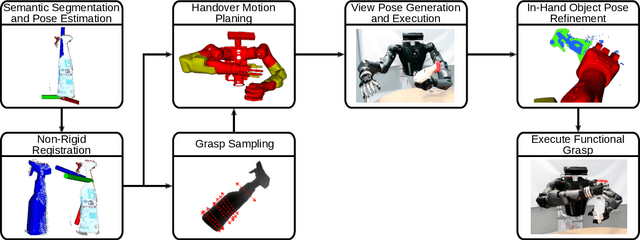

Autonomous Bimanual Functional Regrasping of Novel Object Class Instances

Oct 01, 2019

Abstract:In human-made scenarios, robots need to be able to fully operate objects in their surroundings, i.e., objects are required to be functionally grasped rather than only picked. This imposes very strict constraints on the object pose such that a direct grasp can be performed. Inspired by the anthropomorphic nature of humanoid robots, we propose an approach that first grasps an object with one hand, obtaining full control over its pose, and performs the functional grasp with the second hand subsequently. Thus, we develop a fully autonomous pipeline for dual-arm functional regrasping of novel familiar objects, i.e., objects never seen before that belong to a known object category, e.g., spray bottles. This process involves semantic segmentation, object pose estimation, non-rigid mesh registration, grasp sampling, handover pose generation and in-hand pose refinement. The latter is used to compensate for the unpredictable object movement during the first grasp. The approach is applied to a human-like upper body. To the best knowledge of the authors, this is the first system that exhibits autonomous bimanual functional regrasping capabilities. We demonstrate that our system yields reliable success rates and can be applied on-line to real-world tasks using only one off-the-shelf RGB-D sensor.

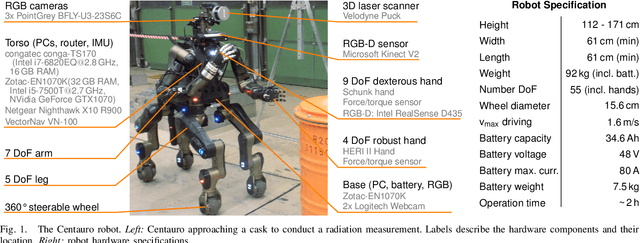

Flexible Disaster Response of Tomorrow -- Final Presentation and Evaluation of the CENTAURO System

Sep 19, 2019

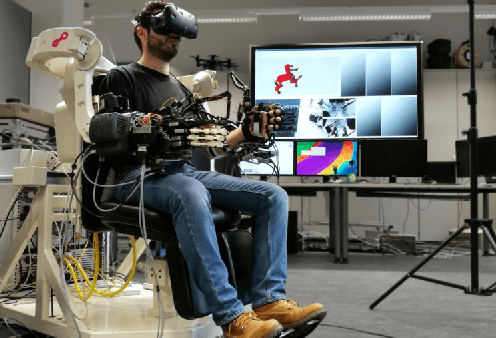

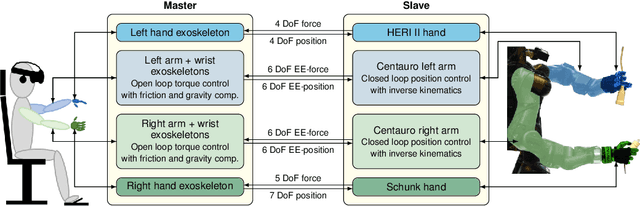

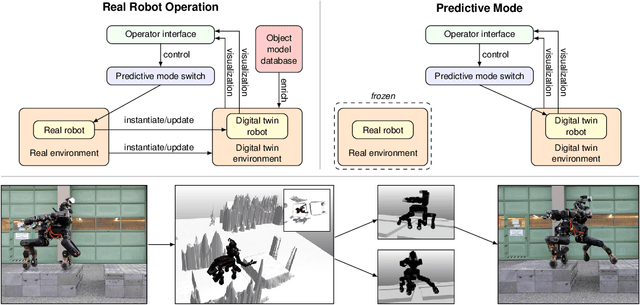

Abstract:Mobile manipulation robots have high potential to support rescue forces in disaster-response missions. Despite the difficulties imposed by real-world scenarios, robots are promising for performing mission tasks from a safe distance. In the CENTAURO project, we developed a disaster-response system which consists of the highly flexible Centauro robot and suitable control interfaces including an immersive tele-presence suit and support-operator controls on different levels of autonomy. In this article, we give an overview of the final CENTAURO system. In particular, we explain several high-level design decisions and how those were derived from requirements and extensive experience of Kerntechnische Hilfsdienst GmbH, Karlsruhe, Germany (KHG). We focus on components which were recently integrated and report on a systematic evaluation which demonstrated system capabilities and revealed valuable insights.

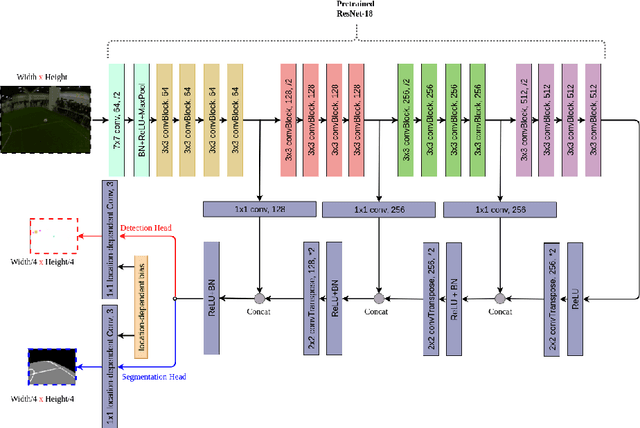

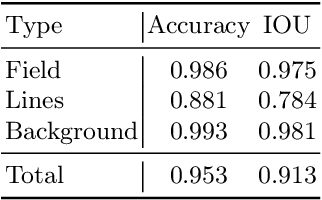

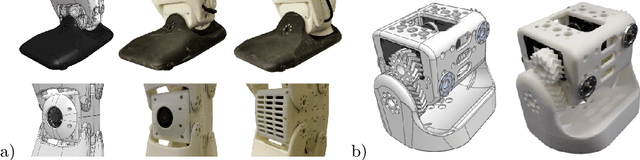

NimbRo Robots Winning RoboCup 2018 Humanoid AdultSize Soccer Competitions

Sep 05, 2019

Abstract:Over the past few years, the Humanoid League rules have changed towards more realistic and challenging game environments, which encourage teams to advance their robot soccer performances. In this paper, we present the software and hardware designs that led our team NimbRo to win the competitions in the AdultSize league -- including the soccer tournament, the drop-in games, and the technical challenges at RoboCup 2018 in Montreal. Altogether, this resulted in NimbRo winning the Best Humanoid Award. In particular, we describe our deep-learning approaches for visual perception and our new fully 3D printed robot NimbRo-OP2X.
