Antonio Di Guardo

Remote Mobile Manipulation with the Centauro Robot: Full-body Telepresence and Autonomous Operator Assistance

Aug 05, 2019

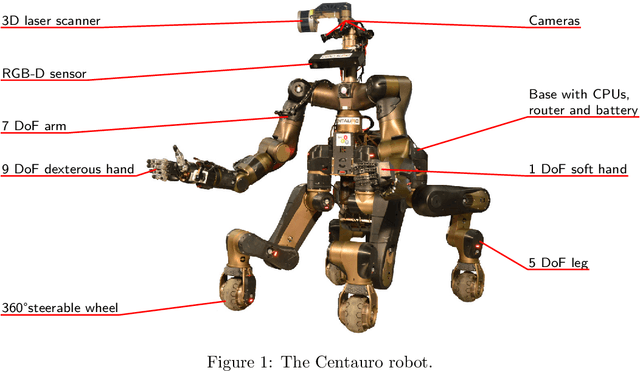

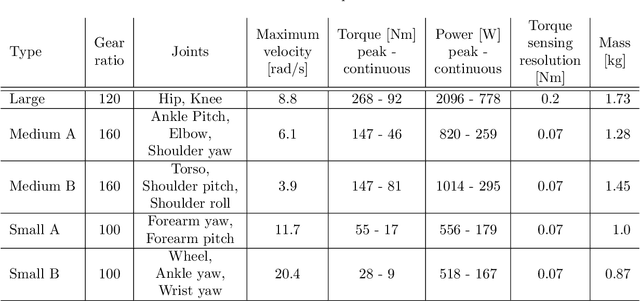

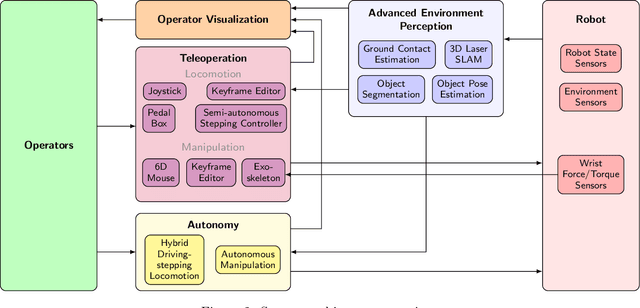

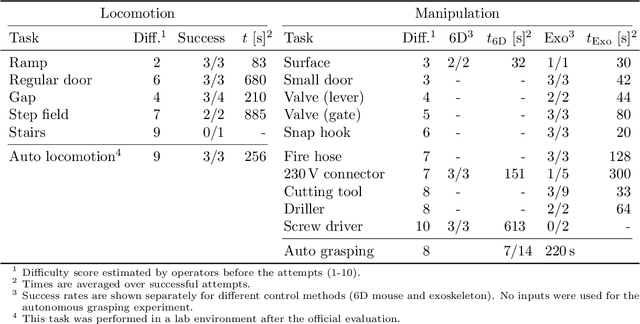

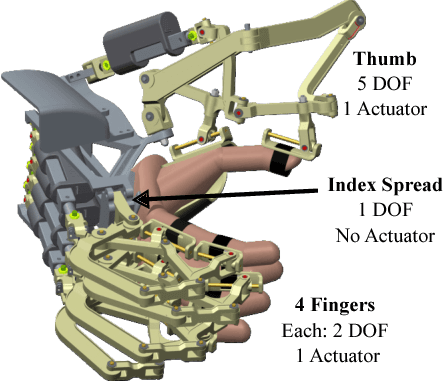

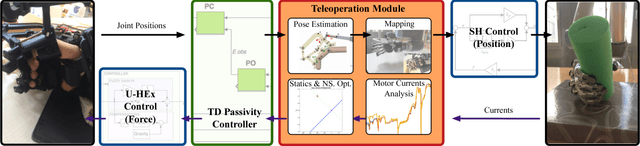

Abstract:Solving mobile manipulation tasks in inaccessible and dangerous environments is an important application of robots to support humans. Example domains are construction and maintenance of manned and unmanned stations on the moon and other planets. Suitable platforms require flexible and robust hardware, a locomotion approach that allows for navigating a wide variety of terrains, dexterous manipulation capabilities, and respective user interfaces. We present the CENTAURO system which has been designed for these requirements and consists of the Centauro robot and a set of advanced operator interfaces with complementary strength enabling the system to solve a wide range of realistic mobile manipulation tasks. The robot possesses a centaur-like body plan and is driven by torque-controlled compliant actuators. Four articulated legs ending in steerable wheels allow for omnidirectional driving as well as for making steps. An anthropomorphic upper body with two arms ending in five-finger hands enables human-like manipulation. The robot perceives its environment through a suite of multimodal sensors. The resulting platform complexity goes beyond the complexity of most known systems which puts the focus on a suitable operator interface. An operator can control the robot through a telepresence suit, which allows for flexibly solving a large variety of mobile manipulation tasks. Locomotion and manipulation functionalities on different levels of autonomy support the operation. The proposed user interfaces enable solving a wide variety of tasks without previous task-specific training. The integrated system is evaluated in numerous teleoperated experiments that are described along with lessons learned.

Learning Postural Synergies for Categorical Grasping through Shape Space Registration

Oct 18, 2018

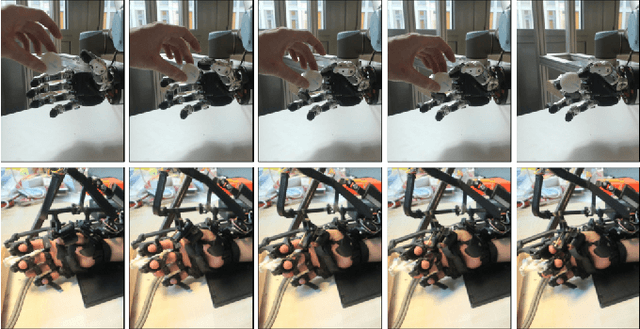

Abstract:Every time a person encounters an object with a given degree of familiarity, he/she immediately knows how to grasp it. Adaptation of the movement of the hand according to the object geometry happens effortlessly because of the accumulated knowledge of previous experiences grasping similar objects. In this paper, we present a novel method for inferring grasp configurations based on the object shape. Grasping knowledge is gathered in a synergy space of the robotic hand built by following a human grasping taxonomy. The synergy space is constructed through human demonstrations employing a exoskeleton that provides force feedback, which provides the advantage of evaluating the quality of the grasp. The shape descriptor is obtained by means of a categorical non-rigid registration that encodes typical intra-class variations. This approach is especially suitable for on-line scenarios where only a portion of the object's surface is observable. This method is demonstrated through simulation and real robot experiments by grasping objects never seen before by the robot.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge