Antonio Frisoli

A Soft Fabric-Based Thermal Haptic Device for VR and Teleoperation

Aug 28, 2025

Abstract: This paper presents a novel fabric-based thermal-haptic interface for virtual reality and teleoperation. It integrates pneumatic actuation and conductive fabric in an ultra-lightweight design that weighs only 2 g per finger unit. By embedding heating elements within textile pneumatic chambers, the system delivers modulated pressure and thermal stimuli to the fingerpads through a fully soft, wearable interface. Comprehensive characterization demonstrates rapid thermal modulation with heating rates of up to 3 °C/s, enabling dynamic thermal feedback during virtual or teleoperated interactions. The pneumatic subsystem generates forces of up to 8.93 N at 50 kPa, while optimizing the clearance between fingerpad and actuator improves cooling efficiency with minimal force reduction. Experimental validation through two user studies shows high temperature identification accuracy (0.98 overall) across three thermal levels and significant manipulation improvements in a virtual pick-and-place task. Results show higher success rates (88.5% to 96.4%, p = 0.029) and more precise force control (p = 0.013) when haptic feedback is enabled, validating the effectiveness of the integrated thermal-haptic approach for advanced human-machine interaction applications.
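
The force and pressure figures can be cross-checked with a back-of-the-envelope estimate. Assuming, as a simplification not stated in the abstract, that the inflated chamber presses on the fingerpad like an ideal piston (F = pA, neglecting fabric stiffness and contact geometry), the implied effective contact area and its equivalent disc diameter are:

$$
A_{\mathrm{eff}} = \frac{F}{p} = \frac{8.93\ \mathrm{N}}{50 \times 10^{3}\ \mathrm{Pa}} \approx 1.79 \times 10^{-4}\ \mathrm{m}^2 \approx 179\ \mathrm{mm}^2,
\qquad
d_{\mathrm{eq}} = \sqrt{\frac{4 A_{\mathrm{eff}}}{\pi}} \approx 15\ \mathrm{mm}
$$

A disc of roughly 15 mm is a plausible fingerpad-sized contact. Because part of the pressure is taken up by membrane tension in a real fabric chamber, the actual inflated area is likely somewhat larger than this estimate.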

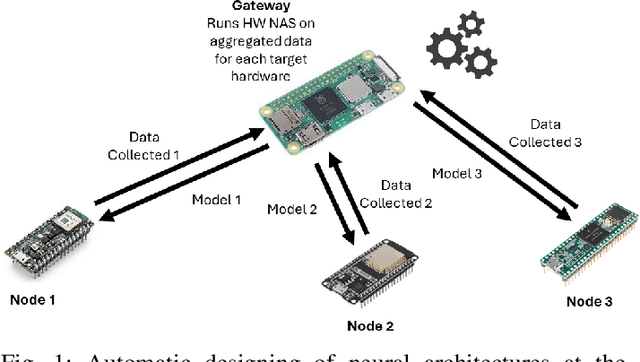

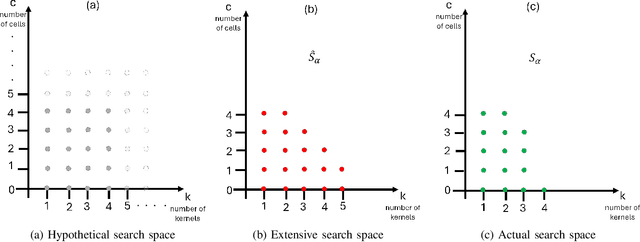

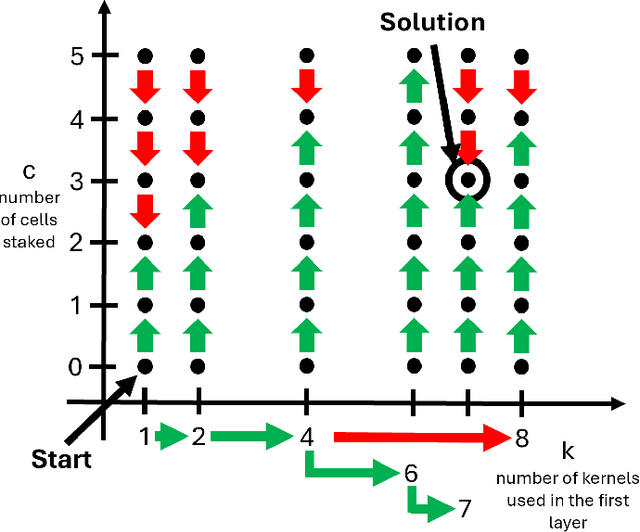

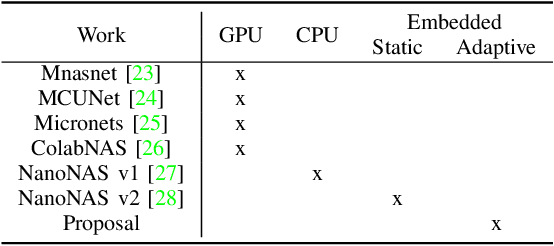

Searching Neural Architectures for Sensor Nodes on IoT Gateways

May 29, 2025

Abstract: This paper presents an automatic method for designing Neural Networks (NNs) at the edge, enabling access to Machine Learning (ML) even in privacy-sensitive Internet of Things (IoT) applications. The proposed method runs on IoT gateways and designs NNs for connected sensor nodes without sharing the collected data outside the local network, keeping the data at the site of collection. This approach has the potential to enable ML for the Healthcare Internet of Things (HIoT) and the Industrial Internet of Things (IIoT), designing hardware-friendly, custom NNs at the edge for personalized healthcare and advanced industrial services such as quality control, predictive maintenance, and fault diagnosis. By preventing data from being disclosed to cloud services, this method safeguards sensitive information, including industrial secrets and personal data. The results of a thorough experimental campaign confirm that, on the Visual Wake Words dataset, the proposed approach can achieve state-of-the-art results with a search procedure that runs in less than 10 hours on a Raspberry Pi Zero 2.

User-centered evaluation of the Wearable Walker lower limb exoskeleton, preliminary assessment based on the Experience protocol

Aug 16, 2024

Abstract: Using lower-limb exoskeletons offers potential advantages in terms of productivity and safety through reduced stress. However, complex issues in human-robot interaction remain open, such as the physiological effects of exoskeletons and their impact on the user's subjective experience. In this work, an innovative exoskeleton, the Wearable Walker, is assessed using the EXPERIENCE benchmarking protocol from the EUROBENCH project. The Wearable Walker is a lower-limb exoskeleton that enhances human abilities, such as carrying loads. The device uses a unique control approach called Blend Control, which provides smooth assistive torques. It runs two models simultaneously: one for when the left foot is grounded and one for when the right foot is grounded. The assistive torques generated by these models are combined to provide continuous, smooth overall assistance, preventing abrupt torque changes due to model switching. The EXPERIENCE protocol consists of walking on flat ground while physiological signals (heart rate, heart rate variability, respiration rate, and galvanic skin response) are recorded, and of completing a questionnaire. The test was performed with five healthy subjects. The scope of the present study is twofold: to evaluate this exoskeleton and its current control system to gain insight into possible improvements, and to present a case study for formal and replicable benchmarking of wearable robots.
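
The abstract does not say how the two models' torques are combined. One simple way to obtain a continuous blend, shown here purely as an illustration, is a convex combination weighted by how much of the body load rests on each foot. The load-based weighting rule, the function names `blend_weight` and `blended_torque`, and all numbers are hypothetical; this is not the Wearable Walker's actual Blend Control law.

```python
import numpy as np


def blend_weight(f_left: float, f_right: float, eps: float = 1e-6) -> float:
    """Hypothetical blending weight in [0, 1]: share of the load on the left foot.

    1.0 means only the left foot is grounded, 0.0 only the right foot.
    """
    left, right = max(f_left, 0.0), max(f_right, 0.0)
    total = left + right
    if total < eps:
        return 0.5  # no load measured on either foot: split evenly
    return left / total


def blended_torque(tau_left_model: np.ndarray, tau_right_model: np.ndarray,
                   f_left: float, f_right: float) -> np.ndarray:
    """Convex combination of the torques proposed by the two stance models."""
    w = blend_weight(f_left, f_right)
    return w * tau_left_model + (1.0 - w) * tau_right_model


if __name__ == "__main__":
    # Illustrative joint torques (N*m) from the "left grounded" and "right grounded" models
    tau_l = np.array([10.0, 5.0])
    tau_r = np.array([2.0, 8.0])
    # Load (N) shifting from the left foot to the right foot during double support
    for f_l in np.linspace(600.0, 0.0, 5):
        print(f_l, blended_torque(tau_l, tau_r, f_l, 600.0 - f_l))
```

Because the weight varies continuously with the load transfer, the commanded torque moves smoothly from one model's output to the other's during double support instead of jumping at a switching instant.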

Colab NAS: Obtaining lightweight task-specific convolutional neural networks following Occam's razor

Dec 15, 2022

Abstract: The current trend of applying transfer learning from CNNs trained on large datasets can be overkill when the target application is a custom, well-delimited problem with enough data to train a network from scratch. On the other hand, training custom, lighter CNNs requires expertise, in the from-scratch case, and/or high-end resources, as in the case of hardware-aware neural architecture search (HW NAS), limiting access to the technology for non-habitual NN developers. For this reason, we present Colab NAS, an affordable HW NAS technique for producing lightweight task-specific CNNs. Its novel derivative-free search strategy, inspired by Occam's razor, obtains state-of-the-art results on the Visual Wake Words dataset in just 4.5 GPU hours using free online GPU services such as Google Colaboratory and Kaggle Kernels.
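
The abstract names a derivative-free search inspired by Occam's razor but does not detail it. The sketch below illustrates the general idea under our own assumptions: start from a very small network and grow it only while doing so yields a meaningful score gain. The (channels, blocks) encoding, the growth rules, `min_gain`, and the `toy_score` function are all hypothetical; in practice `evaluate` would train and validate a CNN.

```python
from typing import Callable, Tuple

# Hypothetical architecture encoding: (channels in the first layer, number of blocks)
Arch = Tuple[int, int]


def occam_search(evaluate: Callable[[Arch], float],
                 start: Arch = (4, 1),
                 max_channels: int = 128,
                 max_blocks: int = 8,
                 min_gain: float = 0.005) -> Tuple[Arch, float]:
    """Greedy, derivative-free search that grows the network only while it pays off.

    From the current best architecture, try doubling the width, then adding one
    block; move to the first candidate whose score beats the current best by at
    least `min_gain`. Stop when no candidate does.
    """
    best, best_score = start, evaluate(start)
    improved = True
    while improved:
        improved = False
        channels, blocks = best
        candidates = []
        if channels * 2 <= max_channels:
            candidates.append((channels * 2, blocks))
        if blocks + 1 <= max_blocks:
            candidates.append((channels, blocks + 1))
        for cand in candidates:
            score = evaluate(cand)
            if score >= best_score + min_gain:
                best, best_score = cand, score
                improved = True
                break  # restart growth from the newly accepted architecture
    return best, best_score


def toy_score(arch: Arch) -> float:
    """Stand-in for training + validation: accuracy saturates at 32 channels / 3 blocks."""
    channels, blocks = arch
    return min(channels, 32) / 32 * 0.5 + min(blocks, 3) / 3 * 0.4


if __name__ == "__main__":
    print(occam_search(toy_score))
```

Since each step only enlarges the model when the validation gain justifies it, the search tends to stop at the simplest architecture that performs well, which also keeps the number of expensive evaluations small.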

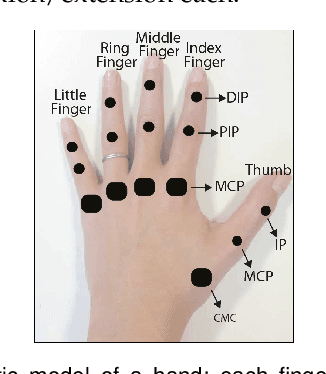

Design and Kinematic Optimization of a Novel Underactuated Robotic Hand Exoskeleton

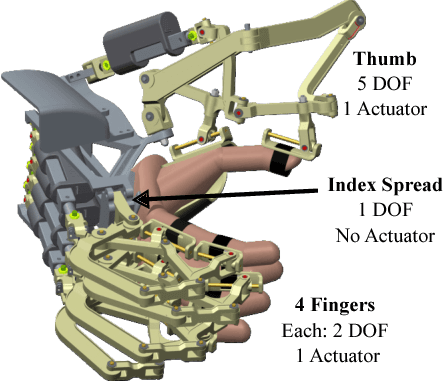

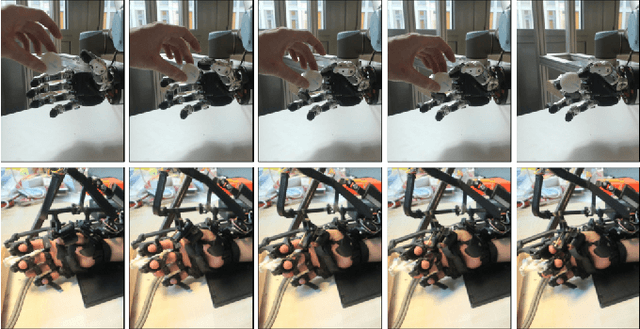

May 07, 2020

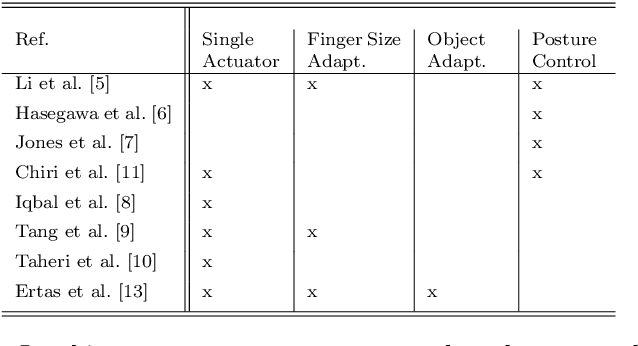

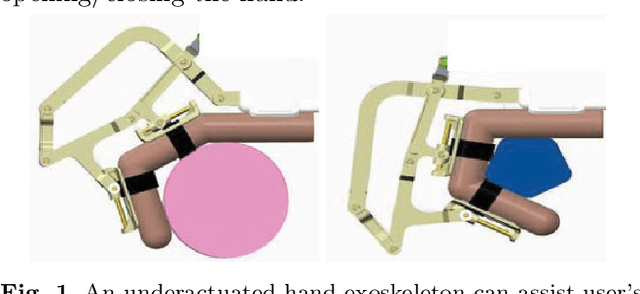

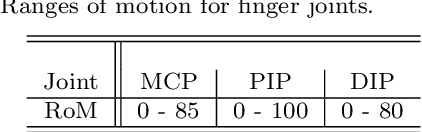

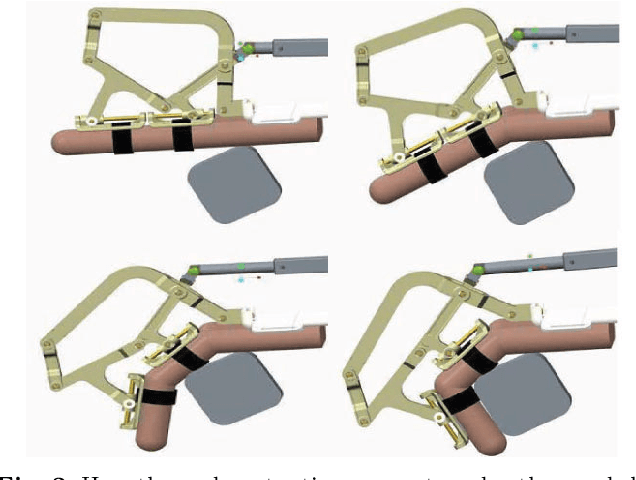

Abstract: This study presents the design and kinematic optimization of a novel, underactuated, linkage-based robotic hand exoskeleton that assists users in performing grasping tasks. The device has been designed to apply only normal forces to the finger phalanges during flexion/extension of the fingers, while automatically adapting to different finger sizes. This makes the device easy to attach to the user's fingers and improves comfort. Analyses of the device's kinematic pose, statics, and grasp stability have been performed. These analyses have been used to optimize the link lengths of the mechanism, ensuring a reasonable range of motion while maximizing force transmission to the finger joints. Finally, the usability of a multi-finger prototype has been tested during grasping tasks with different objects.

* 12 pages

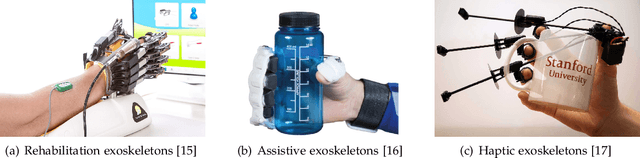

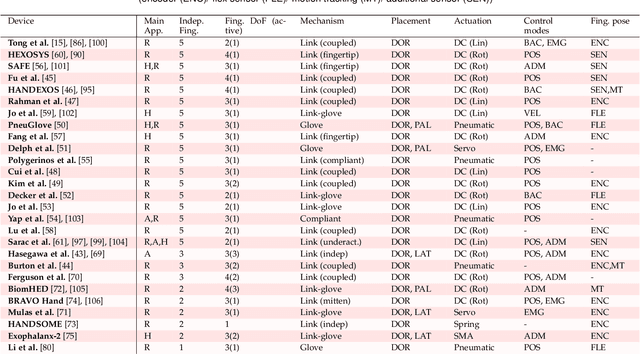

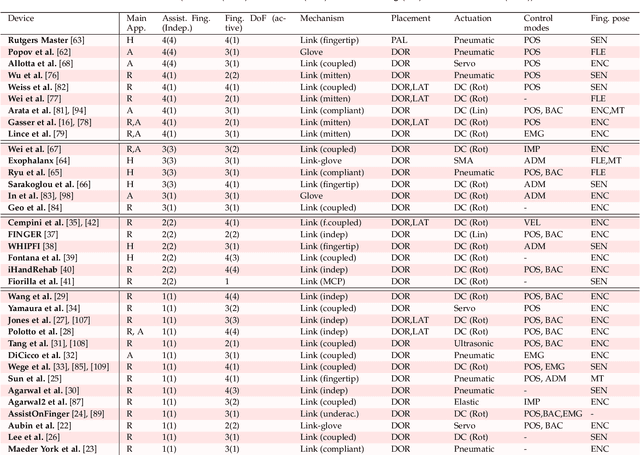

Design Requirements of Generic Hand Exoskeletons and Survey of Hand Exoskeletons for Rehabilitation, Assistive or Haptic Use

Nov 14, 2019

Abstract: Most current hand exoskeletons have been designed specifically for rehabilitation, assistive, or haptic applications in order to simplify the design requirements. Clinical studies on post-stroke rehabilitation have shown that incorporating assistive or haptic applications into physical therapy sessions significantly improves motor learning and the treatment process. Recent technology can lead to the creation of generic hand exoskeletons that are application-agnostic. In this paper, our goal is to create guidelines and best practices for generic exoskeletons by reviewing the literature on current devices. First, we describe each application, briefly explain its design requirements, and list the design choices that achieve these requirements. Then, we detail each design choice by reviewing the existing exoskeletons that adopt it and highlighting its impact on application types. Finally, with the aim of creating efficient generic exoskeletons in the future, we summarize the best practices in the literature.

* 15 pages

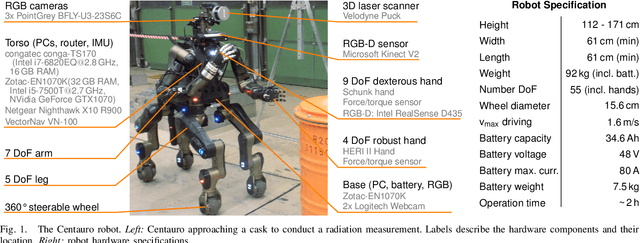

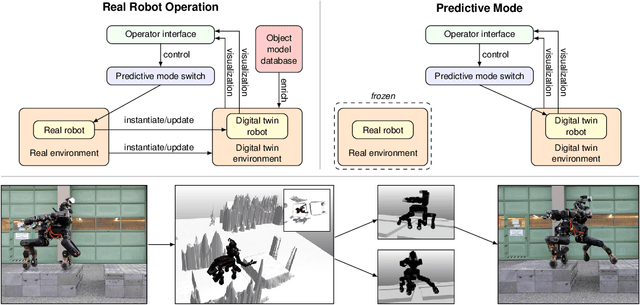

Flexible Disaster Response of Tomorrow -- Final Presentation and Evaluation of the CENTAURO System

Sep 19, 2019

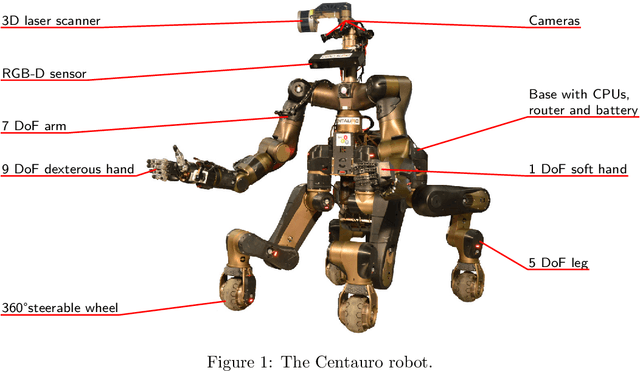

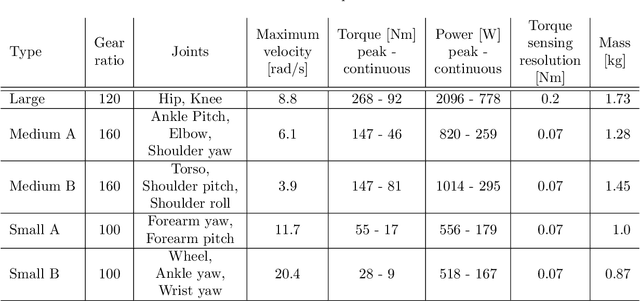

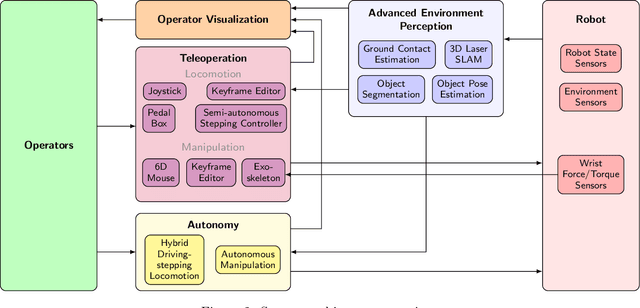

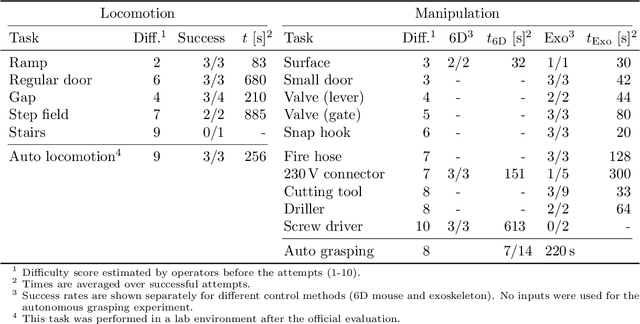

Abstract: Mobile manipulation robots have high potential to support rescue forces in disaster-response missions. Despite the difficulties imposed by real-world scenarios, robots show promise for performing mission tasks from a safe distance. In the CENTAURO project, we developed a disaster-response system that consists of the highly flexible Centauro robot and suitable control interfaces, including an immersive telepresence suit and support-operator controls at different levels of autonomy. In this article, we give an overview of the final CENTAURO system. In particular, we explain several high-level design decisions and how they were derived from the requirements and extensive experience of Kerntechnische Hilfsdienst GmbH, Karlsruhe, Germany (KHG). We focus on recently integrated components and report on a systematic evaluation that demonstrated the system's capabilities and revealed valuable insights.

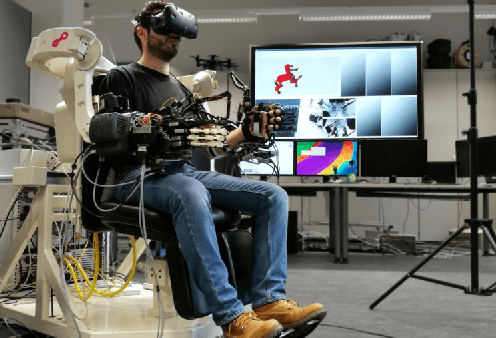

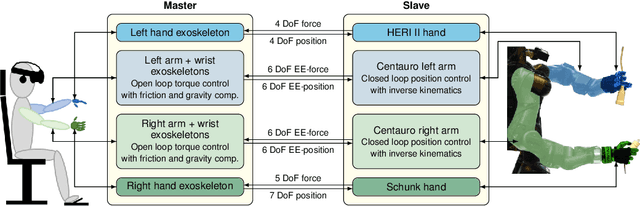

Remote Mobile Manipulation with the Centauro Robot: Full-body Telepresence and Autonomous Operator Assistance

Aug 05, 2019

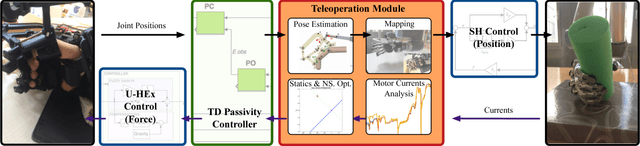

Abstract: Solving mobile manipulation tasks in inaccessible and dangerous environments is an important application of robots to support humans. Example domains include the construction and maintenance of manned and unmanned stations on the Moon and other planets. Suitable platforms require flexible and robust hardware, a locomotion approach that allows navigating a wide variety of terrains, dexterous manipulation capabilities, and corresponding user interfaces. We present the CENTAURO system, which has been designed for these requirements and consists of the Centauro robot and a set of advanced operator interfaces with complementary strengths, enabling the system to solve a wide range of realistic mobile manipulation tasks. The robot has a centaur-like body plan and is driven by torque-controlled compliant actuators. Four articulated legs ending in steerable wheels allow for omnidirectional driving as well as stepping. An anthropomorphic upper body with two arms ending in five-finger hands enables human-like manipulation. The robot perceives its environment through a suite of multimodal sensors. The resulting platform complexity exceeds that of most known systems, which puts the focus on a suitable operator interface. An operator can control the robot through a telepresence suit, which allows for flexibly solving a large variety of mobile manipulation tasks. Locomotion and manipulation functionalities at different levels of autonomy support the operation. The proposed user interfaces enable solving a wide variety of tasks without previous task-specific training. The integrated system is evaluated in numerous teleoperated experiments, which are described along with lessons learned.
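
For steerable wheels such as those described above, omnidirectional driving reduces to standard rigid-body kinematics: each wheel is steered along, and spun at, the velocity of its contact point for the desired base motion. The sketch below assumes a flat floor and fixed, hypothetical wheel positions in the base frame, and it ignores leg articulation; it is an illustration of the technique, not the Centauro controller.

```python
import math
from typing import List, Tuple


def wheel_commands(vx: float, vy: float, omega: float,
                   wheel_positions: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Steering angle (rad) and rolling speed (m/s) for each steerable wheel.

    (vx, vy) is the desired base velocity and omega the yaw rate, both in the base
    frame. A wheel contact point at (x, y) then moves with
    (vx - omega * y, vy + omega * x).
    """
    commands = []
    for x, y in wheel_positions:
        wx = vx - omega * y
        wy = vy + omega * x
        commands.append((math.atan2(wy, wx), math.hypot(wx, wy)))
    return commands


if __name__ == "__main__":
    # Hypothetical wheel positions (m) at the four leg ends, base frame
    wheels = [(0.4, 0.3), (0.4, -0.3), (-0.4, 0.3), (-0.4, -0.3)]
    print(wheel_commands(0.0, 0.5, 0.0, wheels))  # pure sideways drive
    print(wheel_commands(0.0, 0.0, 1.0, wheels))  # turning on the spot
```

A practical controller would also flip the steering angle by π and reverse the wheel speed whenever that shortens the steering travel; this refinement is omitted for brevity.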

Learning Postural Synergies for Categorical Grasping through Shape Space Registration

Oct 18, 2018

Abstract: Every time a person encounters an object with a given degree of familiarity, they immediately know how to grasp it. The hand's movement adapts to the object geometry effortlessly thanks to the accumulated knowledge of previous experiences grasping similar objects. In this paper, we present a novel method for inferring grasp configurations based on object shape. Grasping knowledge is gathered in a synergy space of the robotic hand, built by following a human grasping taxonomy. The synergy space is constructed from human demonstrations using an exoskeleton that provides force feedback, which makes it possible to evaluate the quality of each grasp. The shape descriptor is obtained by means of a categorical non-rigid registration that encodes typical intra-class variations. This approach is especially suitable for online scenarios where only a portion of the object's surface is observable. The method is demonstrated in simulation and real-robot experiments by grasping objects the robot has never seen before.
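
Postural synergies are commonly extracted as the principal components of recorded hand postures. The abstract does not state the exact procedure, so the following is a generic PCA sketch under that assumption: rows are demonstrated grasps, columns are joint angles, and the function names and synthetic data are illustrative only.

```python
import numpy as np


def fit_synergies(postures: np.ndarray, n_synergies: int):
    """PCA of hand postures: rows are demonstrated grasps, columns are joint angles.

    Returns the mean posture, the first `n_synergies` principal directions
    (one synergy per row), and the fraction of variance each one explains.
    """
    mean = postures.mean(axis=0)
    centered = postures - mean
    # Right singular vectors of the centered data are the principal directions.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    explained = s ** 2 / np.sum(s ** 2)
    return mean, vt[:n_synergies], explained[:n_synergies]


def to_synergy_space(posture: np.ndarray, mean: np.ndarray, synergies: np.ndarray) -> np.ndarray:
    """Project a joint-angle vector onto the synergy coordinates."""
    return synergies @ (posture - mean)


def from_synergy_space(coeffs: np.ndarray, mean: np.ndarray, synergies: np.ndarray) -> np.ndarray:
    """Map synergy coordinates back to a full joint-angle vector."""
    return mean + coeffs @ synergies


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic data: 50 grasps of a 20-joint hand driven by 2 latent synergies
    latent = rng.normal(size=(50, 2))
    basis = rng.normal(size=(2, 20))
    postures = 0.3 + latent @ basis
    mean, syn, explained = fit_synergies(postures, n_synergies=2)
    coeffs = to_synergy_space(postures[0], mean, syn)
    print(explained.sum(), np.abs(from_synergy_space(coeffs, mean, syn) - postures[0]).max())
```

Grasp inference can then search over a handful of synergy coefficients instead of every joint angle, which is what makes a low-dimensional synergy space attractive for shape-driven grasp planning.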