Niels van Duijkeren

Semi-Infinite Programming for Collision-Avoidance in Optimal and Model Predictive Control

Aug 17, 2025

Abstract: This paper presents a novel approach for collision avoidance in optimal and model predictive control, in which the environment is represented by a large number of points and the robot as a union of padded polygons. The conditions that none of the points shall collide with the robot can be written in terms of an infinite number of constraints per obstacle point. We show that the resulting semi-infinite programming (SIP) optimal control problem (OCP) can be efficiently tackled through a combination of two methods: local reduction and an external active-set method. Specifically, this involves iteratively identifying the closest point obstacles, determining the lower-level distance minimizer among all feasible robot shape parameters, and solving the upper-level finitely-constrained subproblems. In addition, this paper addresses robust collision avoidance in the presence of ellipsoidal state uncertainties. Enforcing constraint satisfaction over all possible uncertainty realizations extends the dimension of constraint infiniteness. The infinitely many constraints arising from translational uncertainty are handled by local reduction together with the robot shape parameterization, while rotational uncertainty is addressed via a backoff reformulation. A controller implemented based on the proposed method is demonstrated on a real-world robot running at 20 Hz, enabling fast and collision-free navigation in tight spaces. An application to 3D collision avoidance is also demonstrated in simulation.
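The lower-level problem mentioned in the abstract can be illustrated with a minimal sketch. It assumes, as a simplification that is not taken from the paper, that the robot is a single padded line segment (a capsule) with shape parameter gamma in [0, 1]. For one obstacle point, the infinitely many constraints (one per gamma) are replaced by the single constraint at the distance minimizer, and a simple active-set step keeps only the closest points. All function names are hypothetical.

```python
import numpy as np

def lower_level_minimizer(p, a, b):
    """Return gamma in [0, 1] minimizing ||p - (a + gamma * (b - a))||."""
    d = b - a
    denom = float(d @ d)
    if denom == 0.0:  # degenerate segment: every gamma gives the same point
        return 0.0
    return float(np.clip((p - a) @ d / denom, 0.0, 1.0))

def reduced_constraint(p, a, b, radius):
    """Constraint value at the minimizer; >= 0 means p lies outside the capsule."""
    gamma = lower_level_minimizer(p, a, b)
    closest = a + gamma * (b - a)
    return float((p - closest) @ (p - closest)) - radius**2

def active_points(points, a, b, radius, k=2):
    """Active-set step (sketch): keep the k points with the smallest constraint value."""
    values = np.array([reduced_constraint(p, a, b, radius) for p in points])
    order = np.argsort(values)[:k]
    return order, values[order]

# Assumed example data: a segment from (0, 0) to (2, 0) padded by 0.5.
a, b = np.array([0.0, 0.0]), np.array([2.0, 0.0])
points = np.array([[1.0, 1.0], [3.0, 0.0], [-5.0, 4.0], [1.0, 0.2]])
idx, vals = active_points(points, a, b, radius=0.5)
```

In an actual OCP the reduced constraint would be re-evaluated at every solver iteration, since the minimizing gamma changes with the robot pose; the sketch only shows a single evaluation for a fixed pose.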

Real-Time-Feasible Collision-Free Motion Planning For Ellipsoidal Objects

Sep 18, 2024

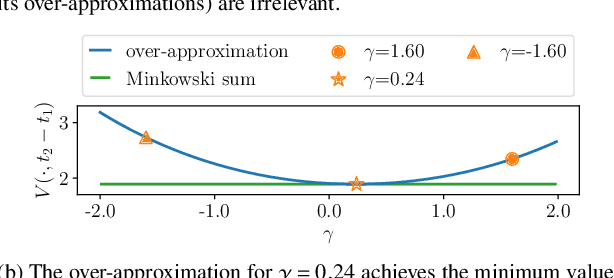

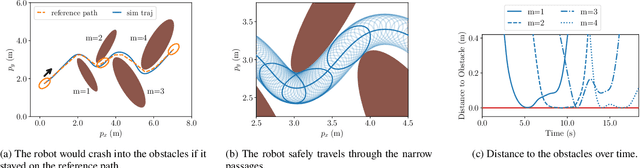

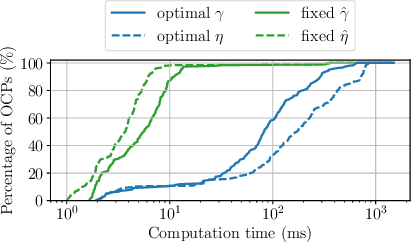

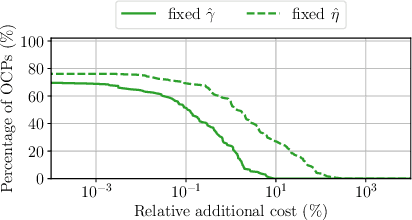

Abstract: Online planning of collision-free trajectories is a fundamental task for robotics and self-driving car applications. This paper revisits collision avoidance between ellipsoidal objects using differentiable constraints. Two ellipsoids do not overlap if and only if the endpoint of the vector between the center points of the ellipsoids does not lie in the interior of the Minkowski sum of the ellipsoids. This condition is formulated using a parametric over-approximation of the Minkowski sum, which can be made tight in any given direction. The resulting collision avoidance constraint is included in an optimal control problem (OCP) and evaluated in comparison to the separating-hyperplane approach. Not only do we observe that the Minkowski-sum formulation is computationally more efficient in our experiments, but also that using pre-determined over-approximation parameters based on warm-start trajectories leads to a very limited increase in suboptimality. This gives rise to a novel real-time scheme for collision-free motion planning with model predictive control (MPC). Both the real-time feasibility and the effectiveness of the constraint formulation are demonstrated in challenging real-world experiments.
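One well-known parametric over-approximation of the Minkowski sum of two ellipsoids can be sketched as follows; whether the paper uses exactly this parametrization is an assumption. Writing an origin-centered ellipsoid as E(A) = {x : xᵀ A⁻¹ x ≤ 1}, the ellipsoid with shape matrix Q(β) = (1 + 1/β) A + (1 + β) B contains E(A) ⊕ E(B) for every β > 0, and it touches the sum along a direction l (in the support-function sense) when β = sqrt(lᵀ A l) / sqrt(lᵀ B l). The function names below are hypothetical.

```python
import numpy as np

def tight_beta(A, B, direction):
    """Parameter for which the outer ellipsoid touches the Minkowski sum along `direction`."""
    a = np.sqrt(direction @ A @ direction)
    b = np.sqrt(direction @ B @ direction)
    return float(a / b)

def outer_shape(A, B, beta):
    """Shape matrix Q(beta); E(A) + E(B) is contained in E(Q(beta)) for any beta > 0."""
    return (1.0 + 1.0 / beta) * A + (1.0 + beta) * B

def separation_value(c1, c2, A, B, beta):
    """d^T Q^{-1} d - 1; a non-negative value certifies that the interiors do not overlap."""
    d = c2 - c1
    Q = outer_shape(A, B, beta)
    return float(d @ np.linalg.solve(Q, d)) - 1.0

# Assumed example: semi-axes (2, 1) for the first ellipsoid, a unit disc for the second.
A = np.diag([4.0, 1.0])
B = np.eye(2)
beta = tight_beta(A, B, np.array([1.0, 0.0]))
Q = outer_shape(A, B, beta)
far = separation_value(np.zeros(2), np.array([3.5, 0.0]), A, B, beta)
near = separation_value(np.zeros(2), np.array([2.5, 0.0]), A, B, beta)
```

Because the constraint is conservative for any fixed β, choosing β from a warm-start trajectory, as the abstract describes, trades a small amount of optimality for a constraint that stays smooth and cheap to evaluate.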

Stochastic Model Predictive Control with Optimal Linear Feedback for Mobile Robots in Dynamic Environments

Jul 19, 2024

Abstract: Robot navigation around humans can be a challenging problem since human movements are hard to predict. Stochastic model predictive control (MPC) can account for such uncertainties and approximately bound the probability of a collision. In this paper, to counteract the rapidly growing human motion uncertainty over time, we incorporate state feedback in the stochastic MPC. This allows the robot to more closely track reference trajectories. To this end, the feedback policy is left as a degree of freedom in the optimal control problem. The stochastic MPC with feedback is validated in simulation experiments and is compared against nominal MPC and stochastic MPC without feedback. The added computation time can be limited by reducing the number of additional variables for the feedback law with a small compromise in control performance.
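The effect of feedback on uncertainty growth can be illustrated with a generic textbook relation; the abstract does not give the paper's model, so everything below is an assumption. For linear dynamics with additive noise of covariance W and a linear feedback gain K acting on state deviations, the covariance propagates as Σₖ₊₁ = (A + BK) Σₖ (A + BK)ᵀ + W. The sketch compares no feedback (K = 0) with a hand-picked stabilizing gain on a double integrator.

```python
import numpy as np

def propagate_covariance(A, B, K, W, Sigma0, steps):
    """Return [Sigma_0, ..., Sigma_steps] with Sigma_{k+1} = (A+BK) Sigma_k (A+BK)^T + W."""
    A_cl = A + B @ K
    Sigmas = [Sigma0]
    for _ in range(steps):
        Sigmas.append(A_cl @ Sigmas[-1] @ A_cl.T + W)
    return Sigmas

dt = 0.1
A = np.array([[1.0, dt], [0.0, 1.0]])   # double integrator (assumed model)
B = np.array([[0.5 * dt**2], [dt]])
W = 1e-3 * np.eye(2)                     # assumed noise covariance
Sigma0 = np.zeros((2, 2))

K_open = np.zeros((1, 2))                # no feedback
K_fb = np.array([[-10.0, -5.0]])         # hand-picked stabilizing gain (illustrative)

open_loop = propagate_covariance(A, B, K_open, W, Sigma0, steps=20)
closed_loop = propagate_covariance(A, B, K_fb, W, Sigma0, steps=20)
```

In the setting described by the abstract, the gain is not fixed in advance but left as a decision variable of the optimal control problem, which is what adds variables and computation time.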

Receding Horizon Re-ordering of Multi-Agent Execution Schedules

Dec 07, 2023

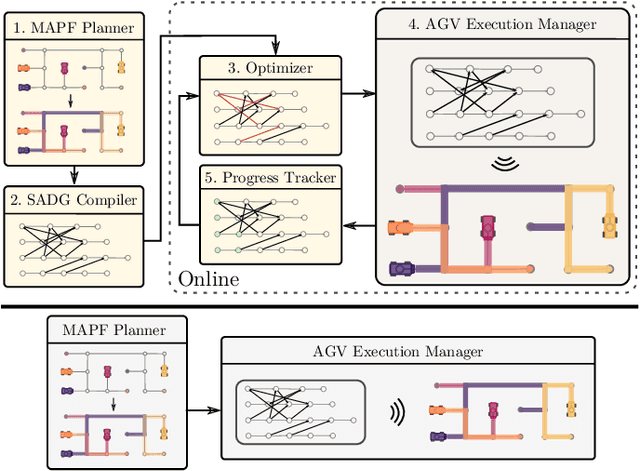

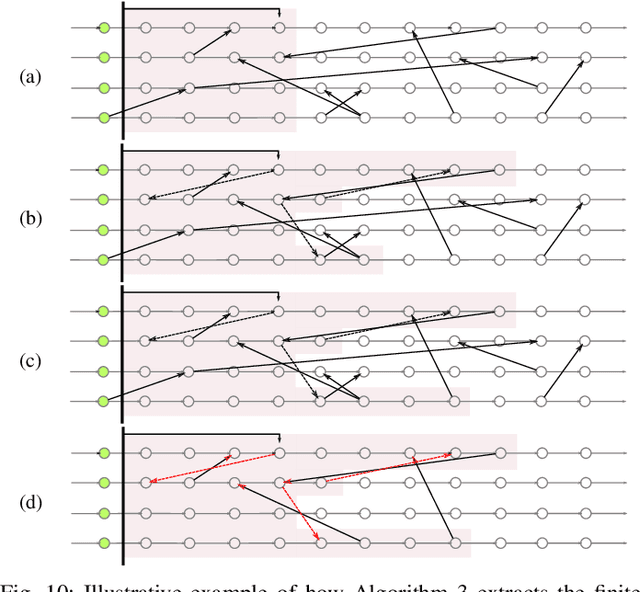

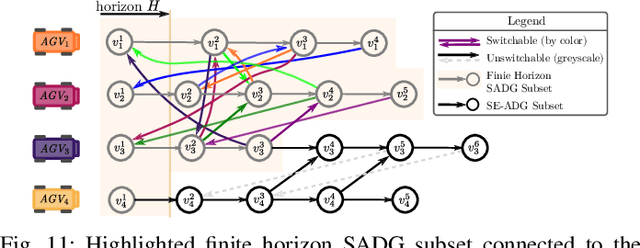

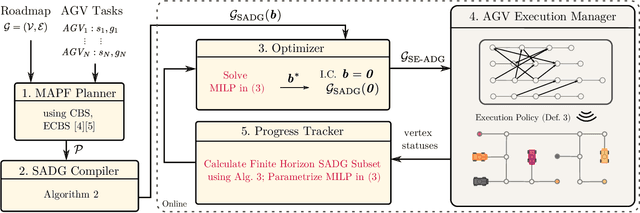

Abstract: The trajectory planning for a fleet of Automated Guided Vehicles (AGVs) on a roadmap is commonly referred to as the Multi-Agent Path Finding (MAPF) problem, the solution to which dictates each AGV's spatial and temporal location until it reaches its goal without collision. When executing MAPF plans in dynamic workspaces, AGVs can be frequently delayed, e.g., due to encounters with humans or third-party vehicles. If the remainder of the AGVs keeps following their individual plans, synchrony of the fleet is lost and some AGVs may pass through roadmap intersections in a different order than originally planned. Although this could reduce the cumulative route completion time of the AGVs, generally, a change in the original ordering can cause conflicts such as deadlocks. In practice, synchrony is therefore often enforced by using a MAPF execution policy employing, e.g., an Action Dependency Graph (ADG) to maintain ordering. To safely re-order without introducing deadlocks, we present the concept of the Switchable Action Dependency Graph (SADG). Using the SADG, we formulate a comparatively low-dimensional Mixed-Integer Linear Program (MILP) that repeatedly re-orders AGVs in a recursively feasible manner, thus maintaining deadlock-free guarantees, while dynamically minimizing the cumulative route completion time of all AGVs. Various simulations validate the efficiency of our approach when compared to the original ADG method as well as robust MAPF solution approaches.

An Industrial Perspective on Multi-Agent Decision Making for Interoperable Robot Navigation following the VDA5050 Standard

Nov 24, 2023

Abstract: This paper provides a perspective on the literature and current challenges in Multi-Agent Systems for interoperable robot navigation in industry. The focus is on the multi-agent decision stack for Autonomous Mobile Robots operating in mixed environments with humans, manually driven vehicles, and legacy Automated Guided Vehicles. We provide typical characteristics of such Multi-Agent Systems observed today and how these are expected to change in the short term due to the new standard VDA5050 and the interoperability framework OpenRMF. We present recent changes in fleet management standards and the role of open middleware frameworks like ROS2 reaching industrial-grade quality. Approaches to increase the robustness and performance of multi-robot navigation systems for transportation are discussed, and research opportunities are derived.

The e-Bike Motor Assembly: Towards Advanced Robotic Manipulation for Flexible Manufacturing

Apr 20, 2023

Abstract: Robotic manipulation is currently undergoing a profound paradigm shift due to the increasing needs for flexible manufacturing systems, and at the same time, because of the advances in enabling technologies such as sensing, learning, optimization, and hardware. This calls for robots that can observe and reason about their workspace, and that are skillful enough to complete various assembly processes in weakly-structured settings. Moreover, it remains a great challenge to enable operators to teach robots on-site, while managing the inherent complexity of perception, control, motion planning and reaction to unexpected situations. Motivated by real-world industrial applications, this paper demonstrates the potential of such a paradigm shift in robotics on the industrial case of an e-Bike motor assembly. The paper presents a concept for teaching and programming adaptive robots on-site and demonstrates their potential for the named applications. The framework includes: (i) a method to teach perception systems on-site in a self-supervised manner, (ii) a general representation of object-centric motion skills and force-sensitive assembly skills, both learned from demonstration, (iii) a sequencing approach that exploits a human-designed plan to perform complex tasks, and (iv) a system solution for adapting and optimizing skills online. The aforementioned components are interfaced through a four-layer software architecture that makes our framework a tangible industrial technology. To demonstrate the generality of the proposed framework, we provide, in addition to the motivating e-Bike motor assembly, a further case study on dense box packing for logistics automation.

End-to-End Learning of Hybrid Inverse Dynamics Models for Precise and Compliant Impedance Control

May 27, 2022

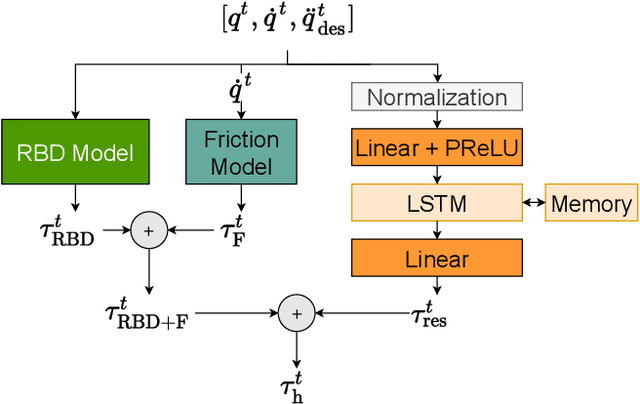

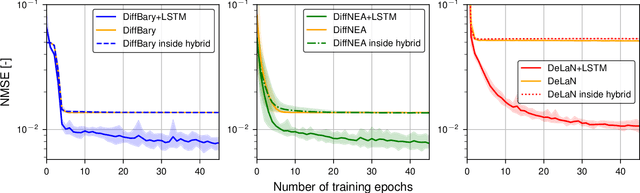

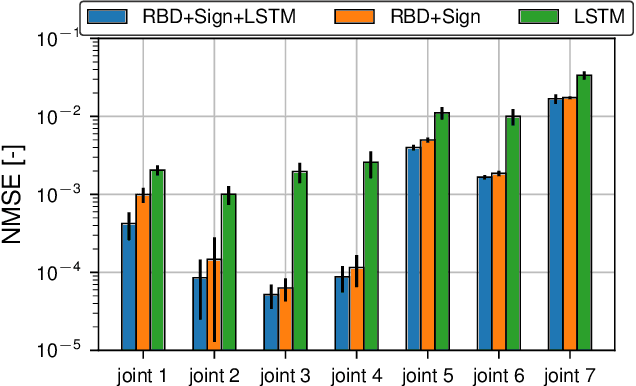

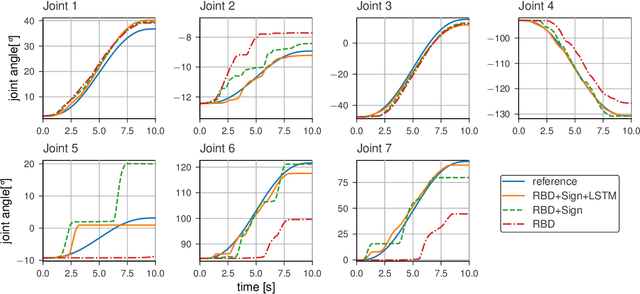

Abstract: It is well-known that inverse dynamics models can improve tracking performance in robot control. These models need to precisely capture the robot dynamics, which consist of well-understood components, e.g., rigid body dynamics, and effects that remain challenging to capture, e.g., stick-slip friction and mechanical flexibilities. Such effects exhibit hysteresis and partial observability, rendering them particularly challenging to model. Hence, hybrid models, which combine a physical prior with data-driven approaches, are especially well-suited in this setting. We present a novel hybrid model formulation that enables us to identify fully physically consistent inertial parameters of a rigid body dynamics model which is paired with a recurrent neural network architecture, allowing us to capture unmodeled partially observable effects using the network memory. We compare our approach against state-of-the-art inverse dynamics models on a 7-degree-of-freedom manipulator. Using data sets obtained through an optimal experiment design approach, we study the accuracy of offline torque prediction and generalization capabilities of joint learning methods. In control experiments on the real system, we evaluate the model as a feed-forward term for impedance control and show that the feedback gains can be drastically reduced to achieve a given tracking accuracy.

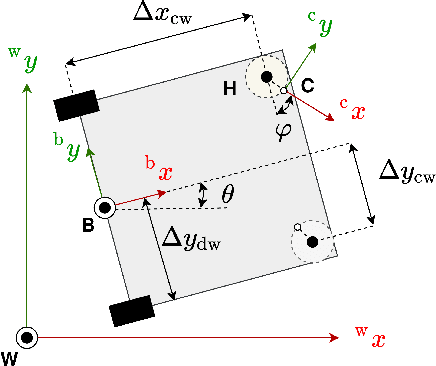

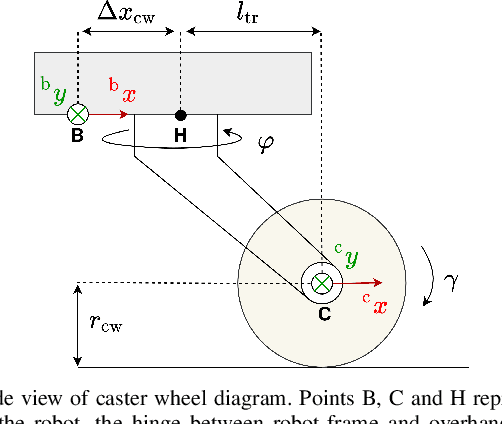

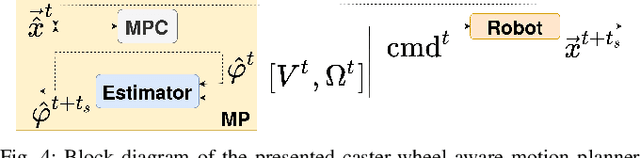

A caster-wheel-aware MPC-based motion planner for mobile robotics

Oct 11, 2021

Abstract: Differential drive mobile robots often use one or more caster wheels for balance. Caster wheels are appreciated for their ability to turn in any direction almost on the spot, allowing the robot to do the same and thereby greatly simplifying the motion planning and control. However, in aligning the caster wheels to the intended direction of motion they produce a so-called bore torque. As a result, additional motor torque is required to move the robot, which may in some cases exceed the motor capacity or compromise the motion planner's accuracy. Instead of taking a decoupled approach, where the navigation and disturbance rejection algorithms are separated, we propose to embed the caster wheel awareness into the motion planner. To do so, we present a caster-wheel-aware term that is compatible with MPC-based control methods, leveraging the existence of caster wheels in the motion planning stage. As a proof of concept, this term is combined with a model-predictive trajectory tracking controller. Since this method requires knowledge of the caster wheel angle and rolling speed, an observer that estimates these states is also presented. The efficacy of the approach is shown in experiments on an intralogistics robot and compared against a decoupled bore-torque reduction approach and a caster-wheel-agnostic controller. Moreover, the experiments show that the presented caster wheel estimator performs sufficiently well and therefore avoids the need for additional sensors.

Learning Forceful Manipulation Skills from Multi-modal Human Demonstrations

Sep 09, 2021

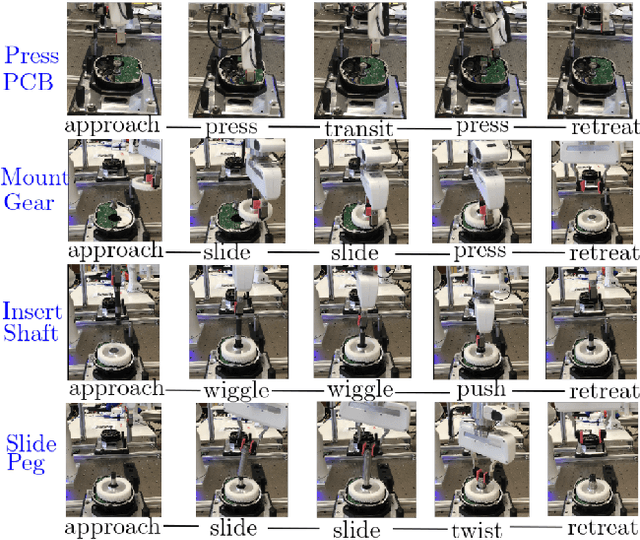

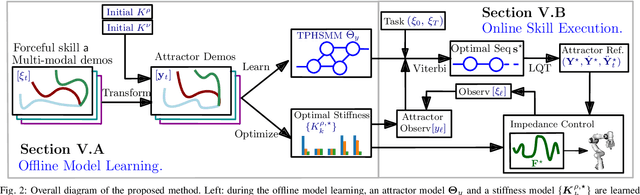

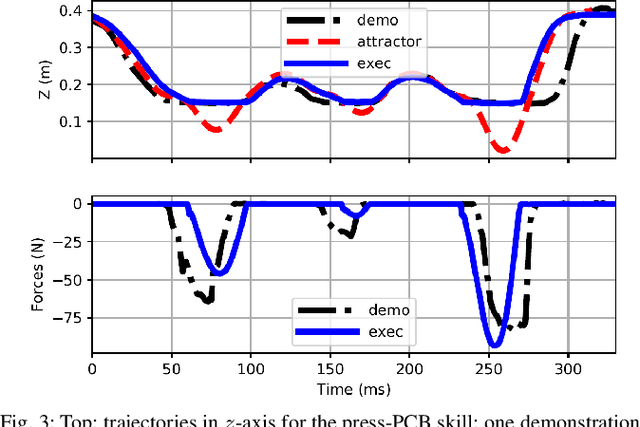

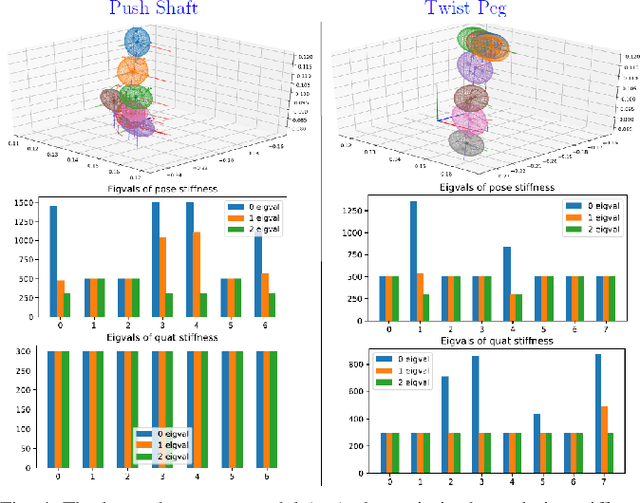

Abstract: Learning from Demonstration (LfD) provides an intuitive and fast approach to program robotic manipulators. Task-parameterized representations allow easy adaptation to new scenes and online observations. However, this approach has been limited to pose-only demonstrations and thus only skills with spatial and temporal features. In this work, we extend the LfD framework to address forceful manipulation skills, which are of great importance for industrial processes such as assembly. For such skills, multi-modal demonstrations including robot end-effector poses, force and torque readings, and operation scene are essential. Our objective is to reproduce such skills reliably according to the demonstrated pose and force profiles within different scenes. The proposed method combines our previous work on task-parameterized optimization and attractor-based impedance control. The learned skill model consists of (i) the attractor model that unifies the pose and force features, and (ii) the stiffness model that optimizes the stiffness for different stages of the skill. Furthermore, an online execution algorithm is proposed to adapt the skill execution to real-time observations of robot poses, measured forces, and changed scenes. We validate this method rigorously on a 7-DoF robot arm over several steps of an E-bike motor assembly process, which require different types of forceful interaction such as insertion, sliding and twisting.

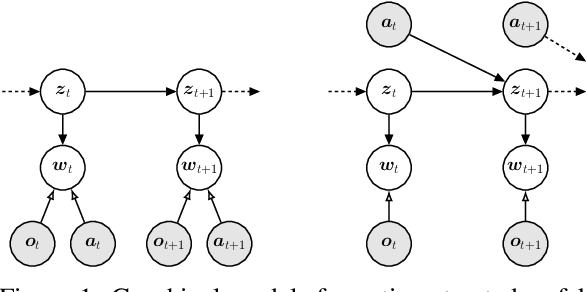

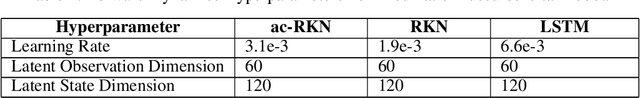

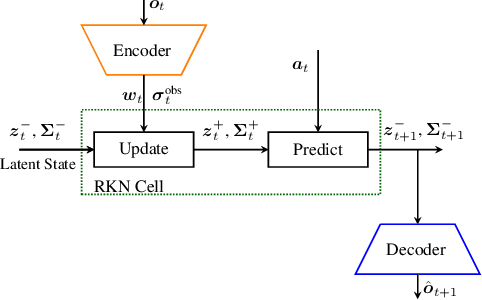

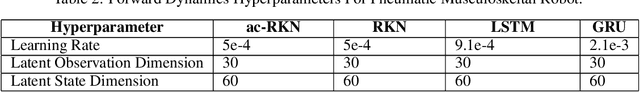

Action-Conditional Recurrent Kalman Networks For Forward and Inverse Dynamics Learning

Nov 05, 2020

Abstract: Estimating accurate forward and inverse dynamics models is a crucial component of model-based control for sophisticated robots such as robots driven by hydraulics, artificial muscles, or robots dealing with different contact situations. Analytic models of such processes are often unavailable or inaccurate due to complex hysteresis effects, unmodelled friction and stiction phenomena, and unknown effects during contact situations. A promising approach is to obtain spatio-temporal models in a data-driven way using recurrent neural networks, as they can overcome those issues. However, such models often do not meet accuracy demands sufficiently, degenerate in performance for the required high sampling frequencies and cannot provide uncertainty estimates. We adopt a recent probabilistic recurrent neural network architecture, called Recurrent Kalman Networks (RKNs), to model learning by conditioning its transition dynamics on the control actions. RKNs outperform standard recurrent networks such as LSTMs on many state estimation tasks. Inspired by Kalman filters, the RKN provides an elegant way to achieve action conditioning within its recurrent cell by leveraging additive interactions between the current latent state and the action variables. We present two architectures, one for forward model learning and one for inverse model learning. Both architectures significantly outperform existing model learning frameworks as well as analytical models in terms of prediction performance on a variety of real robot dynamics models.
