Patrick Kesper

The e-Bike Motor Assembly: Towards Advanced Robotic Manipulation for Flexible Manufacturing

Apr 20, 2023

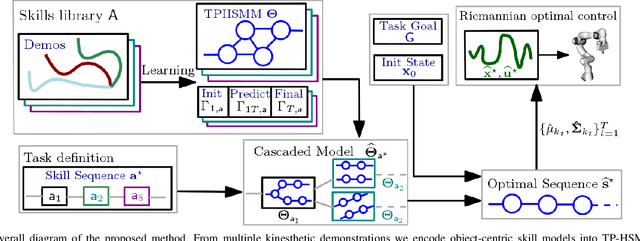

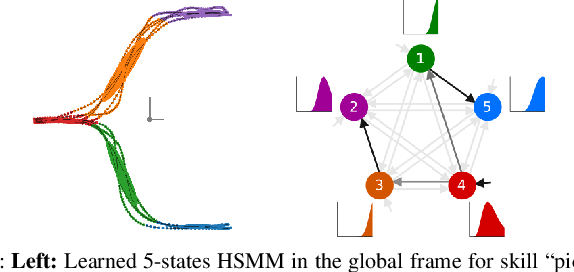

Abstract:Robotic manipulation is currently undergoing a profound paradigm shift due to the increasing needs for flexible manufacturing systems, and at the same time, because of the advances in enabling technologies such as sensing, learning, optimization, and hardware. This demands for robots that can observe and reason about their workspace, and that are skillfull enough to complete various assembly processes in weakly-structured settings. Moreover, it remains a great challenge to enable operators for teaching robots on-site, while managing the inherent complexity of perception, control, motion planning and reaction to unexpected situations. Motivated by real-world industrial applications, this paper demonstrates the potential of such a paradigm shift in robotics on the industrial case of an e-Bike motor assembly. The paper presents a concept for teaching and programming adaptive robots on-site and demonstrates their potential for the named applications. The framework includes: (i) a method to teach perception systems onsite in a self-supervised manner, (ii) a general representation of object-centric motion skills and force-sensitive assembly skills, both learned from demonstration, (iii) a sequencing approach that exploits a human-designed plan to perform complex tasks, and (iv) a system solution for adapting and optimizing skills online. The aforementioned components are interfaced through a four-layer software architecture that makes our framework a tangible industrial technology. To demonstrate the generality of the proposed framework, we provide, in addition to the motivating e-Bike motor assembly, a further case study on dense box packing for logistics automation.

Learning and Sequencing of Object-Centric Manipulation Skills for Industrial Tasks

Aug 24, 2020

Abstract:Enabling robots to quickly learn manipulation skills is an important, yet challenging problem. Such manipulation skills should be flexible, e.g., be able adapt to the current workspace configuration. Furthermore, to accomplish complex manipulation tasks, robots should be able to sequence several skills and adapt them to changing situations. In this work, we propose a rapid robot skill-sequencing algorithm, where the skills are encoded by object-centric hidden semi-Markov models. The learned skill models can encode multimodal (temporal and spatial) trajectory distributions. This approach significantly reduces manual modeling efforts, while ensuring a high degree of flexibility and re-usability of learned skills. Given a task goal and a set of generic skills, our framework computes smooth transitions between skill instances. To compute the corresponding optimal end-effector trajectory in task space we rely on Riemannian optimal controller. We demonstrate this approach on a 7 DoF robot arm for industrial assembly tasks.

Short-Term Prediction and Multi-Camera Fusion on Semantic Grids

Mar 21, 2019

Abstract:An environment representation (ER) is a substantial part of every autonomous system. It introduces a common interface between perception and other system components, such as decision making, and allows downstream algorithms to deal with abstracted data without knowledge of the used sensor. In this work, we propose and evaluate a novel architecture that generates an egocentric, grid-based, predictive, and semantically-interpretable ER. In particular, we provide a proof of concept for the spatio-temporal fusion of multiple camera sequences and short-term prediction in such an ER. Our design utilizes a strong semantic segmentation network together with depth and egomotion estimates to first extract semantic information from multiple camera streams and then transform these separately into egocentric temporally-aligned bird's-eye view grids. A deep encoder-decoder network is trained to fuse a stack of these grids into a unified semantic grid representation and to predict the dynamics of its surrounding. We evaluate this representation on real-world sequences of the Cityscapes dataset and show that our architecture can make accurate predictions in complex sensor fusion scenarios and significantly outperforms a model-driven baseline in a category-based evaluation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge