Marco Todescato

Modeling Resilience of Collaborative AI Systems

Jan 23, 2024

Abstract: A Collaborative Artificial Intelligence System (CAIS) performs actions in collaboration with the human to achieve a common goal. CAISs can use a trained AI model to control human-system interaction, or they can use human interaction to dynamically learn from humans in an online fashion. In online learning with human feedback, the AI model evolves by monitoring human interaction through the system sensors in the learning state, and actuates the autonomous components of the CAIS based on the learning in the operational state. Therefore, any disruptive event affecting these sensors may affect the AI model's ability to make accurate decisions and degrade the CAIS performance. Consequently, it is of paramount importance for CAIS managers to be able to automatically track the system performance to understand the resilience of the CAIS upon such disruptive events. In this paper, we provide a new framework to model CAIS performance when the system experiences a disruptive event. With our framework, we introduce a model of performance evolution of CAIS. The model is equipped with a set of measures that aim to support CAIS managers in the decision process to achieve the required resilience of the system. We tested our framework on a real-world case study of a robot collaborating online with the human, when the system is experiencing a disruptive event. The case study shows that our framework can be adopted in CAIS and integrated into the online execution of the CAIS activities.

CAIS-DMA: A Decision-Making Assistant for Collaborative AI Systems

Nov 08, 2023

Abstract: A Collaborative Artificial Intelligence System (CAIS) is a cyber-physical system that learns actions in collaboration with humans in a shared environment to achieve a common goal. In particular, a CAIS is equipped with an AI model to support the decision-making process of this collaboration. When an event degrades the performance of CAIS (i.e., a disruptive event), this decision-making process may be hampered or even stopped. Thus, it is of paramount importance to monitor the learning of the AI model, and eventually support its decision-making process in such circumstances. This paper introduces a new methodology to automatically support the decision-making process in CAIS when the system experiences performance degradation after a disruptive event. To this aim, we develop a framework that consists of three components: one manages or simulates CAIS's environment and disruptive events, the second automates the decision-making process, and the third provides a visual analysis of CAIS behavior. Overall, our framework automatically monitors the decision-making process, intervenes whenever a performance degradation occurs, and recommends the next action. We demonstrate our framework by implementing an example with a real-world collaborative robot, where the framework recommends the next action that balances between minimizing the recovery time (i.e., resilience), and minimizing the energy adverse effects (i.e., greenness).

GResilience: Trading Off Between the Greenness and the Resilience of Collaborative AI Systems

Nov 08, 2023

Abstract: A Collaborative Artificial Intelligence System (CAIS) works with humans in a shared environment to achieve a common goal. To recover from a disruptive event that degrades its performance and to ensure its resilience, a CAIS may then need to perform a set of actions either by the system, by the humans, or collaboratively together. As for any other system, recovery actions may cause energy adverse effects due to the additional required energy. Therefore, it is of paramount importance to understand which of the above actions can better trade off between resilience and greenness. In this in-progress work, we propose an approach to automatically evaluate CAIS recovery actions for their ability to trade off between the resilience and greenness of the system. We have also designed an experiment protocol and its application to a real CAIS demonstrator. Our approach aims to attack the problem from two perspectives: as a one-agent decision problem through optimization, which takes the decision based on the score of resilience and greenness, and as a two-agent decision problem through game theory, which takes the decision based on the payoff computed for resilience and greenness as two players of a cooperative game.
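The one-agent perspective mentioned in this abstract can be illustrated with a weighted-sum score. The following is only a minimal sketch under stated assumptions, not the paper's method: the `RecoveryAction` fields, the min-max normalisation, and the `resilience_weight` parameter are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecoveryAction:
    """Hypothetical description of one candidate recovery action."""
    name: str
    recovery_time_s: float  # assumed estimate; shorter means more resilient
    energy_j: float         # assumed estimate; lower means greener


def _lower_is_better(value, values):
    """Min-max normalise so the smallest value scores 1 and the largest 0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return 1.0
    return (hi - value) / (hi - lo)


def select_action(actions, resilience_weight=0.5):
    """Pick the action with the best weighted resilience/greenness score."""
    if not actions:
        raise ValueError("at least one candidate action is required")
    if not 0.0 <= resilience_weight <= 1.0:
        raise ValueError("resilience_weight must lie in [0, 1]")
    times = [a.recovery_time_s for a in actions]
    energies = [a.energy_j for a in actions]

    def total(action):
        resilience = _lower_is_better(action.recovery_time_s, times)
        greenness = _lower_is_better(action.energy_j, energies)
        return resilience_weight * resilience + (1.0 - resilience_weight) * greenness

    return max(actions, key=total)


# Illustrative, made-up candidates (system-only, human-only, collaborative).
CANDIDATES = [
    RecoveryAction("system", recovery_time_s=30.0, energy_j=50.0),
    RecoveryAction("human", recovery_time_s=10.0, energy_j=200.0),
    RecoveryAction("collaborative", recovery_time_s=18.0, energy_j=90.0),
]
```

With these made-up numbers, a weight of 1 favours the fastest action, a weight of 0 favours the least energy-hungry one, and intermediate weights can favour a compromise action.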

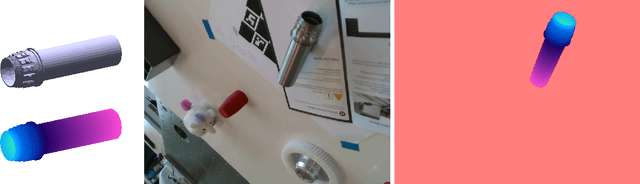

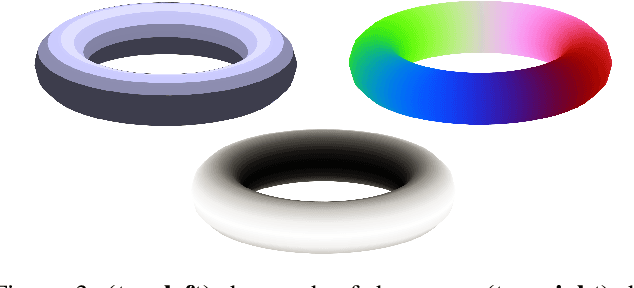

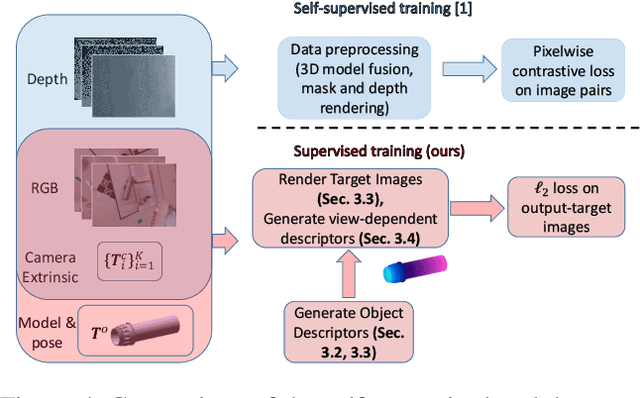

Supervised Training of Dense Object Nets using Optimal Descriptors for Industrial Robotic Applications

Feb 16, 2021

Abstract: Dense Object Nets (DONs) by Florence, Manuelli and Tedrake (2018) introduced dense object descriptors as a novel visual object representation for the robotics community. It is suitable for many applications including object grasping, policy learning, etc. DONs map an RGB image depicting an object into a descriptor space image, which implicitly encodes key features of an object invariant to the relative camera pose. Impressively, the self-supervised training of DONs can be applied to arbitrary objects and can be evaluated and deployed within hours. However, the training approach relies on accurate depth images and faces challenges with small, reflective objects, typical for industrial settings, when using consumer grade depth cameras. In this paper we show that given a 3D model of an object, we can generate its descriptor space image, which allows for supervised training of DONs. We rely on Laplacian Eigenmaps (LE) to embed the 3D model of an object into an optimally generated space. While our approach uses more domain knowledge, it can be efficiently applied even for smaller and reflective objects, as it does not rely on depth information. We compare the training methods on generating 6D grasps for industrial objects and show that our novel supervised training approach improves the pick-and-place performance in industry-relevant tasks.
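To give a feel for the Laplacian Eigenmaps idea named in this abstract, here is a toy sketch that assigns each vertex of a small graph a one-dimensional coordinate. It makes several assumptions that are not from the paper: it uses the unnormalised graph Laplacian rather than the generalised eigenproblem, it works on an abstract edge list instead of a 3D mesh, and it finds the eigenvector by shifted power iteration. The function name `fiedler_embedding` is hypothetical.

```python
import math


def fiedler_embedding(num_vertices, edges, iterations=2000):
    """1-D spectral embedding of a connected, unweighted graph.

    Returns the eigenvector of the unnormalised Laplacian L = D - A that
    belongs to the smallest non-zero eigenvalue, with unit norm.
    """
    n = num_vertices
    laplacian = [[0.0] * n for _ in range(n)]
    for i, j in edges:
        laplacian[i][i] += 1.0
        laplacian[j][j] += 1.0
        laplacian[i][j] -= 1.0
        laplacian[j][i] -= 1.0

    # All eigenvalues of L are at most 2 * max degree, so (shift * I - L)
    # is positive definite and its top eigenvectors are L's bottom ones.
    shift = 2.0 * max(laplacian[i][i] for i in range(n)) + 1.0

    v = [float(i) for i in range(n)]  # deterministic, non-constant start
    for _ in range(iterations):
        # Project out the constant vector (eigenvalue 0 of L).
        mean = sum(v) / n
        v = [x - mean for x in v]
        # Multiply by (shift * I - L).
        w = [
            shift * v[i] - sum(laplacian[i][j] * v[j] for j in range(n))
            for i in range(n)
        ]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    return v


# A path graph 0-1-2-3-4-5: neighbouring vertices get neighbouring coordinates.
PATH_EDGES = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 5)]
path_embedding = fiedler_embedding(6, PATH_EDGES)
```

On the path graph the coordinates vary monotonically along the path, which is the property that makes such embeddings usable as smooth, geometry-aware descriptors. A mesh-based version would use more eigenvectors and a proper eigensolver.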

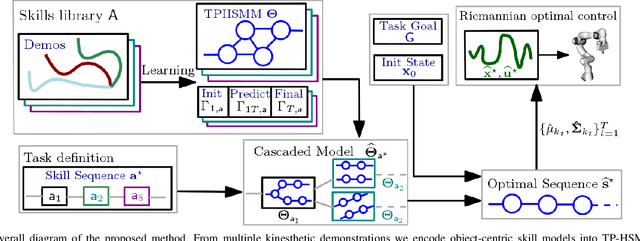

Learning and Sequencing of Object-Centric Manipulation Skills for Industrial Tasks

Aug 24, 2020

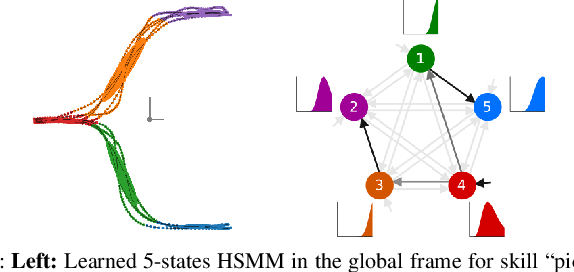

Abstract: Enabling robots to quickly learn manipulation skills is an important, yet challenging problem. Such manipulation skills should be flexible, e.g., be able to adapt to the current workspace configuration. Furthermore, to accomplish complex manipulation tasks, robots should be able to sequence several skills and adapt them to changing situations. In this work, we propose a rapid robot skill-sequencing algorithm, where the skills are encoded by object-centric hidden semi-Markov models. The learned skill models can encode multimodal (temporal and spatial) trajectory distributions. This approach significantly reduces manual modeling efforts, while ensuring a high degree of flexibility and re-usability of learned skills. Given a task goal and a set of generic skills, our framework computes smooth transitions between skill instances. To compute the corresponding optimal end-effector trajectory in task space we rely on a Riemannian optimal controller. We demonstrate this approach on a 7 DoF robot arm for industrial assembly tasks.

Efficient Spatio-Temporal Gaussian Regression via Kalman Filtering

May 03, 2017

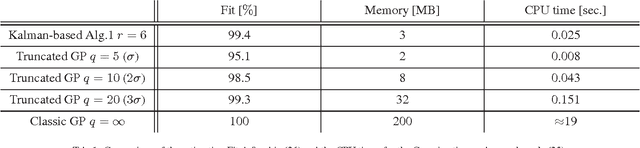

Abstract: In this work we study the non-parametric reconstruction of spatio-temporal dynamical Gaussian processes (GPs) via GP regression from sparse and noisy data. GPs have been mainly applied to spatial regression where they represent one of the most powerful estimation approaches also thanks to their universal representing properties. Their extension to dynamical processes has been instead elusive so far since classical implementations lead to unscalable algorithms. We then propose a novel procedure to address this problem by coupling GP regression and Kalman filtering. In particular, assuming space/time separability of the covariance (kernel) of the process and rational time spectrum, we build a finite-dimensional discrete-time state-space process representation amenable to Kalman filtering. With sampling over a finite set of fixed spatial locations, our major finding is that the Kalman filter state at instant $t_k$ represents a sufficient statistic to compute the minimum variance estimate of the process at any $t \geq t_k$ over the entire spatial domain. This result can be interpreted as a novel Kalman representer theorem for dynamical GPs. We then extend the study to situations where the set of spatial input locations can vary over time. The proposed algorithms are finally tested on both synthetic and real field data, also providing comparisons with standard GP and truncated GP regression techniques.
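The coupling of GP regression and Kalman filtering described in this abstract can be sketched in its simplest form: a purely temporal GP at a single location with the exponential kernel $k(\tau) = \sigma^2 e^{-|\tau|/\ell}$, which has a rational spectrum and an exact scalar state-space form. This is an illustrative reduction under those assumptions, not the spatio-temporal algorithm of the paper; the function and parameter names are hypothetical.

```python
import math


def kalman_gp_filter(times, observations, variance, lengthscale, noise_var):
    """Filtered estimates of a zero-mean GP with an exponential kernel.

    The GP is represented as the scalar state-space model
        x_{k+1} = a_k * x_k + w_k,  a_k = exp(-(t_{k+1} - t_k) / lengthscale),
        y_k     = x_k + v_k,        Var(w_k) = variance * (1 - a_k**2),
                                    Var(v_k) = noise_var.
    Returns a list of (mean, variance) pairs, one per observation time,
    each conditioned on all observations up to and including that time.
    """
    mean, var = 0.0, variance  # stationary prior of the process
    previous_time = None
    estimates = []
    for t, y in zip(times, observations):
        if previous_time is not None:
            # Prediction step: propagate the state to the new time.
            a = math.exp(-(t - previous_time) / lengthscale)
            mean = a * mean
            var = a * a * var + variance * (1.0 - a * a)
        # Update step: fold in the noisy measurement.
        gain = var / (var + noise_var)
        mean = mean + gain * (y - mean)
        var = (1.0 - gain) * var
        estimates.append((mean, var))
        previous_time = t
    return estimates
```

Each step costs constant time, so the whole pass is linear in the number of samples, whereas batch GP regression requires solving a linear system that grows with the data. For this kernel the filtered mean at the last time coincides with the batch GP posterior mean at that time.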

Multi-agents adaptive estimation and coverage control using Gaussian regression

Jul 22, 2014

Abstract: We consider a scenario where the aim of a group of agents is to perform the optimal coverage of a region according to a sensory function. In particular, centroidal Voronoi partitions have to be computed. The difficulty of the task is that the sensory function is unknown and has to be reconstructed online from noisy measurements. Hence, estimation and coverage need to be performed at the same time. We cast the problem in a Bayesian regression framework, where the sensory function is seen as a Gaussian random field. Then, we design a set of control inputs which try to balance coverage and estimation well, also discussing convergence properties of the algorithm. Numerical experiments show the effectiveness of the new approach.
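The centroidal Voronoi partitions mentioned in this abstract are classically computed with Lloyd's iteration. The sketch below shows that iteration on the interval [0, 1] under a strong simplifying assumption: the sensory function is known and passed in as `density`, whereas the paper has to estimate it online. The function name and the grid discretisation are hypothetical choices for illustration.

```python
def lloyd_1d(agents, density, grid_size=1000, iterations=100):
    """Lloyd's iteration for agents on the interval [0, 1].

    Each grid point is assigned to its nearest agent (its Voronoi cell),
    then every agent moves to the density-weighted centroid of its cell.
    """
    grid = [(i + 0.5) / grid_size for i in range(grid_size)]
    weights = [density(x) for x in grid]
    positions = list(agents)
    for _ in range(iterations):
        mass = [0.0] * len(positions)
        moment = [0.0] * len(positions)
        for x, w in zip(grid, weights):
            owner = min(range(len(positions)), key=lambda k: abs(x - positions[k]))
            mass[owner] += w
            moment[owner] += w * x
        # An agent whose cell carries no mass stays where it is.
        positions = [
            moment[k] / mass[k] if mass[k] > 0.0 else positions[k]
            for k in range(len(positions))
        ]
    return positions


# Two agents under a uniform sensory function settle near 0.25 and 0.75.
uniform_result = lloyd_1d([0.1, 0.2], lambda x: 1.0)
```

In the adaptive setting of the abstract, `density` would be replaced at each step by the current regression estimate of the sensory function, which is where the trade-off between estimation and coverage arises.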
