Barbara Russo

Modeling Resilience of Collaborative AI Systems

Jan 23, 2024

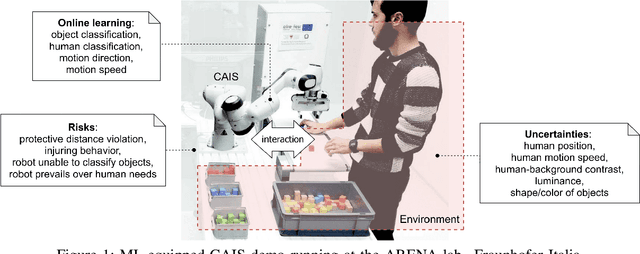

Abstract: A Collaborative Artificial Intelligence System (CAIS) performs actions in collaboration with the human to achieve a common goal. CAISs can use a trained AI model to control human-system interaction, or they can use human interaction to dynamically learn from humans in an online fashion. In online learning with human feedback, the AI model evolves by monitoring human interaction through the system sensors in the learning state, and actuates the autonomous components of the CAIS based on the learning in the operational state. Therefore, any disruptive event affecting these sensors may affect the AI model's ability to make accurate decisions and degrade the CAIS performance. Consequently, it is of paramount importance for CAIS managers to be able to automatically track the system performance to understand the resilience of the CAIS upon such disruptive events. In this paper, we provide a new framework to model CAIS performance when the system experiences a disruptive event. With our framework, we introduce a model of performance evolution of CAIS. The model is equipped with a set of measures that aim to support CAIS managers in the decision process to achieve the required resilience of the system. We tested our framework on a real-world case study of a robot collaborating online with the human, when the system is experiencing a disruptive event. The case study shows that our framework can be adopted in CAIS and integrated into the online execution of the CAIS activities.

CAIS-DMA: A Decision-Making Assistant for Collaborative AI Systems

Nov 08, 2023

Abstract: A Collaborative Artificial Intelligence System (CAIS) is a cyber-physical system that learns actions in collaboration with humans in a shared environment to achieve a common goal. In particular, a CAIS is equipped with an AI model to support the decision-making process of this collaboration. When an event degrades the performance of CAIS (i.e., a disruptive event), this decision-making process may be hampered or even stopped. Thus, it is of paramount importance to monitor the learning of the AI model, and eventually support its decision-making process in such circumstances. This paper introduces a new methodology to automatically support the decision-making process in CAIS when the system experiences performance degradation after a disruptive event. To this aim, we develop a framework that consists of three components: one manages or simulates CAIS's environment and disruptive events, the second automates the decision-making process, and the third provides a visual analysis of CAIS behavior. Overall, our framework automatically monitors the decision-making process, intervenes whenever a performance degradation occurs, and recommends the next action. We demonstrate our framework by implementing an example with a real-world collaborative robot, where the framework recommends the next action that balances between minimizing the recovery time (i.e., resilience), and minimizing the energy adverse effects (i.e., greenness).

GResilience: Trading Off Between the Greenness and the Resilience of Collaborative AI Systems

Nov 08, 2023

Abstract: A Collaborative Artificial Intelligence System (CAIS) works with humans in a shared environment to achieve a common goal. To recover from a disruptive event that degrades its performance, and thereby ensure its resilience, a CAIS may need to perform a set of actions carried out by the system, by the humans, or by both collaboratively. As for any other system, recovery actions may cause energy adverse effects due to the additional required energy. Therefore, it is of paramount importance to understand which of the above actions can better trade off between resilience and greenness. In this in-progress work, we propose an approach to automatically evaluate CAIS recovery actions for their ability to trade off between the resilience and greenness of the system. We have also designed an experiment protocol and its application to a real CAIS demonstrator. Our approach aims to attack the problem from two perspectives: as a one-agent decision problem through optimization, which takes the decision based on the score of resilience and greenness, and as a two-agent decision problem through game theory, which takes the decision based on the payoff computed for resilience and greenness as two players of a cooperative game.

Collaborative AI Needs Stronger Assurances Driven by Risks

Dec 01, 2021

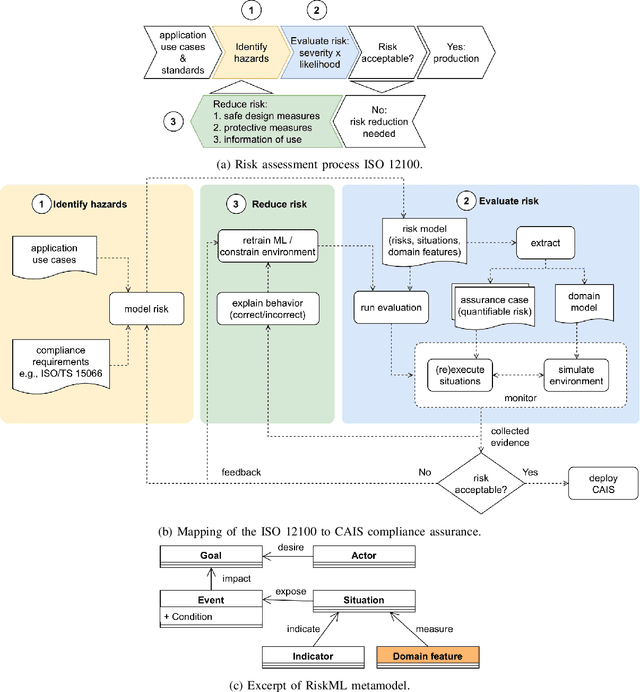

Abstract: Collaborative AI systems (CAISs) aim at working together with humans in a shared space to achieve a common goal. This critical setting yields hazardous circumstances that could harm human beings. Thus, building such systems with strong assurances of compliance with requirements, domain-specific standards, and regulations is of the greatest importance. Only limited impact at scale has been reported so far for such systems, since much work remains to manage possible risks. We identify emerging problems in this context and then report our vision, as well as the progress of our multidisciplinary research team, composed of software/systems and mechatronics engineers, to develop a risk-driven assurance process for CAISs.

Towards Risk Modeling for Collaborative AI

Mar 12, 2021

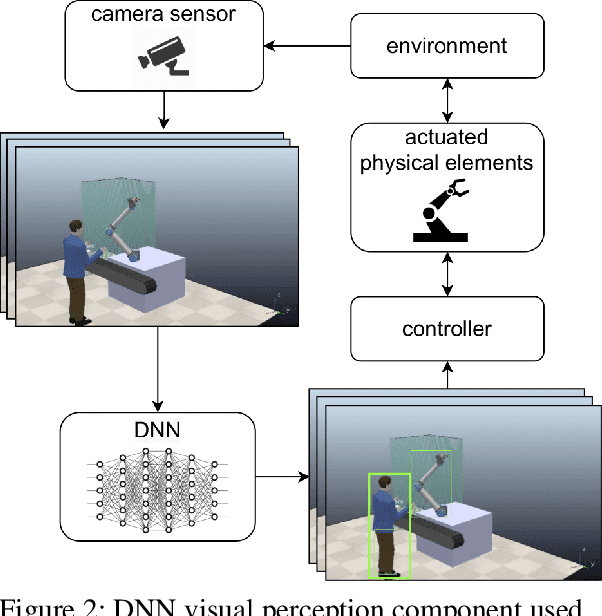

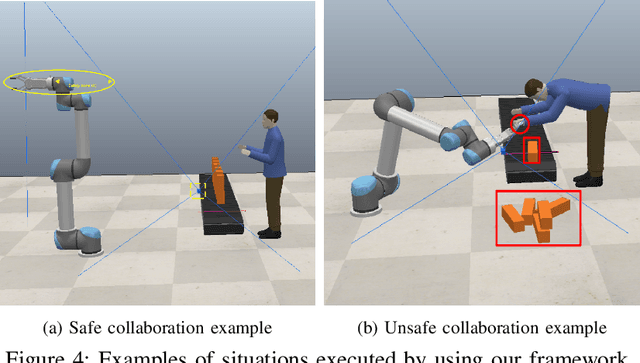

Abstract: Collaborative AI systems aim at working together with humans in a shared space to achieve a common goal. This setting imposes potentially hazardous circumstances due to contacts that could harm human beings. Thus, building such systems with strong assurances of compliance with requirements, domain-specific standards, and regulations is of the greatest importance. Challenges associated with the achievement of this goal become even more severe when such systems rely on machine learning components rather than on, for example, top-down rule-based AI. In this paper, we introduce a risk modeling approach tailored to Collaborative AI systems. The risk model includes goals, risk events, and domain-specific indicators that potentially expose humans to hazards. The risk model is then leveraged to drive assurance methods that, in turn, feed the risk model through insights extracted from run-time evidence. Our envisioned approach is described by means of a running example in the domain of Industry 4.0, where a robotic arm endowed with a visual perception component, implemented with machine learning, collaborates with a human operator for a production-relevant task.
