Jubril Gbolahan Adigun

Streamlining Attack Tree Generation: A Fragment-Based Approach

Oct 01, 2023

Abstract: Attack graphs are a tool for analyzing security vulnerabilities that capture different and prospective attacks on a system. As a threat modeling tool, they show the possible paths that an attacker can exploit to achieve a particular goal. However, because a large number of vulnerabilities are published daily, attack graphs can rapidly expand in size, and generating them consequently requires a significant amount of resources. In addition, generating composite attack models for complex systems such as self-adaptive or AI systems is very difficult because such systems change continuously. In this paper, we present a novel fragment-based attack graph generation approach that utilizes information from publicly available information security databases. We also propose a domain-specific language for attack modeling, which we employ in the proposed attack graph generation approach. Finally, we present a demonstrator example showcasing the attack generator's capability to replicate a verified attack chain previously confirmed by security experts.

Metamorphic Testing in Autonomous System Simulations

Sep 22, 2022

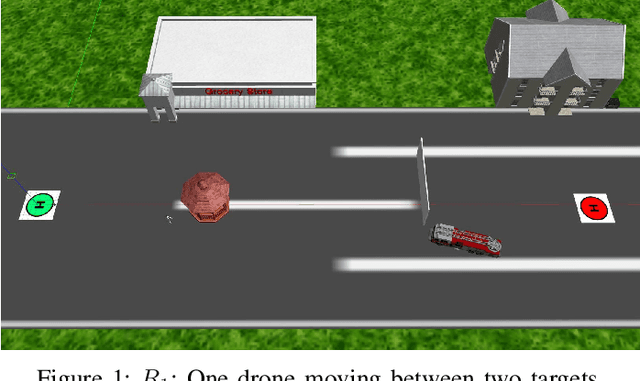

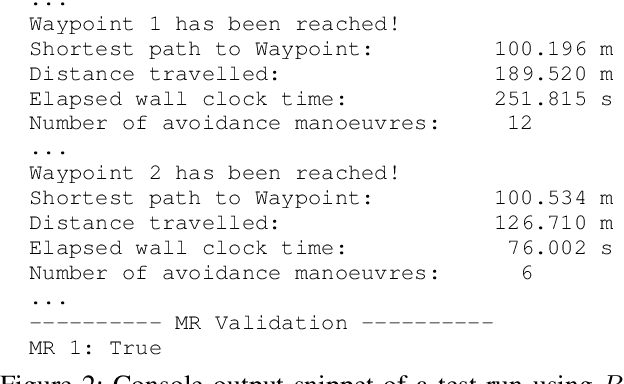

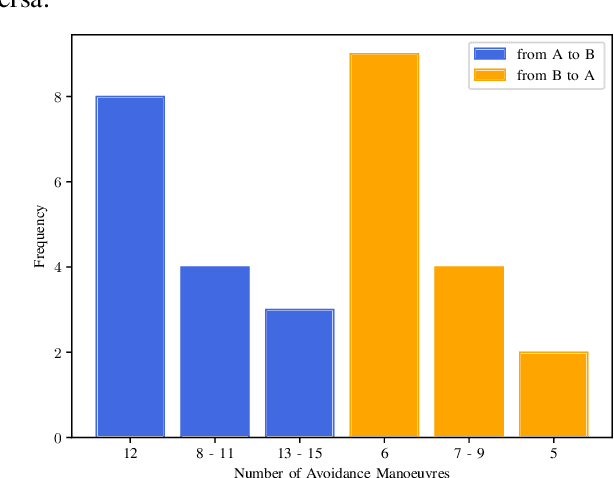

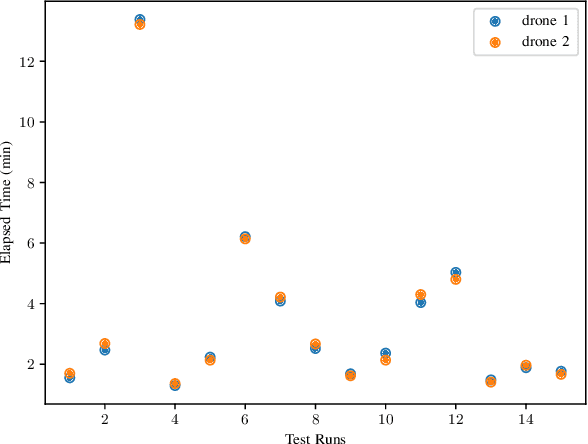

Abstract: Metamorphic testing has proven to be effective for test case generation and fault detection in many domains. It is a software testing strategy that uses certain relations between input-output pairs of a program, referred to as metamorphic relations. This approach is relevant in the autonomous systems domain because it helps in cases where the expected outcome of a given test input is difficult to determine. In this paper, we therefore provide an overview of metamorphic testing as well as an implementation in the autonomous systems domain. We implement an obstacle detection and avoidance task for autonomous drones using the GNC API alongside a simulation in Gazebo. In particular, we describe properties and best practices that are crucial for the development of effective metamorphic relations. We also demonstrate two metamorphic relations, one for testing a single drone and one for testing multiple drones. Our relations reveal several properties and some weak spots of both the implementation and the avoidance algorithm in the light of metamorphic testing. The results indicate that metamorphic testing has great potential in the autonomous systems domain and should be considered for quality assurance in this field.
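To make the idea of a metamorphic relation concrete, the following is a minimal sketch and not the relations or code from the paper. It assumes a hypothetical toy planner, `avoidance_turn`, that stands in for an obstacle avoidance routine; it does not use the GNC API or Gazebo. The assumed relation is translation invariance: shifting the drone and the obstacle by the same offset should not change the avoidance decision, so the test needs no knowledge of the "correct" decision for any single input.

```python
import math


def avoidance_turn(drone_xy, heading_deg, obstacle_xy, safe_dist=2.0):
    """Hypothetical toy planner: return 'left', 'right' or 'straight'."""
    dx = obstacle_xy[0] - drone_xy[0]
    dy = obstacle_xy[1] - drone_xy[1]
    # Obstacles farther away than the safety distance are ignored.
    if math.hypot(dx, dy) > safe_dist:
        return "straight"
    # Bearing of the obstacle relative to the heading, normalised to [-180, 180).
    bearing = math.degrees(math.atan2(dy, dx)) - heading_deg
    bearing = (bearing + 180.0) % 360.0 - 180.0
    # Obstacle on the left (non-negative bearing): steer right, and vice versa.
    return "right" if bearing >= 0 else "left"


def mr_translation_holds(drone_xy, heading_deg, obstacle_xy, offset):
    """Assumed metamorphic relation: translating the whole scene by `offset`
    must leave the planner's decision unchanged."""
    source = avoidance_turn(drone_xy, heading_deg, obstacle_xy)
    moved_drone = (drone_xy[0] + offset[0], drone_xy[1] + offset[1])
    moved_obstacle = (obstacle_xy[0] + offset[0], obstacle_xy[1] + offset[1])
    follow_up = avoidance_turn(moved_drone, heading_deg, moved_obstacle)
    return source == follow_up


if __name__ == "__main__":
    # Source test case and its translated follow-up case.
    print(mr_translation_holds((0.0, 0.0), 0.0, (1.0, 1.0), (10.0, -5.0)))
```

A violation of such a relation signals a fault without requiring an oracle for the individual outputs, which is the property that makes the technique attractive when expected outcomes are hard to determine.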

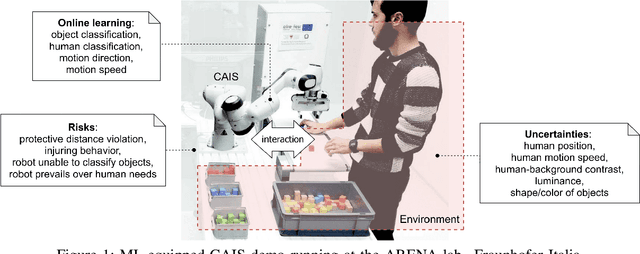

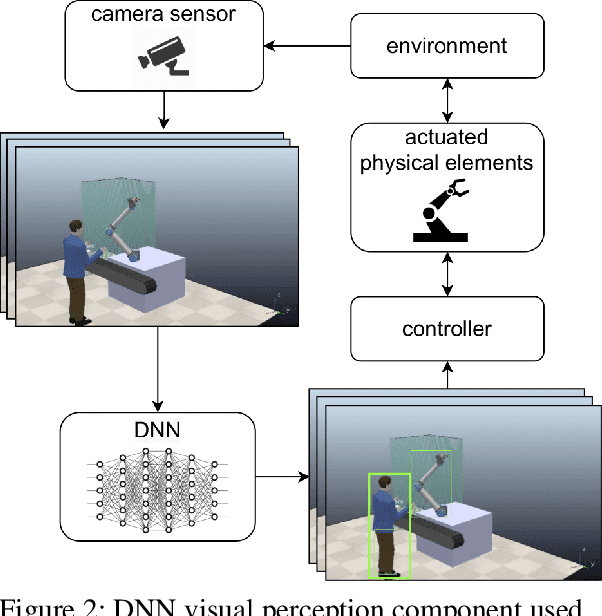

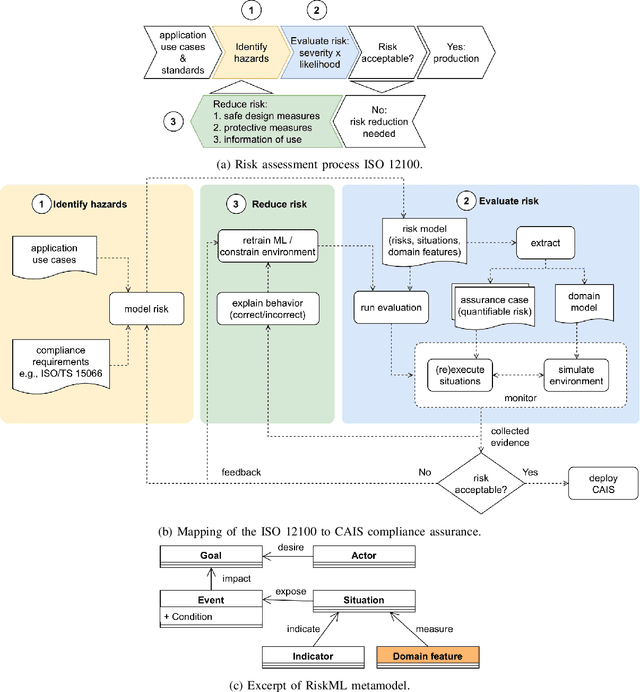

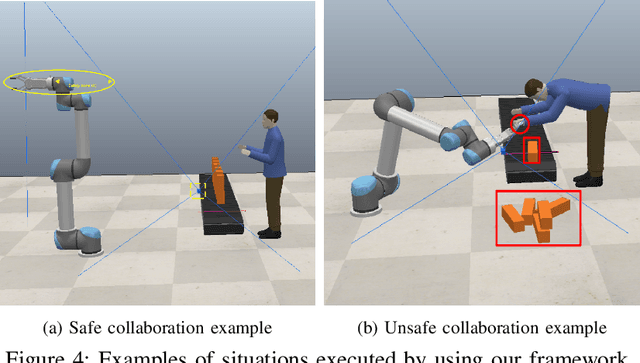

Collaborative AI Needs Stronger Assurances Driven by Risks

Dec 01, 2021

Abstract: Collaborative AI systems (CAISs) aim at working together with humans in a shared space to achieve a common goal. This critical setting yields hazardous circumstances that could harm human beings. Thus, building such systems with strong assurances of compliance with requirements, domain-specific standards and regulations is of the greatest importance. Only limited impact at scale has been reported so far for such systems, since much work remains to manage possible risks. We identify emerging problems in this context and then report our vision, as well as the progress of our multidisciplinary research team, composed of software/systems and mechatronics engineers, in developing a risk-driven assurance process for CAISs.
