Antonio Liotta

Sparse Self-Federated Learning for Energy Efficient Cooperative Intelligence in Society 5.0

Jul 10, 2025

Abstract:Federated Learning offers privacy-preserving collaborative intelligence but struggles to meet the sustainability demands of emerging IoT ecosystems necessary for Society 5.0, a human-centered technological future balancing social advancement with environmental responsibility. The excessive communication bandwidth and computational resources required by traditional FL approaches make them environmentally unsustainable at scale, creating a fundamental conflict with green AI principles as billions of resource-constrained devices attempt to participate. To this end, we introduce Sparse Proximity-based Self-Federated Learning (SParSeFuL), a resource-aware approach that bridges this gap by combining aggregate computing for self-organization with neural network sparsification to reduce energy and bandwidth consumption.
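The abstract does not say which sparsification scheme SParSeFuL uses. As a rough illustration of how sparsification can cut bandwidth, the sketch below assumes plain magnitude pruning on a flat weight list and sends only the surviving entries as (index, value) pairs. The function names and the `keep_fraction` parameter are hypothetical and not taken from the paper.

```python
def sparsify_by_magnitude(weights, keep_fraction):
    """Zero all but the largest-magnitude entries of a flat weight list.

    Assumption: magnitude pruning stands in for whatever sparsification
    the paper actually applies.
    """
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    keep = max(1, round(len(weights) * keep_fraction))
    # Indices of the `keep` largest-magnitude weights.
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    kept = set(ranked[:keep])
    return [w if i in kept else 0.0 for i, w in enumerate(weights)]


def to_sparse_message(weights):
    """Encode only the nonzero entries as (index, value) pairs for transmission."""
    return [(i, w) for i, w in enumerate(weights) if w != 0.0]


if __name__ == "__main__":
    dense = [0.1, -0.9, 0.05, 0.4, -0.02, 0.7]
    sparse = sparsify_by_magnitude(dense, keep_fraction=0.5)
    print(sparse)
    print(to_sparse_message(sparse))
```

With half of the weights dropped, a device would transmit three pairs instead of six values; whether that is a net saving in practice depends on how indices are encoded.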

PATS: Proficiency-Aware Temporal Sampling for Multi-View Sports Skill Assessment

Jun 05, 2025

Abstract:Automated sports skill assessment requires capturing fundamental movement patterns that distinguish expert from novice performance, yet current video sampling methods disrupt the temporal continuity essential for proficiency evaluation. To this end, we introduce Proficiency-Aware Temporal Sampling (PATS), a novel sampling strategy that preserves complete fundamental movements within continuous temporal segments for multi-view skill assessment. PATS adaptively segments videos to ensure each analyzed portion contains full execution of critical performance components, repeating this process across multiple segments to maximize information coverage while maintaining temporal coherence. Evaluated on the EgoExo4D benchmark with SkillFormer, PATS surpasses the state-of-the-art accuracy across all viewing configurations (+0.65% to +3.05%) and delivers substantial gains in challenging domains (+26.22% bouldering, +2.39% music, +1.13% basketball). Systematic analysis reveals that PATS successfully adapts to diverse activity characteristics, from high-frequency sampling for dynamic sports to fine-grained segmentation for sequential skills, demonstrating its effectiveness as an adaptive approach to temporal sampling that advances automated skill assessment for real-world applications.
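To make the idea of sampling continuous temporal segments concrete, here is a minimal sketch that picks several windows of consecutive frame indices spread across a video. It is not the PATS algorithm: the adaptive, proficiency-aware choice of segment length and count is replaced by fixed, hypothetical parameters (`num_segments`, `frames_per_segment`).

```python
def contiguous_segment_indices(num_frames, num_segments, frames_per_segment):
    """Pick `num_segments` non-overlapping windows of consecutive frame indices.

    Assumption: fixed-size windows placed evenly over the video; the real
    method adapts segmentation to the activity.
    """
    if num_segments * frames_per_segment > num_frames:
        raise ValueError("video too short for the requested segments")
    # Frames left over once all windows are placed; spread them as gaps.
    slack = num_frames - num_segments * frames_per_segment
    segments = []
    for s in range(num_segments):
        # Distribute the slack before, between, and after the windows.
        start = s * frames_per_segment + (slack * (s + 1)) // (num_segments + 1)
        segments.append(list(range(start, start + frames_per_segment)))
    return segments


if __name__ == "__main__":
    for segment in contiguous_segment_indices(100, 2, 4):
        print(segment)
```

Unlike uniform sampling, which would take isolated frames at a constant stride, each window here keeps neighbouring frames together, so a short movement is seen without gaps.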

SkillFormer: Unified Multi-View Video Understanding for Proficiency Estimation

May 13, 2025

Abstract:Assessing human skill levels in complex activities is a challenging problem with applications in sports, rehabilitation, and training. In this work, we present SkillFormer, a parameter-efficient architecture for unified multi-view proficiency estimation from egocentric and exocentric videos. Building on the TimeSformer backbone, SkillFormer introduces a CrossViewFusion module that fuses view-specific features using multi-head cross-attention, learnable gating, and adaptive self-calibration. We leverage Low-Rank Adaptation to fine-tune only a small subset of parameters, significantly reducing training costs. In fact, when evaluated on the EgoExo4D dataset, SkillFormer achieves state-of-the-art accuracy in multi-view settings while demonstrating remarkable computational efficiency, using 4.5x fewer parameters and requiring 3.75x fewer training epochs than prior baselines. It excels in multiple structured tasks, confirming the value of multi-view integration for fine-grained skill assessment.
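Why Low-Rank Adaptation trains so few parameters can be seen from simple arithmetic: for a frozen weight matrix of shape `d_out x d_in`, a rank-`r` update is factored into two matrices of shapes `d_out x r` and `r x d_in`. The sketch below only counts parameters; the layer size and rank are illustrative assumptions, not SkillFormer's actual configuration.

```python
def full_params(d_in, d_out):
    """Parameters in a dense d_out x d_in weight matrix (bias ignored)."""
    return d_in * d_out


def lora_trainable_params(d_in, d_out, rank):
    """Trainable parameters of a rank-`rank` update B @ A,
    with B of shape (d_out, rank) and A of shape (rank, d_in)."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    # Hypothetical layer size and rank, chosen only for illustration.
    d_in, d_out, rank = 768, 768, 8
    dense = full_params(d_in, d_out)
    low_rank = lora_trainable_params(d_in, d_out, rank)
    print(dense, low_rank, dense / low_rank)
```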

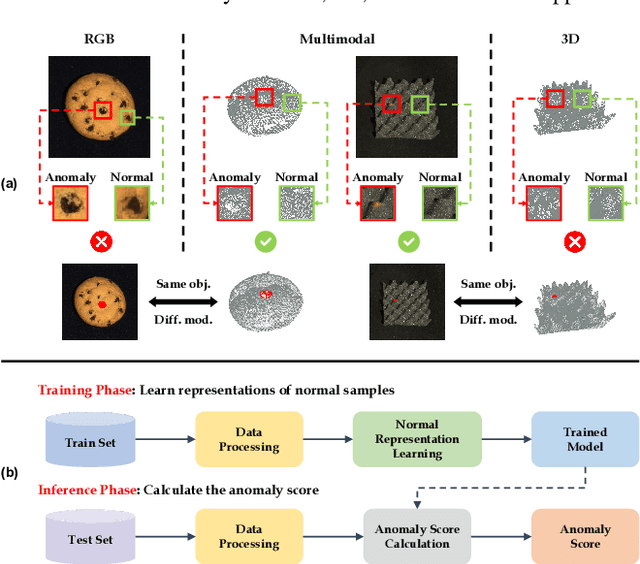

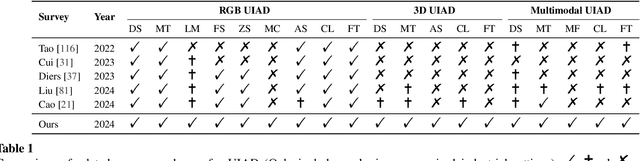

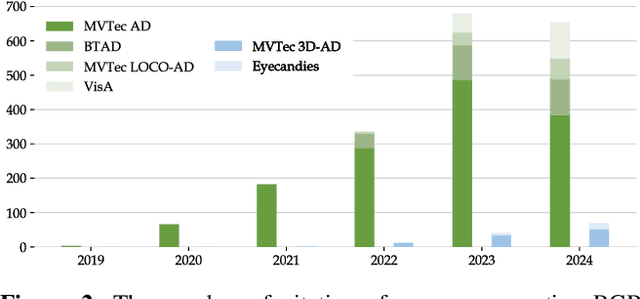

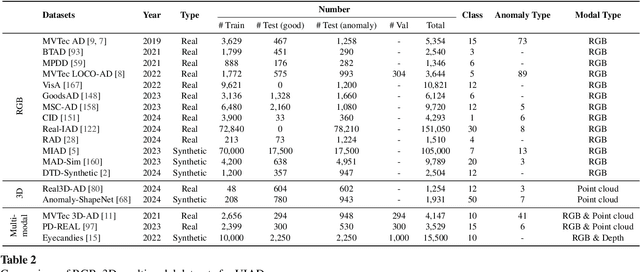

A Survey on RGB, 3D, and Multimodal Approaches for Unsupervised Industrial Anomaly Detection

Oct 29, 2024

Abstract:In the advancement of industrial informatization, Unsupervised Industrial Anomaly Detection (UIAD) technology effectively overcomes the scarcity of abnormal samples and significantly enhances the automation and reliability of smart manufacturing. While RGB, 3D, and multimodal anomaly detection have demonstrated comprehensive and robust capabilities within the industrial informatization sector, existing reviews on industrial anomaly detection have not sufficiently classified and discussed methods in 3D and multimodal settings. We focus on 3D UIAD and multimodal UIAD, providing a comprehensive summary of unsupervised industrial anomaly detection in three modal settings. Firstly, we compare our survey with recent works and introduce commonly used datasets, evaluation metrics, and the definitions of anomaly detection problems. Secondly, we summarize five research paradigms in RGB, 3D and multimodal UIAD and three emerging industrial manufacturing optimization directions in RGB UIAD, and review three multimodal feature fusion strategies in multimodal settings. Finally, we outline the primary challenges currently faced by UIAD in three modal settings, and offer insights into future development directions, aiming to provide researchers with a thorough reference and offer new perspectives for the advancement of industrial informatization. Corresponding resources are available at https://github.com/Sunny5250/Awesome-Multi-Setting-UIAD.

Modeling Resilience of Collaborative AI Systems

Jan 23, 2024

Abstract:A Collaborative Artificial Intelligence System (CAIS) performs actions in collaboration with the human to achieve a common goal. CAISs can use a trained AI model to control human-system interaction, or they can use human interaction to dynamically learn from humans in an online fashion. In online learning with human feedback, the AI model evolves by monitoring human interaction through the system sensors in the learning state, and actuates the autonomous components of the CAIS based on the learning in the operational state. Therefore, any disruptive event affecting these sensors may affect the AI model's ability to make accurate decisions and degrade the CAIS performance. Consequently, it is of paramount importance for CAIS managers to be able to automatically track the system performance to understand the resilience of the CAIS upon such disruptive events. In this paper, we provide a new framework to model CAIS performance when the system experiences a disruptive event. With our framework, we introduce a model of performance evolution of CAIS. The model is equipped with a set of measures that aim to support CAIS managers in the decision process to achieve the required resilience of the system. We tested our framework on a real-world case study of a robot collaborating online with the human, when the system is experiencing a disruptive event. The case study shows that our framework can be adopted in CAIS and integrated into the online execution of the CAIS activities.

GResilience: Trading Off Between the Greenness and the Resilience of Collaborative AI Systems

Nov 08, 2023

Abstract:A Collaborative Artificial Intelligence System (CAIS) works with humans in a shared environment to achieve a common goal. To recover from a disruptive event that degrades its performance, and thus to ensure its resilience, a CAIS may need to perform a set of actions carried out by the system, by the humans, or collaboratively by both. As for any other system, recovery actions may have adverse energy effects due to the additional energy they require. Therefore, it is of paramount importance to understand which of the above actions best trades off resilience against greenness. In this in-progress work, we propose an approach to automatically evaluate CAIS recovery actions for their ability to trade off between the resilience and greenness of the system. We have also designed an experiment protocol and its application to a real CAIS demonstrator. Our approach aims to attack the problem from two perspectives: as a one-agent decision problem through optimization, which takes the decision based on the score of resilience and greenness, and as a two-agent decision problem through game theory, which takes the decision based on the payoff computed for resilience and greenness as two players of a cooperative game.
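The one-agent view can be sketched as choosing the recovery action with the best combined score. The abstract does not give the scoring function, so the example below assumes a simple weighted sum of a resilience score and a greenness score, both normalised to [0, 1]. The action names, scores and `resilience_weight` are hypothetical.

```python
def best_recovery_action(actions, resilience_weight=0.5):
    """Return the action name with the highest weighted score.

    `actions` maps a name to (resilience_score, greenness_score).
    Assumption: a weighted sum stands in for the paper's actual objective.
    """
    if not 0.0 <= resilience_weight <= 1.0:
        raise ValueError("resilience_weight must be in [0, 1]")

    def score(item):
        _, (resilience, greenness) = item
        return resilience_weight * resilience + (1.0 - resilience_weight) * greenness

    return max(actions.items(), key=score)[0]


if __name__ == "__main__":
    # Made-up scores for three kinds of recovery action.
    candidates = {
        "system": (0.9, 0.3),
        "human": (0.6, 0.8),
        "joint": (0.8, 0.5),
    }
    print(best_recovery_action(candidates, resilience_weight=0.5))
    print(best_recovery_action(candidates, resilience_weight=0.9))
```

Shifting the weight toward resilience changes which action wins, which is the trade-off the abstract describes. The two-agent, game-theoretic formulation is not covered by this sketch.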

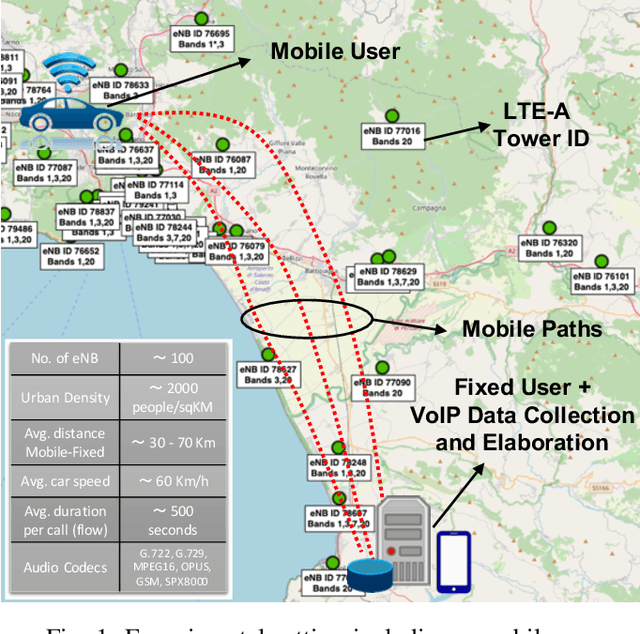

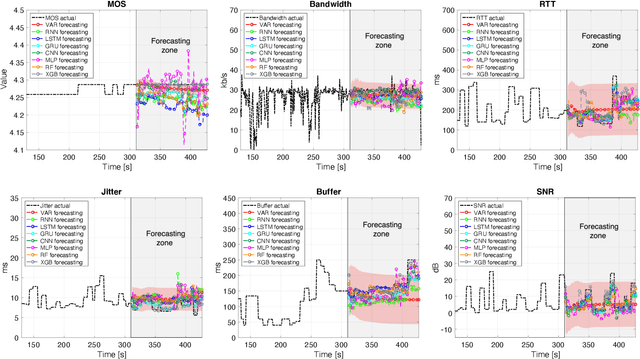

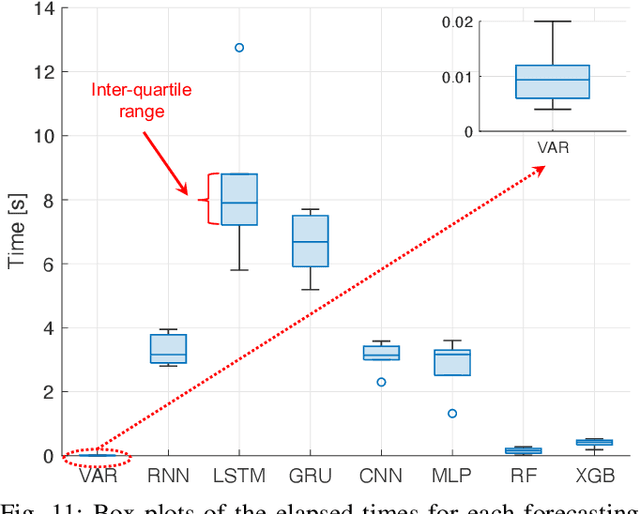

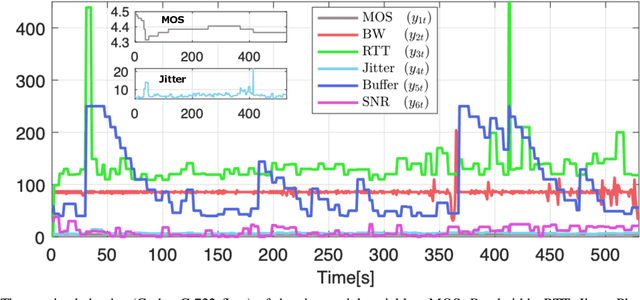

Multivariate Time Series characterization and forecasting of VoIP traffic in real mobile networks

Jul 13, 2023

Abstract:Predicting the behavior of real-time traffic (e.g., VoIP) in mobility scenarios could help operators to better plan their network infrastructures and to optimize the allocation of resources. Accordingly, in this work the authors propose a forecasting analysis of crucial QoS/QoE descriptors (some of which are neglected in the technical literature) of VoIP traffic in a real mobile environment. The problem is formulated in terms of a multivariate time series analysis. Such a formalization makes it possible to discover and model the temporal relationships among various descriptors and to forecast their behaviors for future periods. Techniques such as Vector Autoregressive models and machine learning (deep-based and tree-based) approaches are employed and compared in terms of performance and time complexity, by reframing the multivariate time series problem into a supervised learning one. Moreover, a series of auxiliary analyses (stationarity, orthogonal impulse responses, etc.) are performed to discover the analytical structure of the time series and to provide deep insights about their relationships. The whole theoretical analysis has an experimental counterpart, since a set of trials across a real-world LTE-Advanced environment has been performed to collect, post-process and analyze about 600,000 voice packets, organized per flow and differentiated per codec.
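Reframing a multivariate time series as a supervised learning problem is commonly done with a sliding window: the previous `n_lags` observations of all variables become the input features, and the observation `horizon` steps ahead becomes the target. The sketch below shows that transformation on toy data; the function name, window sizes and values are assumptions, not the authors' pipeline.

```python
def make_supervised(series, n_lags, horizon=1):
    """Turn a multivariate series into (X, y) pairs with a sliding window.

    `series` is a list of rows, one row of variables per time step.
    Each X row is the flattened last `n_lags` rows; each y row is the row
    `horizon` steps after the end of the window.
    """
    if n_lags < 1 or horizon < 1:
        raise ValueError("n_lags and horizon must be positive")
    X, y = [], []
    for t in range(n_lags, len(series) - horizon + 1):
        window = series[t - n_lags:t]
        X.append([value for row in window for value in row])
        y.append(list(series[t + horizon - 1]))
    return X, y


if __name__ == "__main__":
    # Toy series with two variables per time step (values are made up).
    toy = [[1, 10], [2, 20], [3, 30], [4, 40]]
    features, targets = make_supervised(toy, n_lags=2)
    for row, target in zip(features, targets):
        print(row, "->", target)
```

Any regressor that accepts tabular input, such as a tree-based or neural model, can then be trained on `X` and `y`.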

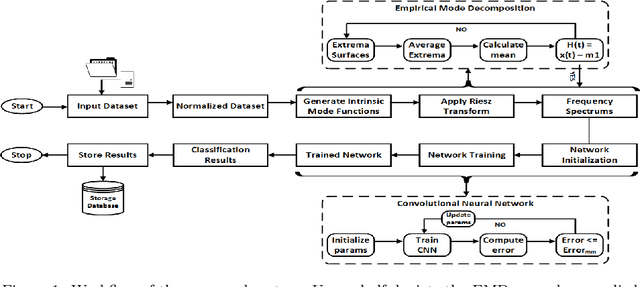

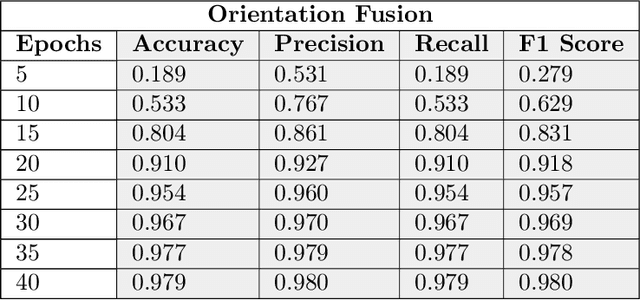

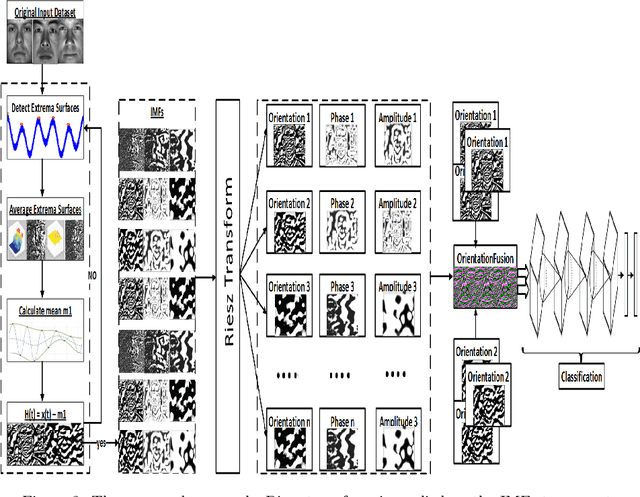

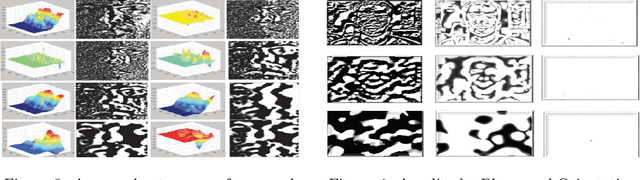

Cloud based Scalable Object Recognition from Video Streams using Orientation Fusion and Convolutional Neural Networks

Jun 19, 2021

Abstract:Object recognition from live video streams comes with numerous challenges such as the variation in illumination conditions and poses. Convolutional neural networks (CNNs) have been widely used to perform intelligent visual object recognition. Yet, CNNs still suffer from severe accuracy degradation, particularly on illumination-variant datasets. To address this problem, we propose a new CNN method based on orientation fusion for visual object recognition. The proposed cloud-based video analytics system pioneers the use of bi-dimensional empirical mode decomposition to split a video frame into intrinsic mode functions (IMFs). We further propose subjecting these IMFs to the Riesz transform to produce monogenic object components, which are in turn used for the training of CNNs. Past works have demonstrated how the object orientation component may be used to pursue accuracy levels as high as 93%. Herein we demonstrate how a feature-fusion strategy of the orientation components leads to further improving visual recognition accuracy to 97%. We also assess the scalability of our method, looking at both the number and the size of the video streams under scrutiny. We carry out extensive experimentation on the publicly available Yale dataset, as well as on a self-generated video dataset, finding significant improvements (both in accuracy and scale) in comparison to AlexNet, LeNet and SE-ResNeXt, which are the three most commonly used deep learning models for visual object recognition and classification.

Supervised Feature Selection Techniques in Network Intrusion Detection: a Critical Review

Apr 11, 2021

Abstract:Machine Learning (ML) techniques are becoming an invaluable support for network intrusion detection, especially in revealing anomalous flows, which often hide cyber-threats. Typically, ML algorithms are exploited to classify/recognize data traffic on the basis of statistical features such as inter-arrival times, packet length distribution, mean number of flows, etc. Dealing with the vast diversity and number of features that typically characterize data traffic is a hard problem. This results in the following issues: i) the presence of so many features leads to lengthy training processes (particularly when features are highly correlated), while prediction accuracy does not proportionally improve; ii) some of the features may introduce bias during the classification process, particularly those that have scarce relation with the data traffic to be classified. To this end, by reducing the feature space and retaining only the most significant features, Feature Selection (FS) becomes a crucial pre-processing step in network management and, specifically, for the purposes of network intrusion detection. In this review paper, we complement other surveys in multiple ways: i) evaluating more recent datasets (updated w.r.t. the obsolete KDD 99) by means of a designed-from-scratch Python-based procedure; ii) providing a synopsis of the most credited FS approaches in the field of intrusion detection, including Multi-Objective Evolutionary techniques; iii) assessing various experimental analyses such as feature correlation, time complexity, and performance. Our comparisons offer useful guidelines to network/security managers who are considering the incorporation of ML concepts into network intrusion detection, where trade-offs between performance and resource consumption are crucial.
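The remark about highly correlated features can be illustrated with a basic redundancy filter: keep a feature only if its absolute Pearson correlation with every feature already kept stays below a threshold. This is a generic filter used here as an assumption; it is not claimed to be one of the FS techniques the review evaluates, and the feature names and values are made up.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation of two equal-length numeric lists (0.0 if constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / sqrt(var_a * var_b)


def drop_correlated(features, threshold=0.95):
    """Return feature names in order, skipping any feature whose absolute
    correlation with an already kept feature reaches `threshold`."""
    kept = []
    for name, column in features.items():
        if all(abs(pearson(column, features[k])) < threshold for k in kept):
            kept.append(name)
    return kept


if __name__ == "__main__":
    # Hypothetical flow features; the second is a scaled copy of the first.
    flows = {
        "pkt_len": [1, 2, 3, 4],
        "pkt_len_x2": [2, 4, 6, 8],
        "inter_arrival": [5, 1, 4, 2],
    }
    print(drop_correlated(flows))
```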

Experimental Review of Neural-based approaches for Network Intrusion Management

Sep 18, 2020

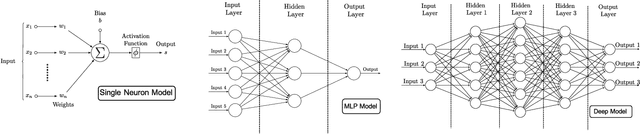

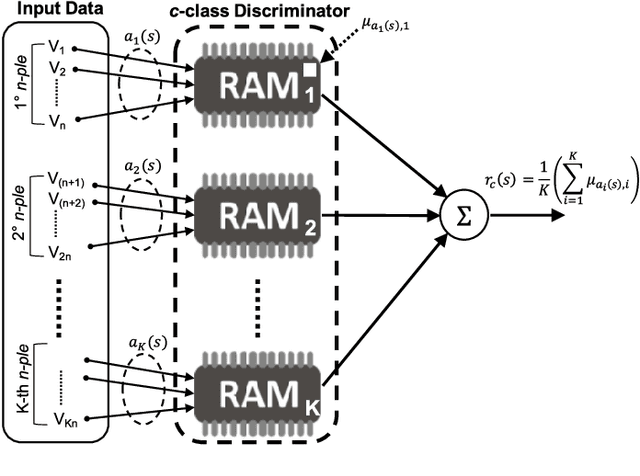

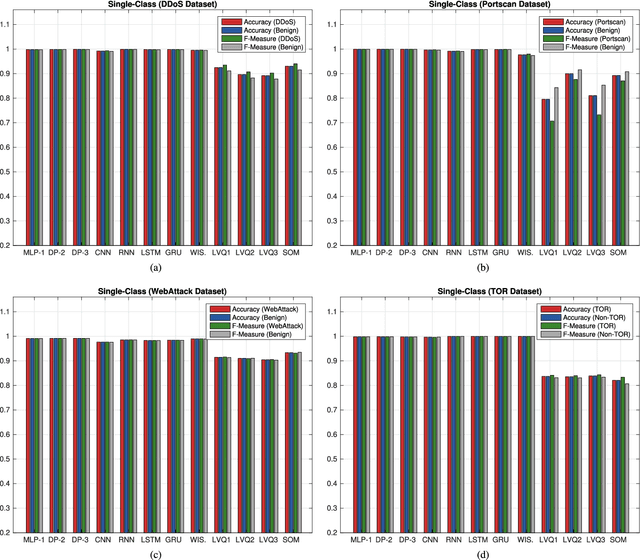

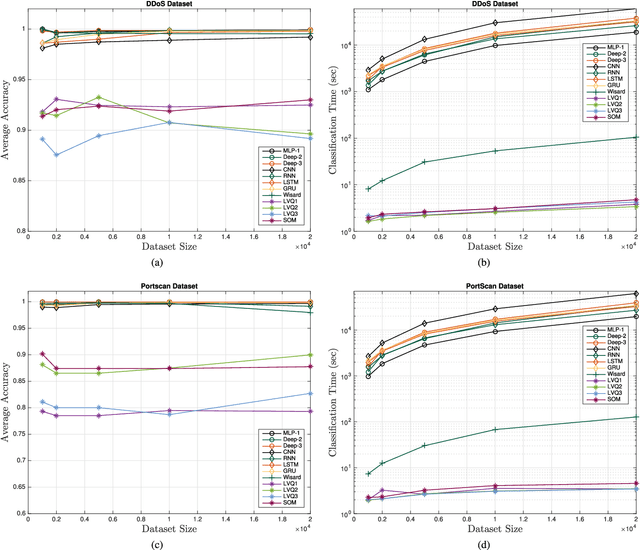

Abstract:The use of Machine Learning (ML) techniques in Intrusion Detection Systems (IDS) has taken a prominent role in the network security management field, due to the substantial number of sophisticated attacks that often pass undetected through classic IDSs. These are typically aimed at recognising attacks based on a specific signature, or at detecting anomalous events. However, deterministic, rule-based methods often fail to differentiate particular (rarer) network conditions (as in peak traffic during specific network situations) from actual cyber attacks. In this paper we provide an experimental-based review of neural-based methods applied to intrusion detection issues. Specifically, we i) offer a complete view of the most prominent neural-based techniques relevant to intrusion detection, including deep-based approaches or weightless neural networks, which feature surprising outcomes; ii) evaluate novel datasets (updated w.r.t. the obsolete KDD99 set) through a designed-from-scratch Python-based routine; iii) perform experimental analyses including time complexity and performance (accuracy and F-measure), considering both single-class and multi-class problems, and identifying trade-offs between resource consumption and performance. Our evaluation quantifies the value of neural networks, particularly when state-of-the-art datasets are used to train the models. This leads to interesting guidelines for security managers and computer network practitioners who are looking at the incorporation of neural-based ML into IDS.
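For reference, the two performance measures named in the abstract, accuracy and F-measure, can be computed for a single positive class as below. The labels are toy values, and treating `1` as the attack class is an assumption made for the example.

```python
def accuracy_and_f1(y_true, y_pred, positive=1):
    """Return (accuracy, F1) for one positive class.

    F1 is the harmonic mean of precision and recall; both default to 0.0
    when their denominator is zero.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, f1


if __name__ == "__main__":
    # Toy labels: 1 = attack, 0 = benign (made-up values).
    truth = [1, 1, 0, 0, 1, 0]
    predicted = [1, 0, 0, 1, 1, 0]
    print(accuracy_and_f1(truth, predicted))
```

For multi-class problems, a common extension is to compute F1 per class and average the results.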
