Marcus Makowski

Department of Diagnostic and Interventional Radiology, Technical University of Munich, Munich, Germany

Parametric shape models for vessels learned from segmentations via differentiable voxelization

Jul 03, 2025

Abstract:Vessels are complex structures in the body that have been studied extensively in multiple representations. While voxelization is the most common of them, meshes and parametric models are critical in various applications due to their desirable properties. However, these representations are typically extracted through segmentations and used disjointly from each other. We propose a framework that joins the three representations under differentiable transformations. By leveraging differentiable voxelization, we automatically extract a parametric shape model of the vessels through shape-to-segmentation fitting, where we learn shape parameters from segmentations without the explicit need for ground-truth shape parameters. The vessel is parametrized as centerlines and radii using cubic B-splines, ensuring smoothness and continuity by construction. Meshes are differentiably extracted from the learned shape parameters, resulting in high-fidelity meshes that can be manipulated post-fit. Our method can accurately capture the geometry of complex vessels, as demonstrated by the volumetric fits in experiments on aortas, aneurysms, and brain vessels.
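To illustrate the parametrization mentioned in the abstract above, here is a minimal sketch of evaluating a uniform cubic B-spline whose control points carry a centerline position and a radius. It is not the authors' implementation: the function names, the uniform knot spacing, and the `(x, y, z, radius)` control-point layout are assumptions made for illustration only.

```python
from typing import List, Sequence, Tuple


def cubic_bspline_basis(t: float) -> Tuple[float, float, float, float]:
    """Blending weights of a uniform cubic B-spline for local parameter t in [0, 1]."""
    b0 = (1.0 - t) ** 3 / 6.0
    b1 = (3.0 * t**3 - 6.0 * t**2 + 4.0) / 6.0
    b2 = (-3.0 * t**3 + 3.0 * t**2 + 3.0 * t + 1.0) / 6.0
    b3 = t**3 / 6.0
    return b0, b1, b2, b3


def eval_vessel_segment(
    ctrl: Sequence[Sequence[float]], segment: int, t: float
) -> List[float]:
    """Evaluate one spline segment.

    Each control point is assumed to be (x, y, z, radius), so the centerline
    and the radius are interpolated by the same smooth basis.
    """
    pts = ctrl[segment:segment + 4]
    if len(pts) != 4:
        raise ValueError("a cubic segment needs four consecutive control points")
    weights = cubic_bspline_basis(t)
    return [sum(w * p[d] for w, p in zip(weights, pts)) for d in range(4)]


# Hypothetical straight vessel along the x-axis with a constant radius of 2.
control_points = [(float(i), 0.0, 0.0, 2.0) for i in range(5)]
sample = eval_vessel_segment(control_points, segment=0, t=0.5)
```

Because neighbouring segments share three control points, the end of one segment coincides with the start of the next, which is the "continuity by construction" property the abstract refers to.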

From Text to Image: Exploring GPT-4Vision's Potential in Advanced Radiological Analysis across Subspecialties

Nov 24, 2023

Abstract:The study evaluates and compares GPT-4 and GPT-4Vision for radiological tasks, suggesting GPT-4Vision may recognize radiological features from images, thereby enhancing its diagnostic potential over text-based descriptions.

Evaluation of GPT-4 for chest X-ray impression generation: A reader study on performance and perception

Nov 12, 2023

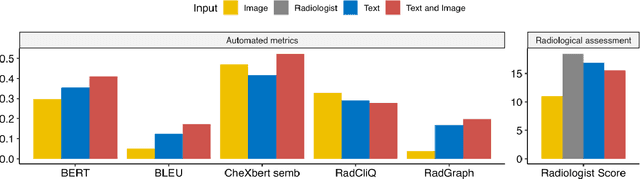

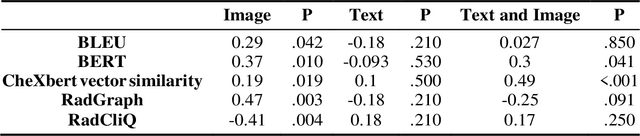

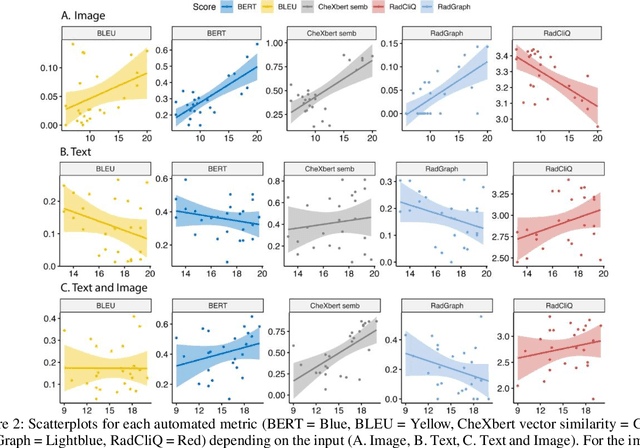

Abstract:The remarkable generative capabilities of multimodal foundation models are currently being explored for a variety of applications. Generating radiological impressions is a challenging task that could significantly reduce the workload of radiologists. In our study we explored and analyzed the generative abilities of GPT-4 for chest X-ray impression generation. To generate and evaluate impressions of chest X-rays based on different input modalities (image, text, text and image), a blinded radiological report was written for 25 cases of the publicly available NIH dataset. GPT-4 was given the image, the findings section, or both sequentially to generate an input-dependent impression. In a blind randomized reading, 4 radiologists rated the impressions and were asked to classify the impression origin (Human, AI), providing justification for their decision. Lastly, text model evaluation metrics and their correlation with the radiological score (summation of the 4 dimensions) were assessed. According to the radiological score, the human-written impression was rated highest, although not significantly different from text-based impressions. The automated evaluation metrics showed moderate to substantial correlations with the radiological score for the image impressions; however, individual scores were highly divergent among inputs, indicating insufficient representation of radiological quality. Detection of AI-generated impressions varied by input and was 61% for text-based impressions. Impressions classified as AI-generated had significantly worse radiological scores even when written by a radiologist, indicating potential bias. Our study revealed significant discrepancies between a radiological assessment and common automatic evaluation metrics depending on the model input. The detection of AI-generated findings is subject to a bias in which highly rated impressions are perceived as human-written.

Private, fair and accurate: Training large-scale, privacy-preserving AI models in radiology

Feb 03, 2023

Abstract:Artificial intelligence (AI) models are increasingly used in the medical domain. However, as medical data is highly sensitive, special precautions to ensure the protection of said data are required. The gold standard for privacy preservation is the introduction of differential privacy (DP) to model training. However, prior work has shown that DP has negative implications on model accuracy and fairness. Therefore, the purpose of this study is to demonstrate that the privacy-preserving training of AI models for chest radiograph diagnosis is possible with high accuracy and fairness compared to non-private training. N=193,311 high-quality clinical chest radiographs were retrospectively collected and manually labeled by experienced radiologists, who assigned one or more of the following diagnoses: cardiomegaly, congestion, pleural effusion, pneumonic infiltration and atelectasis, to each side (where applicable). The non-private AI models were compared with privacy-preserving (DP) models with respect to privacy-utility trade-offs (measured as area under the receiver-operator-characteristic curve (AUROC)), and privacy-fairness trade-offs (measured as Pearson-R or Statistical Parity Difference). The non-private AI model achieved an average AUROC score of 0.90 over all labels, whereas the DP AI model with a privacy budget of epsilon=7.89 resulted in an AUROC of 0.87, i.e., a mere 2.6% performance decrease compared to non-private training. The privacy-preserving training of diagnostic AI models can achieve high performance with a small penalty on model accuracy and does not amplify discrimination against age, sex or co-morbidity. We thus encourage practitioners to integrate state-of-the-art privacy-preserving techniques into medical AI model development.
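As a rough illustration of one fairness measure named in the abstract above, the following sketch computes a Statistical Parity Difference for binary predictions. The sign convention (positive-prediction rate of the chosen group minus that of everyone else), the function name, and the toy data are assumptions; the study's exact definition and grouping may differ.

```python
from typing import Hashable, Sequence


def statistical_parity_difference(
    preds: Sequence[int], groups: Sequence[Hashable], protected: Hashable
) -> float:
    """Positive-prediction rate of the protected group minus that of all others.

    A value of 0 means both groups receive positive predictions at the same rate.
    """
    in_group = [p for p, g in zip(preds, groups) if g == protected]
    out_group = [p for p, g in zip(preds, groups) if g != protected]
    if not in_group or not out_group:
        raise ValueError("both groups must contain at least one sample")
    return sum(in_group) / len(in_group) - sum(out_group) / len(out_group)


# Hypothetical binary predictions for six patients, split by a made-up attribute.
toy_preds = [1, 0, 1, 1, 0, 0]
toy_groups = ["f", "f", "f", "m", "m", "m"]
spd = statistical_parity_difference(toy_preds, toy_groups, protected="f")
```

Comparing this value between a non-private and a DP-trained model is one way to check whether private training widens a gap between subgroups.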

Longitudinal Self-Supervision for COVID-19 Pathology Quantification

Mar 21, 2022

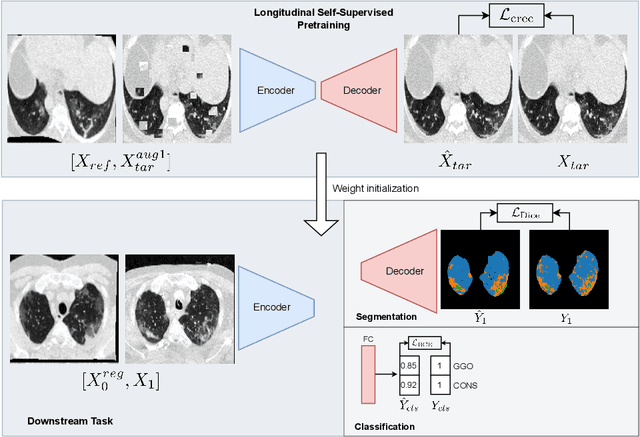

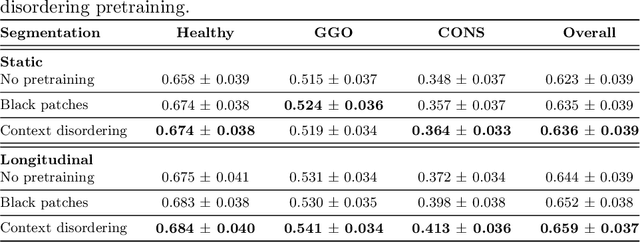

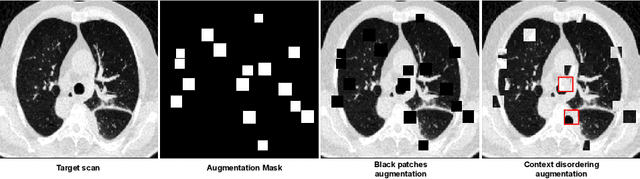

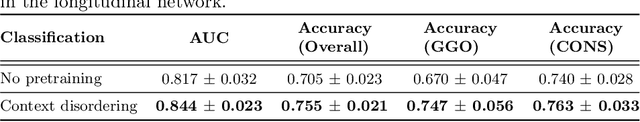

Abstract:Quantifying COVID-19 infection over time is an important task to manage the hospitalization of patients during a global pandemic. Recently, deep learning-based approaches have been proposed to help radiologists automatically quantify COVID-19 pathologies on longitudinal CT scans. However, the learning process of deep learning methods demands extensive training data to learn the complex characteristics of infected regions over longitudinal scans. It is challenging to collect a large-scale dataset, especially for longitudinal training. In this study, we address this problem by proposing a new self-supervised learning method to effectively train longitudinal networks for the quantification of COVID-19 infections. For this purpose, longitudinal self-supervision schemes are explored on clinical longitudinal COVID-19 CT scans. Experimental results show that the proposed method is effective, helping the model better exploit the semantics of longitudinal data and improve two COVID-19 quantification tasks.

Interactive Segmentation for COVID-19 Infection Quantification on Longitudinal CT scans

Oct 03, 2021

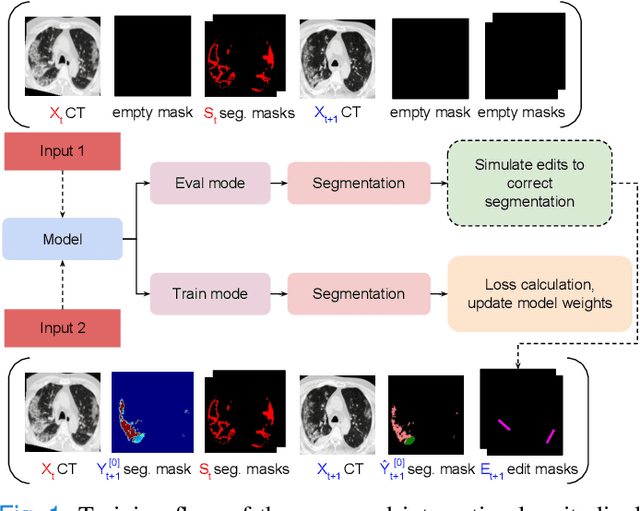

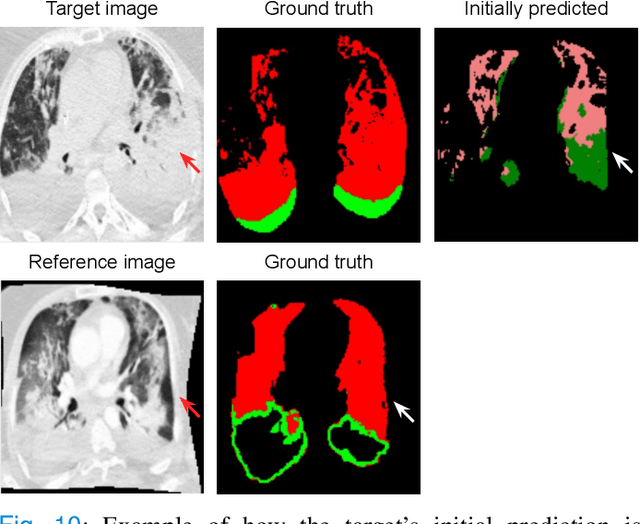

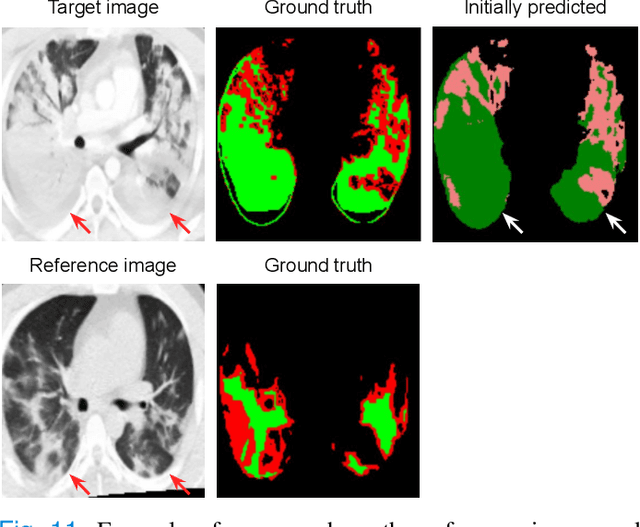

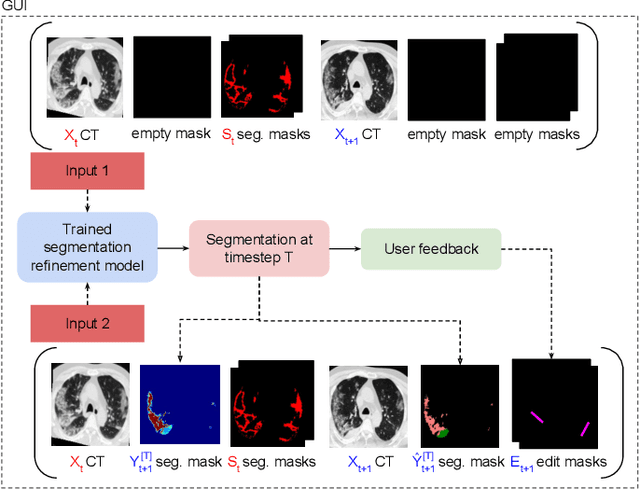

Abstract:Consistent segmentation of COVID-19 patients' CT scans across multiple time points is essential to assess disease progression and response to therapy accurately. Existing automatic and interactive segmentation models for medical images only use data from a single time point (static). However, valuable segmentation information from previous time points is often not used to aid the segmentation of a patient's follow-up scans. Also, fully automatic segmentation techniques frequently produce results that would need further editing for clinical use. In this work, we propose a new single network model for interactive segmentation that fully utilizes all available past information to refine the segmentation of follow-up scans. In the first segmentation round, our model takes 3D volumes of medical images from two time points (target and reference) as concatenated slices with the additional reference time point segmentation as a guide to segment the target scan. In subsequent segmentation refinement rounds, user feedback in the form of scribbles that correct the segmentation and the target's previous segmentation results are additionally fed into the model. This ensures that the segmentation information from previous refinement rounds is retained. Experimental results on our in-house multiclass longitudinal COVID-19 dataset show that the proposed model outperforms its static version and can assist in localizing COVID-19 infections in patients' follow-up scans.

Sensitivity analysis in differentially private machine learning using hybrid automatic differentiation

Jul 09, 2021

Abstract:In recent years, formal methods of privacy protection such as differential privacy (DP), capable of deployment to data-driven tasks such as machine learning (ML), have emerged. Reconciling large-scale ML with the closed-form reasoning required for the principled analysis of individual privacy loss requires the introduction of new tools for automatic sensitivity analysis and for tracking an individual's data and their features through the flow of computation. For this purpose, we introduce a novel hybrid automatic differentiation (AD) system which combines the efficiency of reverse-mode AD with an ability to obtain a closed-form expression for any given quantity in the computational graph. This enables modelling the sensitivity of arbitrary differentiable function compositions, such as the training of neural networks on private data. We demonstrate our approach by analysing the individual DP guarantees of statistical database queries. Moreover, we investigate the application of our technique to the training of DP neural networks. Our approach can enable the principled reasoning about privacy loss in the setting of data processing, and further the development of automatic sensitivity analysis and privacy budgeting systems.
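To make the role of sensitivity in a DP database query concrete, here is a small sketch of the textbook Laplace mechanism applied to a bounded mean. It is not the hybrid AD system described in the abstract above: the sensitivity is derived by hand rather than automatically, and the function names, the replace-one-record notion of neighbouring datasets, and the publicly known dataset size are all assumptions.

```python
import random
from typing import Sequence


def mean_query_sensitivity(n: int, lower: float, upper: float) -> float:
    """Worst-case change of a bounded mean when one of n records is replaced."""
    return (upper - lower) / n


def private_mean(
    values: Sequence[float],
    lower: float,
    upper: float,
    epsilon: float,
    rng: random.Random,
) -> float:
    """Release the mean of clipped values with Laplace noise scaled to sensitivity / epsilon."""
    clipped = [min(max(v, lower), upper) for v in values]
    n = len(clipped)
    scale = mean_query_sensitivity(n, lower, upper) / epsilon
    # The difference of two i.i.d. exponential draws is Laplace-distributed with this scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return sum(clipped) / n + noise


# Hypothetical query over made-up ages, bounded to [0, 100], with epsilon = 1.
rng = random.Random(0)
ages = [34.0, 51.0, 67.0, 45.0, 29.0]
released = private_mean(ages, lower=0.0, upper=100.0, epsilon=1.0, rng=rng)
```

The hand-derived bound in `mean_query_sensitivity` is the kind of quantity that an automatic sensitivity analysis aims to obtain for more complex function compositions.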

Differentially private federated deep learning for multi-site medical image segmentation

Jul 06, 2021

Abstract:Collaborative machine learning techniques such as federated learning (FL) enable the training of models on effectively larger datasets without data transfer. Recent initiatives have demonstrated that segmentation models trained with FL can achieve performance similar to locally trained models. However, FL is not a fully privacy-preserving technique and privacy-centred attacks can disclose confidential patient data. Thus, supplementing FL with privacy-enhancing technologies (PTs) such as differential privacy (DP) is a requirement for clinical applications in a multi-institutional setting. The application of PTs to FL in medical imaging and the trade-offs between privacy guarantees and model utility, the ramifications on training performance and the susceptibility of the final models to attacks have not yet been conclusively investigated. Here we demonstrate the first application of differentially private gradient descent-based FL on the task of semantic segmentation in computed tomography. We find that high segmentation performance is possible under strong privacy guarantees with an acceptable training time penalty. We furthermore demonstrate the first successful gradient-based model inversion attack on a semantic segmentation model and show that the application of DP prevents it from divulging sensitive image features.
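The core of differentially private gradient descent, as referenced in the abstract above, can be sketched as per-sample gradient clipping followed by Gaussian noise. This is a generic, framework-free illustration of that standard recipe and not the study's actual federated implementation; the function names and parameters (`max_norm`, `noise_multiplier`) are assumptions.

```python
import math
import random
from typing import List, Sequence


def clip_by_l2_norm(grad: Sequence[float], max_norm: float) -> List[float]:
    """Scale a gradient down so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0.0 else 1.0
    return [g * scale for g in grad]


def dp_average_gradient(
    per_sample_grads: Sequence[Sequence[float]],
    max_norm: float,
    noise_multiplier: float,
    rng: random.Random,
) -> List[float]:
    """Clip each per-sample gradient, sum, add Gaussian noise, then average."""
    clipped = [clip_by_l2_norm(g, max_norm) for g in per_sample_grads]
    dim = len(clipped[0])
    summed = [sum(g[d] for g in clipped) for d in range(dim)]
    # Noise standard deviation is proportional to the clipping bound.
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(clipped) for v in noisy]


# Hypothetical per-sample gradients for a two-parameter model.
rng = random.Random(42)
grads = [[3.0, 4.0], [0.6, 0.8]]
update = dp_average_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng)
```

Clipping bounds each patient's influence on the update, and the noise masks what remains; in a federated setting each site would apply a step like this before sharing its update.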

Privacy-preserving medical image analysis

Dec 10, 2020

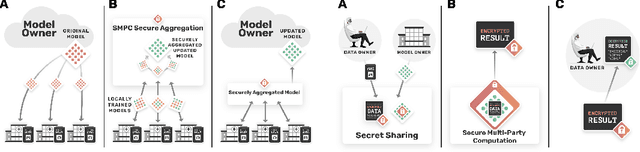

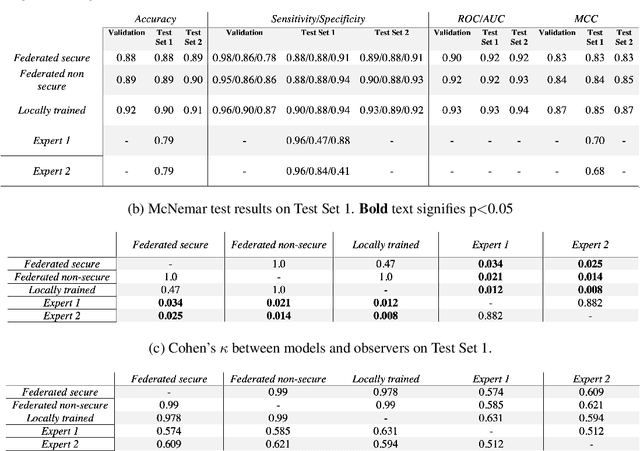

Abstract:The utilisation of artificial intelligence in medicine and healthcare has led to successful clinical applications in several domains. The conflict between data usage and privacy protection requirements in such systems must be resolved for optimal results as well as ethical and legal compliance. This calls for innovative solutions such as privacy-preserving machine learning (PPML). We present PriMIA (Privacy-preserving Medical Image Analysis), a software framework designed for PPML in medical imaging. In a real-life case study we demonstrate significantly better classification performance of a securely aggregated federated learning model compared to human experts on unseen datasets. Furthermore, we show an inference-as-a-service scenario for end-to-end encrypted diagnosis, where neither the data nor the model are revealed. Lastly, we empirically evaluate the framework's security against a gradient-based model inversion attack and demonstrate that no usable information can be recovered from the model.

Efficient, high-performance pancreatic segmentation using multi-scale feature extraction

Sep 02, 2020

Abstract:For artificial intelligence-based image analysis methods to reach clinical applicability, the development of high-performance algorithms is crucial. For example, existent segmentation algorithms based on natural images are neither efficient in their parameter use nor optimized for medical imaging. Here we present MoNet, a highly optimized neural-network-based pancreatic segmentation algorithm focused on achieving high performance by efficient multi-scale image feature utilization.
