Leili Goli

Interactive Segmentation for COVID-19 Infection Quantification on Longitudinal CT scans

Oct 03, 2021

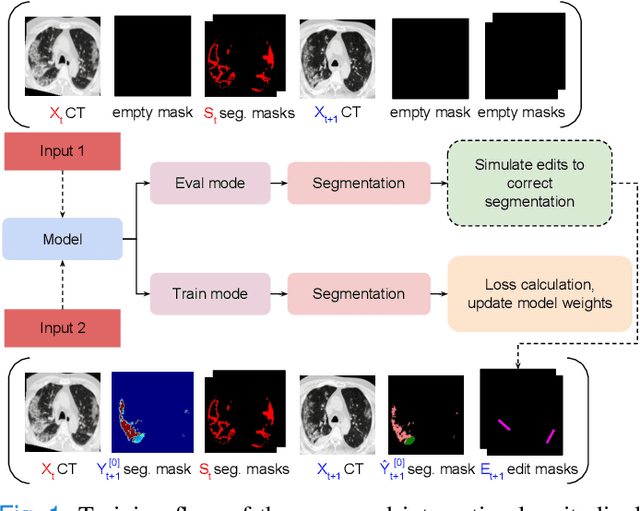

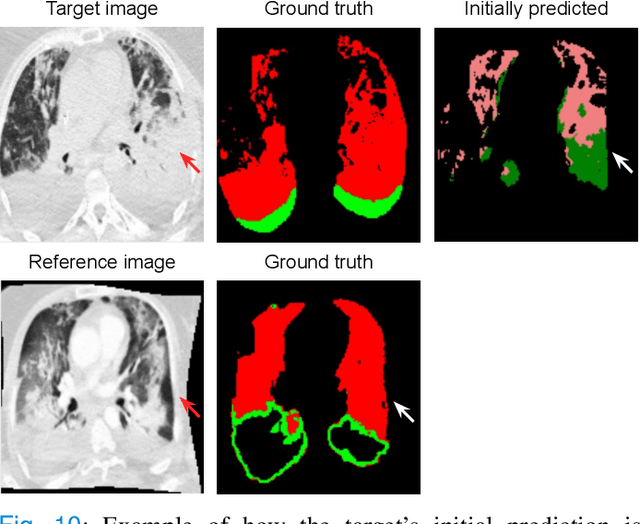

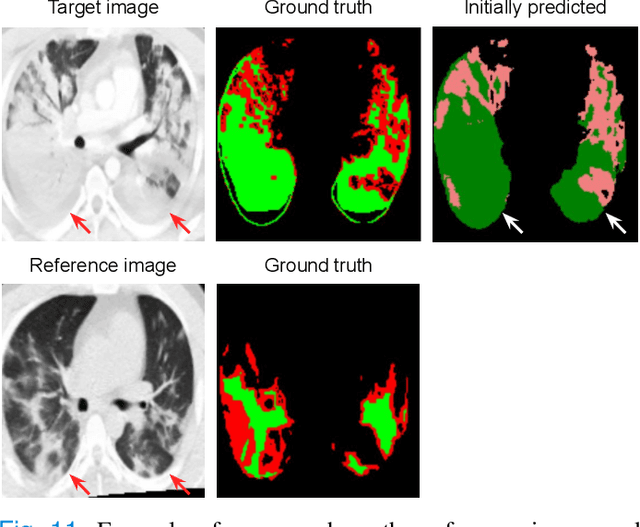

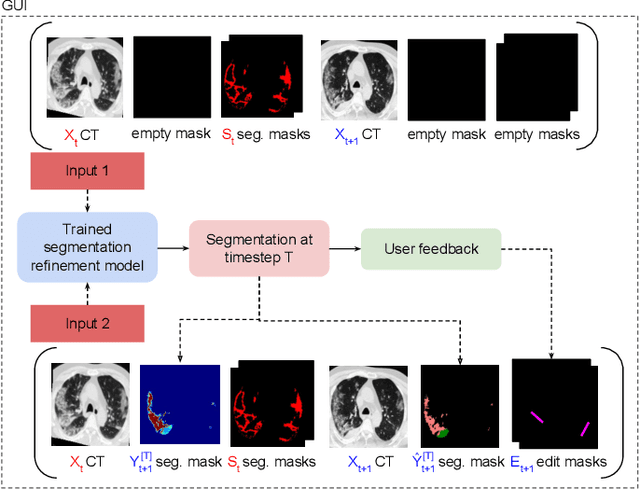

Abstract: Consistent segmentation of COVID-19 patients' CT scans across multiple time points is essential to accurately assess disease progression and response to therapy. Existing automatic and interactive segmentation models for medical images use data from only a single time point (static). As a result, valuable segmentation information from previous time points is often not used to aid the segmentation of a patient's follow-up scans. In addition, fully automatic segmentation techniques frequently produce results that need further editing before clinical use. In this work, we propose a new single-network model for interactive segmentation that fully utilizes all available past information to refine the segmentation of follow-up scans. In the first segmentation round, our model takes 3D volumes of medical images from two time points (target and reference) as concatenated slices, with the reference time point's segmentation as an additional guide for segmenting the target scan. In subsequent refinement rounds, user feedback in the form of scribbles that correct the segmentation, together with the target's previous segmentation results, is additionally fed into the model. This ensures that the segmentation information from previous refinement rounds is retained. Experimental results on our in-house multiclass longitudinal COVID-19 dataset show that the proposed model outperforms its static version and can assist in localizing COVID-19 infections in patients' follow-up scans.
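The input assembly described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `build_model_input`, the channel order, stacking along a leading channel axis, and the use of all-zero placeholders in the first round (so that a single network sees the same number of channels in every round) are all assumptions made for illustration.

```python
import numpy as np

def build_model_input(target, reference, reference_seg,
                      prev_target_seg=None, scribbles=None):
    """Stack the volumes into one array of shape (5, D, H, W).

    Hypothetical channel order (an assumption, not taken from the paper):
    0 target scan, 1 reference scan, 2 reference segmentation,
    3 previous target segmentation, 4 user scribbles.
    """
    # First round: no earlier prediction or user feedback exists yet.
    # Zero-filled placeholders are an assumption of this sketch.
    if prev_target_seg is None:
        prev_target_seg = np.zeros_like(target)
    if scribbles is None:
        scribbles = np.zeros_like(target)

    channels = [target, reference, reference_seg, prev_target_seg, scribbles]
    if any(c.shape != target.shape for c in channels):
        raise ValueError("all volumes must share the target's shape")

    # Concatenate along a new leading channel axis.
    return np.stack([c.astype(np.float32) for c in channels], axis=0)
```

In a refinement round, the caller would pass the model's previous output as `prev_target_seg` and the rasterized user corrections as `scribbles`, so that earlier results are carried forward rather than recomputed from scratch.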

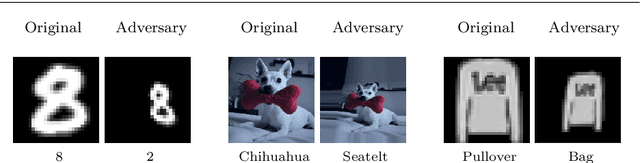

Generating Unrestricted Adversarial Examples via Three Parameters

Mar 13, 2021

Abstract: Deep neural networks have been shown to be vulnerable to adversarial examples deliberately constructed to make victim models misclassify. As most adversarial examples have restricted their perturbations to an $L_{p}$-norm, existing defense methods have focused on these types of perturbations, and less attention has been paid to unrestricted adversarial examples, which can create more realistic attacks that deceive models without affecting human predictions. To address this problem, the proposed adversarial attack generates an unrestricted adversarial example with a limited number of parameters. The attack selects three points on the input image and, based on their locations, transforms the image into an adversarial example. By limiting the range of movement and location of these three points and using a discriminatory network, the proposed unrestricted adversarial example preserves the image appearance. Experimental results show that the proposed adversarial examples obtain an average success rate of 93.5% in terms of human evaluation on the MNIST and SVHN datasets. The attack also reduces model accuracy by an average of 73% on six datasets: MNIST, FMNIST, SVHN, CIFAR10, CIFAR100, and ImageNet. Note that, for attacks, lower accuracy in the victim model denotes a more successful attack. Adversarial training with the attack also improves model robustness against randomly transformed images.
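One plausible reading of the three-point idea is that moving three non-collinear points determines a 2D affine transformation of the image. The abstract does not state which transformation family is used, so the sketch below is an assumption for illustration only; the names `affine_from_three_points` and `clip_displacement` are hypothetical, and the discriminatory network is not modeled.

```python
import numpy as np

def affine_from_three_points(src, dst):
    """Return the 2x3 matrix A with A @ [x, y, 1] = [x', y'] for each point pair.

    src, dst: three (x, y) points each. The source points must not be
    collinear, otherwise the linear system is singular.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    # Homogeneous source coordinates, shape (3, 3).
    src_h = np.hstack([src, np.ones((3, 1))])
    # Solve src_h @ A.T = dst for A.T (shape (3, 2)), then transpose.
    return np.linalg.solve(src_h, dst).T

def clip_displacement(src, dst, max_shift):
    """Limit how far each point may move along each axis (hypothetical constraint)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    return src + np.clip(dst - src, -max_shift, max_shift)
```

Bounding the displacement with something like `clip_displacement` mirrors the abstract's point that limiting the movement of the three points helps preserve the image's appearance.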

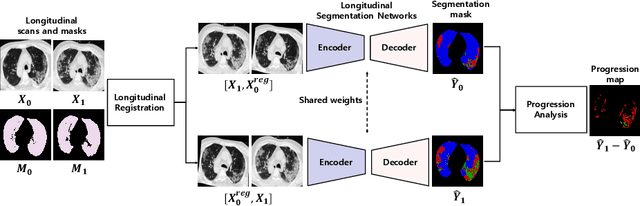

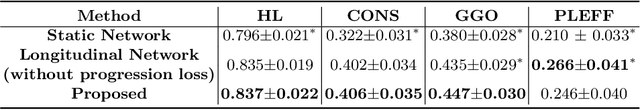

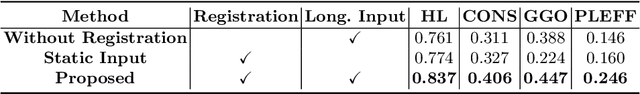

Longitudinal Quantitative Assessment of COVID-19 Infection Progression from Chest CTs

Mar 12, 2021

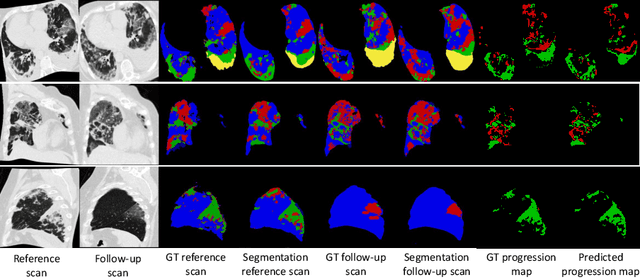

Abstract: Chest computed tomography (CT) has played an essential diagnostic role in assessing patients with COVID-19 by showing disease-specific image features such as ground-glass opacity and consolidation. Image segmentation methods have proven helpful for quantifying the disease burden and even for predicting the outcome. The availability of longitudinal CT series may also enable an efficient and effective way to reliably assess the progression of COVID-19 and to monitor the healing process and the response to different therapeutic strategies. In this paper, we propose a new framework to identify infection at the voxel level (identification of healthy lung, consolidation, and ground-glass opacity) and visualize the progression of COVID-19 using sequential low-dose non-contrast CT scans. In particular, we devise a longitudinal segmentation network that utilizes the reference scan information to improve the performance of disease identification. Experimental results on a clinical longitudinal dataset collected at our institution show the effectiveness of the proposed method compared to static deep neural networks for disease quantification.
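To make voxel-level quantification concrete, the sketch below computes the share of lung voxels in each class for two labeled scans and the change between them. The label values in `LABELS` and the function names are hypothetical, and the abstract does not specify this particular measure; it is only one simple way to summarize progression from two segmentations.

```python
import numpy as np

# Hypothetical label convention (0 is assumed to be background).
LABELS = {"healthy": 1, "ggo": 2, "consolidation": 3}

def lung_fractions(seg):
    """Fraction of lung voxels assigned to each class in a label volume."""
    seg = np.asarray(seg)
    lung_voxels = np.count_nonzero(np.isin(seg, list(LABELS.values())))
    if lung_voxels == 0:
        raise ValueError("segmentation contains no lung voxels")
    return {name: np.count_nonzero(seg == label) / lung_voxels
            for name, label in LABELS.items()}

def progression(reference_seg, target_seg):
    """Change in each class fraction from the reference scan to the target scan."""
    ref = lung_fractions(reference_seg)
    tgt = lung_fractions(target_seg)
    return {name: tgt[name] - ref[name] for name in LABELS}
```

A positive value for `"consolidation"` or `"ggo"` would indicate that the class occupies a larger share of the lung in the follow-up scan than in the reference scan.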
