Kaiwei Che

ETTFS: An Efficient Training Framework for Time-to-First-Spike Neuron

Oct 31, 2024

Abstract: Spiking Neural Networks (SNNs) have attracted considerable attention due to their biologically inspired, event-driven nature, making them highly suitable for neuromorphic hardware. Time-to-First-Spike (TTFS) coding, where neurons fire only once during inference, offers the benefits of reduced spike counts, enhanced energy efficiency, and faster processing. However, SNNs employing TTFS coding often suffer from diminished classification accuracy. This paper presents an efficient training framework for TTFS that not only improves accuracy but also accelerates the training process. Unlike most previous approaches, we first identify two key issues limiting the performance of TTFS neurons: information diminishing and imbalanced membrane potential distribution. To address these challenges, we propose a novel initialization strategy. Additionally, we introduce a temporal weighting decoding method that aggregates temporal outputs through a weighted sum, supporting BPTT. Moreover, we re-evaluate the pooling layer in TTFS neurons and find that average pooling is better suited than max-pooling for this coding scheme. Our experimental results show that the proposed training framework leads to more stable training and significant performance improvements, achieving state-of-the-art (SOTA) results on both the MNIST and Fashion-MNIST datasets.
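As a rough illustration of decoding by a weighted sum over time steps, the sketch below combines per-step output spikes into class scores. The function name `temporal_weighted_decode` and the default linearly decreasing weights are assumptions made for this example; the abstract does not specify how the weights are chosen.

```python
def temporal_weighted_decode(outputs, weights=None):
    """Combine per-time-step outputs into one score per class.

    outputs: list of T rows, each a list of per-class outputs (e.g. 0/1 spikes).
    weights: optional list of T weights. The default (an assumption for this
             sketch) decreases linearly so that earlier spikes count more.
    """
    num_steps = len(outputs)
    if weights is None:
        raw = [num_steps - t for t in range(num_steps)]
        total = sum(raw)
        weights = [r / total for r in raw]
    num_classes = len(outputs[0])
    return [
        sum(weights[t] * outputs[t][c] for t in range(num_steps))
        for c in range(num_classes)
    ]


# Each output neuron fires exactly once (TTFS): class 1 fires first.
spikes = [
    [0, 1, 0],  # t = 0
    [1, 0, 0],  # t = 1
    [0, 0, 1],  # t = 2
]
scores = temporal_weighted_decode(spikes)
predicted = max(range(len(scores)), key=scores.__getitem__)
```

Because the result is a plain weighted sum, it is differentiable with respect to the per-step outputs, which is what makes this kind of decoding compatible with BPTT.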

Spatial-Temporal Search for Spiking Neural Networks

Oct 24, 2024

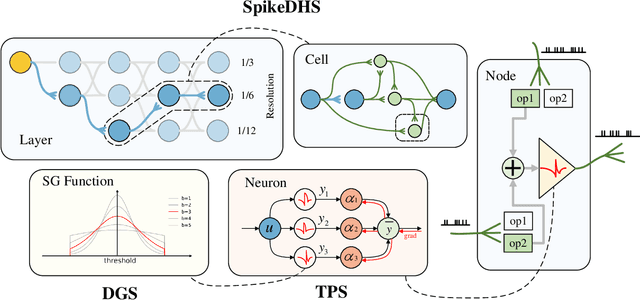

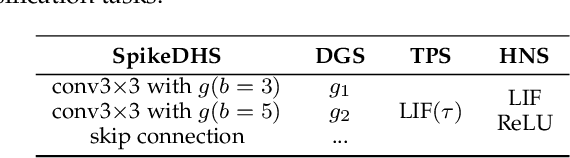

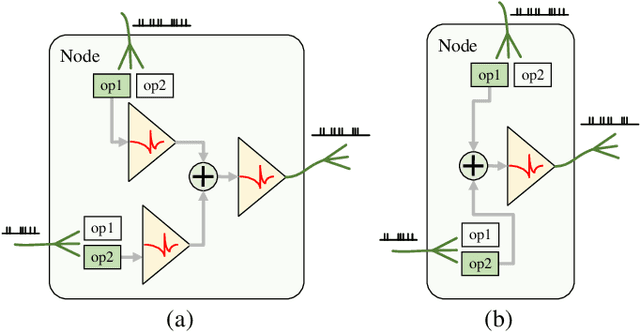

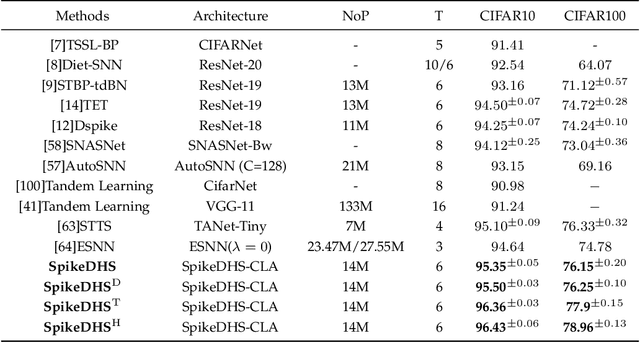

Abstract: Spiking Neural Networks (SNNs) are considered a potential candidate for the next generation of artificial intelligence, with appealing characteristics such as sparse computation and inherent temporal dynamics. By adopting architectures of Artificial Neural Networks (ANNs), SNNs achieve competitive performance on benchmark tasks like image classification. However, successful architectures of ANNs are not optimal for SNNs. In this work, we apply Neural Architecture Search (NAS) to find suitable architectures for SNNs. Previous NAS methods for SNNs focus primarily on the spatial dimension, with a notable lack of consideration for the temporal dynamics that are of critical importance for SNNs. Drawing inspiration from the heterogeneity of biological neural networks, we propose a differentiable approach to optimize SNNs on both spatial and temporal dimensions. At the spatial level, we have developed a spike-based differentiable hierarchical search (SpikeDHS) framework, where spike-based operation is optimized on both the cell and the layer level under computational constraints. We further propose a differentiable surrogate gradient search (DGS) method to evolve local SG functions independently during training. At the temporal level, we explore an optimal configuration of diverse temporal dynamics on different types of spiking neurons by evolving their time constants, based on which we further develop hybrid networks combining SNN and ANN, balancing both accuracy and efficiency. Our methods achieve comparable classification performance on CIFAR10/100 and ImageNet with accuracies of 96.43%, 78.96%, and 70.21%, respectively. On event-based deep stereo, our methods find optimal layer variation and surpass the accuracy of specially designed ANNs with 26$\times$ lower computational cost ($6.7\mathrm{mJ}$), demonstrating the potential of SNN in processing highly sparse and dynamic signals.
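Differentiable architecture search is commonly implemented by relaxing the discrete choice among candidate operations into a softmax-weighted mixture. The sketch below shows that general idea only; it assumes a DARTS-style relaxation, and the names `mixed_operation` and `alphas` are hypothetical rather than taken from SpikeDHS.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def mixed_operation(x, operations, alphas):
    """Blend candidate operations with softmax weights over architecture parameters.

    During search, `alphas` would be learned by gradient descent; afterwards the
    operation with the largest alpha is typically kept (an assumption here).
    """
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, operations))


# Three toy candidate operations standing in for real layers.
candidates = [lambda x: x, lambda x: 2.0 * x, lambda x: 0.0]
blended = mixed_operation(3.0, candidates, alphas=[0.0, 0.0, 0.0])
```

With equal architecture parameters every candidate contributes equally, so the blended output is the mean of the three candidate outputs.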

Spikformer V2: Join the High Accuracy Club on ImageNet with an SNN Ticket

Jan 04, 2024

Abstract: Spiking Neural Networks (SNNs), known for their biologically plausible architecture, face the challenge of limited performance. The self-attention mechanism, which is the cornerstone of the high-performance Transformer and also a biologically inspired structure, is absent in existing SNNs. To this end, we explore the potential of leveraging both self-attention capability and biological properties of SNNs, and propose a novel Spiking Self-Attention (SSA) and Spiking Transformer (Spikformer). The SSA mechanism eliminates the need for softmax and captures sparse visual features using spike-based Query, Key, and Value. This sparse computation without multiplication makes SSA efficient and energy-saving. Further, we develop a Spiking Convolutional Stem (SCS) with supplementary convolutional layers to enhance the architecture of Spikformer. The Spikformer enhanced with the SCS is referred to as Spikformer V2. To train larger and deeper Spikformer V2, we introduce a pioneering exploration of Self-Supervised Learning (SSL) within the SNN. Specifically, we pre-train Spikformer V2 with a masking and reconstruction style inspired by the mainstream self-supervised Transformer, and then fine-tune Spikformer V2 for image classification on ImageNet. Extensive experiments show that Spikformer V2 outperforms previous surrogate training and ANN2SNN methods. An 8-layer Spikformer V2 achieves an accuracy of 80.38% using 4 time steps, and after SSL, a 172M 16-layer Spikformer V2 reaches an accuracy of 81.10% with just 1 time step. To the best of our knowledge, this is the first time that an SNN achieves 80+% accuracy on ImageNet. The code will be available at Spikformer V2.
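A minimal sketch of softmax-free attention over binary spike matrices may help make the SSA idea concrete. It assumes the attention output is the product of Query, transposed Key, and Value multiplied by a fixed scale; the `scale` value is an assumption for this example, and the spiking neuron layer that would follow in a real network is omitted.

```python
def transpose(matrix):
    return [list(column) for column in zip(*matrix)]


def matmul(a, b):
    return [
        [sum(x * y for x, y in zip(row, column)) for column in zip(*b)]
        for row in a
    ]


def spiking_self_attention(q, k, v, scale=0.25):
    """Softmax-free attention on binary (0/1) spike matrices of shape (tokens, dim).

    With binary inputs, every product inside `matmul` is 0 or 1, so the
    matrix products reduce to counting co-active entries (additions only).
    """
    attention = matmul(q, transpose(k))  # non-negative integer counts
    output = matmul(attention, v)
    return [[scale * value for value in row] for row in output]


q = [[1, 0], [1, 1]]
k = [[1, 0], [0, 1]]
v = [[1, 0], [1, 1]]
attended = spiking_self_attention(q, k, v)
```

Since the attention map is non-negative by construction, no softmax is needed to keep the weights non-negative; the scale factor only controls the magnitude.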

Neural Network-Based Histologic Remission Prediction In Ulcerative Colitis

Aug 28, 2023

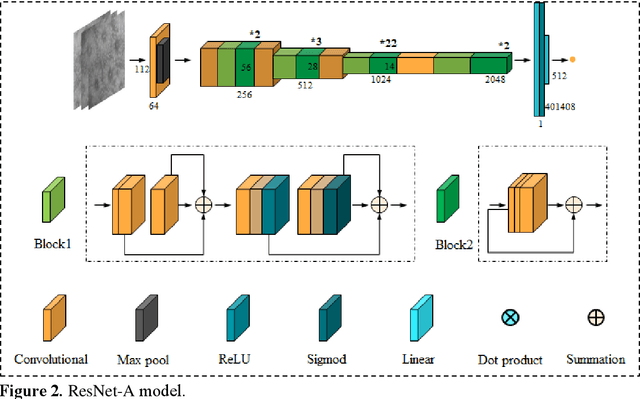

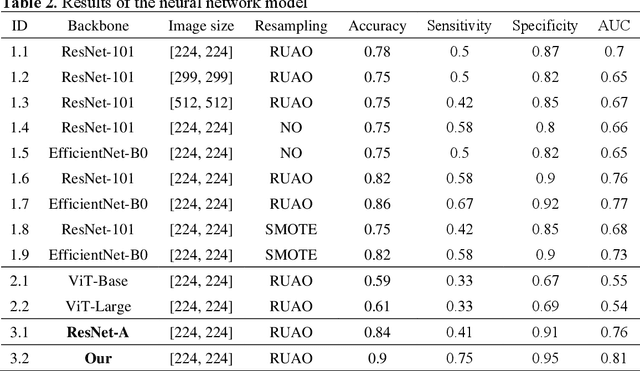

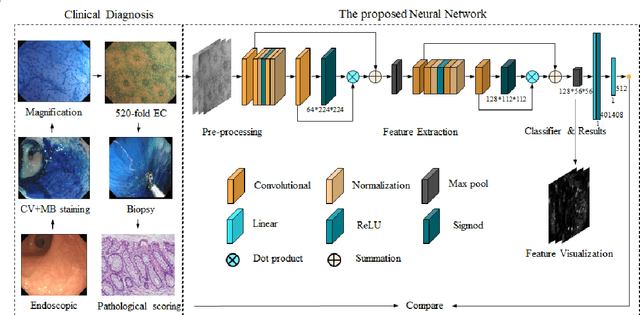

Abstract: BACKGROUND & AIMS: Histological remission (HR) is advocated and considered as a new therapeutic target in ulcerative colitis (UC). Diagnosis of histologic remission currently relies on biopsy; during this process, patients are at risk for bleeding, infection, and post-biopsy fibrosis. In addition, histologic response scoring is complex and time-consuming, and there is heterogeneity among pathologists. Endocytoscopy (EC) is a novel ultra-high magnification endoscopic technique that can provide excellent in vivo assessment of glands. Based on the EC technique, we propose a neural network model that can assess histological disease activity in UC using EC images to address the above issues. The experimental results demonstrate that the proposed method can assist patients in precise treatment and prognostic assessment.

METHODS: We construct a neural network model for UC evaluation. A total of 5105 images of 154 intestinal segments from 87 patients undergoing EC treatment at a center in China between March 2022 and March 2023 are scored according to the Geboes score. Subsequently, 103 intestinal segments are used as the training set, 16 intestinal segments are used as the validation set for neural network training, and the remaining 35 intestinal segments are used as the test set to measure the model performance together with the validation set.

RESULTS: By treating HR as a negative category and histologic activity as a positive category, the proposed neural network model can achieve an accuracy of 0.9, a specificity of 0.95, a sensitivity of 0.75, and an area under the curve (AUC) of 0.81.

CONCLUSION: We develop a specific neural network model that can distinguish histologic remission/activity in EC images of UC, which helps to accelerate clinical histological diagnosis.

Keywords: ulcerative colitis; endocytoscopy; Geboes score; neural network.
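For readers less familiar with the reported metrics, the sketch below computes accuracy, sensitivity, and specificity from a binary confusion matrix, with histologic activity as the positive class and HR as the negative class. The counts are made-up illustrative values, not data from the study.

```python
def binary_metrics(tp, fp, tn, fn):
    """Return accuracy, sensitivity, and specificity from confusion-matrix counts.

    tp/fn: active segments predicted as active / as remission.
    tn/fp: remission segments predicted as remission / as active.
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),  # recall on the positive (active) class
        "specificity": tn / (tn + fp),  # recall on the negative (HR) class
    }


# Hypothetical counts chosen only to illustrate the formulas.
metrics = binary_metrics(tp=30, fp=10, tn=50, fn=10)

# The segment split described in METHODS adds up to the full cohort.
split_total = 103 + 16 + 35
```

Note that accuracy depends on the class balance, which is why a model can combine high accuracy and specificity with a noticeably lower sensitivity when negative cases dominate.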

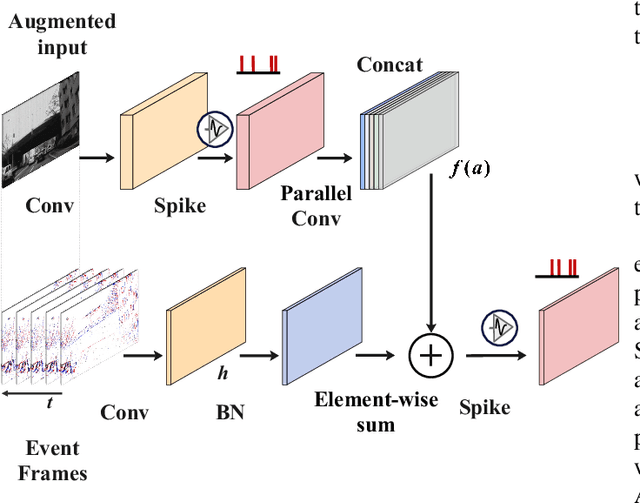

Automotive Object Detection via Learning Sparse Events by Temporal Dynamics of Spiking Neurons

Jul 24, 2023

Abstract: Event-based sensors, with their high temporal resolution (1 μs) and dynamic range (120 dB), have the potential to be deployed in high-speed platforms such as vehicles and drones. However, the highly sparse and fluctuating nature of events poses challenges for conventional object detection techniques based on Artificial Neural Networks (ANNs). In contrast, Spiking Neural Networks (SNNs) are well-suited for representing event-based data due to their inherent temporal dynamics. In particular, we demonstrate that the membrane potential dynamics can modulate network activity upon fluctuating events and strengthen features of sparse input. In addition, the spike-triggered adaptive threshold can stabilize training, which further improves network performance. Based on this, we develop an efficient spiking feature pyramid network for event-based object detection. Our proposed SNN outperforms previous SNNs and sophisticated ANNs with attention mechanisms, achieving a mean average precision (mAP50) of 47.7% on the Gen1 benchmark dataset. This result significantly surpasses the previous best SNN by 9.7% and demonstrates the potential of SNNs for event-based vision. Our model has a concise architecture while maintaining high accuracy and much lower computation cost as a result of sparse computation. Our code will be publicly available.
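The sketch below simulates a leaky integrate-and-fire neuron whose threshold rises after each spike and then relaxes back, which is one common way to realize a spike-triggered adaptive threshold. All parameter names and values (`tau_mem`, `threshold_jump`, `tau_threshold`) are assumptions for this example and are not taken from the paper.

```python
def simulate_adaptive_lif(inputs, tau_mem=2.0, base_threshold=1.0,
                          threshold_jump=0.5, tau_threshold=4.0):
    """Simulate one LIF neuron with a spike-triggered adaptive threshold.

    Returns the spike train and the threshold used at every time step.
    """
    potential = 0.0
    adaptation = 0.0
    spikes, thresholds = [], []
    for current in inputs:
        # Leaky integration: the potential relaxes toward the input current.
        potential += (current - potential) / tau_mem
        threshold = base_threshold + adaptation
        fired = potential >= threshold
        spikes.append(1 if fired else 0)
        thresholds.append(threshold)
        if fired:
            potential = 0.0               # hard reset after a spike
            adaptation += threshold_jump  # each spike raises the threshold
        adaptation -= adaptation / tau_threshold  # threshold decays to baseline
    return spikes, thresholds


constant_input = [3.0] * 10
adaptive_spikes, adaptive_thresholds = simulate_adaptive_lif(constant_input)
fixed_spikes, _ = simulate_adaptive_lif(constant_input, threshold_jump=0.0)
```

Under the same constant input, the adaptive neuron fires less often than the fixed-threshold one, which illustrates how the mechanism damps activity when the input is strong.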

Auto-Spikformer: Spikformer Architecture Search

Jun 01, 2023

Abstract: The integration of self-attention mechanisms into Spiking Neural Networks (SNNs) has garnered considerable interest in the realm of advanced deep learning, primarily due to their biological properties. Recent advancements in SNN architecture, such as Spikformer, have demonstrated promising outcomes by leveraging Spiking Self-Attention (SSA) and Spiking Patch Splitting (SPS) modules. However, we observe that Spikformer may exhibit excessive energy consumption, potentially attributable to redundant channels and blocks. To mitigate this issue, we propose Auto-Spikformer, a one-shot Transformer Architecture Search (TAS) method, which automates the quest for an optimized Spikformer architecture. To facilitate the search process, we propose Evolutionary SNN neurons (ESNN), a method that optimizes the SNN parameters, and apply the existing weight entanglement supernet training method, which optimizes the Vision Transformer (ViT) parameters. Moreover, we propose an accuracy and energy balanced fitness function $\mathcal{F}_{AEB}$ that jointly considers both energy consumption and accuracy, and aims to find a Pareto optimal combination that balances these two objectives. Our experimental results demonstrate the effectiveness of Auto-Spikformer, which outperforms state-of-the-art methods, including manually and automatically designed CNN and ViT models, while significantly reducing energy consumption.
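The abstract does not give the form of $\mathcal{F}_{AEB}$, so the sketch below illustrates only the underlying notion of Pareto optimality for two objectives: higher accuracy and lower energy. The candidate names and numbers are hypothetical.

```python
def pareto_front(candidates):
    """Return names of candidates not dominated by any other candidate.

    candidates: list of (name, accuracy, energy) tuples.
    One candidate dominates another if it is at least as good on both
    objectives (accuracy up, energy down) and strictly better on one.
    """
    front = []
    for name, accuracy, energy in candidates:
        dominated = any(
            other_acc >= accuracy and other_energy <= energy
            and (other_acc > accuracy or other_energy < energy)
            for _, other_acc, other_energy in candidates
        )
        if not dominated:
            front.append(name)
    return front


# Hypothetical architectures: (name, accuracy, energy in arbitrary units).
architectures = [
    ("A", 0.90, 10.0),
    ("B", 0.92, 12.0),
    ("C", 0.89, 11.0),  # worse than A on both objectives
    ("D", 0.92, 15.0),  # same accuracy as B but more energy
]
best = pareto_front(architectures)
```

A scalar fitness function then serves to pick a single architecture from such a front according to how the two objectives are traded off.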

Accurate and Efficient Event-based Semantic Segmentation Using Adaptive Spiking Encoder-Decoder Network

Apr 24, 2023

Abstract: Low-power event-driven computation and inherent temporal dynamics render spiking neural networks (SNNs) ideal candidates for processing highly dynamic and asynchronous signals from event-based sensors. However, due to the challenges in training and architectural design constraints, there is a scarcity of competitive demonstrations of SNNs in event-based dense prediction compared to artificial neural networks (ANNs). In this work, we construct an efficient spiking encoder-decoder network for large-scale event-based semantic segmentation tasks, optimizing the encoder with hierarchical search. To improve learning from highly dynamic event streams, we exploit the intrinsic adaptive threshold of spiking neurons to modulate network activation. Additionally, we develop a dual-path spiking spatially-adaptive modulation (SSAM) block to enhance the representation of sparse events, significantly improving network performance. Our network achieves 72.57% mean intersection over union (MIoU) on the DDD17 dataset and 57.22% MIoU on the newly proposed larger DSEC-Semantic dataset, surpassing current record ANNs by 4% at a much lower computational cost. To the best of our knowledge, this is the first instance of SNNs outperforming ANNs in challenging event-based semantic segmentation tasks, demonstrating their immense potential in event-based vision. Our code will be publicly available.
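Mean intersection over union, the metric quoted above, averages the per-class IoU between predicted and ground-truth labels. The sketch below computes it on flattened label lists; skipping classes absent from both prediction and target is a common convention and is an assumption here, as is the toy data.

```python
def mean_iou(predicted, target, num_classes):
    """Mean intersection over union across classes for flat label lists."""
    ious = []
    for cls in range(num_classes):
        intersection = sum(
            1 for p, t in zip(predicted, target) if p == cls and t == cls
        )
        union = sum(
            1 for p, t in zip(predicted, target) if p == cls or t == cls
        )
        if union > 0:  # skip classes that appear in neither list
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy example with four pixels and two classes.
predicted_labels = [0, 0, 1, 1]
target_labels = [0, 1, 1, 1]
score = mean_iou(predicted_labels, target_labels, num_classes=2)
```

In this toy case class 0 has an IoU of 1/2 and class 1 an IoU of 2/3, and the function returns their mean.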

Deep Learning-based Biological Anatomical Landmark Detection in Colonoscopy Videos

Aug 06, 2021

Abstract: Colonoscopy is a standard imaging tool for visualizing the entire gastrointestinal (GI) tract of patients to capture lesion areas. However, reviewing the large number of images extracted from colonoscopy videos takes clinicians excessive time. Thus, automatic detection of biological anatomical landmarks within the colon is in high demand, as it can help reduce the burden on clinicians by providing guidance information for the locations of lesion areas. In this article, we propose a novel deep learning-based approach to detect biological anatomical landmarks in colonoscopy videos. First, raw colonoscopy video sequences are pre-processed to reject interference frames. Second, a ResNet-101 based network is used to detect three biological anatomical landmarks separately to obtain the intermediate detection results. Third, to achieve more reliable localization of the landmark periods within the whole video period, we propose to post-process the intermediate detection results by identifying the incorrectly predicted frames based on their temporal distribution and reassigning them back to the correct class. Finally, the average detection accuracy reaches 99.75%. Meanwhile, the average IoU of 0.91 shows a high degree of similarity between our predicted landmark periods and ground truth. The experimental results demonstrate that our proposed model is capable of accurately detecting and localizing biological anatomical landmarks from colonoscopy videos.
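The post-processing step reassigns isolated, temporally inconsistent frame predictions. The abstract does not describe the exact rule, so the sketch below substitutes a simple sliding-window majority vote to show the general idea; the function name `smooth_frame_labels` and the window size are assumptions.

```python
from collections import Counter


def smooth_frame_labels(labels, window=3):
    """Replace each frame label with the majority label in a centered window.

    This is a stand-in for temporal post-processing, not the paper's method.
    Ties are resolved in favor of the label seen first inside the window.
    """
    half = window // 2
    smoothed = []
    for index in range(len(labels)):
        neighborhood = labels[max(0, index - half): index + half + 1]
        smoothed.append(Counter(neighborhood).most_common(1)[0][0])
    return smoothed


# Per-frame predictions with one isolated error at index 2.
frame_predictions = [0, 0, 1, 0, 0, 1, 1, 1]
cleaned = smooth_frame_labels(frame_predictions)
```

The isolated frame is pulled back to its neighbors' class while the genuine transition between the two landmark periods is preserved.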
