Jiangxing Liao

Automotive Object Detection via Learning Sparse Events by Temporal Dynamics of Spiking Neurons

Jul 24, 2023

Abstract: Event-based sensors, with their high temporal resolution (1 µs) and dynamic range (120 dB), have the potential to be deployed in high-speed platforms such as vehicles and drones. However, the highly sparse and fluctuating nature of events poses challenges for conventional object detection techniques based on Artificial Neural Networks (ANNs). In contrast, Spiking Neural Networks (SNNs) are well-suited for representing event-based data due to their inherent temporal dynamics. In particular, we demonstrate that the membrane potential dynamics can modulate network activity upon fluctuating events and strengthen features of sparse input. In addition, the spike-triggered adaptive threshold can stabilize training, which further improves network performance. Based on this, we develop an efficient spiking feature pyramid network for event-based object detection. Our proposed SNN outperforms previous SNNs and sophisticated ANNs with attention mechanisms, achieving a mean average precision (mAP50) of 47.7% on the Gen1 benchmark dataset. This result significantly surpasses the previous best SNN by 9.7% and demonstrates the potential of SNNs for event-based vision. Our model has a concise architecture while maintaining high accuracy and much lower computation cost as a result of sparse computation. Our code will be publicly available.
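To make the idea of a spike-triggered adaptive threshold concrete, here is a minimal sketch of a single leaky integrate-and-fire neuron whose firing threshold rises after each spike and then decays back. This is a generic textbook-style formulation, not the authors' model: the function name `simulate_alif` and the parameters `tau_mem`, `tau_thr`, `v_th0`, and `thr_jump` are assumptions chosen for illustration.

```python
def simulate_alif(inputs, tau_mem=10.0, tau_thr=50.0, v_th0=1.0, thr_jump=0.5):
    """Simulate one adaptive leaky integrate-and-fire neuron (illustrative only).

    inputs   : sequence of input currents, one per discrete time step
    tau_mem  : membrane time constant in steps (hypothetical value)
    tau_thr  : decay time constant of the adaptive threshold (hypothetical value)
    v_th0    : baseline firing threshold
    thr_jump : amount added to the threshold after each spike
    """
    v = 0.0          # membrane potential
    thr_adapt = 0.0  # adaptive component of the threshold
    spikes, thresholds = [], []
    for x in inputs:
        # Leaky integration: the potential decays toward 0 and accumulates input.
        v = v * (1.0 - 1.0 / tau_mem) + x
        # The adaptive threshold component decays back toward 0.
        thr_adapt *= 1.0 - 1.0 / tau_thr
        threshold = v_th0 + thr_adapt
        thresholds.append(threshold)
        if v >= threshold:
            spikes.append(1)
            v = 0.0                # hard reset after a spike
            thr_adapt += thr_jump  # spike-triggered threshold increase
        else:
            spikes.append(0)
    return spikes, thresholds


if __name__ == "__main__":
    steady_input = [0.6] * 20
    adaptive, _ = simulate_alif(steady_input)
    fixed, _ = simulate_alif(steady_input, thr_jump=0.0)
    print("adaptive threshold spikes:", sum(adaptive))
    print("fixed threshold spikes:   ", sum(fixed))
```

Under a constant input, the adaptive neuron fires less often than the fixed-threshold one because each spike raises the bar for the next. That self-limiting behavior is one plausible reading of how an adaptive threshold could keep activity bounded when event rates fluctuate.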

Accurate and Efficient Event-based Semantic Segmentation Using Adaptive Spiking Encoder-Decoder Network

Apr 24, 2023

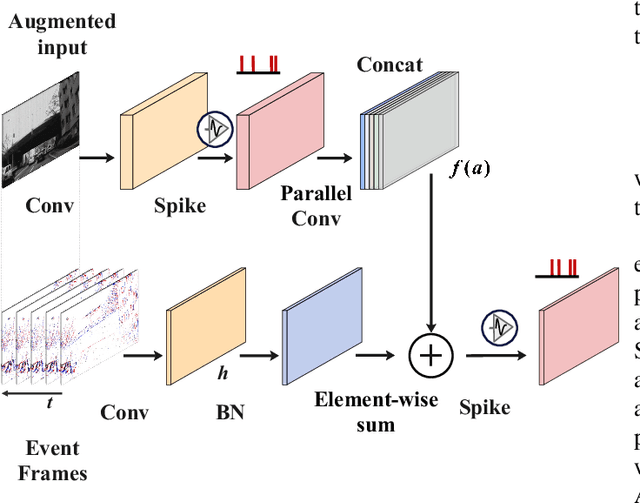

Abstract: Low-power event-driven computation and inherent temporal dynamics render spiking neural networks (SNNs) ideal candidates for processing highly dynamic and asynchronous signals from event-based sensors. However, due to the challenges in training and architectural design constraints, there is a scarcity of competitive demonstrations of SNNs in event-based dense prediction compared to artificial neural networks (ANNs). In this work, we construct an efficient spiking encoder-decoder network for large-scale event-based semantic segmentation tasks, optimizing the encoder with hierarchical search. To improve learning from highly dynamic event streams, we exploit the intrinsic adaptive threshold of spiking neurons to modulate network activation. Additionally, we develop a dual-path spiking spatially-adaptive modulation (SSAM) block to enhance the representation of sparse events, significantly improving network performance. Our network achieves 72.57% mean intersection over union (MIoU) on the DDD17 dataset and 57.22% MIoU on the newly proposed larger DSEC-Semantic dataset, surpassing current record ANNs by 4% while utilizing much lower computation costs. To the best of our knowledge, this is the first instance of SNNs outperforming ANNs in challenging event-based semantic segmentation tasks, demonstrating their immense potential in event-based vision. Our code will be publicly available.
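For readers unfamiliar with the MIoU metric quoted above, the following is a minimal sketch of how mean intersection over union is commonly computed from flattened per-pixel label lists. The function name `mean_iou` and the choice to skip classes absent from both prediction and ground truth are assumptions for illustration; the exact evaluation protocol used for DDD17 and DSEC-Semantic (for example, handling of ignored labels) may differ.

```python
def mean_iou(pred, target, num_classes):
    """Mean intersection over union across classes (illustrative sketch).

    pred, target : equal-length sequences of integer class labels, one per pixel
    num_classes  : number of semantic classes
    """
    ious = []
    for c in range(num_classes):
        # Pixels where both prediction and ground truth are class c.
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        # Pixels where either prediction or ground truth is class c.
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # assumption: classes absent from both are skipped
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


if __name__ == "__main__":
    prediction = [0, 0, 1, 1]
    ground_truth = [0, 1, 1, 1]
    print(f"MIoU: {mean_iou(prediction, ground_truth, num_classes=2):.4f}")
```

In this toy case class 0 has IoU 1/2 and class 1 has IoU 2/3, so the mean is 7/12.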
