Junyu Li

NTIRE 2025 Image Shadow Removal Challenge Report

Jun 18, 2025

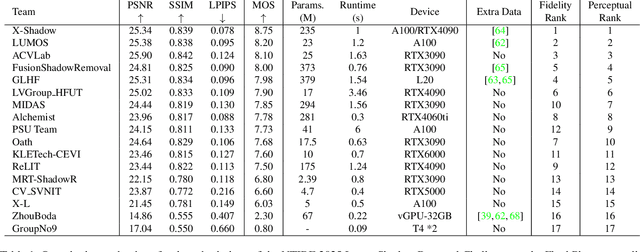

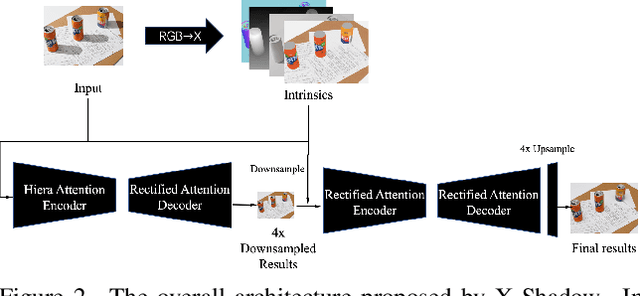

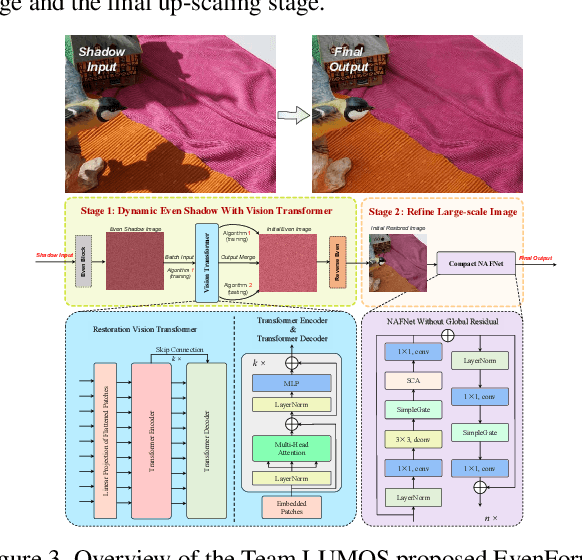

Abstract: This work examines the findings of the NTIRE 2025 Shadow Removal Challenge. A total of 306 participants registered, with 17 teams successfully submitting their solutions during the final evaluation phase. Following the last two editions, this challenge had two evaluation tracks: one focusing on reconstruction fidelity and the other on visual perception through a user study. Both tracks were evaluated with images from the WSRD+ dataset, which simulates interactions between self- and cast-shadows with a large number of diverse objects, textures, and materials.

M4: Multi-Proxy Multi-Gate Mixture of Experts Network for Multiple Instance Learning in Histopathology Image Analysis

Jul 24, 2024

Abstract: Multiple instance learning (MIL) has been successfully applied to whole slide image (WSI) analysis in computational pathology, enabling a wide range of prediction tasks from tumor subtyping to inferring genetic mutations and multi-omics biomarkers. However, existing MIL methods predominantly focus on single-task learning, which results in low overall efficiency and neglects inter-task relatedness. To address these issues, we propose an adapted architecture of Multi-gate Mixture-of-experts with Multi-proxy for Multiple instance learning (M4), and apply this framework to the simultaneous prediction of multiple genetic mutations from WSIs. The proposed M4 model has two main innovations: (1) utilizing a mixture of experts with multiple gating strategies for multi-genetic mutation prediction on a single pathological slide; (2) constructing a multi-proxy expert network and gate network for comprehensive and effective modeling of pathological image information. Our model achieved significant improvements across five tested TCGA datasets in comparison to current state-of-the-art single-task methods. The code is available at: https://github.com/Bigyehahaha/M4.
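To make the multi-gate idea concrete, here is a minimal pure-Python sketch of a generic multi-gate mixture-of-experts forward pass: a set of shared experts, plus one softmax gate per task that mixes the expert outputs. It is not the M4 architecture (the multi-proxy networks and the specific gating strategies are not reproduced here), and the names `mmoe_forward`, `expert_weights`, and `gate_weights`, along with the use of plain linear experts, are assumptions made for illustration only.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(weights, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def mmoe_forward(x, expert_weights, gate_weights):
    """Generic multi-gate mixture of experts (illustrative, linear experts).

    x              -- input feature vector
    expert_weights -- one weight matrix per shared expert
    gate_weights   -- one (num_experts x dim) matrix per task
    Returns one mixed feature vector per task.
    """
    # Every expert sees the same input and is shared across tasks.
    expert_outputs = [matvec(w, x) for w in expert_weights]
    out_dim = len(expert_outputs[0])

    task_outputs = []
    for gate in gate_weights:
        # Each task has its own gate, so it weights the experts differently.
        mix = softmax(matvec(gate, x))
        combined = [
            sum(m * out[d] for m, out in zip(mix, expert_outputs))
            for d in range(out_dim)
        ]
        task_outputs.append(combined)
    return task_outputs


# Two experts, two tasks, on a toy 2-dimensional input.
x = [1.0, 2.0]
experts = [[[1.0, 0.0], [0.0, 1.0]], [[2.0, 0.0], [0.0, 2.0]]]
gates = [[[0.0, 0.0], [0.0, 0.0]], [[10.0, 0.0], [0.0, 0.0]]]
outputs = mmoe_forward(x, experts, gates)
```

In this toy setup the first task's gate is all zeros, so it averages the two experts, while the second task's gate strongly favors the first expert. The shared experts are what allow related tasks to reuse features, and the per-task gates are what let them diverge.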

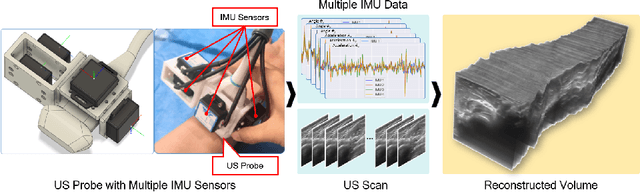

Multi-IMU with Online Self-Consistency for Freehand 3D Ultrasound Reconstruction

Jul 19, 2023

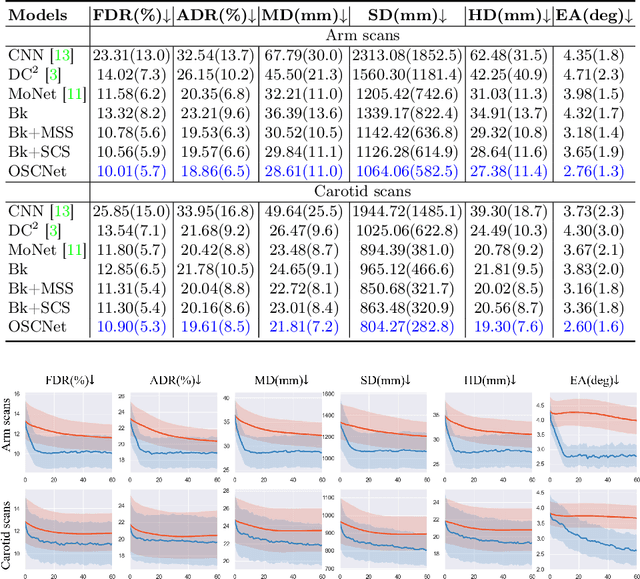

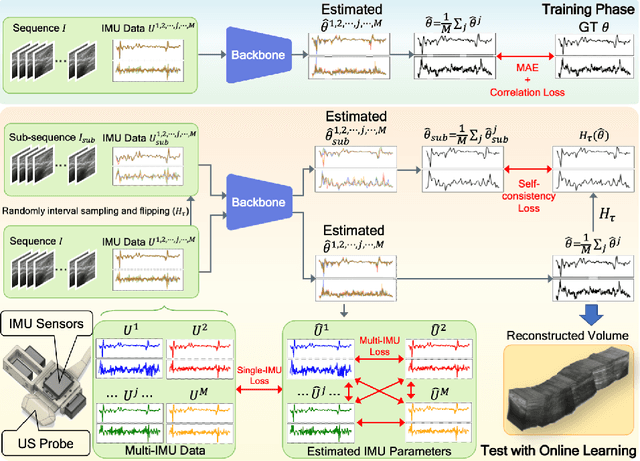

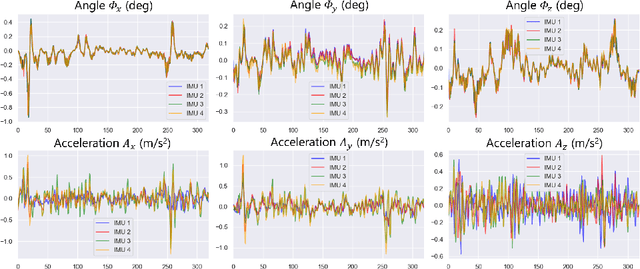

Abstract: Ultrasound (US) imaging is a popular tool in clinical diagnosis, offering safety, repeatability, and real-time capabilities. Freehand 3D US is a technique that provides a deeper understanding of scanned regions without increasing complexity. However, estimating elevation displacement and accumulation error remains challenging, making it difficult to infer the relative position using images alone. The addition of external lightweight sensors has been proposed to enhance reconstruction performance without adding complexity, and has been shown to be beneficial. We propose a novel online self-consistency network (OSCNet) using multiple inertial measurement units (IMUs) to improve reconstruction performance. OSCNet utilizes a modal-level self-supervised strategy to fuse information from multiple IMUs and reduce differences between the reconstruction results obtained from each IMU's data. Additionally, a sequence-level self-consistency strategy is proposed to improve the hierarchical consistency of prediction results among the scanning sequence and its sub-sequences. Experiments on large-scale arm and carotid datasets with multiple scanning tactics demonstrate that our OSCNet outperforms previous methods, achieving state-of-the-art reconstruction performance.

MUVF-YOLOX: A Multi-modal Ultrasound Video Fusion Network for Renal Tumor Diagnosis

Jul 15, 2023

Abstract: Early diagnosis of renal cancer can greatly improve the survival rate of patients. Contrast-enhanced ultrasound (CEUS) is a cost-effective and non-invasive imaging technique that is increasingly used for renal tumor diagnosis. However, the classification of benign and malignant renal tumors can still be very challenging due to the highly heterogeneous appearance of cancer and imaging artifacts. Our aim is to detect and classify renal tumors by integrating B-mode and CEUS-mode ultrasound videos. To this end, we propose a novel multi-modal ultrasound video fusion network that can effectively perform multi-modal feature fusion and video classification for renal tumor diagnosis. The attention-based multi-modal fusion module uses cross-attention and self-attention to extract modality-invariant features and modality-specific features in parallel. In addition, we design an object-level temporal aggregation (OTA) module that can automatically filter low-quality features and efficiently integrate temporal information from multiple frames to improve the accuracy of tumor diagnosis. Experimental results on a multicenter dataset show that the proposed framework outperforms the single-modal models and the competing methods. Furthermore, our OTA module achieves higher classification accuracy than the frame-level predictions. Our code is available at https://github.com/JeunyuLi/MUAF.

Uniform tensor clustering by jointly exploring sample affinities of various orders

Feb 03, 2023

Abstract: Conventional clustering methods based on pairwise affinity usually suffer from the concentration effect when processing high-dimensional data with small sample sizes, which makes the encoded sample proximity inaccurate and the clustering performance suboptimal. To address this issue, we propose a unified tensor clustering method (UTC) that characterizes sample proximity using the affinity among multiple samples, thereby supplementing rich spatial sample distributions to boost clustering. Specifically, we find that the triadic tensor affinity can be constructed via the Khatri-Rao product of two affinity matrices. Furthermore, our early work shows that the fourth-order tensor affinity is defined by the Kronecker product. Therefore, we utilize arithmetical products, namely the Khatri-Rao and Kronecker products, to mathematically integrate different orders of affinity into a unified tensor clustering framework. The UTC thus learns a joint low-dimensional embedding that combines the various orders. Finally, a numerical scheme is designed to solve the problem. Experiments on synthetic datasets and real-world datasets demonstrate that 1) the usage of high-order tensor affinity could provide a supplementary characterization of sample proximity to the popular affinity matrix; 2) the proposed UTC method enhances clustering by exploiting affinities of different orders when processing high-dimensional data.
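For readers unfamiliar with the two products named in this abstract, the following pure-Python sketch computes the Kronecker product and the Khatri-Rao (column-wise Kronecker) product of small matrices stored as nested lists. It illustrates only the standard definitions of the products. How UTC turns them into third- and fourth-order affinities is not reproduced here, and the function names and toy matrices are assumptions chosen for the example.

```python
def kronecker(a, b):
    """Kronecker product of a (m x n) and b (p x q): an (m*p) x (n*q) matrix.

    Entry at row (i, k), column (j, l) is a[i][j] * b[k][l].
    """
    return [
        [a_ij * b_kl for a_ij in row_a for b_kl in row_b]
        for row_a in a
        for row_b in b
    ]


def khatri_rao(a, b):
    """Khatri-Rao product of a (m x n) and b (p x n): an (m*p) x n matrix.

    Column k of the result is the Kronecker product of column k of a
    with column k of b, so both inputs need the same number of columns.
    """
    if len(a[0]) != len(b[0]):
        raise ValueError("a and b must have the same number of columns")
    n = len(a[0])
    return [
        [row_a[k] * row_b[k] for k in range(n)]
        for row_a in a
        for row_b in b
    ]


# Toy 2 x 2 matrices (illustrative values, not real affinities).
A = [[1, 2], [3, 4]]
B = [[0, 5], [6, 7]]

kron = kronecker(A, B)   # 4 x 4
kr = khatri_rao(A, B)    # 4 x 2
```

The Khatri-Rao product keeps only the "matched" columns of the Kronecker product: column k of `kr` equals column k * n + k of `kron`, where n is the shared number of columns.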
