Jing Lu

Kuaishou

Decoding Speech Envelopes from Electroencephalogram with a Contrastive Pearson Correlation Coefficient Loss

Jan 29, 2026

Abstract:Recent advances in reconstructing speech envelopes from Electroencephalogram (EEG) signals have enabled continuous auditory attention decoding (AAD) in multi-speaker environments. Most Deep Neural Network (DNN)-based envelope reconstruction models are trained to maximize the Pearson correlation coefficient (PCC) between the attended envelope and the reconstructed envelope (attended PCC). While the difference between the attended PCC and the unattended PCC plays an essential role in auditory attention decoding, existing methods often focus only on maximizing the attended PCC. We therefore propose a contrastive PCC loss which represents the difference between the attended PCC and the unattended PCC. The proposed approach is evaluated on three public EEG AAD datasets using four DNN architectures. Across many settings, the proposed objective improves envelope separability and AAD accuracy, while also revealing dataset- and architecture-dependent failure cases.
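The contrastive objective described in this abstract can be illustrated with a minimal sketch. The abstract only states that the loss represents the difference between the attended and unattended PCC; the sign convention, the equal weighting of the two terms, and the names `pearson`, `contrastive_pcc_loss`, and `decode_attention` are assumptions made here for illustration, not the authors' implementation.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    denom = math.sqrt(var_x * var_y)
    # A constant sequence has no defined correlation; return 0 in that case.
    return cov / denom if denom > 0 else 0.0


def contrastive_pcc_loss(reconstructed, attended, unattended):
    """Negative (attended PCC - unattended PCC); assumed form of the loss.

    Minimizing this value widens the gap between the two correlations
    instead of only raising the attended one.
    """
    return -(pearson(reconstructed, attended) - pearson(reconstructed, unattended))


def decode_attention(reconstructed, envelope_a, envelope_b):
    """Pick the speaker whose envelope correlates more with the reconstruction."""
    pcc_a = pearson(reconstructed, envelope_a)
    pcc_b = pearson(reconstructed, envelope_b)
    return "a" if pcc_a >= pcc_b else "b"


# Toy envelopes: the reconstruction follows speaker "a" and opposes speaker "b".
reconstructed = [1.0, 2.0, 3.0, 4.0]
env_a = [2.0, 4.0, 6.0, 8.0]
env_b = [4.0, 3.0, 2.0, 1.0]
loss = contrastive_pcc_loss(reconstructed, env_a, env_b)
decision = decode_attention(reconstructed, env_a, env_b)
```

In a training setup the same quantity would be computed on tensors by a differentiable framework; the plain-Python version above only shows the arithmetic.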

VoCodec: An Efficient Lightweight Low-Bitrate Speech Codec

Jan 19, 2026

Abstract:Recent advancements in end-to-end neural speech codecs enable compressing audio at extremely low bitrates while maintaining high-fidelity reconstruction. Meanwhile, low computational complexity and low latency are crucial for real-time communication. In this paper, we propose VoCodec, a speech codec model featuring a computational complexity of only 349.29M multiply-accumulate operations per second (MACs/s) and a latency of 30 ms. With the competitive vocoder Vocos as its backbone, the proposed model ranked fourth on Track 1 in the 2025 LRAC Challenge and achieved the highest subjective evaluation score (MUSHRA) on the clean speech test set. Additionally, we cascade a lightweight neural network at the front end to extend its capability of speech enhancement. Experimental results demonstrate that the two systems achieve competitive performance across multiple evaluation metrics. Speech samples can be found at https://acceleration123.github.io/.

SPECTRE: Spectral Pre-training Embeddings with Cylindrical Temporal Rotary Position Encoding for Fine-Grained sEMG-Based Movement Decoding

Dec 27, 2025

Abstract:Decoding fine-grained movement from non-invasive surface Electromyography (sEMG) is a challenge for prosthetic control due to signal non-stationarity and low signal-to-noise ratios. Generic self-supervised learning (SSL) frameworks often yield suboptimal results on sEMG as they attempt to reconstruct noisy raw signals and lack the inductive bias to model the cylindrical topology of electrode arrays. To overcome these limitations, we introduce SPECTRE, a domain-specific SSL framework. SPECTRE features two primary contributions: a physiologically-grounded pre-training task and a novel positional encoding. The pre-training involves masked prediction of discrete pseudo-labels from clustered Short-Time Fourier Transform (STFT) representations, compelling the model to learn robust, physiologically relevant frequency patterns. Additionally, our Cylindrical Rotary Position Embedding (CyRoPE) factorizes embeddings along linear temporal and annular spatial dimensions, explicitly modeling the forearm sensor topology to capture muscle synergies. Evaluations on multiple datasets, including challenging data from individuals with amputation, demonstrate that SPECTRE establishes a new state-of-the-art for movement decoding, significantly outperforming both supervised baselines and generic SSL approaches. Ablation studies validate the critical roles of both spectral pre-training and CyRoPE. SPECTRE provides a robust foundation for practical myoelectric interfaces capable of handling real-world sEMG complexities.

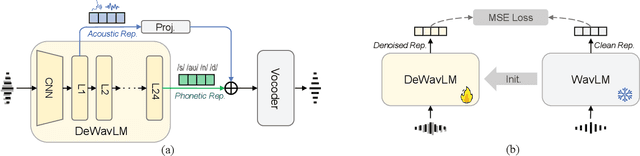

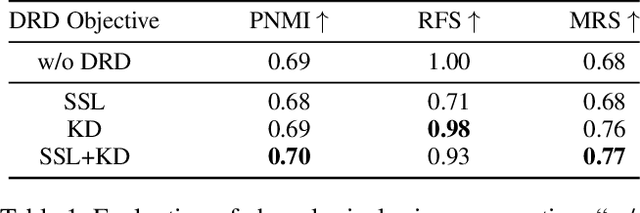

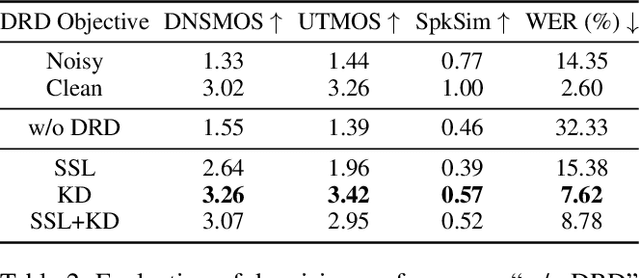

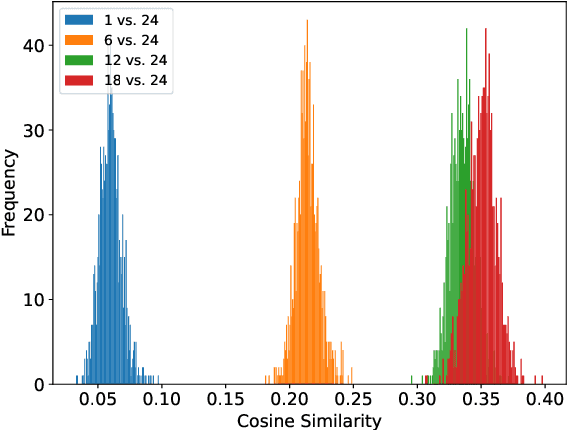

PASE: Leveraging the Phonological Prior of WavLM for Low-Hallucination Generative Speech Enhancement

Nov 17, 2025

Abstract:Generative models have shown remarkable performance in speech enhancement (SE), achieving superior perceptual quality over traditional discriminative approaches. However, existing generative SE approaches often overlook the risk of hallucination under severe noise, leading to incorrect spoken content or inconsistent speaker characteristics, which we term linguistic and acoustic hallucinations, respectively. We argue that linguistic hallucination stems from models' failure to constrain valid phonological structures and it is a more fundamental challenge. While language models (LMs) are well-suited for capturing the underlying speech structure through modeling the distribution of discrete tokens, existing approaches are limited in learning from noise-corrupted representations, which can lead to contaminated priors and hallucinations. To overcome these limitations, we propose the Phonologically Anchored Speech Enhancer (PASE), a generative SE framework that leverages the robust phonological prior embedded in the pre-trained WavLM model to mitigate hallucinations. First, we adapt WavLM into a denoising expert via representation distillation to clean its final-layer features. Guided by the model's intrinsic phonological prior, this process enables robust denoising while minimizing linguistic hallucinations. To further reduce acoustic hallucinations, we train the vocoder with a dual-stream representation: the high-level phonetic representation provides clean linguistic content, while a low-level acoustic representation retains speaker identity and prosody. Experimental results demonstrate that PASE not only surpasses state-of-the-art discriminative models in perceptual quality, but also significantly outperforms prior generative models with substantially lower linguistic and acoustic hallucinations.

Auditory Attention Decoding from Ear-EEG Signals: A Dataset with Dynamic Attention Switching and Rigorous Cross-Validation

Oct 22, 2025

Abstract:Recent promising results in auditory attention decoding (AAD) using scalp electroencephalography (EEG) have motivated the exploration of cEEGrid, a flexible and portable ear-EEG system. While prior cEEGrid-based studies have confirmed the feasibility of AAD, they often neglect the dynamic nature of attentional states in real-world contexts. To address this gap, a novel cEEGrid dataset featuring three concurrent speakers distributed across three of five distinct spatial locations is introduced. The novel dataset is designed to probe attentional tracking and switching in realistic scenarios. Nested leave-one-out validation, an approach more rigorous than conventional single-loop leave-one-out validation, is employed to reduce biases stemming from EEG's intricate temporal dynamics. Four rule-based models are evaluated: Wiener filter (WF), canonical correlation analysis (CCA), common spatial pattern (CSP) and Riemannian Geometry-based classifier (RGC). With a 30-second decision window, WF and CCA models achieve decoding accuracies of 41.5% and 41.4%, respectively, while CSP and RGC models yield 37.8% and 37.6% accuracies using a 10-second window. Notably, both WF and CCA successfully track attentional state switches across all experimental tasks. Additionally, higher decoding accuracies are observed for electrodes positioned at the upper cEEGrid layout and near the listener's right ear. These findings underscore the utility of dynamic, ecologically valid paradigms and rigorous validation in advancing AAD research with cEEGrid.
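The difference between single-loop and nested leave-one-out validation can be sketched as follows. The abstract does not say which unit is held out (trial, session, or subject), so the trial identifiers and the function name `nested_leave_one_out` are hypothetical and used only to show the split structure.

```python
def nested_leave_one_out(unit_ids):
    """Yield (test_unit, validation_unit, training_units) triples.

    Outer loop: hold out one unit for final testing.
    Inner loop: from the remaining units, hold out one more for
    validation (e.g. hyperparameter selection), so the test unit
    never influences model selection.
    """
    for test_unit in unit_ids:
        outer_train = [u for u in unit_ids if u != test_unit]
        for val_unit in outer_train:
            inner_train = [u for u in outer_train if u != val_unit]
            yield test_unit, val_unit, inner_train


# Hypothetical identifiers for four recording units.
splits = list(nested_leave_one_out(["t1", "t2", "t3", "t4"]))
```

With N units this produces N × (N − 1) inner splits, compared with N splits for single-loop leave-one-out, which is the price paid for keeping the test unit out of model selection.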

GLM-4.5: Agentic, Reasoning, and Coding (ARC) Foundation Models

Aug 08, 2025

Abstract:We present GLM-4.5, an open-source Mixture-of-Experts (MoE) large language model with 355B total parameters and 32B activated parameters, featuring a hybrid reasoning method that supports both thinking and direct response modes. Through multi-stage training on 23T tokens and comprehensive post-training with expert model iteration and reinforcement learning, GLM-4.5 achieves strong performance across agentic, reasoning, and coding (ARC) tasks, scoring 70.1% on TAU-Bench, 91.0% on AIME 24, and 64.2% on SWE-bench Verified. With far fewer parameters than several competitors, GLM-4.5 ranks 3rd overall among all evaluated models and 2nd on agentic benchmarks. We release both GLM-4.5 (355B parameters) and a compact version, GLM-4.5-Air (106B parameters), to advance research in reasoning and agentic AI systems. Code, models, and more information are available at https://github.com/zai-org/GLM-4.5.

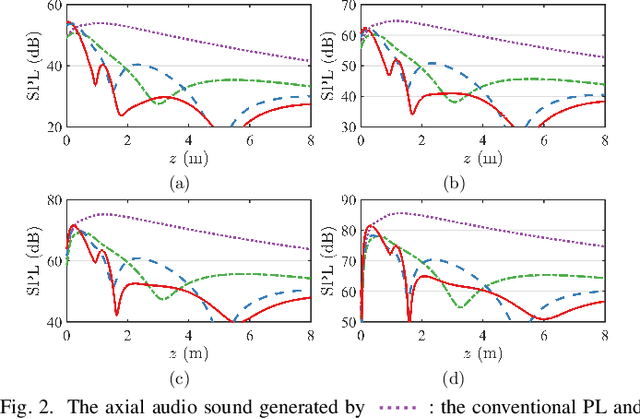

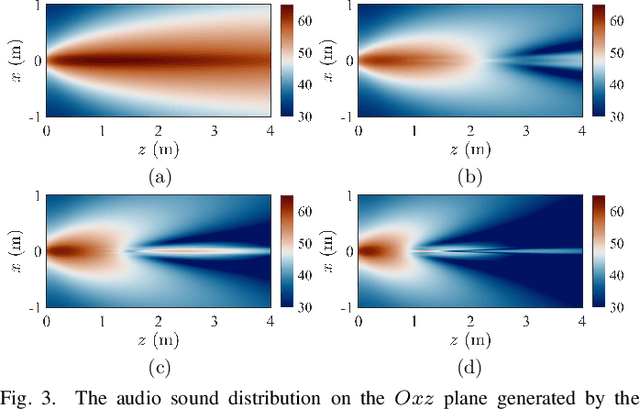

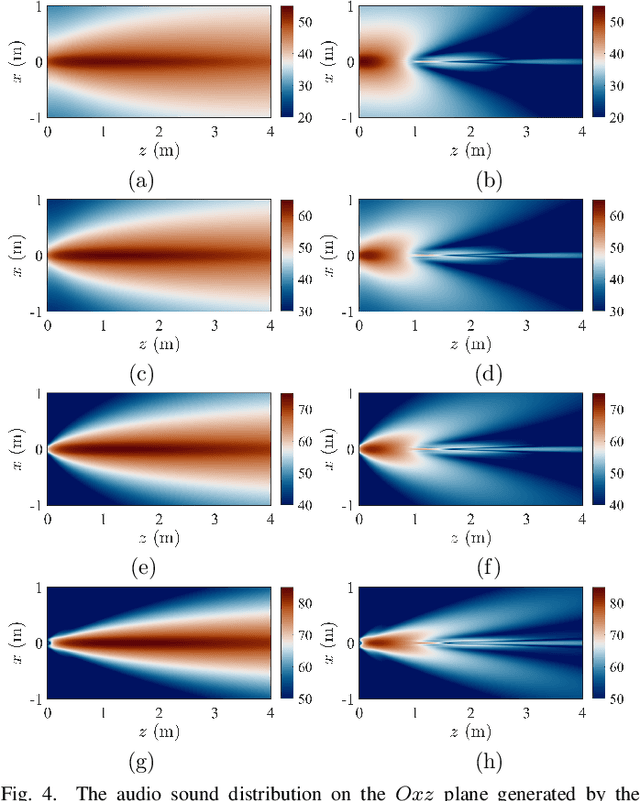

A k-space approach to modeling multi-channel parametric array loudspeaker systems

Jul 30, 2025

Abstract:Multi-channel parametric array loudspeaker (MCPAL) systems offer enhanced flexibility and promise for generating highly directional audio beams in real-world applications. However, efficient and accurate prediction of their generated sound fields remains a major challenge due to the complex nonlinear behavior and multi-channel signal processing involved. To overcome this obstacle, we propose a k-space approach for modeling arbitrary MCPAL systems arranged on a baffled planar surface. In our method, the linear ultrasound field is first solved using the angular spectrum approach, and the quasilinear audio sound field is subsequently computed efficiently in k-space. By leveraging three-dimensional fast Fourier transforms, our approach not only achieves high computational and memory efficiency but also maintains accuracy without relying on the paraxial approximation. For typical configurations studied, the proposed method demonstrates a speed-up of more than four orders of magnitude compared to the direct integration method. Our proposed approach paves the way for simulating and designing advanced MCPAL systems.

A Lightweight Hybrid Dual Channel Speech Enhancement System under Low-SNR Conditions

May 26, 2025

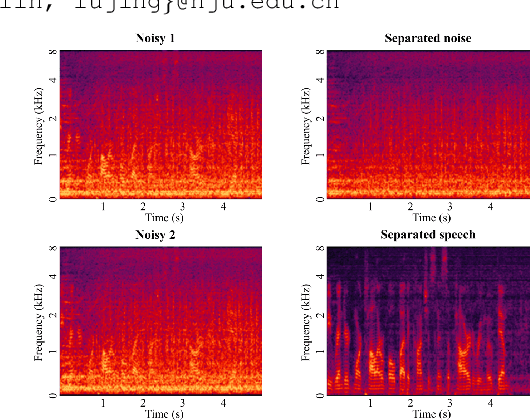

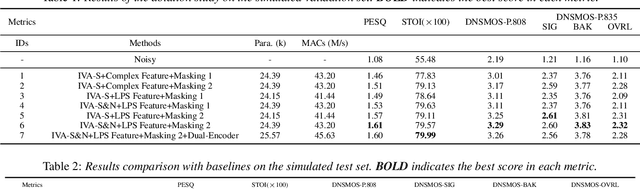

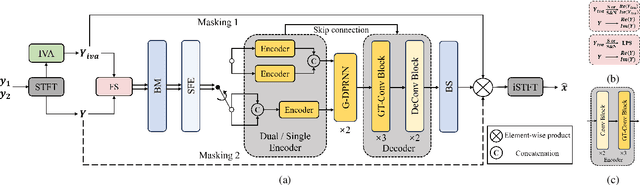

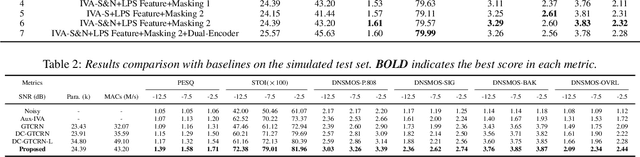

Abstract:Although deep learning based multi-channel speech enhancement has achieved significant advancements, its practical deployment is often limited by constrained computational resources, particularly in low signal-to-noise ratio (SNR) conditions. In this paper, we propose a lightweight hybrid dual-channel speech enhancement system that combines independent vector analysis (IVA) with a modified version of the dual-channel grouped temporal convolutional recurrent network (GTCRN). IVA functions as a coarse estimator, providing auxiliary information for both speech and noise, while the modified GTCRN further refines the speech quality. We investigate several modifications to ensure the comprehensive utilization of both original and auxiliary information. Experimental results demonstrate the effectiveness of the proposed system, achieving enhanced speech with minimal parameters and low computational complexity.

TS-URGENet: A Three-stage Universal Robust and Generalizable Speech Enhancement Network

May 24, 2025

Abstract:Universal speech enhancement aims to handle input speech with different distortions and input formats. To tackle this challenge, we present TS-URGENet, a Three-Stage Universal, Robust, and Generalizable speech Enhancement Network. To address various distortions, the proposed system employs a novel three-stage architecture consisting of a filling stage, a separation stage, and a restoration stage. The filling stage mitigates packet loss by preliminarily filling lost regions under noise interference, ensuring signal continuity. The separation stage suppresses noise, reverberation, and clipping distortion to improve speech clarity. Finally, the restoration stage compensates for bandwidth limitation, codec artifacts, and residual packet loss distortion, refining the overall speech quality. Our proposed TS-URGENet achieved outstanding performance in the Interspeech 2025 URGENT Challenge, ranking 2nd in Track 1.

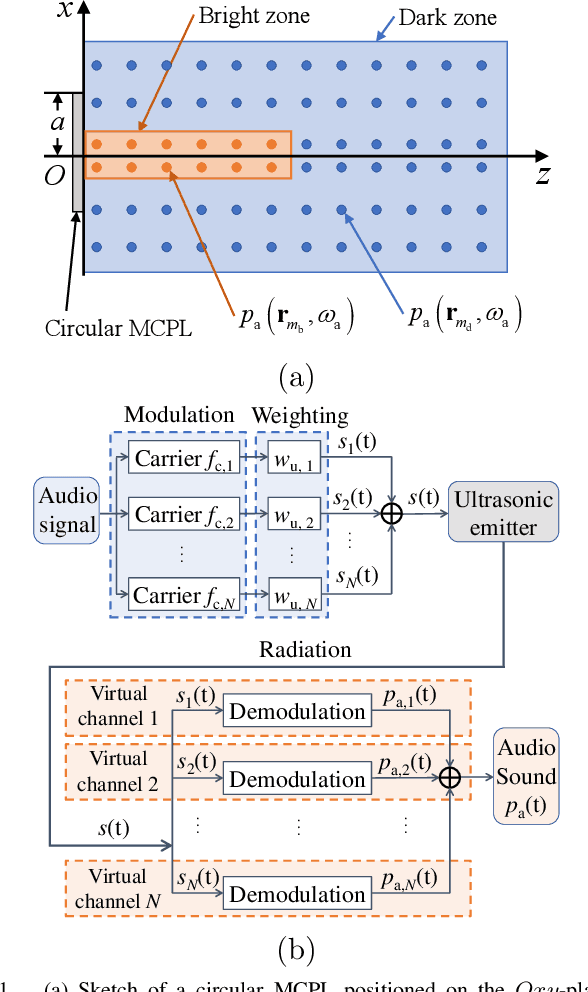

Generating Localized Audible Zones Using a Single-Channel Parametric Loudspeaker

Apr 24, 2025

Abstract:Advanced sound zone control (SZC) techniques typically rely on massive multi-channel loudspeaker arrays to create high-contrast personal sound zones, making single-loudspeaker SZC seem impossible. In this Letter, we challenge this paradigm by introducing the multi-carrier parametric loudspeaker (MCPL), which enables SZC using only a single loudspeaker. In our approach, distinct audio signals are modulated onto separate ultrasonic carrier waves at different frequencies and combined into a single composite signal. This signal is emitted by a single-channel ultrasonic transducer, and through nonlinear demodulation in air, the audio signals interact to virtually form multi-channel outputs. This novel capability allows the application of existing SZC algorithms originally designed for multi-channel loudspeaker arrays. Simulations validate the effectiveness of our proposed single-channel MCPL, demonstrating its potential as a promising alternative to traditional multi-loudspeaker systems for achieving high-contrast SZC. Our work opens new avenues for simplifying SZC systems without compromising performance.
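The signal-generation step described in this abstract (separate audio signals modulated onto different ultrasonic carriers and summed into one drive signal) can be sketched as below. The abstract does not specify the modulation scheme, so conventional double-sideband amplitude modulation is assumed here; the carrier frequencies, sample rate, modulation index, and the name `multi_carrier_signal` are likewise illustrative assumptions, and the nonlinear demodulation in air is not modeled.

```python
import math


def multi_carrier_signal(audio_channels, carrier_freqs_hz, sample_rate_hz, mod_index=0.5):
    """Sum amplitude-modulated carriers into one composite drive signal.

    audio_channels: list of equal-length sample lists with values in [-1, 1].
    carrier_freqs_hz: one ultrasonic carrier frequency per audio channel.
    Assumes double-sideband AM; the source does not state the scheme.
    """
    if len(audio_channels) != len(carrier_freqs_hz):
        raise ValueError("need exactly one carrier per audio channel")
    n_samples = len(audio_channels[0])
    composite = [0.0] * n_samples
    for audio, carrier_hz in zip(audio_channels, carrier_freqs_hz):
        for i in range(n_samples):
            t = i / sample_rate_hz
            envelope = 1.0 + mod_index * audio[i]
            composite[i] += envelope * math.cos(2.0 * math.pi * carrier_hz * t)
    # Average over channels so the peak stays within 1 + mod_index.
    scale = 1.0 / len(audio_channels)
    return [s * scale for s in composite]


# Two test tones (500 Hz and 1 kHz) on assumed 40 kHz and 60 kHz carriers.
fs = 192_000
n = 1920  # 10 ms of samples
tone_a = [math.sin(2.0 * math.pi * 500.0 * i / fs) for i in range(n)]
tone_b = [math.sin(2.0 * math.pi * 1000.0 * i / fs) for i in range(n)]
drive = multi_carrier_signal([tone_a, tone_b], [40_000.0, 60_000.0], fs)
```

The sample rate is chosen well above twice the highest carrier frequency so that both modulated carriers are represented without aliasing.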
