Yang Qi

aiXiv: A Next-Generation Open Access Ecosystem for Scientific Discovery Generated by AI Scientists

Aug 20, 2025

Abstract:Recent advances in large language models (LLMs) have enabled AI agents to autonomously generate scientific proposals, conduct experiments, author papers, and perform peer reviews. Yet this flood of AI-generated research content collides with a fragmented and largely closed publication ecosystem. Traditional journals and conferences rely on human peer review, making them difficult to scale and often reluctant to accept AI-generated research content; existing preprint servers (e.g. arXiv) lack rigorous quality-control mechanisms. Consequently, a significant amount of high-quality AI-generated research lacks appropriate venues for dissemination, hindering its potential to advance scientific progress. To address these challenges, we introduce aiXiv, a next-generation open-access platform for human and AI scientists. Its multi-agent architecture allows research proposals and papers to be submitted, reviewed, and iteratively refined by both human and AI scientists. It also provides API and MCP interfaces that enable seamless integration of heterogeneous human and AI scientists, creating a scalable and extensible ecosystem for autonomous scientific discovery. Through extensive experiments, we demonstrate that aiXiv is a reliable and robust platform that significantly enhances the quality of AI-generated research proposals and papers after iterative revising and reviewing on aiXiv. Our work lays the groundwork for a next-generation open-access ecosystem for AI scientists, accelerating the publication and dissemination of high-quality AI-generated research content. Code is available at https://github.com/aixiv-org. Website is available at https://forms.gle/DxQgCtXFsJ4paMtn8.

Stochastic Forward-Forward Learning through Representational Dimensionality Compression

May 22, 2025

Abstract:The Forward-Forward (FF) algorithm provides a bottom-up alternative to backpropagation (BP) for training neural networks, relying on a layer-wise "goodness" function to guide learning. Existing goodness functions, inspired by energy-based learning (EBL), are typically defined as the sum of squared post-synaptic activations, neglecting the correlations between neurons. In this work, we propose a novel goodness function termed dimensionality compression that uses the effective dimensionality (ED) of fluctuating neural responses to incorporate second-order statistical structure. Our objective minimizes ED for clamped inputs when noise is considered while maximizing it across the sample distribution, promoting structured representations without the need to prepare negative samples. We demonstrate that this formulation achieves competitive performance compared to other non-BP methods. Moreover, we show that noise plays a constructive role that can enhance generalization and improve inference when predictions are derived from the mean of squared outputs, which is equivalent to making predictions based on the energy term. Our findings contribute to the development of more biologically plausible learning algorithms and suggest a natural fit for neuromorphic computing, where stochasticity is a computational resource rather than a nuisance. The code is available at https://github.com/ZhichaoZhu/StochasticForwardForward

Statistical Limits in Random Tensors with Multiple Correlated Spikes

Mar 05, 2025

Abstract:We use tools from random matrix theory to study the multi-spiked tensor model, i.e., a rank-$r$ deformation of a symmetric random Gaussian tensor. In particular, thanks to the nature of local optimization methods used to find the maximum likelihood estimator of this model, we propose to study the phase transition phenomenon for finding critical points of the corresponding optimization problem, i.e., those points defined by the Karush-Kuhn-Tucker (KKT) conditions. Moreover, we characterize the limiting alignments between the estimated signals corresponding to a critical point of the likelihood and the ground truth signals. With the help of these results, we propose a new estimator of the rank-$r$ tensor weights by solving a system of polynomial equations, which is asymptotically unbiased, contrary to the maximum likelihood estimator.

Multi-Center Study on Deep Learning-Assisted Detection and Classification of Fetal Central Nervous System Anomalies Using Ultrasound Imaging

Jan 01, 2025

Abstract:Prenatal ultrasound evaluates fetal growth and detects congenital abnormalities during pregnancy, but the examination of ultrasound images by radiologists requires expertise and sophisticated equipment, which would otherwise fail to improve the rate of identifying specific types of fetal central nervous system (CNS) abnormalities and result in unnecessary patient examinations. We construct a deep learning model to improve the overall accuracy of the diagnosis of fetal cranial anomalies to aid prenatal diagnosis. In our collected multi-center dataset of fetal craniocerebral anomalies covering four typical anomalies of the fetal CNS (anencephaly, encephalocele (including meningocele), holoprosencephaly, and rachischisis), patient-level prediction accuracy reaches 94.5%, with an AUROC value of 99.3%. In the subgroup analyses, our model is applicable to the entire gestational period, with good identification of fetal anomaly types for any gestational period. Heatmaps superimposed on the ultrasound images not only provide a visual interpretation for the algorithm but also provide an intuitive visual aid to the physician by highlighting key areas that need to be reviewed, helping the physician to quickly identify and validate key areas. Finally, the retrospective reader study demonstrates that by combining the automatic prediction of the DL system with the professional judgment of the radiologist, the diagnostic accuracy and efficiency can be effectively improved and the misdiagnosis rate can be reduced, which has an important clinical application prospect.

Towards free-response paradigm: a theory on decision-making in spiking neural networks

Apr 16, 2024

Abstract:The energy-efficient and brain-like information processing abilities of Spiking Neural Networks (SNNs) have attracted considerable attention, establishing them as a crucial element of brain-inspired computing. One prevalent challenge encountered by SNNs is the trade-off between inference speed and accuracy, which requires sufficient time to achieve the desired level of performance. Drawing inspiration from animal behavior experiments that demonstrate a connection between decision-making reaction times, task complexity, and confidence levels, this study seeks to apply these insights to SNNs. The focus is on understanding how SNNs make inferences, with a particular emphasis on untangling the interplay between signal and noise in decision-making processes. The proposed theoretical framework introduces a new optimization objective for SNN training, highlighting the importance of not only the accuracy of decisions but also the development of predictive confidence through learning from past experiences. Experimental results demonstrate that SNNs trained according to this framework exhibit improved confidence expression, leading to better decision-making outcomes. In addition, a strategy is introduced for efficient decision-making during inference, which allows SNNs to complete tasks more quickly and can use stopping times as indicators of decision confidence. By integrating neuroscience insights with neuromorphic computing, this study opens up new possibilities to explore the capabilities of SNNs and advance their application in complex decision-making scenarios.

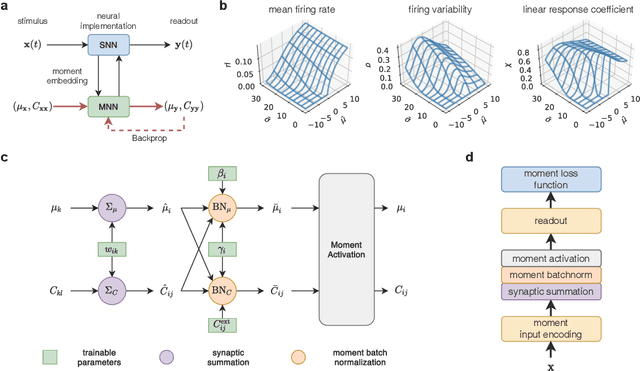

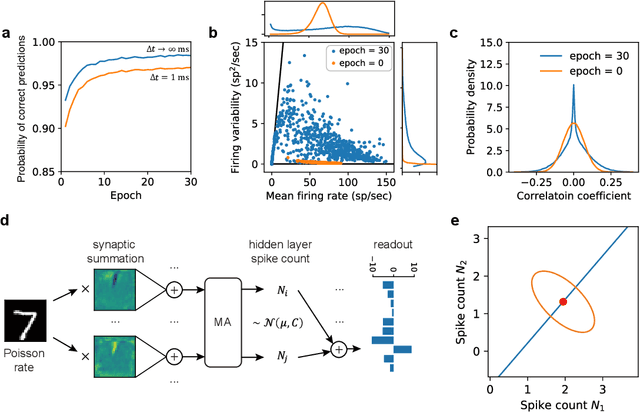

Learn to integrate parts for whole through correlated neural variability

Jan 01, 2024

Abstract:Sensory perception originates from the responses of sensory neurons, which react to a collection of sensory signals linked to various physical attributes of a singular perceptual object. Unraveling how the brain extracts perceptual information from these neuronal responses is a pivotal challenge in both computational neuroscience and machine learning. Here we introduce a statistical mechanical theory, where perceptual information is first encoded in the correlated variability of sensory neurons and then reformatted into the firing rates of downstream neurons. Applying this theory, we illustrate the encoding of motion direction using neural covariance and demonstrate high-fidelity direction recovery by spiking neural networks. Networks trained under this theory also show enhanced performance in classifying natural images, achieving higher accuracy and faster inference speed. Our results challenge the traditional view of neural covariance as a secondary factor in neural coding, highlighting its potential influence on brain function.

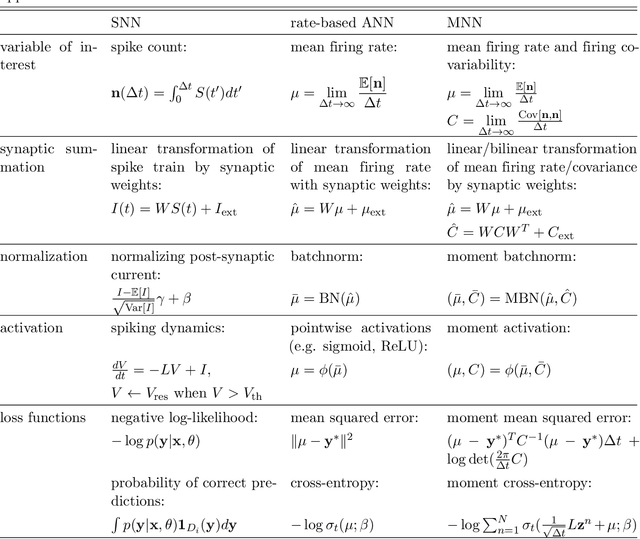

Probabilistic Computation with Emerging Covariance: Towards Efficient Uncertainty Quantification

May 31, 2023

Abstract:Building robust, interpretable, and secure artificial intelligence systems requires some degree of quantifying and representing uncertainty via a probabilistic perspective, as it allows them to mimic human cognitive abilities. However, probabilistic computation presents significant challenges due to its inherent complexity. In this paper, we develop an efficient and interpretable probabilistic computation framework by truncating the probabilistic representation up to its first two moments, i.e., mean and covariance. We instantiate the framework by training a deterministic surrogate of a stochastic network that learns the complex probabilistic representation via combinations of simple activations, encapsulating the non-linear coupling of the mean and covariance. We show that when the mean is supervised for optimizing the task objective, the unsupervised covariance spontaneously emerging from the non-linear coupling with the mean faithfully captures the uncertainty associated with model predictions. Our research highlights the inherent computability and simplicity of probabilistic computation, enabling its wider application in large-scale settings.

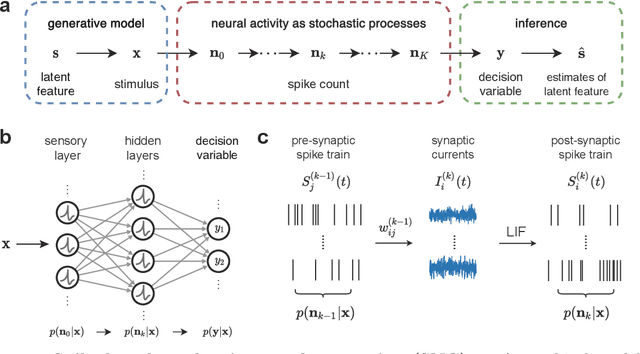

Toward spike-based stochastic neural computing

May 23, 2023

Abstract:Inspired by the highly irregular spiking activity of cortical neurons, stochastic neural computing is an attractive theory for explaining the operating principles of the brain and the ability to represent uncertainty by intelligent agents. However, computing and learning with high-dimensional joint probability distributions of spiking neural activity across large populations of neurons present a major challenge. To overcome this, we develop a novel moment embedding approach to enable gradient-based learning in spiking neural networks accounting for the propagation of correlated neural variability. We show that, under the supervised learning setting, a spiking neural network trained this way is able to learn the task while simultaneously minimizing uncertainty, and further demonstrate its application to neuromorphic hardware. Built on the principle of spike-based stochastic neural computing, the proposed method opens up new opportunities for developing machine intelligence capable of computing uncertainty and for designing unconventional computing architectures.

Sub-Nyquist Sampling with Optical Pulses for Photonic Blind Source Separation

Jul 25, 2021

Abstract:We proposed and demonstrated an optical pulse sampling method for photonic blind source separation. It can separate mixed signals of large bandwidth using a low sampling frequency, which reduces the workload of digital signal processing.

Photonic Interference Cancellation with Hybrid Free Space Optical Communication and MIMO Receiver

Jul 25, 2021

Abstract:We proposed and demonstrated a hybrid blind source separation system that can switch between multiple-input multiple-output (MIMO) mode and free-space optical communication mode, depending on the situation, to obtain the best conditions for separation.
