Lu Bai

LGAN: An Efficient High-Order Graph Neural Network via the Line Graph Aggregation

Dec 11, 2025

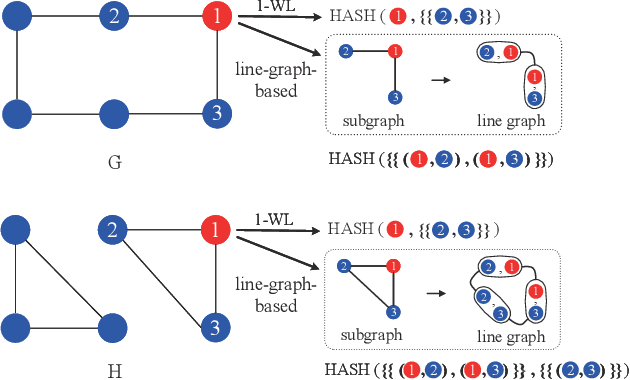

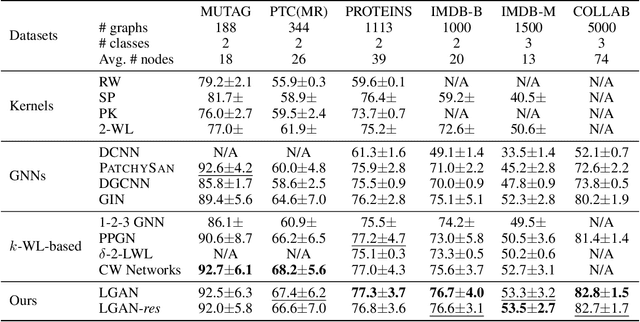

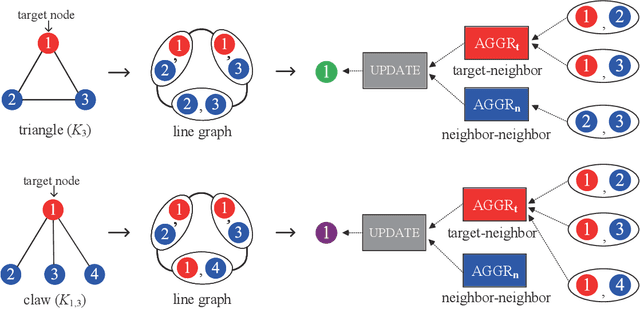

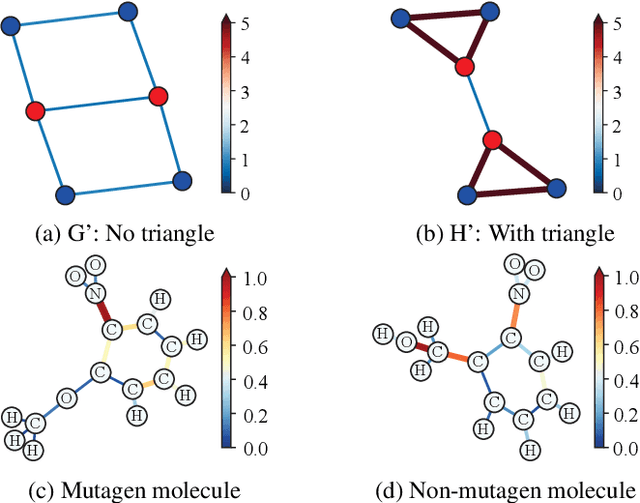

Abstract: Graph Neural Networks (GNNs) have emerged as a dominant paradigm for graph classification. However, most existing GNNs rely on message passing between neighboring nodes, so their expressivity is limited by the 1-dimensional Weisfeiler-Lehman (1-WL) test. Although a number of k-WL-based GNNs have been proposed to overcome this limitation, their computational cost increases rapidly with k, which significantly restricts their practical applicability. Moreover, since k-WL models operate mainly on node tuples, k-WL-based GNNs cannot retain the fine-grained node- or edge-level semantics required by attribution methods (e.g., Integrated Gradients), which limits their interpretability. To overcome these shortcomings, in this paper, we propose a novel Line Graph Aggregation Network (LGAN), which constructs a line graph from the induced subgraph centered at each node to perform higher-order aggregation. We theoretically prove that LGAN not only has greater expressive power than the 2-WL test under injective aggregation assumptions, but also has lower time complexity. Empirical evaluations on benchmarks demonstrate that LGAN outperforms state-of-the-art k-WL-based GNNs while offering better interpretability.
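To make "a line graph constructed from the induced subgraph centered at each node" concrete, here is a minimal sketch. The abstract does not give LGAN's subgraph radius or aggregation functions, so this assumes a 1-hop induced subgraph and a plain sum aggregation over line-graph neighbors; the function names (`ego_subgraph_edges`, `line_graph`, `aggregate`) are hypothetical and this is not the authors' implementation.

```python
from itertools import combinations


def ego_subgraph_edges(adj, center):
    """Edges of the subgraph induced by `center` and its 1-hop neighbours.

    adj: undirected adjacency dict {node: iterable of neighbours}, with
    integer node labels. Assumption: radius-1 neighbourhood.
    """
    nodes = {center} | set(adj[center])
    edges = set()
    for u in nodes:
        for w in adj[u]:
            if w in nodes and u < w:  # keep each undirected edge once
                edges.add((u, w))
    return sorted(edges)


def line_graph(edges):
    """Line graph: one vertex per edge, linked when two edges share an endpoint."""
    lg = {e: set() for e in edges}
    for e, f in combinations(edges, 2):
        if set(e) & set(f):
            lg[e].add(f)
            lg[f].add(e)
    return lg


def aggregate(lg, edge_feat):
    """One illustrative sum-aggregation step over the line graph (hypothetical)."""
    return {e: edge_feat[e] + sum(edge_feat[f] for f in lg[e]) for e in lg}


# Triangle 0-1-2 plus a pendant node 3 attached to node 0.
adj = {0: [1, 2, 3], 1: [0, 2], 2: [0, 1], 3: [0]}
edges = ego_subgraph_edges(adj, 0)  # [(0, 1), (0, 2), (0, 3), (1, 2)]
h = aggregate(line_graph(edges), {e: 1.0 for e in edges})
```

Because each line-graph vertex is an edge of the original graph, features stay attached to concrete edges, which is consistent with the abstract's point that edge-level semantics remain available to attribution methods.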

WiCo-MG: Wireless Channel Foundation Model for Multipath Generation via Synesthesia of Machines

Nov 19, 2025

Abstract: Precise modeling of channel multipath is essential for understanding wireless propagation environments and optimizing communication systems. In particular, sixth-generation (6G) artificial intelligence (AI)-native communication systems demand massive and high-quality multipath channel data to enable intelligent model training and performance optimization. In this paper, we propose a wireless channel foundation model (WiCo) for multipath generation (WiCo-MG) via Synesthesia of Machines (SoM). To provide a solid training foundation for WiCo-MG, a new synthetic intelligent sensing-communication dataset for uncrewed aerial vehicle (UAV)-to-ground (U2G) communications is constructed. To overcome the challenges of cross-modal alignment and mapping, a two-stage training framework is proposed. In the first stage, sensing images are embedded into discrete-continuous SoM feature spaces, and multipath maps are embedded into a sensing-initialized discrete SoM space to align the representations. In the second stage, a mixture of shared and routed experts (S-R MoE) Transformer with frequency-aware expert routing learns the mapping from sensing to channel SoM feature spaces, enabling decoupled and adaptive multipath generation. Experimental results demonstrate that WiCo-MG achieves state-of-the-art in-distribution generation performance and superior out-of-distribution generalization, reducing NMSE by more than 2.59 dB over baselines, while exhibiting strong scalability in model and dataset growth and extensibility to new multipath parameters and tasks. Owing to higher accuracy, stronger generalization, and better scalability, WiCo-MG is expected to enable massive and high-fidelity channel data generation for the development of 6G AI-native communication systems.
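The following is a minimal, per-token sketch of a shared-plus-routed mixture-of-experts forward pass. It assumes the common pattern where shared experts process every token and a router picks the top-k routed experts; "frequency-aware" routing is modeled here, purely as an assumption, by appending the carrier frequency to the router input. The experts are toy callables and `sr_moe` is a hypothetical name, not WiCo-MG's code.

```python
import math


def softmax(values):
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    s = sum(exps)
    return [e / s for e in exps]


def sr_moe(x, freq_ghz, shared, routed, router_w, top_k=2):
    """Shared-routed MoE forward pass for one token (illustrative sketch).

    x: token features (list of floats).
    freq_ghz: carrier frequency appended to the router input (assumed form
    of frequency-aware routing).
    shared / routed: lists of callables mapping a feature list to a feature list.
    router_w: one weight row per routed expert, each of length len(x) + 1.
    Returns (output features, indices of the selected routed experts).
    """
    router_in = x + [freq_ghz]
    logits = [sum(w * v for w, v in zip(row, router_in)) for row in router_w]
    top = sorted(range(len(routed)), key=lambda i: logits[i], reverse=True)[:top_k]
    gates = softmax([logits[i] for i in top])  # renormalize over selected experts

    out = [0.0] * len(x)
    for expert in shared:  # shared experts see every token
        out = [o + y for o, y in zip(out, expert(x))]
    for g, i in zip(gates, top):  # only the top-k routed experts are evaluated
        out = [o + g * y for o, y in zip(out, routed[i](x))]
    return out, top


# Toy experts: identity as the shared expert, three scaling routed experts.
shared = [lambda v: list(v)]
routed = [lambda v, s=s: [s * a for a in v] for s in (1.0, 2.0, 3.0)]
router_w = [[0.0, 0.0, 1.0], [0.0, 0.0, -1.0], [1.0, 0.0, 0.0]]
print(sr_moe([1.0, 0.0], 28.0, shared, routed, router_w, top_k=1))  # ([2.0, 0.0], [0])
print(sr_moe([1.0, 0.0], 0.5, shared, routed, router_w, top_k=1))   # ([4.0, 0.0], [2])
```

In the toy example, the same token is sent to different routed experts purely because the frequency input changes, which is the behavior the assumed frequency-aware router is meant to illustrate.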

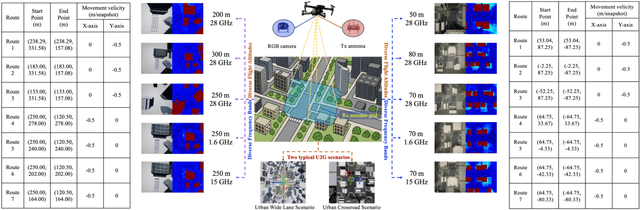

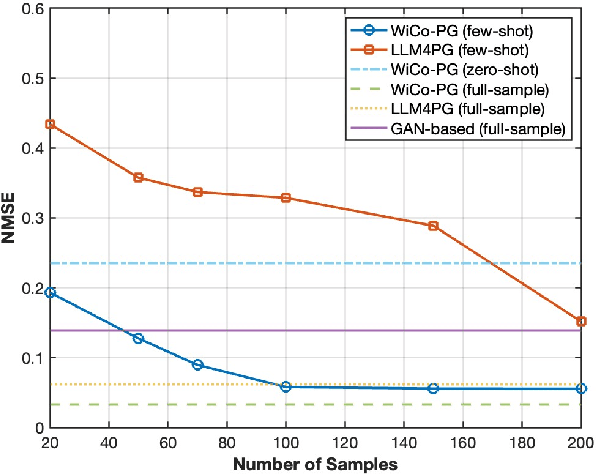

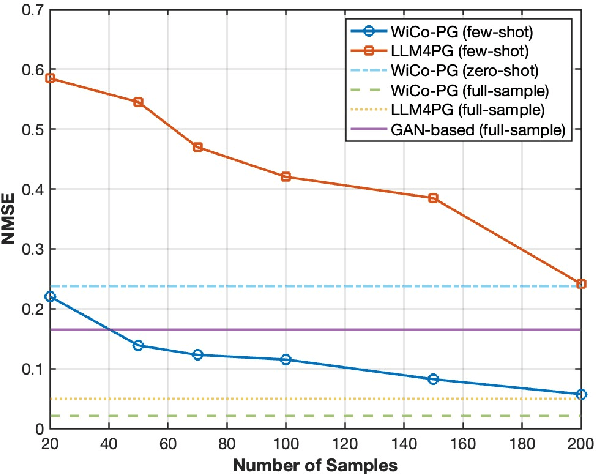

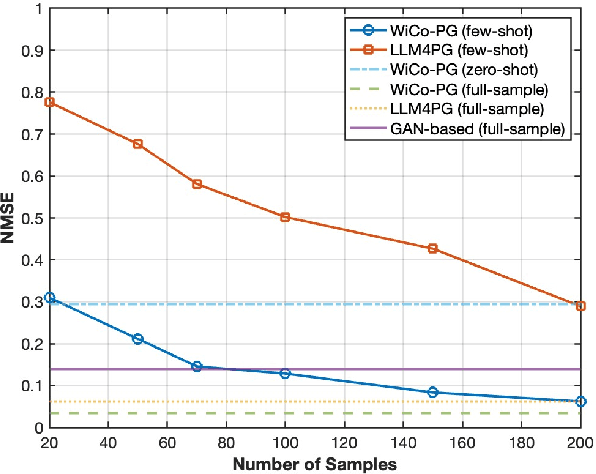

WiCo-PG: Wireless Channel Foundation Model for Pathloss Map Generation via Synesthesia of Machines

Nov 19, 2025

Abstract: A wireless channel foundation model for pathloss map generation (WiCo-PG) via Synesthesia of Machines (SoM) is developed for the first time. Considering sixth-generation (6G) uncrewed aerial vehicle (UAV)-to-ground (U2G) scenarios, a new multi-modal sensing-communication dataset is constructed for WiCo-PG pre-training, covering multiple U2G scenarios, diverse flight altitudes, and diverse frequency bands. Based on the constructed dataset, the proposed WiCo-PG enables cross-modal pathloss map generation from RGB images captured in different scenarios and at different flight altitudes. In WiCo-PG, a novel network architecture for cross-modal pathloss map generation based on dual vector quantized generative adversarial networks (VQGANs) and a Transformer is proposed. Furthermore, a novel frequency-guided shared-routed mixture of experts (S-R MoE) architecture is designed for cross-modal pathloss map generation. Simulation results demonstrate that, through pre-training, the proposed WiCo-PG achieves improved pathloss map generation accuracy with a normalized mean squared error (NMSE) of 0.012, outperforming the large language model (LLM)-based scheme, i.e., LLM4PG, and the conventional deep learning-based scheme by more than 6.98 dB. Owing to its enhanced generality, WiCo-PG further outperforms LLM4PG by at least 1.37 dB using only 2.7% of the samples in few-shot generalization.
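Several of these abstracts report accuracy as NMSE and improvements in dB. The sketch below shows the arithmetic, assuming the common definition NMSE = sum((pred - target)^2) / sum(target^2); the papers may normalize differently, and the helper names are hypothetical.

```python
import math


def nmse(pred, target):
    """Normalized MSE: sum((pred - target)^2) / sum(target^2) (assumed definition)."""
    err = sum((p - t) ** 2 for p, t in zip(pred, target))
    return err / sum(t ** 2 for t in target)


def to_db(ratio):
    """Express a linear ratio such as NMSE in decibels."""
    return 10 * math.log10(ratio)


def db_gap_to_factor(gap_db):
    """A gap of gap_db dB between two NMSE values is a linear ratio of 10**(gap_db / 10)."""
    return 10 ** (gap_db / 10)


print(round(to_db(0.012), 2))            # -19.21
print(round(db_gap_to_factor(6.98), 2))  # 4.99
```

Read this way, an NMSE of 0.012 is about -19.21 dB, and a margin of 6.98 dB corresponds to roughly a fivefold reduction in linear NMSE.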

LLM4MG: Adapting Large Language Model for Multipath Generation via Synesthesia of Machines

Sep 18, 2025

Abstract: Based on Synesthesia of Machines (SoM), a large language model (LLM) is adapted for multipath generation (LLM4MG) for the first time. Considering a typical sixth-generation (6G) vehicle-to-infrastructure (V2I) scenario, a new multi-modal sensing-communication dataset, named SynthSoM-V2I, is constructed, including channel multipath information, millimeter wave (mmWave) radar sensory data, RGB-D images, and light detection and ranging (LiDAR) point clouds. Based on the SynthSoM-V2I dataset, the proposed LLM4MG leverages Large Language Model Meta AI (LLaMA) 3.2 for multipath generation from multi-modal sensory data. LLM4MG aligns the multi-modal feature space with the LLaMA semantic space through feature extraction and fusion networks. To further transfer general knowledge from the pre-trained LLaMA to multipath generation from multi-modal sensory data, low-rank adaptation (LoRA) parameter-efficient fine-tuning and propagation-aware prompt engineering are exploited. Simulation results demonstrate that the proposed LLM4MG outperforms conventional deep learning-based methods in terms of line-of-sight (LoS)/non-LoS (NLoS) classification, with an accuracy of 92.76%; multipath power/delay generation precision, with a normalized mean square error (NMSE) of 0.099/0.032; and cross-vehicular traffic density (VTD), cross-band, and cross-scenario generalization. The utility of the proposed LLM4MG is further validated through real-world generalization. The necessity of high-precision multipath generation for system design is also demonstrated by a channel capacity comparison.
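For readers unfamiliar with LoRA, here is a minimal sketch of the standard low-rank adaptation of a single linear layer, h = W x + (alpha / r) B A x, where W stays frozen and only A and B are trained. LLM4MG's actual rank, scaling, and target layers are not stated in the abstract; the values and helper names below are illustrative.

```python
def matvec(m, v):
    """Dense matrix-vector product with lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(w, a, b, x, alpha, rank):
    """h = W x + (alpha / rank) * B (A x).

    w: d_out x d_in (frozen), a: rank x d_in, b: d_out x rank (trainable).
    """
    base = matvec(w, x)
    delta = matvec(b, matvec(a, x))
    scale = alpha / rank
    return [h + scale * d for h, d in zip(base, delta)]


def lora_param_count(d_in, d_out, rank):
    """Trainable parameters in A and B, versus d_in * d_out for full fine-tuning."""
    return rank * (d_in + d_out)


W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]
# With B initialized to zero, the adapted layer initially matches the frozen layer.
print(lora_forward(W, A, [[0.0], [0.0]], [1.0, 2.0], alpha=2.0, rank=1))  # [1.0, 2.0]
print(lora_forward(W, A, [[1.0], [0.0]], [1.0, 2.0], alpha=2.0, rank=1))  # [7.0, 2.0]
print(lora_param_count(4096, 4096, 8))  # 65536
```

The zero initialization of B means fine-tuning starts exactly from the pre-trained behavior. For a 4096 x 4096 layer at rank 8, only 65,536 parameters are trained instead of about 16.8 million, which is what makes this kind of adaptation parameter-efficient.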

Multi-Modal Intelligent Channel Modeling Framework for 6G-Enabled Networked Intelligent Systems

Sep 09, 2025

Abstract: The design and technology development of 6G-enabled networked intelligent systems need an accurate real-time channel model as their cornerstone. However, under the new requirements of 6G-enabled networked intelligent systems, conventional channel modeling methods face many limitations. Fortunately, the multi-modal sensors mounted on intelligent agents bring timely opportunities: the intelligent integration and mutually beneficial mechanism between communications and multi-modal sensing could be investigated using artificial intelligence (AI) technologies. In this case, the mapping relationship between the physical environment and the electromagnetic channel could be explored via Synesthesia of Machines (SoM). This article presents a novel multi-modal intelligent channel modeling (MMICM) framework for 6G-enabled networked intelligent systems, which establishes a nonlinear model between multi-modal sensing and channel characteristics, including both large-scale and small-scale channel characteristics. The architecture and features of the proposed intelligent modeling framework are expounded, and the key technologies involved are analyzed. Finally, system-engaged applications and potential research directions of the MMICM framework are outlined.

LLM4SP: Large Language Models for Scatterer Prediction via Synesthesia of Machines

May 23, 2025

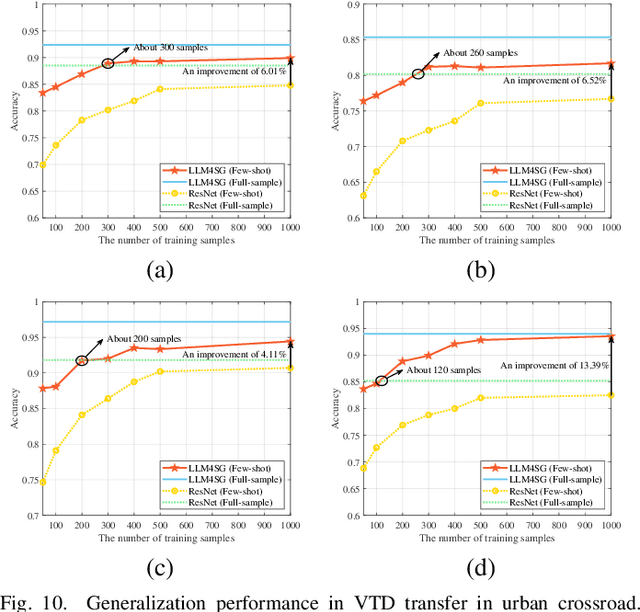

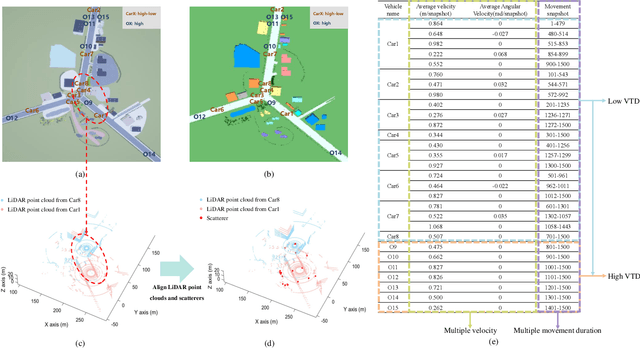

Abstract: Guided by Synesthesia of Machines (SoM), the nonlinear mapping relationship between sensory and communication information serves as a powerful tool to enhance both the accuracy and generalization of vehicle-to-vehicle (V2V) multi-modal intelligent channel modeling (MMICM) in intelligent transportation systems (ITSs). To explore the general mapping relationship between the physical environment and the electromagnetic space, a new intelligent sensing-communication integration dataset, named V2V-M3, is constructed for multiple V2V communication scenarios with multiple frequency bands and multiple vehicular traffic densities (VTDs). Leveraging the strong representation and cross-modal inference capabilities of large language models (LLMs), a novel LLM-based method for Scatterer Prediction (LLM4SP) from light detection and ranging (LiDAR) point clouds is developed. To address the inherent and significant differences across multi-modal data, a synergistically optimized four-module architecture, comprising preprocessor, embedding, backbone, and output modules, is designed by considering the sensing/channel characteristics and the electromagnetic propagation mechanism. On the basis of cross-modal representation alignment and positional encoding, the LLM4SP network is fine-tuned to capture the general mapping relationship between LiDAR point clouds and scatterers. Simulation results demonstrate that the proposed LLM4SP achieves superior performance in full-sample and generalization testing, significantly outperforming small models across different frequency bands, scenarios, and VTDs.

WaveNet-Volterra Neural Networks for Active Noise Control: A Fully Causal Approach

Apr 06, 2025

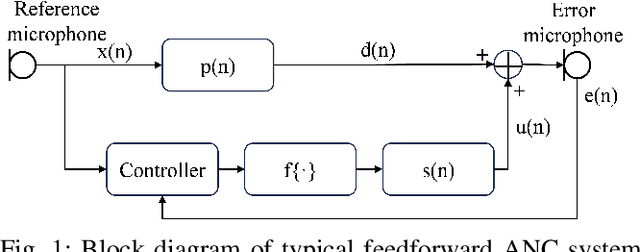

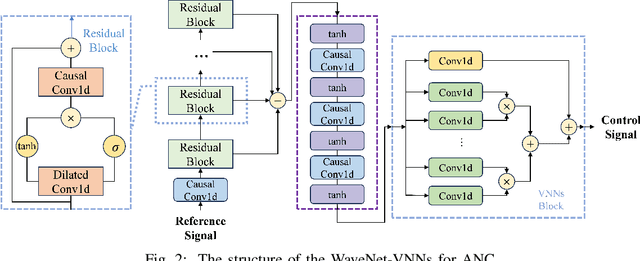

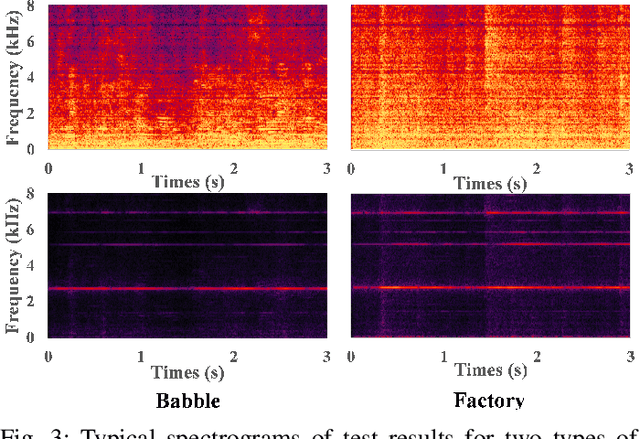

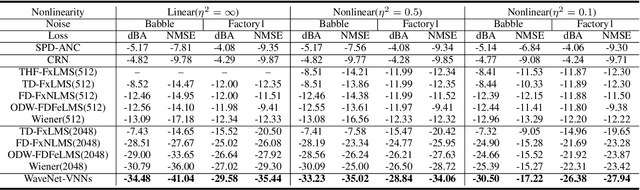

Abstract: Active Noise Control (ANC) systems are challenged by nonlinear distortions, which degrade the performance of traditional adaptive filters. While deep learning-based ANC algorithms have emerged to address nonlinearity, existing approaches often overlook critical limitations: (1) end-to-end Deep Neural Network (DNN) models frequently violate causality constraints inherent to real-time ANC applications; (2) many studies compare DNN-based methods against simplified or low-order adaptive filters rather than fully optimized high-order counterparts. In this letter, we propose a causality-preserving time-domain ANC framework that synergizes WaveNet with Volterra Neural Networks (VNNs), explicitly addressing system nonlinearity while ensuring strict causal operation. Unlike prior DNN-based approaches, our method is benchmarked against both state-of-the-art deep learning architectures and rigorously optimized high-order adaptive filters, including Wiener solutions. Simulations demonstrate that the proposed framework achieves superior performance over existing DNN methods and traditional algorithms, revealing that prior claims of DNN superiority stem from incomplete comparisons with suboptimal traditional baselines. Source code is available at https://github.com/Lu-Baihh/WaveNet-VNNs-for-ANC.git.
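The causality constraint emphasized above can be illustrated with a classic second-order Volterra filter that only reads current and past input samples. This is a minimal sketch of the textbook Volterra form, not the WaveNet-VNN architecture from the linked repository; `volterra2_causal` is a hypothetical name.

```python
def volterra2_causal(x, h1, h2):
    """Causal second-order Volterra filter.

    y[n] = sum_k h1[k] x[n-k] + sum_{k1,k2} h2[k1][k2] x[n-k1] x[n-k2]

    Only current and past samples are used (x[m] is treated as 0 for m < 0),
    so y[n] never depends on future input, as real-time ANC requires.
    """
    def past(n, k):
        return x[n - k] if n - k >= 0 else 0.0

    y = []
    for n in range(len(x)):
        linear = sum(h1[k] * past(n, k) for k in range(len(h1)))
        quadratic = sum(
            h2[k1][k2] * past(n, k1) * past(n, k2)
            for k1 in range(len(h2))
            for k2 in range(len(h2[k1]))
        )
        y.append(linear + quadratic)
    return y


h1 = [0.5, 0.25]              # first-order (linear) kernel
h2 = [[0.1, 0.0], [0.0, 0.2]]  # second-order (quadratic) kernel
ya = volterra2_causal([1.0, 2.0, 3.0, 4.0], h1, h2)
yb = volterra2_causal([1.0, 2.0, 3.0, 99.0], h1, h2)
# Changing the last input sample leaves all earlier outputs untouched.
print(ya[:3] == yb[:3])  # True
```

The quadratic kernel is what lets a Volterra model capture the nonlinear distortions mentioned in the abstract, while the index restriction k >= 0 is what keeps it causal.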

Improving Question Embeddings with Cognitive Representation Optimization for Knowledge Tracing

Apr 05, 2025

Abstract: Knowledge Tracing (KT) aims to track changes in students' knowledge states and predict their future answers based on their historical answer records. Current research on KT modeling focuses on predicting students' future performance from existing, unupdated records of student learning interactions. However, these approaches ignore distractors in the answering process (such as slipping and guessing) and overlook the fact that static cognitive representations are temporary and limited. Most of them assume that there are no distractors in the answering process and that the record representations fully reflect students' level of understanding and knowledge proficiency. This assumption can leave the original records with many inconsistency and incoordination issues. Therefore, we propose a Cognitive Representation Optimization for Knowledge Tracing (CRO-KT) model, which utilizes a dynamic programming algorithm to optimize the structure of cognitive representations. This ensures that the structure matches students' cognitive patterns with respect to exercise difficulty. Furthermore, we use a co-optimization algorithm to optimize the cognitive representations of the sub-target exercises with respect to the overall pattern of exercise responses, treating all co-related exercises as a single goal. Meanwhile, the CRO-KT model fuses the relational embeddings learned from the bipartite graph with the optimized record representations in a weighted manner, enhancing the expression of students' cognition. Finally, experiments on three publicly available datasets validate the effectiveness of the proposed cognitive representation optimization model.

A Multi-modal Intelligent Channel Model for 6G Multi-UAV-to-Multi-Vehicle Communications

Jan 15, 2025

Abstract: In this paper, a novel multi-modal intelligent channel model for sixth-generation (6G) multiple-unmanned aerial vehicle (multi-UAV)-to-multi-vehicle communications is proposed. To thoroughly explore the mapping relationship between the physical environment and the electromagnetic space in the complex multi-UAV-to-multi-vehicle scenario, two new parameters, i.e., terrestrial traffic density (TTD) and aerial traffic density (ATD), are developed, and a new sensing-communication intelligent integrated dataset is constructed in a suburban scenario under different TTD and ATD conditions. With the aid of sensing data, i.e., light detection and ranging (LiDAR) point clouds, the parameters of static scatterers, terrestrial dynamic scatterers, and aerial dynamic scatterers in the electromagnetic space, e.g., number, distance, angle, and power, are quantified under different TTD and ATD conditions in the physical environment. In the proposed model, channel non-stationarity and consistency in the time and space domains, as well as channel non-stationarity in the frequency domain, are mimicked simultaneously. Channel statistical properties, such as the time-space-frequency correlation function (TSF-CF), time stationary interval (TSI), and Doppler power spectral density (DPSD), are derived and simulated. Simulation results match ray-tracing (RT) results well, which verifies the accuracy of the proposed multi-UAV-to-multi-vehicle channel model.
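As a rough illustration of the kind of statistic listed above, the sketch below computes the normalized time autocorrelation of a simplified sum-of-paths channel, R(dt) = sum_i p_i exp(j 2 pi f_i dt) / sum_i p_i, together with the classical per-path Doppler shift f = v f_c cos(theta) / c. This assumes a wide-sense-stationary channel with independent uniformly random path phases, which is a deliberate simplification: the proposed model is non-stationary and its TSF-CF is not reproduced here. Function names are hypothetical.

```python
import cmath
import math


def time_acf(powers, dopplers_hz, dt):
    """Normalized time ACF of a WSS sum-of-paths channel (simplified assumption).

    R(dt) = sum_i p_i * exp(j 2 pi f_i dt) / sum_i p_i
    """
    total = sum(powers)
    acc = sum(p * cmath.exp(2j * math.pi * f * dt) for p, f in zip(powers, dopplers_hz))
    return acc / total


def doppler_shift(speed_mps, carrier_hz, angle_rad, c=3e8):
    """Classical Doppler shift of one path: f = v * f_c * cos(theta) / c."""
    return speed_mps * carrier_hz * math.cos(angle_rad) / c


f_max = doppler_shift(30.0, 28e9, 0.0)  # about 2800 Hz at 30 m/s and 28 GHz
# Two equal-power paths at +/-100 Hz decorrelate completely after 2.5 ms.
print(abs(time_acf([1.0, 1.0], [100.0, -100.0], 0.0025)) < 1e-9)  # True
```

Under this simplification, the DPSD is a set of spectral lines of power p_i at the Doppler frequencies f_i, since it is the Fourier transform of R(dt).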

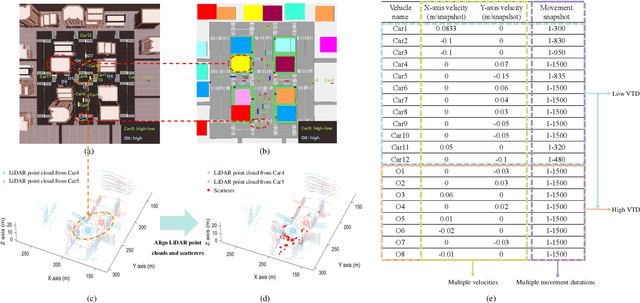

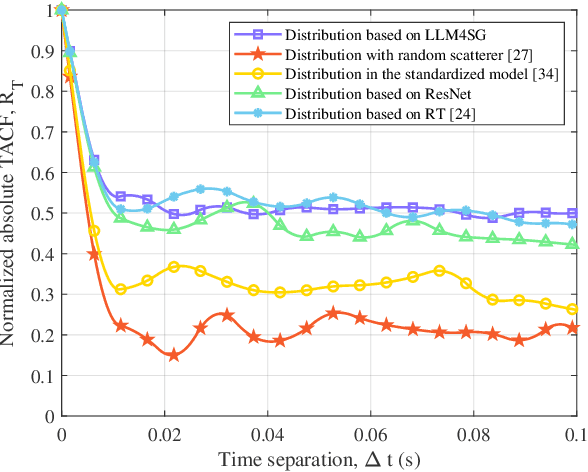

Synesthesia of Machines Based Multi-Modal Intelligent V2V Channel Model

Jan 13, 2025

Abstract: This paper proposes a novel sixth-generation (6G) multi-modal intelligent vehicle-to-vehicle (V2V) channel model from light detection and ranging (LiDAR) point clouds based on Synesthesia of Machines (SoM). To explore the mapping relationship between the physical environment and the electromagnetic space, a new high-fidelity V2V mixed sensing-communication integration simulation dataset with different vehicular traffic densities (VTDs) is constructed. Based on the constructed dataset, a novel scatterer recognition (ScaR) algorithm utilizing the SegNet neural network is developed to recognize scatterer spatial attributes from LiDAR point clouds via SoM. In the developed ScaR algorithm, the mapping relationship between LiDAR point clouds and scatterers is explored, and the distribution of scatterers is obtained in the form of grid maps. Furthermore, scatterers are classified into dynamic and static scatterers based on LiDAR point cloud features, and scatterer-related parameters, e.g., distance, angle, and number, are determined. Through ScaR, dynamic and static scatterers evolve with the LiDAR point clouds over time, which precisely models channel non-stationarity and consistency under different VTDs. Important channel statistical properties, such as the time-frequency correlation function (TF-CF) and Doppler power spectral density (DPSD), are obtained. Simulation results match ray-tracing (RT)-based results well, demonstrating both the necessity of exploring the mapping relationship and the utility of the proposed model.
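ScaR represents scatterer distributions as grid maps, and a segmentation network such as SegNet consumes image-like inputs. A minimal sketch of one plausible way to rasterize a LiDAR point cloud into a bird's-eye-view occupancy-count grid is shown below. The ranges, cell size, and the helper name `points_to_grid` are assumptions for illustration; the paper's actual preprocessing is not described in the abstract.

```python
import math


def points_to_grid(points, x_range, y_range, cell_size):
    """Rasterize LiDAR points into a 2-D occupancy-count grid (bird's-eye view).

    points: iterable of (x, y[, z, ...]) tuples in metres; points outside the
    given ranges are dropped. Returns grid[row][col], with rows indexing y.
    """
    x_min, x_max = x_range
    y_min, y_max = y_range
    n_cols = int(math.ceil((x_max - x_min) / cell_size))
    n_rows = int(math.ceil((y_max - y_min) / cell_size))
    grid = [[0] * n_cols for _ in range(n_rows)]
    for p in points:
        x, y = p[0], p[1]
        if x_min <= x < x_max and y_min <= y < y_max:
            # Clamp guards against floating-point rounding at the upper edge.
            col = min(int((x - x_min) // cell_size), n_cols - 1)
            row = min(int((y - y_min) // cell_size), n_rows - 1)
            grid[row][col] += 1
    return grid


pts = [(0.5, 0.5, 1.0), (0.6, 0.4, 0.2), (3.5, 1.2, 0.0), (10.0, 10.0, 0.0)]
grid = points_to_grid(pts, (0.0, 4.0), (0.0, 2.0), 1.0)
print(grid)  # [[2, 0, 0, 0], [0, 0, 0, 1]]
```

Counting points per cell (rather than storing a binary flag) keeps some density information, which may help distinguish dense static structures from sparse returns; this choice is itself an assumption.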
