Xin Yuan

Equal contributions

Beyond Seen Bounds: Class-Centric Polarization for Single-Domain Generalized Deep Metric Learning

Jan 14, 2026

Abstract: Single-domain generalized deep metric learning (SDG-DML) faces the dual challenge of category and domain shifts during testing, which limits real-world applications. Learning to generalize to both unseen categories and unseen domains is therefore a realistic goal for the SDG-DML task. To this end, existing SDG-DML methods employ a domain expansion-equalization strategy to expand the source data and generate out-of-distribution samples. However, these methods rely on proxy-based expansion, which tends to generate samples clustered near class proxies and fails to simulate the broad and distant domain shifts encountered in practice. To alleviate this problem, we propose CenterPolar, a novel SDG-DML framework that dynamically expands and constrains domain distributions to learn a generalizable DML model for wider target domain distributions. Specifically, CenterPolar contains two collaborative class-centric polarization phases: (1) Class-Centric Centrifugal Expansion ($C^3E$) and (2) Class-Centric Centripetal Constraint ($C^4$). In the first phase, $C^3E$ expands the source domain distribution by shifting the source data away from class centroids via centrifugal expansion, so that the model generalizes to more unseen domains. In the second phase, to consolidate domain-invariant class information for generalization to unseen categories, $C^4$ pulls all seen and unseen samples toward their class centroids while enforcing inter-class separation via a centripetal constraint. Extensive experimental results on the widely used CUB-200-2011 Ext., Cars196 Ext., DomainNet, PACS, and Office-Home datasets demonstrate the superiority and effectiveness of CenterPolar over existing state-of-the-art methods. The code will be released after acceptance.
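The two phases can be illustrated with a toy NumPy sketch. This is not the CenterPolar implementation: the abstract gives no formulas, so the expansion rule, the loss, and the names `centrifugal_expand`, `centripetal_loss`, `strength`, and `margin` are all assumptions chosen only to show the push-away and pull-back idea.

```python
import numpy as np

rng = np.random.default_rng(0)
features = rng.standard_normal((6, 4))      # toy embeddings, 6 samples of dimension 4
labels = np.array([0, 0, 0, 1, 1, 1])       # class ids must be 0..C-1 for the indexing below

def class_centroids(features, labels):
    """Mean embedding of each class, stacked in class-id order."""
    return np.stack([features[labels == c].mean(axis=0) for c in np.unique(labels)])

def centrifugal_expand(features, labels, centroids, strength=0.5):
    """Hypothetical expansion: move each sample away from its class centroid."""
    offsets = features - centroids[labels]
    return features + strength * offsets

def centripetal_loss(features, labels, centroids, margin=1.0):
    """Hypothetical constraint: pull samples to their centroid, keep centroids apart."""
    pull = np.mean(np.sum((features - centroids[labels]) ** 2, axis=1))
    dists = np.linalg.norm(centroids[:, None] - centroids[None], axis=-1)
    off_diag = dists[~np.eye(len(centroids), dtype=bool)]
    push = np.mean(np.maximum(0.0, margin - off_diag))
    return pull + push

centroids = class_centroids(features, labels)
expanded = centrifugal_expand(features, labels, centroids)
loss_before = centripetal_loss(features, labels, centroids)
loss_after = centripetal_loss(expanded, labels, centroids)
```

In this sketch the expanded samples sit 1.5 times farther from their centroids, so the pull term grows and a model trained on them has to work harder to re-concentrate each class.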

PrivGemo: Privacy-Preserving Dual-Tower Graph Retrieval for Empowering LLM Reasoning with Memory Augmentation

Jan 13, 2026

Abstract: Knowledge graphs (KGs) provide structured evidence that can ground large language model (LLM) reasoning for knowledge-intensive question answering. However, many practical KGs are private, and sending retrieved triples or exploration traces to closed-source LLM APIs introduces leakage risk. Existing privacy treatments focus on masking entity names, but they still face four limitations: structural leakage under semantic masking, uncontrollable remote interaction, fragile multi-hop and multi-entity reasoning, and limited experience reuse for stability and efficiency. To address these issues, we propose PrivGemo, a privacy-preserving retrieval-augmented framework for KG-grounded reasoning with memory-guided exposure control. PrivGemo uses a dual-tower design to keep raw KG knowledge local while enabling remote reasoning over an anonymized view that goes beyond name masking to limit both semantic and structural exposure. PrivGemo supports multi-hop, multi-entity reasoning by retrieving anonymized long-hop paths that connect all topic entities, while keeping grounding and verification on the local KG. A hierarchical controller and a privacy-aware experience memory further reduce unnecessary exploration and remote interactions. Comprehensive experiments on six benchmarks show that PrivGemo achieves overall state-of-the-art results, outperforming the strongest baseline by up to 17.1%. Furthermore, PrivGemo enables smaller models (e.g., Qwen3-4B) to achieve reasoning performance comparable to that of GPT-4-Turbo.

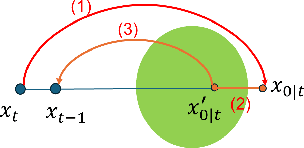

3One2: One-step Regression Plus One-step Diffusion for One-hot Modulation in Dual-path Video Snapshot Compressive Imaging

Dec 19, 2025

Abstract: Video snapshot compressive imaging (SCI) captures dynamic scene sequences through a two-dimensional (2D) snapshot, fundamentally relying on optical modulation for hardware compression and the corresponding software reconstruction. While mainstream video SCI using random binary modulation has demonstrated success, it inevitably results in temporal aliasing during compression. One-hot modulation, activating only one sub-frame per pixel, provides a promising solution for achieving perfect temporal decoupling, thereby alleviating issues associated with aliasing. However, no algorithms currently exist to fully exploit this potential. To bridge this gap, we propose an algorithm specifically designed for one-hot masks. First, leveraging the decoupling properties of one-hot modulation, we transform the reconstruction task into a generative video inpainting problem and introduce a stochastic differential equation (SDE) of the forward process that aligns with the hardware compression process. Next, we identify limitations of the pure diffusion method for video SCI and propose a novel framework that combines one-step regression initialization with one-step diffusion refinement. Furthermore, to mitigate the spatial degradation caused by one-hot modulation, we implement a dual optical path at the hardware level, utilizing complementary information from another path to enhance the inpainted video. To our knowledge, this is the first work integrating diffusion into video SCI reconstruction. Experiments conducted on synthetic datasets and real scenes demonstrate the effectiveness of our method.
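The temporal decoupling claim can be checked on a toy example. The following NumPy sketch is an illustration only, with made-up sizes and random data standing in for a scene: it forms one snapshot with a one-hot mask and one with a random binary mask, then tests whether masking the snapshot gives back the observed pixels of each sub-frame.

```python
import numpy as np

rng = np.random.default_rng(0)
T, H, W = 4, 6, 6                          # sub-frames and frame size (illustrative)
video = rng.random((T, H, W))              # stand-in for the high-speed scene

# One-hot mask: every pixel is open in exactly one sub-frame.
active = rng.integers(0, T, size=(H, W))
onehot_mask = (np.arange(T)[:, None, None] == active).astype(float)

# Random binary mask: a pixel may be open in several sub-frames.
binary_mask = rng.integers(0, 2, size=(T, H, W)).astype(float)

def snapshot(mask, frames):
    """Sum the modulated sub-frames into a single 2D measurement."""
    return (mask * frames).sum(axis=0)

y_onehot = snapshot(onehot_mask, video)
y_binary = snapshot(binary_mask, video)

# One-hot case: each snapshot pixel belongs to a single sub-frame, so masking
# the snapshot yields T partially observed frames (an inpainting problem).
partial_frames = onehot_mask * y_onehot
decoupled = np.allclose(partial_frames, onehot_mask * video)

# Random binary case: snapshot pixels mix several sub-frames (temporal aliasing).
aliased = not np.allclose(binary_mask * y_binary, binary_mask * video)
```

Each partial frame keeps roughly 1/T of its pixels, which is the spatial degradation that the abstract's second optical path is meant to compensate.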

SLCFormer: Spectral-Local Context Transformer with Physics-Grounded Flare Synthesis for Nighttime Flare Removal

Dec 17, 2025

Abstract: Lens flare is a common nighttime artifact caused by strong light sources scattering within camera lenses, leading to hazy streaks, halos, and glare that degrade visual quality. However, existing methods usually fail to effectively address nonuniform scattered flares, which severely reduces their applicability to complex real-world scenarios with diverse lighting conditions. To address this issue, we propose SLCFormer, a novel spectral-local context transformer framework for effective nighttime lens flare removal. SLCFormer integrates two key modules: the Frequency Fourier and Excitation Module (FFEM), which efficiently captures global contextual representations in the frequency domain to model flare characteristics, and the Directionally-Enhanced Spatial Module (DESM), which provides local structural enhancement and directional features in the spatial domain for precise flare removal. Furthermore, we introduce a ZernikeVAE-based scatter flare generation pipeline to synthesize physically realistic scatter flares with spatially varying PSFs, bridging optical physics and data-driven training. Extensive experiments on the Flare7K++ dataset demonstrate that our method achieves state-of-the-art performance, outperforming existing approaches in both quantitative metrics and perceptual visual quality, and generalizing robustly to real nighttime scenes with complex flare artifacts.

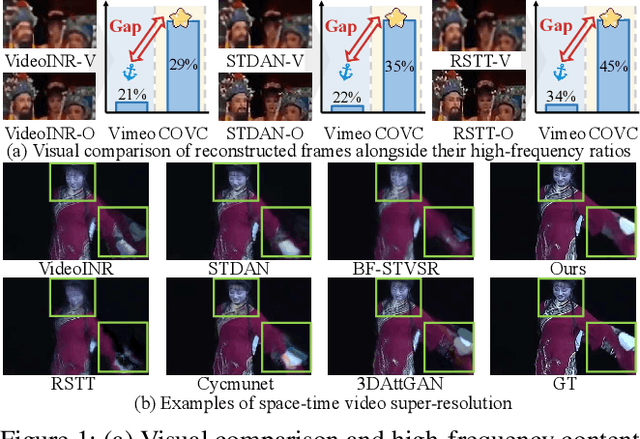

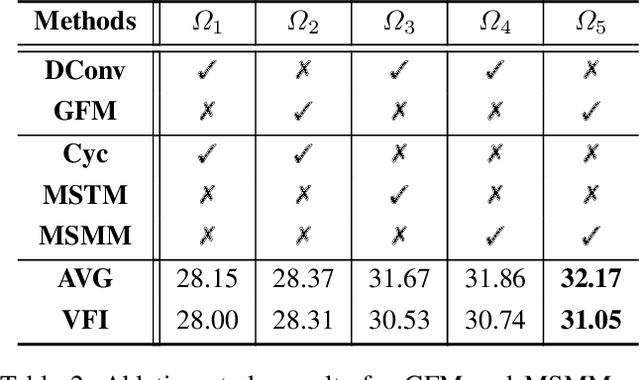

MambaOVSR: Multiscale Fusion with Global Motion Modeling for Chinese Opera Video Super-Resolution

Nov 09, 2025

Abstract: Chinese opera is celebrated for preserving classical art. However, early filming equipment limitations have degraded videos of last-century performances by renowned artists (e.g., low frame rates and resolution), hindering archival efforts. Although space-time video super-resolution (STVSR) has advanced significantly, applying it directly to opera videos remains challenging. The scarcity of datasets impedes the recovery of high-frequency details, and existing STVSR methods lack global modeling capabilities, compromising visual quality when handling opera's characteristic large motions. To address these challenges, we pioneer a large-scale Chinese Opera Video Clip (COVC) dataset and propose the Mamba-based multiscale fusion network for space-time Opera Video Super-Resolution (MambaOVSR). Specifically, MambaOVSR involves three novel components: the Global Fusion Module (GFM) for motion modeling through a multiscale alternating scanning mechanism, the Multiscale Synergistic Mamba Module (MSMM) for alignment across different sequence lengths, and the MambaVR block, which resolves feature artifacts and positional information loss during alignment. Experimental results on the COVC dataset show that MambaOVSR significantly outperforms the SOTA STVSR method by an average of 1.86 dB in terms of PSNR. The dataset and code will be publicly released.

Texture-aware Intrinsic Image Decomposition with Model- and Learning-based Priors

Sep 11, 2025

Abstract: This paper aims to recover the intrinsic reflectance layer and shading layer from a single image. Though this intrinsic image decomposition problem has been studied for decades, it remains a significant challenge for complex scenes, i.e., those with spatially varying lighting effects and rich textures. In this paper, we propose a novel method for handling severe lighting and rich textures in intrinsic image decomposition, which enables the production of high-quality intrinsic images for real-world images. Specifically, we observe that previous learning-based methods tend to produce texture-less and over-smoothed intrinsic images, which can be used to infer the lighting and texture information given an RGB image. Accordingly, we design a texture-guided regularization term and formulate the decomposition problem as an optimization framework to separate the material textures from the lighting effects. We demonstrate that incorporating the novel texture-aware prior produces results superior to existing approaches.

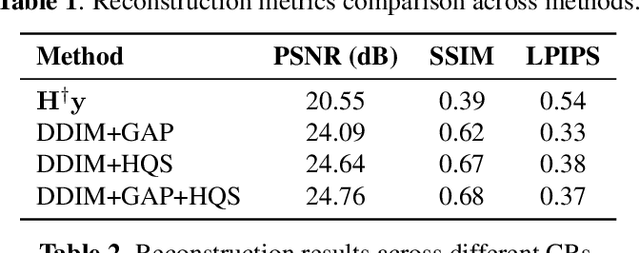

Plug-and-play Diffusion Models for Image Compressive Sensing with Data Consistency Projection

Sep 11, 2025

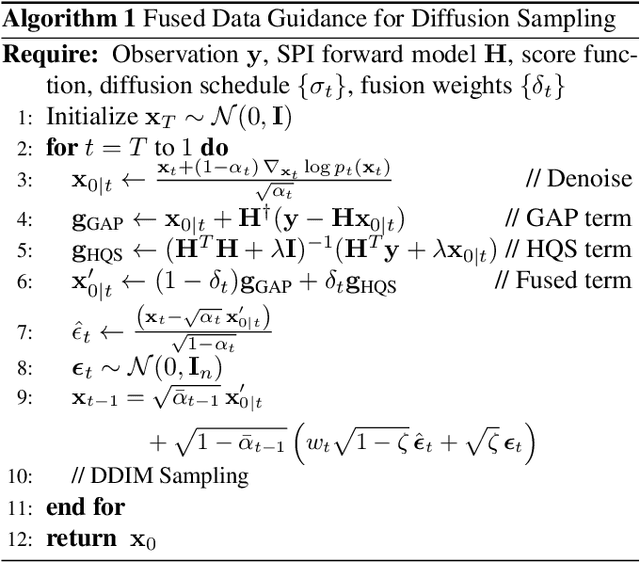

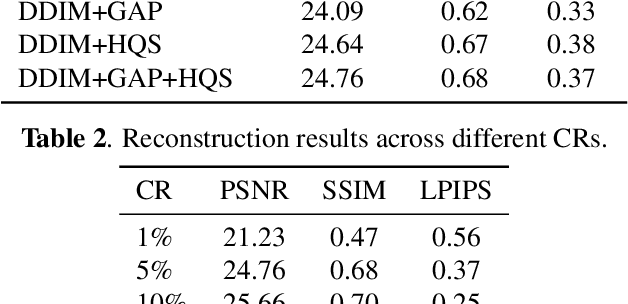

Abstract: We explore the connection between Plug-and-Play (PnP) methods and Denoising Diffusion Implicit Models (DDIM) for solving ill-posed inverse problems, with a focus on single-pixel imaging. We begin by identifying key distinctions between PnP and diffusion models, particularly in their denoising mechanisms and sampling procedures. By decoupling the diffusion process into three interpretable stages (denoising, data consistency enforcement, and sampling), we provide a unified framework that integrates learned priors with physical forward models in a principled manner. Building upon this insight, we propose a hybrid data-consistency module that linearly combines multiple PnP-style fidelity terms. This hybrid correction is applied directly to the denoised estimate, improving measurement consistency without disrupting the diffusion sampling trajectory. Experimental results on single-pixel imaging tasks demonstrate that our method achieves better reconstruction quality.
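The three-stage view can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the paper's module: the forward matrix `A` is random, `predict_noise` is a placeholder for a trained network, the noise schedule is arbitrary, and the two fidelity terms (a gradient step and a pseudo-inverse projection) with a fixed `weight` are one plausible choice of "PnP-style" corrections.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m = 16, 8                                  # signal size, number of measurements
A = rng.standard_normal((m, n))               # stand-in forward model (assumption)
A_pinv = np.linalg.pinv(A)
x_true = rng.random(n)
y = A @ x_true                                # noiseless measurements

def predict_noise(x_t, t):
    """Placeholder for a trained noise-prediction network (hypothetical)."""
    return np.zeros_like(x_t)

def hybrid_data_consistency(x0, weight=0.5):
    """Linearly combine two fidelity corrections of the denoised estimate."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2    # 1 / (largest singular value)^2
    x_grad = x0 - step * A.T @ (A @ x0 - y)   # gradient step on 0.5 * ||Ax - y||^2
    x_proj = x0 + A_pinv @ (y - A @ x0)       # projection onto {x : Ax = y}
    return weight * x_proj + (1.0 - weight) * x_grad

def ddim_step(x_t, t, alpha_bar_t, alpha_bar_prev):
    eps = predict_noise(x_t, t)
    # Stage 1: denoising, i.e. predict the clean signal from x_t.
    x0 = (x_t - np.sqrt(1.0 - alpha_bar_t) * eps) / np.sqrt(alpha_bar_t)
    # Stage 2: data consistency, applied to the denoised estimate only.
    x0 = hybrid_data_consistency(x0)
    # Stage 3: deterministic DDIM sampling (eta = 0) to the next noise level.
    x_prev = np.sqrt(alpha_bar_prev) * x0 + np.sqrt(1.0 - alpha_bar_prev) * eps
    return x_prev, x0

alpha_bars = np.linspace(0.05, 0.999, 20)     # illustrative schedule, noisy to clean
x = rng.standard_normal(n)
for i in range(len(alpha_bars) - 1):
    x, x0_hat = ddim_step(x, i, alpha_bars[i], alpha_bars[i + 1])
```

Because the correction touches only the denoised estimate, the sampling formula in stage 3 is left unchanged, which is the property the abstract emphasizes.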

Sparse Transformer for Ultra-sparse Sampled Video Compressive Sensing

Sep 10, 2025

Abstract: Digital cameras consume ~0.1 microjoule per pixel to capture and encode video, resulting in a power usage of ~20W for a 4K sensor operating at 30 fps. For gigapixel cameras operating at 100-1000 fps, the current processing model is unsustainable. To address this, physical layer compressive measurement has been proposed to reduce power consumption per pixel by 10-100X. Video Snapshot Compressive Imaging (SCI) introduces high-frequency modulation in the optical sensor layer to increase the effective frame rate. A commonly used sampling strategy in video SCI is Random Sampling (RS), where each mask element value is randomly set to 0 or 1. Similarly, image inpainting (I2P) has demonstrated that images can be recovered from a fraction of the image pixels. Inspired by I2P, we propose an Ultra-Sparse Sampling (USS) regime in which, at each spatial location, only one sub-frame is set to 1 and all others are set to 0. We then build a Digital Micro-mirror Device (DMD) encoding system to verify the effectiveness of our USS strategy. Ideally, we can decompose the USS measurement into sub-measurements, to which I2P algorithms can be applied to recover high-speed frames. However, due to the mismatch between the DMD and CCD, the USS measurement cannot be perfectly decomposed. To this end, we propose BSTFormer, a sparse TransFormer that utilizes local Block attention, global Sparse attention, and global Temporal attention to exploit the sparsity of the USS measurement. Extensive results on both simulated and real-world data show that our method significantly outperforms all previous state-of-the-art algorithms. Additionally, an essential advantage of the USS strategy is its higher dynamic range compared with the RS strategy. Finally, from the application perspective, the USS strategy is a good choice for implementing a complete video SCI system on chip due to its fixed exposure time.
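The power figure in the abstract can be reproduced with a back-of-the-envelope calculation. The sketch below assumes a 3840 x 2160 (4K UHD) sensor, which the abstract does not specify; the result lands at about 25 W, the same order as the quoted ~20W.

```python
ENERGY_PER_PIXEL_J = 0.1e-6        # ~0.1 microjoule per pixel (from the abstract)
WIDTH, HEIGHT = 3840, 2160         # assumed 4K UHD resolution
FPS = 30

pixels_per_second = WIDTH * HEIGHT * FPS
power_watts = ENERGY_PER_PIXEL_J * pixels_per_second

# Power after the 10x and 100x per-pixel reductions mentioned in the abstract.
reduced_watts = [power_watts / factor for factor in (10, 100)]

print(f"baseline: {power_watts:.1f} W")
print("with compressive measurement:", [f"{p:.2f} W" for p in reduced_watts])
```

The same arithmetic at one gigapixel and 100 fps gives roughly 10 kW, which is why the abstract calls the current processing model unsustainable.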

zkUnlearner: A Zero-Knowledge Framework for Verifiable Unlearning with Multi-Granularity and Forgery-Resistance

Sep 08, 2025

Abstract: As the demand for exercising the "right to be forgotten" grows, the need for verifiable machine unlearning has become increasingly evident to ensure both transparency and accountability. We present zkUnlearner, the first zero-knowledge framework for verifiable machine unlearning, specifically designed to support multi-granularity and forgery-resistance. First, we propose a general computational model that employs a bit-masking technique to enable the selectivity of existing zero-knowledge proofs of training for gradient descent algorithms. This innovation enables not only traditional sample-level unlearning but also more advanced feature-level and class-level unlearning. Our model can be translated to arithmetic circuits, ensuring compatibility with a broad range of zero-knowledge proof systems. Furthermore, our approach overcomes key limitations of existing methods in both efficiency and privacy. Second, forging attacks present a serious threat to the reliability of unlearning. Specifically, in Stochastic Gradient Descent optimization, gradients from unlearned data, or from minibatches containing it, can be forged using alternative data samples or minibatches that exclude it. We propose the first effective strategies to resist state-of-the-art forging attacks. Finally, we benchmark a zkSNARK-based instantiation of our framework and perform comprehensive performance evaluations to validate its practicality.
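The bit-masking idea can be illustrated outside any proof system. The sketch below is plain NumPy on a linear least-squares model, not an arithmetic circuit and not the zkUnlearner construction; the function `masked_gradient` and the mask layout are assumptions that only show how 0/1 masks can select samples, features, or whole classes inside one gradient formula.

```python
import numpy as np

rng = np.random.default_rng(0)
n_samples, n_features = 8, 3
X = rng.standard_normal((n_samples, n_features))
y = rng.standard_normal(n_samples)
labels = np.array([0, 1, 0, 1, 0, 1, 0, 1])    # class ids used for class-level masking
w = rng.standard_normal(n_features)

def masked_gradient(X, y, w, sample_mask, feature_mask):
    """Least-squares gradient in which 0 bits drop samples or features."""
    Xm = X * feature_mask                       # zero out unlearned features
    residual = (Xm @ w - y) * sample_mask       # zero out unlearned samples
    return Xm.T @ residual / sample_mask.sum()

all_samples = np.ones(n_samples)
all_features = np.ones(n_features)

# Sample-level: forget sample 2.
sample_mask = all_samples.copy()
sample_mask[2] = 0
g_sample = masked_gradient(X, y, w, sample_mask, all_features)

# Feature-level: forget feature 1 for every sample.
feature_mask = all_features.copy()
feature_mask[1] = 0
g_feature = masked_gradient(X, y, w, all_samples, feature_mask)

# Class-level: forget every sample of class 1.
class_mask = (labels != 1).astype(float)
g_class = masked_gradient(X, y, w, class_mask, all_features)
```

The masked gradient equals the gradient computed on the retained data alone, while the computation itself keeps a fixed shape, which is convenient when the same formula has to be expressed as a fixed circuit.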

Unfolding Framework with Complex-Valued Deformable Attention for High-Quality Computer-Generated Hologram Generation

Aug 29, 2025

Abstract: Computer-generated holography (CGH) has gained wide attention with deep learning-based algorithms. However, due to its nonlinear and ill-posed nature, challenges remain in achieving accurate and stable reconstruction. Specifically, (i) the widely used end-to-end networks treat the reconstruction model as a black box, ignoring underlying physical relationships, which reduces interpretability and flexibility. (ii) CNN-based CGH algorithms have limited receptive fields, hindering their ability to capture long-range dependencies and global context. (iii) Angular spectrum method (ASM)-based models are constrained to finite near-fields. In this paper, we propose a Deep Unfolding Network (DUN) that decomposes gradient descent into two modules: an adaptive bandwidth-preserving model (ABPM) and a phase-domain complex-valued denoiser (PCD), providing more flexibility. ABPM allows for wider working distances compared to ASM-based methods. At the same time, PCD leverages its complex-valued deformable self-attention module to capture global features and enhance performance, achieving a PSNR over 35 dB. Experiments on simulated and real data show state-of-the-art results.
