Jianping An

Sherman

Dual-View Predictive Diffusion: Lightweight Speech Enhancement via Spectrogram-Image Synergy

Jan 31, 2026

Abstract:Diffusion models have recently set new benchmarks in Speech Enhancement (SE). However, most existing score-based models treat speech spectrograms merely as generic 2D images, applying uniform processing that ignores the intrinsic structural sparsity of audio, which results in inefficient spectral representation and prohibitive computational complexity. To bridge this gap, we propose DVPD, an extremely lightweight Dual-View Predictive Diffusion model, which uniquely exploits the dual nature of spectrograms as both visual textures and physical frequency-domain representations across both training and inference stages. Specifically, during training, we optimize spectral utilization via the Frequency-Adaptive Non-uniform Compression (FANC) encoder, which preserves critical low-frequency harmonics while pruning high-frequency redundancies. Simultaneously, we introduce a Lightweight Image-based Spectro-Awareness (LISA) module to capture features from a visual perspective with minimal overhead. During inference, we propose a Training-free Lossless Boost (TLB) strategy that leverages the same dual-view priors to refine generation quality without any additional fine-tuning. Extensive experiments across various benchmarks demonstrate that DVPD achieves state-of-the-art performance while requiring only 35% of the parameters and 40% of the inference MACs of the SOTA lightweight model, PGUSE. These results highlight DVPD's superior ability to balance high-fidelity speech quality with extreme architectural efficiency. Code and audio samples are available at the anonymous website: https://anonymous.4open.science/r/dvpd_demo-E630

Blind Channel Estimation for RIS-Assisted Millimeter Wave Communication Systems

Aug 25, 2025

Abstract:In research on RIS-assisted communication systems, channel estimation is vital for further performance optimization. To minimize the pilot overhead, blind channel estimation methods are required, which estimate the channel and the transmit signals simultaneously without transmitting pilot sequences. Unlike existing research on traditional MIMO systems, the RIS-assisted two-hop channel brings new challenges to blind channel estimation design. Hence, this paper proposes, for the first time, a novel blind channel estimation method based on compressed sensing for RIS-assisted multiuser millimeter wave communication systems. Specifically, to accurately estimate the RIS-assisted two-hop channel without transmitting pilots, we propose a block-wise transmission scheme. Between blocks of data transmission, the RIS elements are reconfigured to better estimate the cascaded channel. Within each block, the data for each user are mapped to a codeword so that transmit signal recovery and equivalent channel estimation are realized simultaneously. Simulation results demonstrate that our method achieves considerable accuracy in channel estimation and transmit signal recovery.

DualStream Contextual Fusion Network: Efficient Target Speaker Extraction by Leveraging Mixture and Enrollment Interactions

Feb 12, 2025

Abstract:Target speaker extraction focuses on extracting a target speech signal from an environment with multiple speakers by leveraging an enrollment utterance. Existing methods predominantly rely on speaker embeddings obtained from the enrollment, potentially disregarding the contextual information and the internal interactions between the mixture and the enrollment. In this paper, we propose a novel DualStream Contextual Fusion Network (DCF-Net) in the time-frequency (T-F) domain. Specifically, a DualStream Fusion Block (DSFB) is introduced to obtain contextual information and capture the interactions between the contextualized enrollment and the mixture representation across both spatial and channel dimensions; the resulting rich and consistent representations then guide the extraction network. Experimental results demonstrate that DCF-Net outperforms state-of-the-art (SOTA) methods, achieving a scale-invariant signal-to-distortion ratio improvement (SI-SDRi) of 21.6 dB on the benchmark dataset, and that it is robust and effective in both noisy and reverberant scenarios. In addition, the rate of wrong extractions by our model, known as the target confusion problem, is reduced to 0.4%, which highlights the potential of DCF-Net for practical applications.
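For readers unfamiliar with the SI-SDRi metric quoted above, the following is a minimal sketch of how it is commonly computed. It uses the standard definition of SI-SDR and is not taken from the DCF-Net code; the function names `si_sdr` and `si_sdr_improvement` and the `eps` stabilizer are illustrative assumptions.

```python
import numpy as np


def si_sdr(estimate, reference, eps=1e-8):
    """Scale-invariant signal-to-distortion ratio in dB (standard definition)."""
    # Remove the mean so the metric ignores DC offsets.
    estimate = estimate - estimate.mean()
    reference = reference - reference.mean()
    # Project the estimate onto the reference to find the optimally scaled target.
    scale = np.dot(estimate, reference) / (np.dot(reference, reference) + eps)
    target = scale * reference
    distortion = estimate - target
    ratio = (np.dot(target, target) + eps) / (np.dot(distortion, distortion) + eps)
    return 10.0 * np.log10(ratio)


def si_sdr_improvement(estimate, mixture, reference):
    """SI-SDRi: gain of the extracted signal over the unprocessed mixture."""
    return si_sdr(estimate, reference) - si_sdr(mixture, reference)


if __name__ == "__main__":
    # Synthetic example (assumption): white-noise "speech" plus an interferer.
    rng = np.random.default_rng(0)
    reference = rng.standard_normal(16000)
    interferer = rng.standard_normal(16000)
    mixture = reference + interferer
    estimate = reference + 0.1 * interferer
    print(f"SI-SDRi: {si_sdr_improvement(estimate, mixture, reference):.1f} dB")
```

Because the target is rescaled by projection, multiplying the estimate by any nonzero constant leaves the score unchanged, which is what "scale-invariant" refers to.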

Massive Unsourced Random Access for Near-Field Communications

Jan 25, 2024

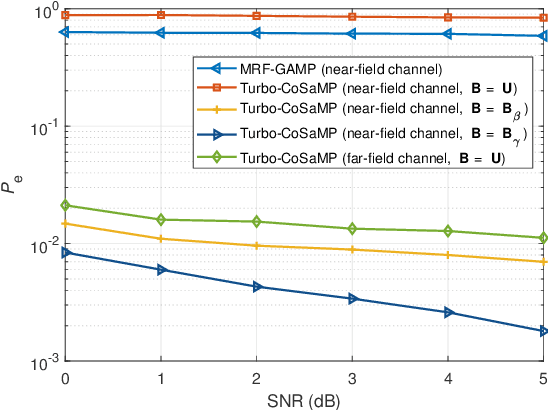

Abstract:This paper investigates the unsourced random access (URA) problem with a massive multiple-input multiple-output receiver that serves wireless devices in the near-field of radiation. We employ an uncoupled transmission protocol without appending redundancies to the slot-wise encoded messages. To exploit the channel sparsity for block length reduction while facing the collapsed sparse structure in the angular domain of near-field channels, we propose a sparse channel sampling method that divides the angle-distance (polar) domain based on the maximum permissible coherence. Decoding starts with retrieving active codewords and channels from each slot. We address the issue by leveraging the structured channel sparsity in the spatial and polar domains and propose a novel turbo-based recovery algorithm. Furthermore, we investigate an off-grid compressed sensing method to refine discretely estimated channel parameters over the continuum that improves the detection performance. Afterward, without the assistance of redundancies, we recouple the separated messages according to the similarity of the users' channel information and propose a modified K-medoids method to handle the constraints and collisions involved in channel clustering. Simulations reveal that via exploiting the channel sparsity, the proposed URA scheme achieves high spectral efficiency and surpasses existing multi-slot-based schemes. Moreover, with more measurements provided by the overcomplete channel sampling, the near-field-suited scheme outperforms its counterpart of the far-field.

AI Generated Signal for Wireless Sensing

Dec 22, 2023

Abstract:Deep learning has significantly advanced wireless sensing technology by leveraging substantial amounts of high-quality training data. However, collecting wireless sensing data encounters diverse challenges, including unavoidable data noise, limited data scale due to significant collection overhead, and the necessity to reacquire data in new environments. Taking inspiration from the achievements of AI-generated content, this paper introduces a signal generation method that achieves data denoising, augmentation, and synthesis by disentangling distinct attributes within the signal, such as individual and environment. The approach encompasses two pivotal modules: structured signal selection and signal disentanglement generation. Structured signal selection establishes a minimal signal set with the target attributes for subsequent attribute disentanglement. Signal disentanglement generation disentangles the target attributes and reassembles them to generate novel signals. Extensive experimental results demonstrate that the proposed method can generate data that closely resembles real-world data on two wireless sensing datasets, exhibiting state-of-the-art performance. Our approach presents a robust framework for comprehending and manipulating attribute-specific information in wireless sensing.

Multiple Satellites Collaboration for Joint Code-aided CFOs and CPOs Estimation

Sep 25, 2023

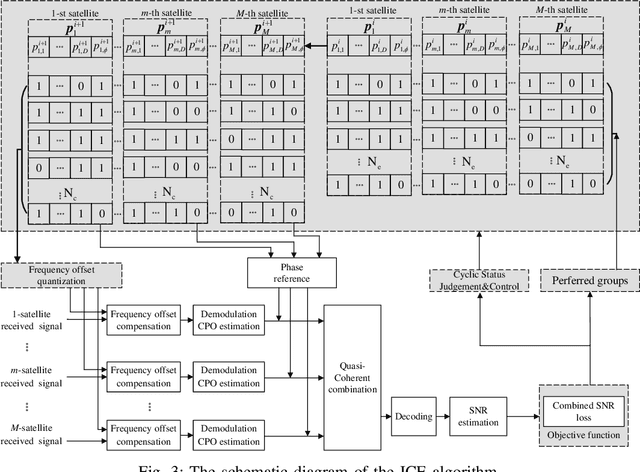

Abstract:Low Earth Orbit (LEO) satellites are being extensively researched in the development of the secure Internet of Remote Things (IoRT). In scenarios with miniaturized terminals, the limited transmission power and long transmission distance often lead to a low Signal-to-Noise Ratio (SNR) at the satellite receiver, which degrades communication performance. One solution is to use cooperative satellites, which combine the signals received at multiple satellites and thereby significantly improve the SNR. However, to maximize the combining gain, coherent signal combining is necessary, which requires the carrier frequency and phase of each received signal to be aligned. Under low-SNR conditions, carrier parameter estimation can be a significant challenge, especially for short burst transmissions with no training sequence. To tackle this, we propose an iterative code-aided algorithm for joint estimation of the Carrier Frequency Offset (CFO) and Carrier Phase Offset (CPO). The Cramér-Rao Lower Bound (CRLB) is suggested as the limit on the parameter estimation performance. Simulation results demonstrate that, with four-satellite collaboration, the proposed algorithm approaches the Bit Error Rate (BER) performance bound to within 0.4 dB.
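To illustrate why coherent combining needs the carrier frequency and phase of each branch to be aligned, here is a minimal sketch. It assumes the per-satellite CFO and CPO values are already known (in the paper they would come from the estimator, which is not reproduced here); the function name `coherent_combine` and all numeric values are hypothetical.

```python
import numpy as np


def coherent_combine(received, cfos_hz, cpos_rad, sample_rate_hz):
    """Remove each branch's CFO and CPO, then sum the aligned branches.

    received: complex array of shape (num_satellites, num_samples).
    cfos_hz, cpos_rad: per-satellite offsets, assumed known for this sketch.
    """
    received = np.asarray(received)
    t = np.arange(received.shape[1]) / sample_rate_hz
    # Phase rotation seen by each branch: 2*pi*f*t + phi.
    phase = 2.0 * np.pi * np.outer(cfos_hz, t) + np.asarray(cpos_rad)[:, None]
    aligned = received * np.exp(-1j * phase)
    return aligned.sum(axis=0)


if __name__ == "__main__":
    fs = 1.0e4
    symbols = np.exp(1j * np.pi / 4 * np.array([1, 3, 5, 7, 1, 3]))  # QPSK points
    cfos = np.array([50.0, -120.0, 10.0, 300.0])
    cpos = np.array([0.3, -1.0, 2.0, 0.7])
    t = np.arange(symbols.size) / fs
    rotation = np.exp(1j * (2.0 * np.pi * np.outer(cfos, t) + cpos[:, None]))
    received = symbols[None, :] * rotation  # noiseless, for clarity

    combined = coherent_combine(received, cfos, cpos, fs)
    print(np.abs(combined))              # amplitude adds up across branches
    print(np.abs(received.sum(axis=0)))  # unaligned sum is smaller
```

In the noiseless case the aligned sum has four times the single-branch amplitude, whereas the unaligned sum partially cancels; with independent noise per branch this amplitude gain is what translates into the SNR improvement.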

DeViT: Decomposing Vision Transformers for Collaborative Inference in Edge Devices

Sep 10, 2023

Abstract:Recent years have witnessed the great success of the vision transformer (ViT), which has achieved state-of-the-art performance on multiple computer vision benchmarks. However, ViT models have vast numbers of parameters and a high computation cost, which makes them difficult to deploy on resource-constrained edge devices. Existing solutions mostly compress ViT models into a compact model but still cannot achieve real-time inference. To tackle this issue, we propose to explore the divisibility of the transformer structure and decompose the large ViT into multiple small models for collaborative inference on edge devices. Our objective is to achieve fast and energy-efficient collaborative inference while maintaining accuracy comparable to that of large ViTs. To this end, we first propose a collaborative inference framework termed DeViT to facilitate edge deployment by decomposing large ViTs. Subsequently, we design a decomposition-and-ensemble algorithm based on knowledge distillation, termed DEKD, to fuse multiple small decomposed models while dramatically reducing communication overheads, and we handle heterogeneous models by developing a feature matching module that helps the decomposed models imitate the large ViT. Extensive experiments with three representative ViT backbones on four widely used datasets demonstrate that our method achieves efficient collaborative inference for ViTs and outperforms existing lightweight ViTs, striking a good trade-off between efficiency and accuracy. For example, our DeViTs improve end-to-end latency by 2.89× with only a 1.65% accuracy sacrifice on CIFAR-100 compared to the large ViT, ViT-L/16, on the GPU server. DeDeiTs surpass the recent efficient ViT, MobileViT-S, by 3.54% in accuracy on ImageNet-1K, while running 1.72× faster and requiring 55.28% lower energy consumption on the edge device.

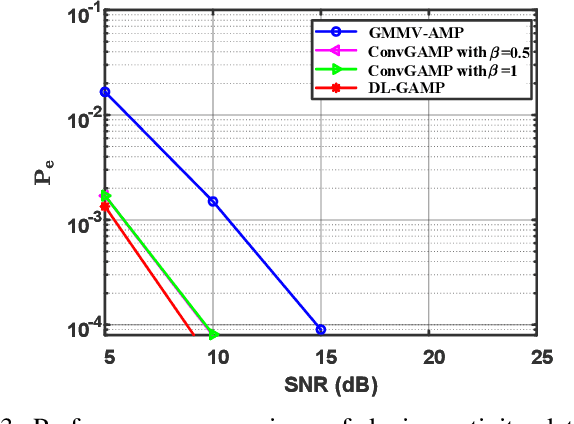

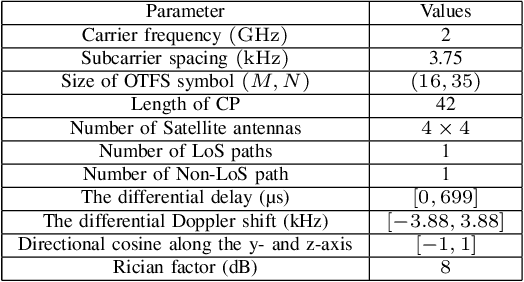

LEO Satellite-Enabled Grant-Free Random Access with MIMO-OTFS

Aug 03, 2022

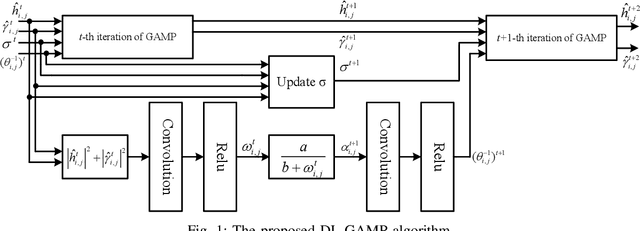

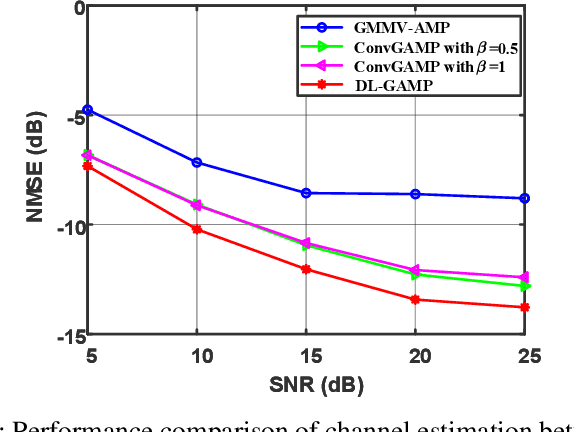

Abstract:This paper investigates joint channel estimation and device activity detection in LEO satellite-enabled grant-free random access systems with large differential delay and Doppler shift. In addition, multiple-input multiple-output (MIMO) with orthogonal time-frequency space (OTFS) modulation is utilized to combat the dynamics of the terrestrial-satellite link. To simplify the computation, we estimate the channel tensor in parallel along the delay dimension. Then, deep learning and expectation-maximization approaches are integrated into generalized approximate message passing with a cross-correlation-based Gaussian prior to capture the channel sparsity in the delay-Doppler-angle domain and learn the hyperparameters. Finally, active devices are detected by computing the energy of the estimated channel. Simulation results demonstrate that the proposed algorithms outperform conventional methods.
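The final detection step, declaring a device active when the energy of its estimated channel is large, can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not give the decision rule, so the fixed `threshold`, the array layout, and the function name `detect_active_devices` are all hypothetical.

```python
import numpy as np


def detect_active_devices(channel_estimates, threshold):
    """Flag devices whose estimated channel energy exceeds a threshold.

    channel_estimates: complex array of shape (num_devices, num_coefficients),
        one row per candidate device (layout assumed for this sketch).
    threshold: hypothetical energy threshold; the paper's rule is not stated.
    """
    # Energy of each device's channel estimate: sum of squared magnitudes.
    energy = np.sum(np.abs(channel_estimates) ** 2, axis=1)
    return energy > threshold, energy


if __name__ == "__main__":
    estimates = np.zeros((4, 8), dtype=complex)
    estimates[1] = 1.0 + 1.0j   # strong channel: device likely active
    estimates[3] = 0.5          # weaker but non-negligible channel
    active, energy = detect_active_devices(estimates, threshold=1.0)
    print(active, energy)
```

Inactive devices contribute (ideally) all-zero channel estimates, so their energy stays near the noise floor and falls below the threshold.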

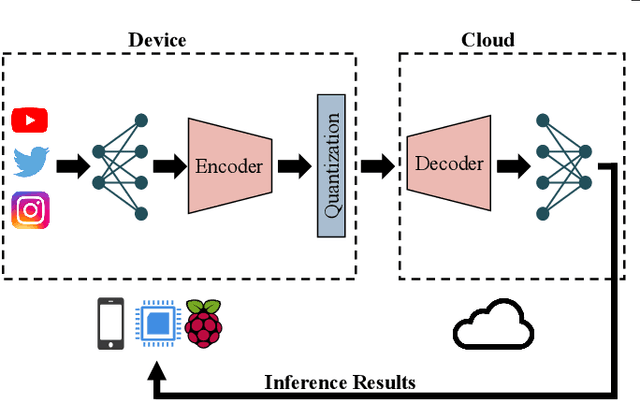

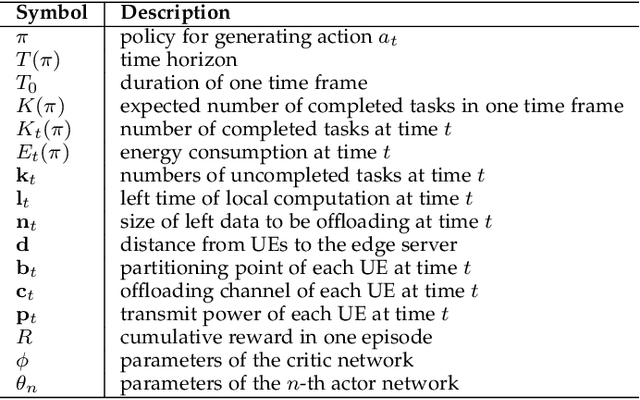

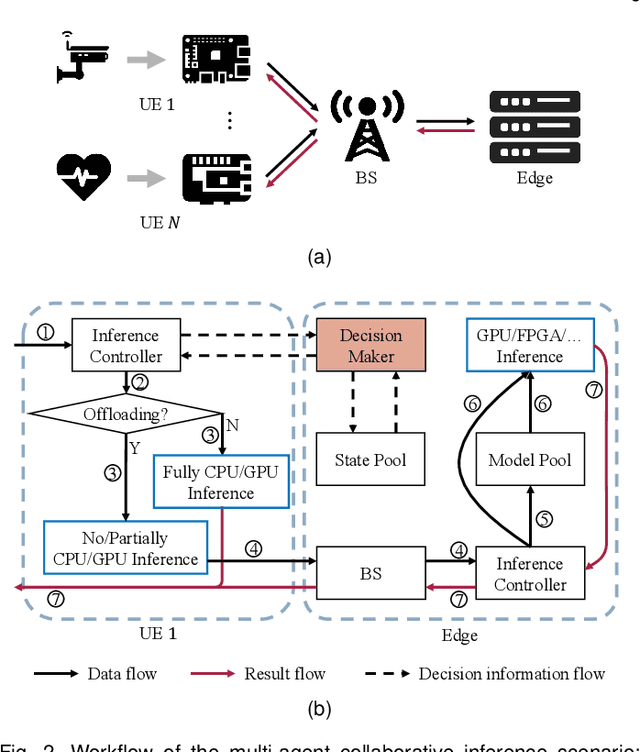

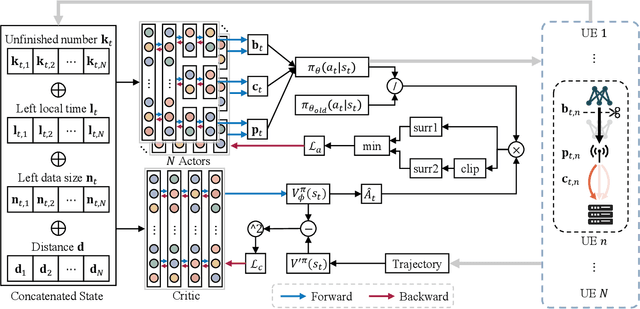

Multi-Agent Collaborative Inference via DNN Decoupling: Intermediate Feature Compression and Edge Learning

May 24, 2022

Abstract:Recently, deploying deep neural network (DNN) models via collaborative inference, which splits a pre-trained model into two parts and executes them on the user equipment (UE) and the edge server respectively, has become attractive. However, the large intermediate feature of a DNN impedes flexible decoupling, and existing approaches either focus on the single-UE scenario or simply define tasks in terms of the required CPU cycles while ignoring the indivisibility of a single DNN layer. In this paper, we study the multi-agent collaborative inference scenario, where a single edge server coordinates the inference of multiple UEs. Our goal is to achieve fast and energy-efficient inference for all UEs. To achieve this goal, we first design a lightweight autoencoder-based method to compress the large intermediate feature. Then we define tasks according to the inference overhead of DNNs and formulate the problem as a Markov decision process (MDP). Finally, we propose a multi-agent hybrid proximal policy optimization (MAHPPO) algorithm to solve the optimization problem with a hybrid action space. We conduct extensive experiments with different types of networks, and the results show that our method can reduce inference latency by up to 56% and save up to 72% of energy consumption.

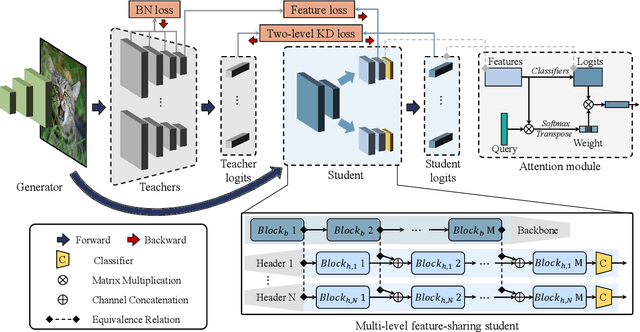

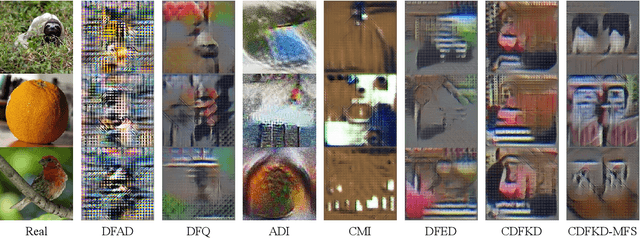

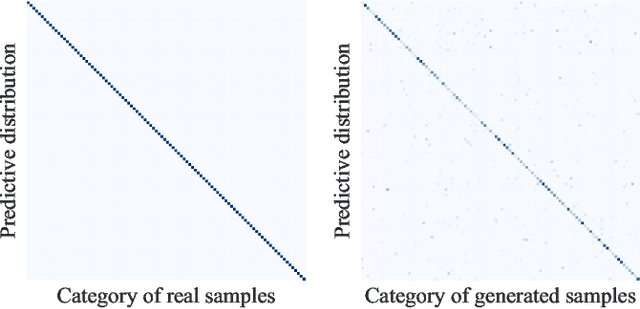

CDFKD-MFS: Collaborative Data-free Knowledge Distillation via Multi-level Feature Sharing

May 24, 2022

Abstract:Recently, the compression and deployment of powerful deep neural networks (DNNs) on resource-limited edge devices to provide intelligent services have become attractive tasks. Although knowledge distillation (KD) is a feasible solution for compression, its requirement for the original dataset raises privacy concerns. In addition, it is common to integrate multiple pretrained models to achieve satisfactory performance. Compressing multiple models into a tiny model is challenging, especially when the original data are unavailable. To tackle this challenge, we propose a framework termed collaborative data-free knowledge distillation via multi-level feature sharing (CDFKD-MFS), which consists of a multi-header student module, an asymmetric adversarial data-free KD module, and an attention-based aggregation module. In this framework, the student model, equipped with a multi-level feature-sharing structure, learns from multiple teacher models and is trained together with a generator in an asymmetric adversarial manner. When some real samples are available, the attention module adaptively aggregates the predictions of the student headers, which can further improve performance. We conduct extensive experiments on three popular computer vision datasets. In particular, compared with the most competitive alternative, the accuracy of the proposed framework is 1.18% higher on the CIFAR-100 dataset, 1.67% higher on the Caltech-101 dataset, and 2.99% higher on the mini-ImageNet dataset.
