Jian Yu

LingLanMiDian: Systematic Evaluation of LLMs on TCM Knowledge and Clinical Reasoning

Feb 02, 2026

Abstract:Large language models (LLMs) are advancing rapidly in medical NLP, yet Traditional Chinese Medicine (TCM) with its distinctive ontology, terminology, and reasoning patterns requires domain-faithful evaluation. Existing TCM benchmarks are fragmented in coverage and scale and rely on non-unified or generation-heavy scoring that hinders fair comparison. We present the LingLanMiDian (LingLan) benchmark, a large-scale, expert-curated, multi-task suite that unifies evaluation across knowledge recall, multi-hop reasoning, information extraction, and real-world clinical decision-making. LingLan introduces a consistent metric design, a synonym-tolerant protocol for clinical labels, a per-dataset 400-item Hard subset, and a reframing of diagnosis and treatment recommendation into single-choice decision recognition. We conduct comprehensive, zero-shot evaluations on 14 leading open-source and proprietary LLMs, providing a unified perspective on their strengths and limitations in TCM commonsense knowledge understanding, reasoning, and clinical decision support; critically, the evaluation on the Hard subset reveals a substantial gap between current models and human experts in TCM-specialized reasoning. By bridging fundamental knowledge and applied reasoning through standardized evaluation, LingLan establishes a unified, quantitative, and extensible foundation for advancing TCM LLMs and domain-specific medical AI research. All evaluation data and code are available at https://github.com/TCMAI-BJTU/LingLan and http://tcmnlp.com.

Seedance 1.5 pro: A Native Audio-Visual Joint Generation Foundation Model

Dec 23, 2025

Abstract:Recent strides in video generation have paved the way for unified audio-visual generation. In this work, we present Seedance 1.5 pro, a foundational model engineered specifically for native, joint audio-video generation. Leveraging a dual-branch Diffusion Transformer architecture, the model integrates a cross-modal joint module with a specialized multi-stage data pipeline, achieving exceptional audio-visual synchronization and superior generation quality. To ensure practical utility, we implement meticulous post-training optimizations, including Supervised Fine-Tuning (SFT) on high-quality datasets and Reinforcement Learning from Human Feedback (RLHF) with multi-dimensional reward models. Furthermore, we introduce an acceleration framework that boosts inference speed by over 10X. Seedance 1.5 pro distinguishes itself through precise multilingual and dialect lip-syncing, dynamic cinematic camera control, and enhanced narrative coherence, positioning it as a robust engine for professional-grade content creation. Seedance 1.5 pro is now accessible on Volcano Engine at https://console.volcengine.com/ark/region:ark+cn-beijing/experience/vision?type=GenVideo.

Reframe Your Life Story: Interactive Narrative Therapist and Innovative Moment Assessment with Large Language Models

Jul 27, 2025

Abstract:Recent progress in large language models (LLMs) has opened new possibilities for mental health support, yet current approaches lack realism in simulating specialized psychotherapy and fail to capture therapeutic progression over time. Narrative therapy, which helps individuals transform problematic life stories into empowering alternatives, remains underutilized due to limited access and social stigma. We address these limitations through a comprehensive framework with two core components. First, INT (Interactive Narrative Therapist) simulates expert narrative therapists by planning therapeutic stages, guiding reflection levels, and generating contextually appropriate expert-like responses. Second, IMA (Innovative Moment Assessment) provides a therapy-centric evaluation method that quantifies effectiveness by tracking "Innovative Moments" (IMs), critical narrative shifts in client speech signaling therapy progress. Experimental results on 260 simulated clients and 230 human participants reveal that INT consistently outperforms standard LLMs in therapeutic quality and depth. We further demonstrate the effectiveness of INT in synthesizing high-quality support conversations to facilitate social applications.

IMAGGarment-1: Fine-Grained Garment Generation for Controllable Fashion Design

Apr 17, 2025

Abstract:This paper presents IMAGGarment-1, a fine-grained garment generation (FGG) framework that enables high-fidelity garment synthesis with precise control over silhouette, color, and logo placement. Unlike existing methods that are limited to single-condition inputs, IMAGGarment-1 addresses the challenges of multi-conditional controllability in personalized fashion design and digital apparel applications. Specifically, IMAGGarment-1 employs a two-stage training strategy to separately model global appearance and local details, while enabling unified and controllable generation through end-to-end inference. In the first stage, we propose a global appearance model that jointly encodes silhouette and color using a mixed attention module and a color adapter. In the second stage, we present a local enhancement model with an adaptive appearance-aware module to inject user-defined logos and spatial constraints, enabling accurate placement and visual consistency. To support this task, we release GarmentBench, a large-scale dataset comprising over 180K garment samples paired with multi-level design conditions, including sketches, color references, logo placements, and textual prompts. Extensive experiments demonstrate that our method outperforms existing baselines, achieving superior structural stability, color fidelity, and local controllability performance. The code and model are available at https://github.com/muzishen/IMAGGarment-1.

ClinicalBench: Can LLMs Beat Traditional ML Models in Clinical Prediction?

Nov 10, 2024

Abstract:Large Language Models (LLMs) hold great promise to revolutionize current clinical systems for their superior capacities on medical text processing tasks and medical licensing exams. Meanwhile, traditional ML models such as SVM and XGBoost are still the ones mainly adopted in clinical prediction tasks. An emerging question is: can LLMs beat traditional ML models in clinical prediction? Thus, we build a new benchmark, ClinicalBench, to comprehensively study the clinical predictive modeling capacities of both general-purpose and medical LLMs, and compare them with traditional ML models. ClinicalBench embraces three common clinical prediction tasks, two databases, 14 general-purpose LLMs, 8 medical LLMs, and 11 traditional ML models. Through extensive empirical investigation, we discover that both general-purpose and medical LLMs, even with different model scales and diverse prompting or fine-tuning strategies, still cannot beat traditional ML models in clinical prediction, shedding light on their potential deficiency in clinical reasoning and decision-making. We call for caution when practitioners adopt LLMs in clinical applications. ClinicalBench can be utilized to bridge the gap between LLMs' development for healthcare and real-world clinical practice.

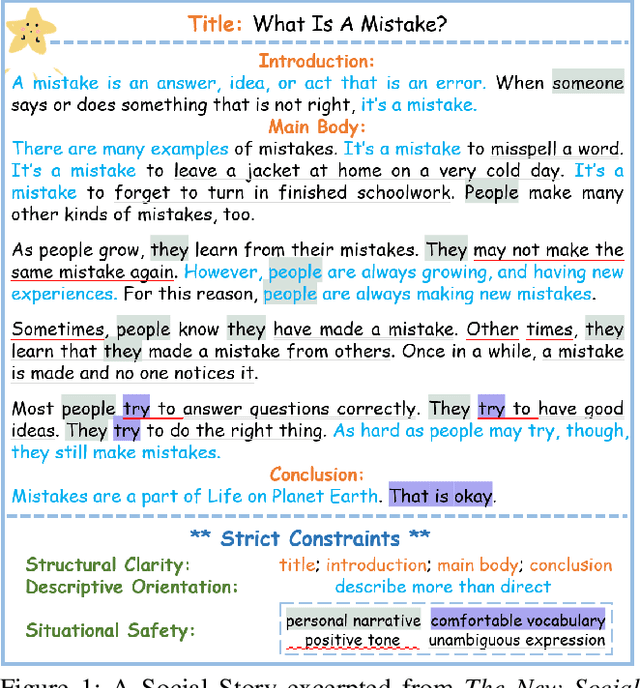

SS-Bench: A Benchmark for Social Story Generation and Evaluation

Jun 22, 2024

Abstract:Children with Autism Spectrum Disorder (ASD) often misunderstand social situations and struggle to participate in daily routines. Psychology experts write Social Stories under strict constraints of structural clarity, descriptive orientation, and situational safety to enhance their abilities in these regimes. However, Social Stories are costly in creation and often limited in diversity and timeliness. As Large Language Models (LLMs) become increasingly powerful, there is a growing need for more automated, affordable, and accessible methods to generate Social Stories in real-time with broad coverage. Adapting LLMs to meet the unique and strict constraints of Social Stories is a challenging issue. To this end, we propose SS-Bench, a Social Story Benchmark for generating and evaluating Social Stories. Specifically, we develop a constraint-driven strategy named StarSow to hierarchically prompt LLMs to generate Social Stories and build a benchmark, which has been validated through experiments to fine-tune smaller models for generating qualified Social Stories. Additionally, we introduce Quality Assessment Criteria, employed in human and GPT evaluations, to verify the effectiveness of the generated stories. We hope this work benefits the autism community and catalyzes future research focusing on particular groups.

Challenges in Binary Classification

Jun 19, 2024

Abstract:Binary classification plays an important role in machine learning. For linear classification, SVM is the optimal binary classification method. For nonlinear classification, the SVM algorithm needs to complete the classification task by using a kernel function. Although the SVM algorithm with a kernel function is very effective, the selection of the kernel function is empirical, which means that the chosen kernel function may not be optimal. Therefore, it is worth studying how to obtain an optimal binary classifier. In this paper, the problem of finding the optimal binary classifier is considered as a variational problem. We design the objective function of this variational problem through the max-min problem of the (Euclidean) distance between two classes. For linear classification, it can be deduced that SVM is a special case of this variational problem framework. For Euclidean distance, it is proved that the proposed variational problem has some limitations for nonlinear classification. Therefore, how to design a more appropriate objective function to find the optimal binary classifier is still an open problem. We further discuss some challenges and problems in finding the optimal classifier.
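
For orientation, the textbook hard-margin linear SVM can itself be written as a max-min problem over distances to the separating hyperplane. The form below is that standard formulation for linearly separable data, not necessarily the objective function used in the paper; the symbols $x_i$ (samples), $y_i \in \{-1, +1\}$ (labels), $w$, and $b$ (hyperplane parameters) are notation introduced here.

$$
\max_{w,\,b}\ \min_{i}\ \frac{y_i\,(w^{\top} x_i + b)}{\lVert w \rVert_2}
$$

The inner minimum is the distance from the hyperplane to the closest correctly classified sample, and the outer maximum picks the hyperplane that makes this smallest distance as large as possible.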

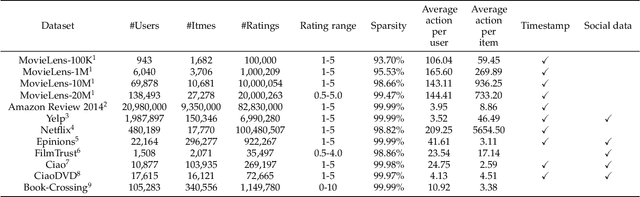

Towards Robust Recommendation: A Review and an Adversarial Robustness Evaluation Library

Apr 27, 2024

Abstract:Recently, recommender systems have achieved significant success. However, due to the openness of recommender systems, they remain vulnerable to malicious attacks. Additionally, natural noise in training data and issues such as data sparsity can also degrade the performance of recommender systems. Therefore, enhancing the robustness of recommender systems has become an increasingly important research topic. In this survey, we provide a comprehensive overview of the robustness of recommender systems. Based on our investigation, we categorize the robustness of recommender systems into adversarial robustness and non-adversarial robustness. For adversarial robustness, we introduce the fundamental principles and classical methods of recommender system adversarial attacks and defenses. For non-adversarial robustness, we analyze the problem from the perspectives of data sparsity, natural noise, and data imbalance. Additionally, we summarize commonly used datasets and evaluation metrics for evaluating the robustness of recommender systems. Finally, we discuss the current challenges in the field of recommender system robustness and potential future research directions. To facilitate fair and efficient evaluation of attack and defense methods in adversarial robustness, we also propose an adversarial robustness evaluation library, ShillingREC, and conduct evaluations of basic attack models and recommendation models. The ShillingREC project is released at https://github.com/chengleileilei/ShillingREC.

Study on the static detection of ICF target based on muonic X-ray sphere encoded imaging

Apr 18, 2024

Abstract:Muon Induced X-ray Emission (MIXE) was discovered by Chinese physicist Zhang Wenyu as early as 1947, and it can conduct non-destructive elemental analysis inside samples. Research has shown that MIXE can retain the high efficiency of direct imaging while benefiting from the low noise of pinhole imaging through encoding holes. The related technology significantly improves the counting rate while maintaining imaging quality. The sphere encoding technology effectively solves the imaging blurring caused by the tilting of the encoding system, and successfully images micrometer sized X-ray sources. This paper will combine MIXE and X-ray sphere coding imaging techniques, including ball coding and zone plates, to study the method of non-destructive deep structure imaging of ICF targets and obtaining sub element distribution. This method aims to develop a new method for ICF target detection, which is particularly important for inertial confinement fusion. At the same time, this method can be used to detect and analyze materials that are difficult to penetrate or sensitive, and is expected to solve the problem of element resolution and imaging that traditional technologies cannot overcome. It will provide new methods for the future development of multiple fields such as particle physics, material science, and X-ray optics.

BSDP: Brain-inspired Streaming Dual-level Perturbations for Online Open World Object Detection

Mar 05, 2024

Abstract:Humans can easily distinguish the known and unknown categories and can recognize the unknown object by learning it once instead of repeating it many times without forgetting the learned object. Hence, we aim to make deep learning models simulate the way people learn. We refer to such a learning manner as OnLine Open World Object Detection (OLOWOD). Existing OWOD approaches pay more attention to the identification of unknown categories, while the incremental learning part is also very important. Besides, some neuroscience research shows that specific noises allow the brain to form new connections and neural pathways which may improve learning speed and efficiency. In this paper, we take the dual-level information of old samples as perturbations on new samples to make the model good at learning new knowledge without forgetting the old knowledge. Therefore, we propose a simple plug-and-play method, called Brain-inspired Streaming Dual-level Perturbations (BSDP), to solve the OLOWOD problem. Specifically, (1) we first calculate the prototypes of previous categories and use the distance between samples and the prototypes as the sample selecting strategy to choose old samples for replay; (2) then take the prototypes as the streaming feature-level perturbations of new samples, so as to improve the plasticity of the model through revisiting the old knowledge; (3) and also use the distribution of the features of the old category samples to generate adversarial data in the form of streams as the data-level perturbations to enhance the robustness of the model to new categories. We empirically evaluate BSDP on PASCAL VOC and MS-COCO, and the excellent results demonstrate the promising performance of our proposed method and learning manner.
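
Steps (1) and (2) of the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the choice to keep the samples closest to each prototype, and the convex-mix perturbation with weight `alpha` are all assumptions made here for illustration, since the abstract does not specify them.

```python
import math


def class_prototypes(features, labels):
    """Return the mean feature vector (prototype) for each class label."""
    sums, counts = {}, {}
    for vec, lab in zip(features, labels):
        if lab not in sums:
            sums[lab] = [0.0] * len(vec)
            counts[lab] = 0
        sums[lab] = [s + v for s, v in zip(sums[lab], vec)]
        counts[lab] += 1
    return {lab: [s / counts[lab] for s in sums[lab]] for lab in sums}


def select_replay_samples(features, labels, prototypes, per_class):
    """Pick indices of old samples to replay.

    Assumption: keep the `per_class` samples nearest (Euclidean distance)
    to their own class prototype; the abstract only says distance is used.
    """
    by_class = {}
    for idx, (vec, lab) in enumerate(zip(features, labels)):
        dist = math.dist(vec, prototypes[lab])
        by_class.setdefault(lab, []).append((dist, idx))
    selected = []
    for lab in sorted(by_class):
        ranked = sorted(by_class[lab])
        selected.extend(idx for _, idx in ranked[:per_class])
    return selected


def perturb_with_prototype(new_feature, prototype, alpha=0.1):
    """Assumption: perturb a new sample's feature by mixing in an old prototype."""
    return [(1 - alpha) * f + alpha * p for f, p in zip(new_feature, prototype)]
```

A replay buffer built this way stays small (at most `per_class` items per old category), and the prototype mix gives new-sample features a controlled pull toward previously learned classes.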
