Ji-Hoon Kim

Learning Where It Matters: Geometric Anchoring for Robust Preference Alignment

Feb 04, 2026

Abstract:Direct Preference Optimization (DPO) and related methods align large language models from pairwise preferences by regularizing updates against a fixed reference policy. As the policy drifts, a static reference, however, can become increasingly miscalibrated, leading to distributional mismatch and amplifying spurious preference signals under noisy supervision. Conversely, reference-free variants avoid mismatch but often suffer from unconstrained reward drift. We propose Geometric Anchor Preference Optimization (GAPO), which replaces the fixed reference with a dynamic, geometry-aware anchor: an adversarial local perturbation of the current policy within a small radius that serves as a pessimistic baseline. This anchor enables an adaptive reweighting mechanism, modulating the importance of each preference pair based on its local sensitivity. We further introduce the Anchor Gap, the reward discrepancy between the policy and its anchor, and show under smoothness conditions that it approximates worst-case local margin degradation. Optimizing a logistic objective weighted by this gap downweights geometrically brittle instances while emphasizing robust preference signals. Across diverse noise settings, GAPO consistently improves robustness while matching or improving performance on standard LLM alignment and reasoning benchmarks.

UNMIXX: Untangling Highly Correlated Singing Voices Mixtures

Jan 19, 2026

Abstract:We introduce UNMIXX, a novel framework for multiple singing voices separation (MSVS). While related to speech separation, MSVS faces unique challenges: data scarcity and the highly correlated nature of singing voices mixture. To address these issues, we propose UNMIXX with three key components: (1) musically informed mixing strategy to construct highly correlated, music-like mixtures, (2) cross-source attention that drives representations of two singers apart via reverse attention, and (3) magnitude penalty loss penalizing erroneously assigned interfering energy. UNMIXX not only addresses data scarcity by simulating realistic training data, but also excels at separating highly correlated mixtures through cross-source interactions at both the architectural and loss levels. Our extensive experiments demonstrate that UNMIXX greatly enhances performance, with SDRi gains exceeding 2.2 dB over prior work.

TAVID: Text-Driven Audio-Visual Interactive Dialogue Generation

Dec 23, 2025

Abstract:The objective of this paper is to jointly synthesize interactive videos and conversational speech from text and reference images. With the ultimate goal of building human-like conversational systems, recent studies have explored talking or listening head generation as well as conversational speech generation. However, these works are typically studied in isolation, overlooking the multimodal nature of human conversation, which involves tightly coupled audio-visual interactions. In this paper, we introduce TAVID, a unified framework that generates both interactive faces and conversational speech in a synchronized manner. TAVID integrates face and speech generation pipelines through two cross-modal mappers (i.e., a motion mapper and a speaker mapper), which enable bidirectional exchange of complementary information between the audio and visual modalities. We evaluate our system across four dimensions: talking face realism, listening head responsiveness, dyadic interaction fluency, and speech quality. Extensive experiments demonstrate the effectiveness of our approach across all these aspects.

Accelerating Diffusion-based Text-to-Speech Model Training with Dual Modality Alignment

May 26, 2025

Abstract:The goal of this paper is to optimize the training process of diffusion-based text-to-speech models. While recent studies have achieved remarkable advancements, their training demands substantial time and computational costs, largely due to the implicit guidance of diffusion models in learning complex intermediate representations. To address this, we propose A-DMA, an effective strategy for Accelerating training with Dual Modality Alignment. Our method introduces a novel alignment pipeline leveraging both text and speech modalities: text-guided alignment, which incorporates contextual representations, and speech-guided alignment, which refines semantic representations. By aligning hidden states with discriminative features, our training scheme reduces the reliance on diffusion models for learning complex representations. Extensive experiments demonstrate that A-DMA doubles the convergence speed while achieving superior performance over baselines. Code and demo samples are available at: https://github.com/ZhikangNiu/A-DMA

AlignDiT: Multimodal Aligned Diffusion Transformer for Synchronized Speech Generation

Apr 29, 2025

Abstract:In this paper, we address the task of multimodal-to-speech generation, which aims to synthesize high-quality speech from multiple input modalities: text, video, and reference audio. This task has gained increasing attention due to its wide range of applications, such as film production, dubbing, and virtual avatars. Despite recent progress, existing methods still suffer from limitations in speech intelligibility, audio-video synchronization, speech naturalness, and voice similarity to the reference speaker. To address these challenges, we propose AlignDiT, a multimodal Aligned Diffusion Transformer that generates accurate, synchronized, and natural-sounding speech from aligned multimodal inputs. Built upon the in-context learning capability of the DiT architecture, AlignDiT explores three effective strategies to align multimodal representations. Furthermore, we introduce a novel multimodal classifier-free guidance mechanism that allows the model to adaptively balance information from each modality during speech synthesis. Extensive experiments demonstrate that AlignDiT significantly outperforms existing methods across multiple benchmarks in terms of quality, synchronization, and speaker similarity. Moreover, AlignDiT exhibits strong generalization capability across various multimodal tasks, such as video-to-speech synthesis and visual forced alignment, consistently achieving state-of-the-art performance. The demo page is available at https://mm.kaist.ac.kr/projects/AlignDiT .

SCRec: A Scalable Computational Storage System with Statistical Sharding and Tensor-train Decomposition for Recommendation Models

Apr 01, 2025

Abstract:Deep Learning Recommendation Models (DLRMs) play a crucial role in delivering personalized content across web applications such as social networking and video streaming. However, with improvements in performance, the parameter size of DLRMs has grown to terabyte (TB) scales, accompanied by memory bandwidth demands exceeding TB/s levels. Furthermore, the workload intensity within the model varies based on the target mechanism, making it difficult to build an optimized recommendation system. In this paper, we propose SCRec, a scalable computational storage recommendation system that can handle TB-scale industrial DLRMs while guaranteeing high bandwidth requirements. SCRec utilizes a software framework that features a mixed-integer programming (MIP)-based cost model, efficiently fetching data based on data access patterns and adaptively configuring memory-centric and compute-centric cores. Additionally, SCRec integrates hardware acceleration cores to enhance DLRM computations, particularly allowing for the high-performance reconstruction of approximated embedding vectors from extremely compressed tensor-train (TT) format. By combining its software framework and hardware accelerators, while eliminating data communication overhead by being implemented on a single server, SCRec achieves substantial improvements in DLRM inference performance. It delivers up to 55.77× speedup compared to a CPU-DRAM system with no loss in accuracy and up to 13.35× energy efficiency gains over a multi-GPU system.

EXION: Exploiting Inter- and Intra-Iteration Output Sparsity for Diffusion Models

Jan 10, 2025

Abstract:Over the past few years, diffusion models have emerged as novel AI solutions, generating diverse multi-modal outputs from text prompts. Despite their capabilities, they face challenges in computing, such as excessive latency and energy consumption due to their iterative architecture. Although prior works specialized in transformer acceleration can be applied, the iterative nature of diffusion models remains unresolved. In this paper, we present EXION, the first SW-HW co-designed diffusion accelerator that solves the computation challenges by exploiting the unique inter- and intra-iteration output sparsity in diffusion models. To this end, we propose two SW-level optimizations. First, we introduce the FFN-Reuse algorithm that identifies and skips redundant computations in FFN layers across different iterations (inter-iteration sparsity). Second, we use a modified eager prediction method that employs two-step leading-one detection to accurately predict the attention score, skipping unnecessary computations within an iteration (intra-iteration sparsity). We also introduce a novel data compaction mechanism named ConMerge, which can enhance HW utilization by condensing and merging sparse matrices into compact forms. Finally, it has a dedicated HW architecture that supports the above sparsity-inducing algorithms, translating high output sparsity into improved energy efficiency and performance. To verify the feasibility of the EXION, we first demonstrate that it has no impact on accuracy in various types of multi-modal diffusion models. We then instantiate EXION in both server- and edge-level settings and compare its performance against GPUs with similar specifications. Our evaluation shows that EXION achieves dramatic improvements in performance and energy efficiency by 3.2-379.3x and 45.1-3067.6x compared to a server GPU and by 42.6-1090.9x and 196.9-4668.2x compared to an edge GPU.

AdaptVC: High Quality Voice Conversion with Adaptive Learning

Jan 07, 2025

Abstract:The goal of voice conversion is to transform the speech of a source speaker to sound like that of a reference speaker while preserving the original content. A key challenge is to extract disentangled linguistic content from the source and voice style from the reference. While existing approaches leverage various methods to isolate the two, a generalization still requires further attention, especially for robustness in zero-shot scenarios. In this paper, we achieve successful disentanglement of content and speaker features by tuning self-supervised speech features with adapters. The adapters are trained to dynamically encode nuanced features from rich self-supervised features, and the decoder fuses them to produce speech that accurately resembles the reference with minimal loss of content. Moreover, we leverage a conditional flow matching decoder with cross-attention speaker conditioning to further boost the synthesis quality and efficiency. Subjective and objective evaluations in a zero-shot scenario demonstrate that the proposed method outperforms existing models in speech quality and similarity to the reference speech.

CrossSpeech++: Cross-lingual Speech Synthesis with Decoupled Language and Speaker Generation

Dec 28, 2024

Abstract:The goal of this work is to generate natural speech in multiple languages while maintaining the same speaker identity, a task known as cross-lingual speech synthesis. A key challenge of cross-lingual speech synthesis is the language-speaker entanglement problem, which causes the quality of cross-lingual systems to lag behind that of intra-lingual systems. In this paper, we propose CrossSpeech++, which effectively disentangles language and speaker information and significantly improves the quality of cross-lingual speech synthesis. To this end, we break the complex speech generation pipeline into two simple components: language-dependent and speaker-dependent generators. The language-dependent generator produces linguistic variations that are not biased by specific speaker attributes. The speaker-dependent generator models acoustic variations that characterize speaker identity. By handling each type of information in separate modules, our method can effectively disentangle language and speaker representation. We conduct extensive experiments using various metrics, and demonstrate that CrossSpeech++ achieves significant improvements in cross-lingual speech synthesis, outperforming existing methods by a large margin.

V2SFlow: Video-to-Speech Generation with Speech Decomposition and Rectified Flow

Nov 29, 2024

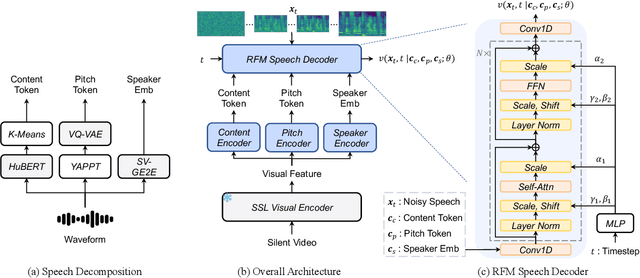

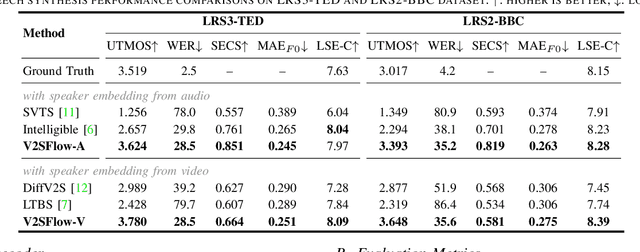

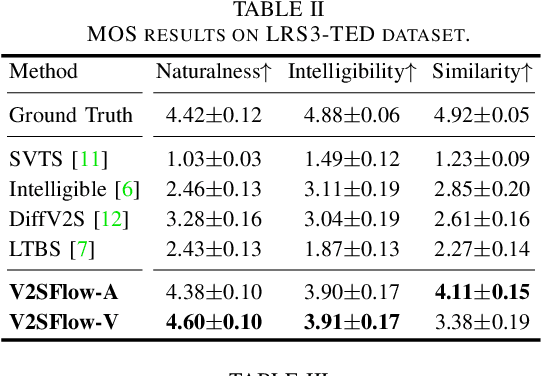

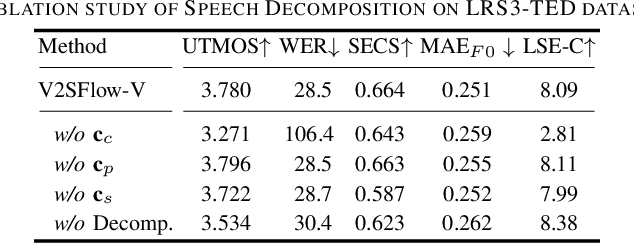

Abstract:In this paper, we introduce V2SFlow, a novel Video-to-Speech (V2S) framework designed to generate natural and intelligible speech directly from silent talking face videos. While recent V2S systems have shown promising results on constrained datasets with limited speakers and vocabularies, their performance often degrades on real-world, unconstrained datasets due to the inherent variability and complexity of speech signals. To address these challenges, we decompose the speech signal into manageable subspaces (content, pitch, and speaker information), each representing distinct speech attributes, and predict them directly from the visual input. To generate coherent and realistic speech from these predicted attributes, we employ a rectified flow matching decoder built on a Transformer architecture, which models efficient probabilistic pathways from random noise to the target speech distribution. Extensive experiments demonstrate that V2SFlow significantly outperforms state-of-the-art methods, even surpassing the naturalness of ground truth utterances.
