Hong Yan

Principled Synthetic Data Enables the First Scaling Laws for LLMs in Recommendation

Feb 07, 2026

Abstract:Large Language Models (LLMs) represent a promising frontier for recommender systems, yet their development has been impeded by the absence of predictable scaling laws, which are crucial for guiding research and optimizing resource allocation. We hypothesize that this may be attributed to the inherent noise, bias, and incompleteness of raw user interaction data in prior continual pre-training (CPT) efforts. This paper introduces a novel, layered framework for generating high-quality synthetic data that circumvents such issues by creating a curated, pedagogical curriculum for the LLM. We provide powerful, direct evidence for the utility of our curriculum by showing that standard sequential models trained on our principled synthetic data significantly outperform ($+130\%$ on recall@100 for SasRec) models trained on real data in downstream ranking tasks, demonstrating its superiority for learning generalizable user preference patterns. Building on this, we empirically demonstrate, for the first time, robust power-law scaling for an LLM that is continually pre-trained on our high-quality, recommendation-specific data. Our experiments reveal consistent and predictable perplexity reduction across multiple synthetic data modalities. These findings establish a foundational methodology for reliably scaling LLM capabilities in the recommendation domain, thereby shifting the research focus from mitigating data deficiencies to leveraging high-quality, structured information.
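
To make the notion of power-law scaling mentioned in this abstract concrete, here is a minimal sketch of how such a law is commonly fitted: a model of the form loss = a * size^(-b) becomes a straight line in log-log space, so ordinary least squares on the logarithms recovers a and b. The function name `fit_power_law` and all data points are hypothetical; the numbers are generated synthetically for illustration and are not results from the paper.

```python
import math


def fit_power_law(sizes, losses):
    """Fit loss = a * size**(-b) by least squares on log(size), log(loss)."""
    xs = [math.log(s) for s in sizes]
    ys = [math.log(v) for v in losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope and intercept of the best-fit line in log-log space.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # log(loss) = log(a) - b * log(size), so a = exp(intercept), b = -slope.
    return math.exp(intercept), -slope


# Synthetic, illustrative data drawn from a known law (a = 5.0, b = 0.1).
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [5.0 * s ** -0.1 for s in sizes]
a, b = fit_power_law(sizes, losses)
```

On noiseless data like this, the fit recovers the generating parameters up to floating-point error; with real measurements the residuals of the log-log fit indicate how well a power law describes the trend.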

Partial Domain Adaptation via Importance Sampling-based Shift Correction

Jul 27, 2025

Abstract:Partial domain adaptation (PDA) is a challenging task in real-world machine learning scenarios. It aims to transfer knowledge from a labeled source domain to a related unlabeled target domain, where the support set of the source label distribution subsumes the target one. Previous PDA works managed to correct the label distribution shift by weighting samples in the source domain. However, the simple reweighting technique can neither explore the latent structure nor make sufficient use of the labeled data, leaving models prone to overfitting on the source domain. In this work, we propose a novel importance sampling-based shift correction (IS$^2$C) method, where new labeled data are sampled from a built sampling domain, whose label distribution is supposed to be the same as the target domain, to characterize the latent structure and enhance the generalization ability of the model. We provide theoretical guarantees for IS$^2$C by proving that the generalization error can be sufficiently dominated by IS$^2$C. In particular, by implementing sampling with the mixture distribution, the extent of shift between source and sampling domains can be connected to generalization error, which provides an interpretable way to build IS$^2$C. To improve knowledge transfer, an optimal transport-based independence criterion is proposed for conditional distribution alignment, where the computation of the criterion can be adjusted to reduce the complexity from $\mathcal{O}(n^3)$ to $\mathcal{O}(n^2)$ in realistic PDA scenarios. Extensive experiments on PDA benchmarks validate the theoretical results and demonstrate the effectiveness of our IS$^2$C over existing methods.
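
The general idea of drawing source samples so that their label distribution matches a target one can be sketched as follows. This is not the IS$^2$C method itself (which the abstract describes as sampling with a mixture distribution plus an optimal transport-based criterion); it is plain class-level importance resampling, shown only to illustrate label shift correction. The function names, the toy labels, and the target label distribution are all assumptions made for this example; in practice the target distribution is unknown and must be estimated.

```python
import random
from collections import Counter


def class_importance_weights(source_labels, target_label_dist):
    """Return w(y) = p_target(y) / p_source(y) for each source class."""
    n = len(source_labels)
    counts = Counter(source_labels)
    return {
        y: target_label_dist.get(y, 0.0) / (counts[y] / n)
        for y in counts
    }


def resample_source(source_labels, target_label_dist, k, seed=0):
    """Draw k source indices with probability proportional to w(label)."""
    weights = class_importance_weights(source_labels, target_label_dist)
    per_sample = [weights[y] for y in source_labels]
    rng = random.Random(seed)
    return rng.choices(range(len(source_labels)), weights=per_sample, k=k)


# Toy source domain with three classes; the (assumed) target has only two,
# mirroring the partial setting where source labels subsume target labels.
source_labels = ["cat"] * 50 + ["dog"] * 30 + ["bird"] * 20
target_label_dist = {"cat": 0.25, "dog": 0.75}

weights = class_importance_weights(source_labels, target_label_dist)
idx = resample_source(source_labels, target_label_dist, k=1000)
sampled = Counter(source_labels[i] for i in idx)
```

Classes absent from the assumed target distribution receive weight zero and drop out of the resampled set, while the remaining classes appear roughly in the target proportions.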

Thinking Beyond Tokens: From Brain-Inspired Intelligence to Cognitive Foundations for Artificial General Intelligence and its Societal Impact

Jul 01, 2025

Abstract:Can machines truly think, reason and act in domains like humans? This enduring question continues to shape the pursuit of Artificial General Intelligence (AGI). Despite the growing capabilities of models such as GPT-4.5, DeepSeek, Claude 3.5 Sonnet, Phi-4, and Grok 3, which exhibit multimodal fluency and partial reasoning, these systems remain fundamentally limited by their reliance on token-level prediction and lack of grounded agency. This paper offers a cross-disciplinary synthesis of AGI development, spanning artificial intelligence, cognitive neuroscience, psychology, generative models, and agent-based systems. We analyze the architectural and cognitive foundations of general intelligence, highlighting the role of modular reasoning, persistent memory, and multi-agent coordination. In particular, we emphasize the rise of Agentic RAG frameworks that combine retrieval, planning, and dynamic tool use to enable more adaptive behavior. We discuss generalization strategies, including information compression, test-time adaptation, and training-free methods, as critical pathways toward flexible, domain-agnostic intelligence. Vision-Language Models (VLMs) are reexamined not just as perception modules but as evolving interfaces for embodied understanding and collaborative task completion. We also argue that true intelligence arises not from scale alone but from the integration of memory and reasoning: an orchestration of modular, interactive, and self-improving components where compression enables adaptive behavior. Drawing on advances in neurosymbolic systems, reinforcement learning, and cognitive scaffolding, we explore how recent architectures begin to bridge the gap between statistical learning and goal-directed cognition. Finally, we identify key scientific, technical, and ethical challenges on the path to AGI.

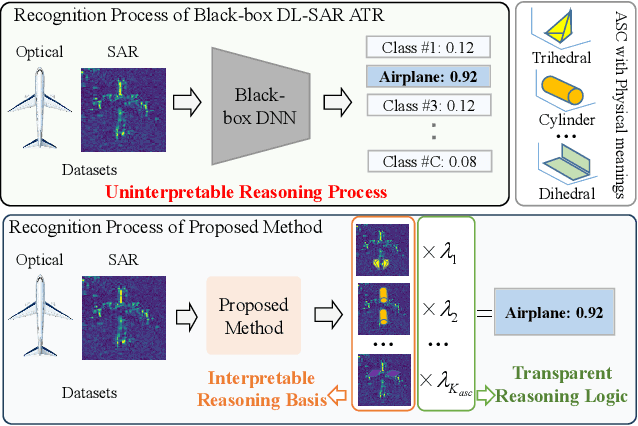

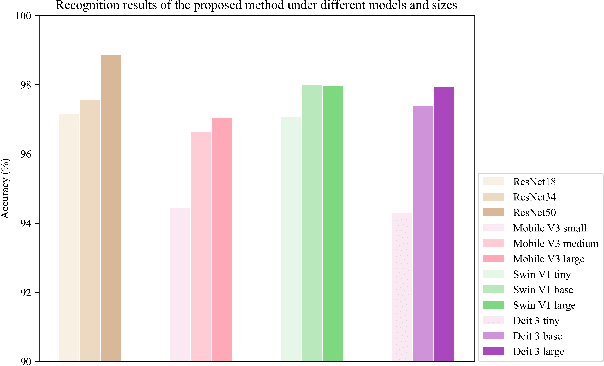

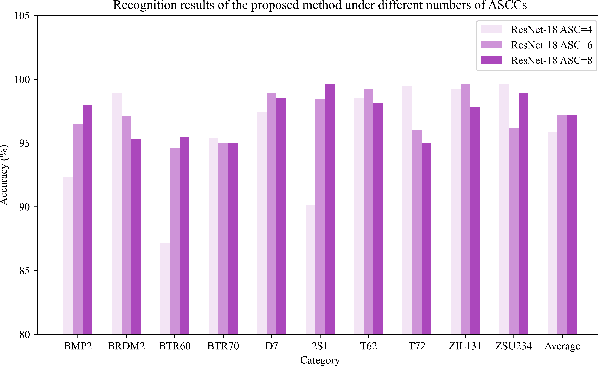

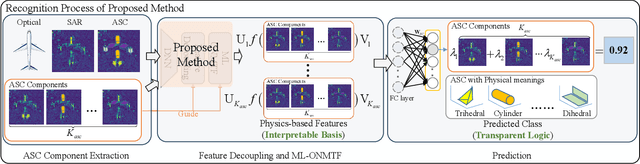

An Interpretable Two-Stage Feature Decomposition Method for Deep Learning-based SAR ATR

Jun 11, 2025

Abstract:Synthetic aperture radar automatic target recognition (SAR ATR) has seen significant performance improvements with deep learning. However, the black-box nature of deep SAR ATR introduces low confidence and high risks in decision-critical SAR applications, hindering practical deployment. To address this issue, deep SAR ATR should provide an interpretable reasoning basis $r_b$ and logic $\lambda_w$, forming the reasoning logic $\sum_{i} {{r_b^i} \times {\lambda_w^i}} =pred$ behind the decisions. Therefore, this paper proposes a physics-based two-stage feature decomposition method for interpretable deep SAR ATR, which transforms uninterpretable deep features into attribute scattering center components (ASCC) with clear physical meanings. First, ASCCs are obtained through a clustering algorithm. To extract independent physical components from deep features, we propose a two-stage decomposition method. In the first stage, a feature decoupling and discrimination module separates deep features into approximate ASCCs with global discriminability. In the second stage, a multilayer orthogonal non-negative matrix tri-factorization (MLO-NMTF) further decomposes the ASCCs into independent components with distinct physical meanings. The MLO-NMTF elegantly aligns with the clustering algorithms to obtain ASCCs. Finally, this method ensures both an interpretable reasoning process and accurate recognition results. Extensive experiments on four benchmark datasets confirm its effectiveness, showcasing the method's interpretability, robust recognition performance, and strong generalization capability.

sparseGeoHOPCA: A Geometric Solution to Sparse Higher-Order PCA Without Covariance Estimation

Jun 10, 2025

Abstract:We propose sparseGeoHOPCA, a novel framework for sparse higher-order principal component analysis (SHOPCA) that introduces a geometric perspective to high-dimensional tensor decomposition. By unfolding the input tensor along each mode and reformulating the resulting subproblems as structured binary linear optimization problems, our method transforms the original nonconvex sparse objective into a tractable geometric form. This eliminates the need for explicit covariance estimation and iterative deflation, enabling significant gains in both computational efficiency and interpretability, particularly in high-dimensional and unbalanced data scenarios. We theoretically establish the equivalence between the geometric subproblems and the original SHOPCA formulation, and derive worst-case approximation error bounds based on classical PCA residuals, providing data-dependent performance guarantees. The proposed algorithm achieves a total computational complexity of $O\left(\sum_{n=1}^{N} (k_n^3 + J_n k_n^2)\right)$, which scales linearly with tensor size. Extensive experiments demonstrate that sparseGeoHOPCA accurately recovers sparse supports in synthetic settings, preserves classification performance under 10$\times$ compression, and achieves high-quality image reconstruction on ImageNet, highlighting its robustness and versatility.

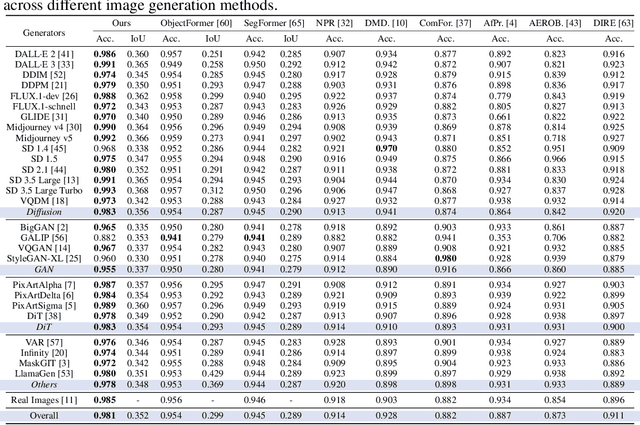

Interpretable and Reliable Detection of AI-Generated Images via Grounded Reasoning in MLLMs

Jun 08, 2025

Abstract:The rapid advancement of image generation technologies intensifies the demand for interpretable and robust detection methods. Although existing approaches often attain high accuracy, they typically operate as black boxes without providing human-understandable justifications. Multi-modal Large Language Models (MLLMs), while not originally intended for forgery detection, exhibit strong analytical and reasoning capabilities. When properly fine-tuned, they can effectively identify AI-generated images and offer meaningful explanations. However, existing MLLMs still struggle with hallucination and often fail to align their visual interpretations with actual image content and human reasoning. To bridge this gap, we construct a dataset of AI-generated images annotated with bounding boxes and descriptive captions that highlight synthesis artifacts, establishing a foundation for human-aligned visual-textual grounded reasoning. We then finetune MLLMs through a multi-stage optimization strategy that progressively balances the objectives of accurate detection, visual localization, and coherent textual explanation. The resulting model achieves superior performance in both detecting AI-generated images and localizing visual flaws, significantly outperforming baseline methods.

VisAlgae 2023: A Dataset and Challenge for Algae Detection in Microscopy Images

May 27, 2025

Abstract:Microalgae, vital for ecological balance and economic sectors, present challenges in detection due to their diverse sizes and conditions. This paper summarizes the second "Vision Meets Algae" (VisAlgae 2023) Challenge, aiming to enhance high-throughput microalgae cell detection. The challenge, which attracted 369 participating teams, includes a dataset of 1000 images across six classes, featuring microalgae of varying sizes and distinct features. Participants faced tasks such as detecting small targets, handling motion blur, and complex backgrounds. The top 10 methods, outlined here, offer insights into overcoming these challenges and maximizing detection accuracy. This intersection of algae research and computer vision offers promise for ecological understanding and technological advancement. The dataset can be accessed at: https://github.com/juntaoJianggavin/Visalgae2023/.

CiUAV: A Multi-Task 3D Indoor Localization System for UAVs based on Channel State Information

May 27, 2025

Abstract:Accurate indoor positioning for unmanned aerial vehicles (UAVs) is critical for logistics, surveillance, and emergency response applications, particularly in GPS-denied environments. Existing indoor localization methods, including optical tracking, ultra-wideband, and Bluetooth-based systems, face cost, accuracy, and robustness trade-offs, limiting their practicality for UAV navigation. This paper proposes CiUAV, a novel 3D indoor localization system designed for UAVs, leveraging channel state information (CSI) obtained from low-cost ESP32 IoT-based sensors. The system incorporates a dynamic automatic gain control (AGC) compensation algorithm to mitigate noise and stabilize CSI signals, significantly enhancing the robustness of the measurement. Additionally, a multi-task 3D localization model, Sensor-in-Sample (SiS), is introduced to enhance system robustness by addressing challenges related to incomplete sensor data and limited training samples. SiS achieves this by joint training with varying sensor configurations and sample sizes, ensuring reliable performance even in resource-constrained scenarios. Experimental results demonstrate that CiUAV achieves an LMSE localization error of 0.2629 m in 3D space, indicating good accuracy and robustness. The proposed system provides a cost-effective and scalable solution, demonstrating its usefulness for UAV applications in resource-constrained indoor environments.

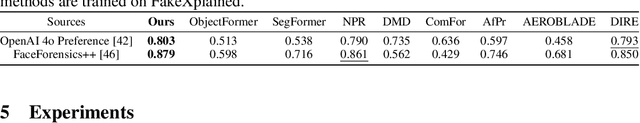

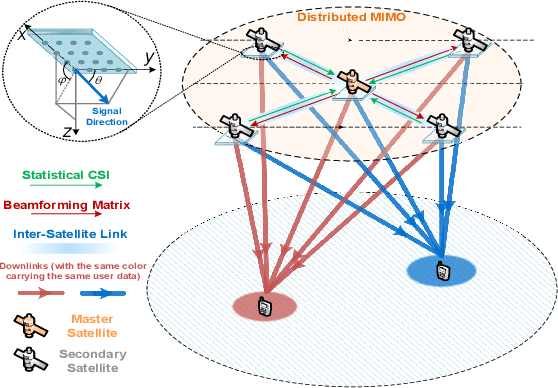

Statistical CSI-Based Distributed Precoding Design for OFDM-Cooperative Multi-Satellite Systems

May 12, 2025

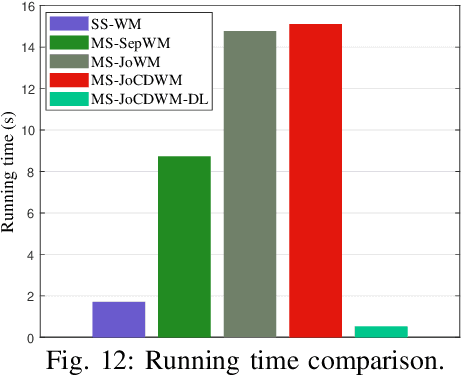

Abstract:This paper investigates the design of distributed precoding for multi-satellite massive MIMO transmissions. We first conduct a detailed analysis of the transceiver model, in which delay and Doppler precompensation is introduced to ensure coherent transmission. In this analysis, we examine the impact of precompensation errors on the transmission model, emphasize the near-independence of inter-satellite interference, and ultimately derive the received signal model. Based on this signal model, we formulate an approximate expected rate maximization problem that considers both statistical channel state information (sCSI) and compensation errors. Unlike conventional approaches that recast such problems as weighted minimum mean square error (WMMSE) minimization, we demonstrate that this transformation fails to maintain equivalence in the considered scenario. To address this, we introduce an equivalent covariance decomposition-based WMMSE (CDWMMSE) formulation derived from a decomposition of the channel covariance matrix. Taking advantage of the channel characteristics, we develop a low-complexity decomposition method and propose an optimization algorithm. To further reduce computational complexity, we introduce a model-driven scalable deep learning (DL) approach that leverages the equivariance of the mapping from sCSI to the unknown variables in the optimal closed-form solution, enhancing performance through a novel dense Transformer network and a scaling-invariant loss function design. Simulation results validate the effectiveness and robustness of the proposed method in some practical scenarios. We also demonstrate that the DL approach can adapt to dynamic settings with varying numbers of users and satellites.

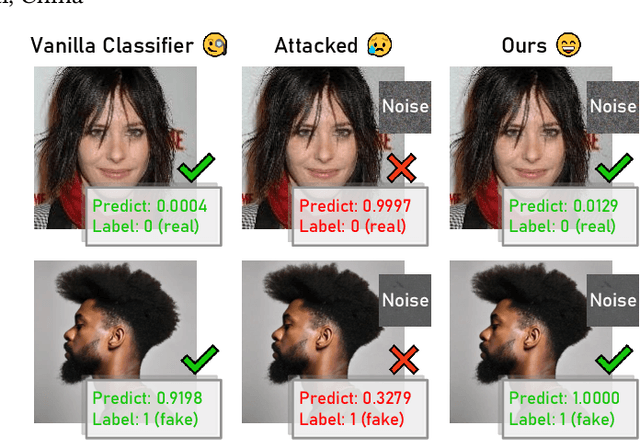

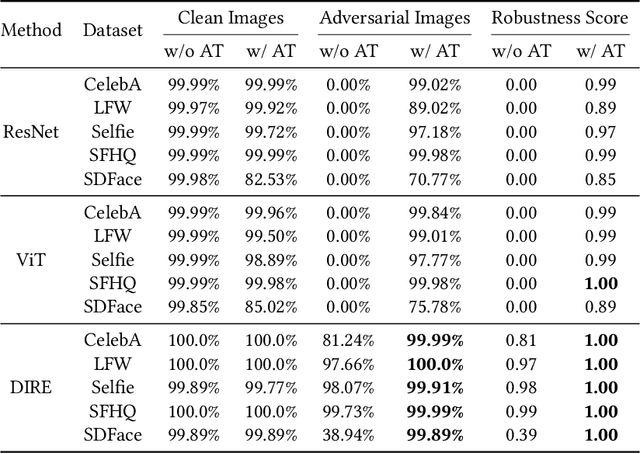

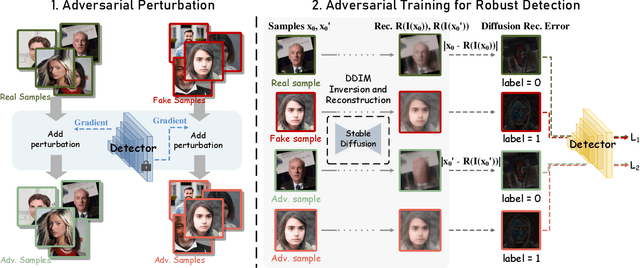

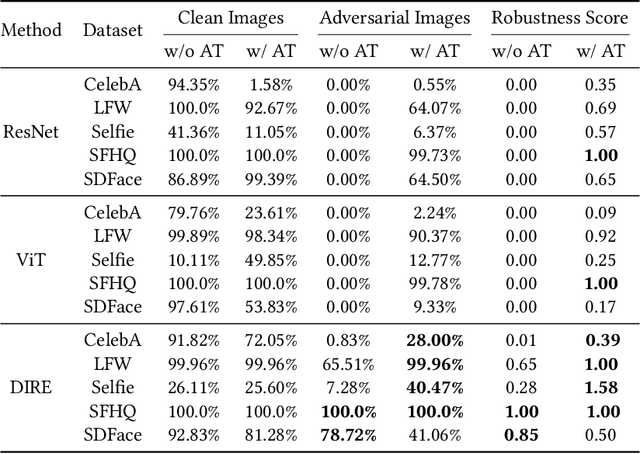

Robustness in AI-Generated Detection: Enhancing Resistance to Adversarial Attacks

May 06, 2025

Abstract:The rapid advancement of generative image technology has introduced significant security concerns, particularly in the domain of face generation detection. This paper investigates the vulnerabilities of current AI-generated face detection systems. Our study reveals that while existing detection methods often achieve high accuracy under standard conditions, they exhibit limited robustness against adversarial attacks. To address these challenges, we propose an approach that integrates adversarial training to mitigate the impact of adversarial examples. Furthermore, we utilize diffusion inversion and reconstruction to further enhance detection robustness. Experimental results demonstrate that minor adversarial perturbations can easily bypass existing detection systems, but our method significantly improves the robustness of these systems. Additionally, we provide an in-depth analysis of adversarial and benign examples, offering insights into the intrinsic characteristics of AI-generated content. All associated code will be made publicly available in a dedicated repository to facilitate further research and verification.
