Chong Wu

sparseGeoHOPCA: A Geometric Solution to Sparse Higher-Order PCA Without Covariance Estimation

Jun 10, 2025

Abstract:We propose sparseGeoHOPCA, a novel framework for sparse higher-order principal component analysis (SHOPCA) that introduces a geometric perspective to high-dimensional tensor decomposition. By unfolding the input tensor along each mode and reformulating the resulting subproblems as structured binary linear optimization problems, our method transforms the original nonconvex sparse objective into a tractable geometric form. This eliminates the need for explicit covariance estimation and iterative deflation, enabling significant gains in both computational efficiency and interpretability, particularly in high-dimensional and unbalanced data scenarios. We theoretically establish the equivalence between the geometric subproblems and the original SHOPCA formulation, and derive worst-case approximation error bounds based on classical PCA residuals, providing data-dependent performance guarantees. The proposed algorithm achieves a total computational complexity of $O\left(\sum_{n=1}^{N} (k_n^3 + J_n k_n^2)\right)$, which scales linearly with tensor size. Extensive experiments demonstrate that sparseGeoHOPCA accurately recovers sparse supports in synthetic settings, preserves classification performance under 10$\times$ compression, and achieves high-quality image reconstruction on ImageNet, highlighting its robustness and versatility.
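
The abstract above relies on unfolding the input tensor along each mode. As a minimal sketch of that single step only, assuming NumPy and one common column-ordering convention (the helper name `unfold` is hypothetical and this is not the authors' implementation), a mode-n unfolding can be written as:

```python
import numpy as np

def unfold(tensor, mode):
    """Mode-n unfolding: bring axis `mode` to the front, flatten the rest.

    The column ordering follows NumPy's row-major reshape; other
    conventions exist and differ only by a column permutation.
    """
    return np.moveaxis(tensor, mode, 0).reshape(tensor.shape[mode], -1)

# A small 3-way tensor with mode sizes 2, 3 and 4.
X = np.arange(24, dtype=float).reshape(2, 3, 4)

# One matrix per mode; each has as many rows as that mode's size.
shapes = [unfold(X, n).shape for n in range(X.ndim)]
print(shapes)
```

Each unfolded matrix keeps all 24 entries of the tensor, so the per-mode subproblems described in the abstract each see the full data, only arranged differently.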

ELFATT: Efficient Linear Fast Attention for Vision Transformers

Jan 10, 2025

Abstract:The attention mechanism is the key to the success of transformers in different machine learning tasks. However, the quadratic complexity of the vanilla softmax-based attention mechanism with respect to the sequence length becomes the major bottleneck when it is applied to long-sequence tasks, such as vision tasks. Although various efficient linear attention mechanisms have been proposed, they need to sacrifice performance to achieve high efficiency. Moreover, memory-efficient methods, such as FlashAttention-1-3, still have quadratic computation complexity, which can be further improved. In this paper, we propose a novel efficient linear fast attention (ELFATT) mechanism to achieve low memory input/output operations, linear computational complexity, and high performance at the same time. ELFATT offers 4-7x speedups over the vanilla softmax-based attention mechanism in high-resolution vision tasks without losing performance. ELFATT is FlashAttention friendly. Using FlashAttention-2 acceleration, ELFATT still offers 2-3x speedups over the vanilla softmax-based attention mechanism on high-resolution vision tasks without losing performance. Even on edge GPUs, ELFATT still offers 1.6x to 2.0x speedups compared to state-of-the-art attention mechanisms in various power modes from 5W to 60W. The code of ELFATT is available at https://github.com/Alicewithrabbit/ELFATT.
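
To illustrate why linear attention avoids the quadratic cost mentioned above, here is a minimal sketch of generic kernelized linear attention in NumPy. It is not the ELFATT mechanism itself, whose details the abstract does not give; the feature map `elu(x) + 1` and all function names are assumptions chosen for illustration.

```python
import numpy as np

def feature_map(x):
    # elu(x) + 1: a positive feature map (an illustrative choice).
    return np.where(x > 0, x + 1.0, np.exp(x))

def linear_attention(Q, K, V):
    """Cost linear in sequence length n: never forms the n x n matrix."""
    q, k = feature_map(Q), feature_map(K)
    kv = k.T @ V               # (d, d_v) summary of keys and values
    z = q @ k.sum(axis=0)      # (n,) per-query normalizer
    return (q @ kv) / z[:, None]

def quadratic_reference(Q, K, V):
    """Same result computed through the explicit n x n attention matrix."""
    q, k = feature_map(Q), feature_map(K)
    A = q @ k.T
    return (A / A.sum(axis=1, keepdims=True)) @ V

rng = np.random.default_rng(0)
Q, K, V = (rng.standard_normal((6, 4)) for _ in range(3))
out_linear = linear_attention(Q, K, V)
out_reference = quadratic_reference(Q, K, V)
print(np.allclose(out_linear, out_reference))
```

The two functions agree because matrix multiplication is associative: grouping the product as `q @ (k.T @ V)` replaces the `n x n` intermediate with a `d x d_v` one.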

Multi-View Variational Autoencoder for Missing Value Imputation in Untargeted Metabolomics

Oct 12, 2023

Abstract:Background: Missing data is a common challenge in mass spectrometry-based metabolomics, which can lead to biased and incomplete analyses. The integration of whole-genome sequencing (WGS) data with metabolomics data has emerged as a promising approach to enhance the accuracy of data imputation in metabolomics studies. Method: In this study, we propose a novel method that leverages the information from WGS data and reference metabolites to impute unknown metabolites. Our approach utilizes a multi-view variational autoencoder to jointly model the burden score, polygenic risk score (PGS), and linkage disequilibrium (LD) pruned single nucleotide polymorphisms (SNPs) for feature extraction and missing metabolomics data imputation. By learning the latent representations of both omics data, our method can effectively impute missing metabolomics values based on genomic information. Results: We evaluate the performance of our method on empirical metabolomics datasets with missing values and demonstrate its superiority compared to conventional imputation techniques. Using 35 template metabolite-derived burden scores, PGS, and LD-pruned SNPs, the proposed method achieved r2-scores > 0.01 for 71.55% of metabolites. Conclusion: The integration of WGS data in metabolomics imputation not only improves data completeness but also enhances downstream analyses, paving the way for more comprehensive and accurate investigations of metabolic pathways and disease associations. Our findings offer valuable insights into the potential benefits of utilizing WGS data for metabolomics data imputation and underscore the importance of leveraging multi-modal data integration in precision medicine research.

Physics Inspired Hybrid Attention for SAR Target Recognition

Sep 27, 2023

Abstract:There has been a recent emphasis on integrating physical models and deep neural networks (DNNs) for SAR target recognition, to improve performance and achieve a higher level of physical interpretability. The attributed scattering center (ASC) parameters have garnered the most interest, being considered as additional input data or features for fusion in most methods. However, the performance greatly depends on the ASC optimization result, and the fusion strategy is not adaptable to different types of physical information. Meanwhile, the current evaluation scheme is inadequate to assess the model's robustness and generalizability. Thus, we propose a physics inspired hybrid attention (PIHA) mechanism and the once-for-all (OFA) evaluation protocol to address the above issues. PIHA leverages the high-level semantics of physical information to activate and guide the feature group aware of the local semantics of the target, so as to re-weight the feature importance based on prior knowledge. It is flexible and generally applicable to various physical models, and can be integrated into arbitrary DNNs without modifying the original architecture. The experiments involve a rigorous assessment using the proposed OFA, which entails training and validating a model on either sufficient or limited data and evaluating on multiple test sets with different data distributions. Our method outperforms other state-of-the-art approaches in 12 test scenarios with the same ASC parameters. Moreover, we analyze the working mechanism of PIHA and evaluate various PIHA-enabled DNNs. The experiments also show PIHA is effective for different physical information. The source code together with the adopted physical information is available at https://github.com/XAI4SAR.

Leveraging Graph-based Cross-modal Information Fusion for Neural Sign Language Translation

Nov 01, 2022

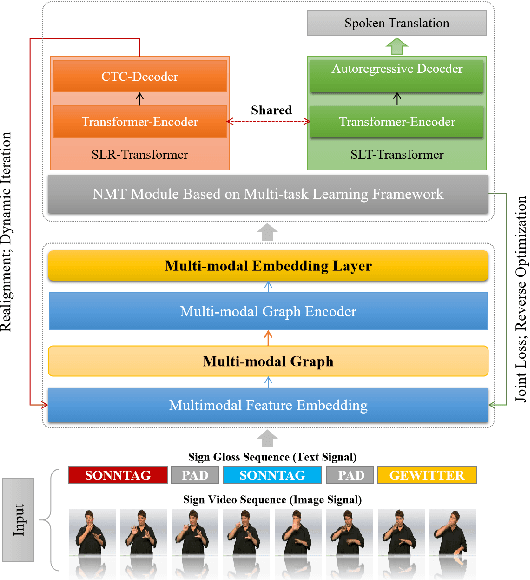

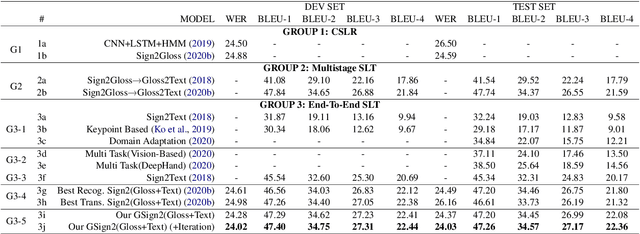

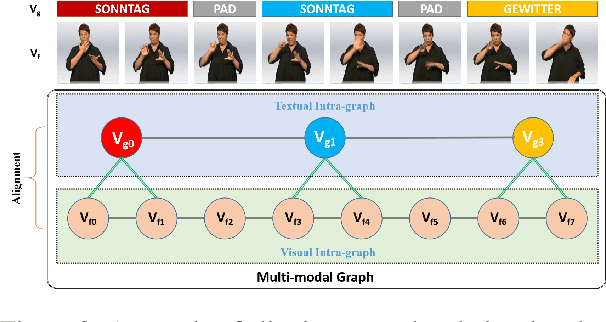

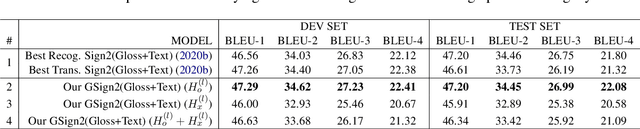

Abstract:Sign Language (SL), as the mother tongue of the deaf community, is a special visual language that most hearing people cannot understand. In recent years, neural Sign Language Translation (SLT), as a possible way to bridge the communication gap between deaf and hearing people, has attracted widespread academic attention. We found that the current mainstream end-to-end neural SLT models, which try to learn language knowledge in a weakly supervised manner, could not mine enough semantic information under low-resource conditions. Therefore, we propose to introduce additional word-level semantic knowledge of sign language linguistics to assist in improving current end-to-end neural SLT models. Concretely, we propose a novel neural SLT model with multi-modal feature fusion based on the dynamic graph, in which the cross-modal information, i.e. text and video, is first assembled as a dynamic graph according to their correlation, and then the graph is processed by a multi-modal graph encoder to generate the multi-modal embeddings for further usage in the subsequent neural translation models. To the best of our knowledge, we are the first to introduce graph neural networks, for fusing multi-modal information, into neural sign language translation models. Moreover, we conducted experiments on the publicly available, popular SLT dataset RWTH-PHOENIX-Weather-2014T, and the quantitative results show that our method can improve the model.

Graph Neural Network and Superpixel Based Brain Tissue Segmentation (Corrected Version)

Sep 21, 2022

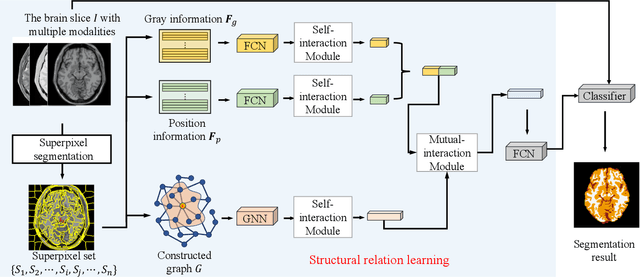

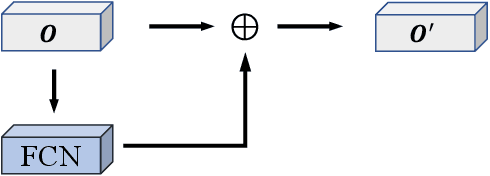

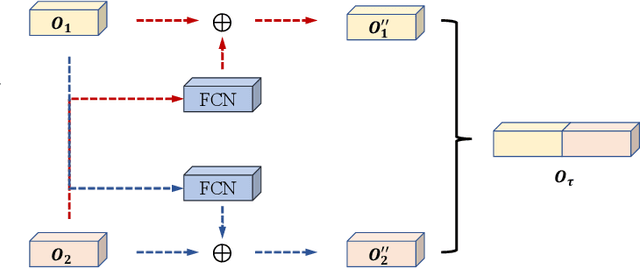

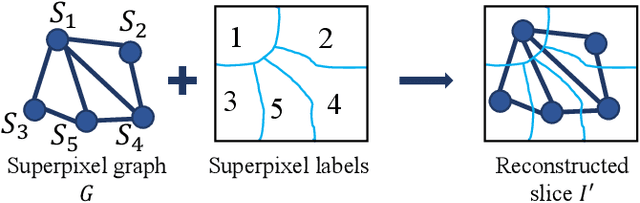

Abstract:Convolutional neural networks (CNNs) are usually used as a backbone to design methods in biomedical image segmentation. However, the limited receptive field and the large number of parameters limit the performance of these methods. In this paper, we propose a graph neural network (GNN) based method named GNN-SEG for the segmentation of brain tissues. Unlike conventional CNN-based methods, GNN-SEG takes superpixels as basic processing units and uses GNNs to learn the structure of brain tissues. Besides, inspired by the interaction mechanism in biological vision systems, we propose two kinds of interaction modules for feature enhancement and integration. In the experiments, we compared GNN-SEG with state-of-the-art CNN-based methods on four datasets of brain magnetic resonance images. The experimental results show the superiority of GNN-SEG.

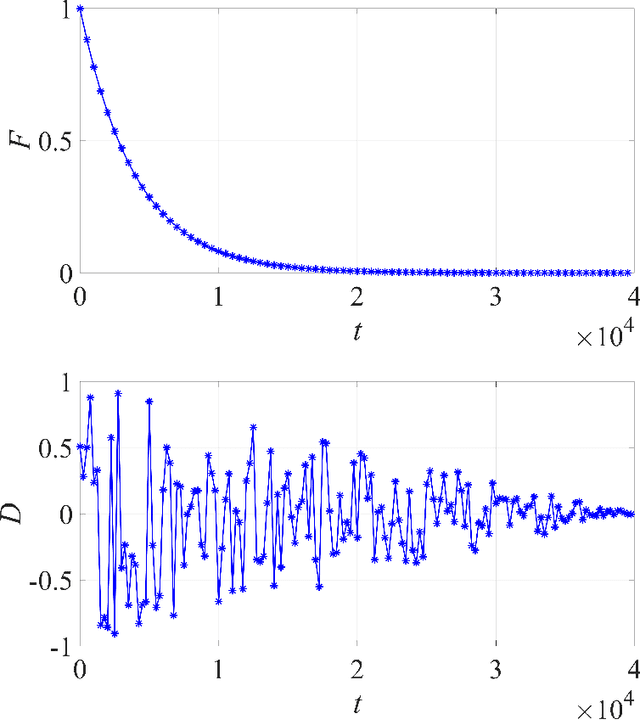

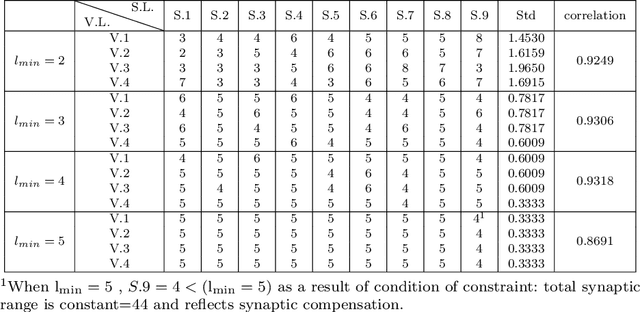

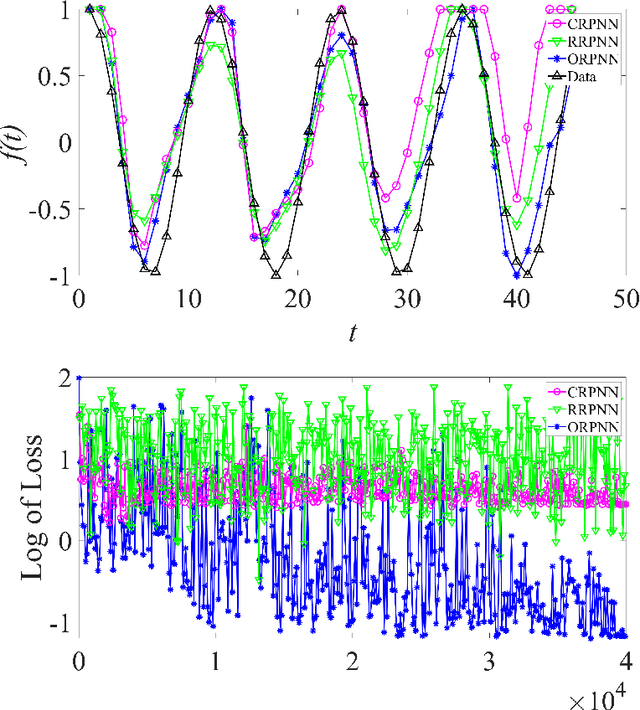

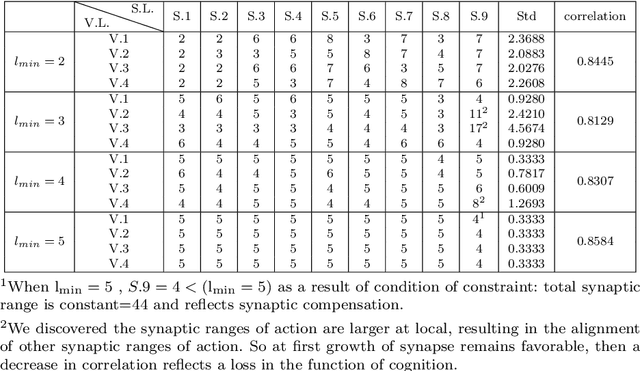

Plasticity Neural Network Based on Astrocytic Influence at Critical Periods, Synaptic Competition and Compensation by Current and Mnemonic Brain Plasticity and Synapse Formation

Mar 19, 2022

Abstract:Based on the RNN frame, we accomplished the model construction, formula derivation and algorithm testing for PNN. We elucidated the mechanism of PNN based on the latest MIT research on synaptic compensation, and also grounded our study on the findings of the Stanford research, which suggested that synapse formation is important for competition in dendrite morphogenesis. The influence of astrocytic impacts on brain plasticity and synapse formation is an important mechanism of our Neural Network at critical periods or the end of critical periods. In the model for critical periods, the hypothesis is that the best brain plasticity so far affects current brain plasticity and the best synapse formation so far affects current synapse formation. Furthermore, PNN takes into account the mnemonic gradient informational synapse formation, and brain plasticity and synapse formation change frame of NN is a new method of Deep Learning. The question we proposed is whether the promotion of neuroscience and brain cognition was achieved by model construction, formula derivation or algorithm testing. We resorted to the Artificial Neural Network (ANN), evolutionary computation and other numerical methods for hypotheses, possible explanations and rules, rather than only biological tests which include cutting-edge imaging and genetic tools. This approach also avoids the ethical issues of animal testing.

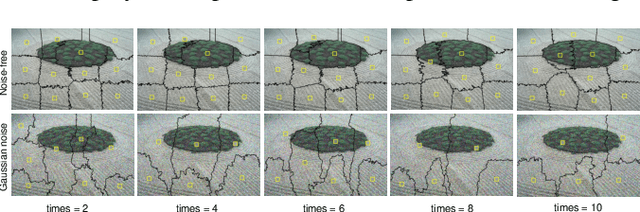

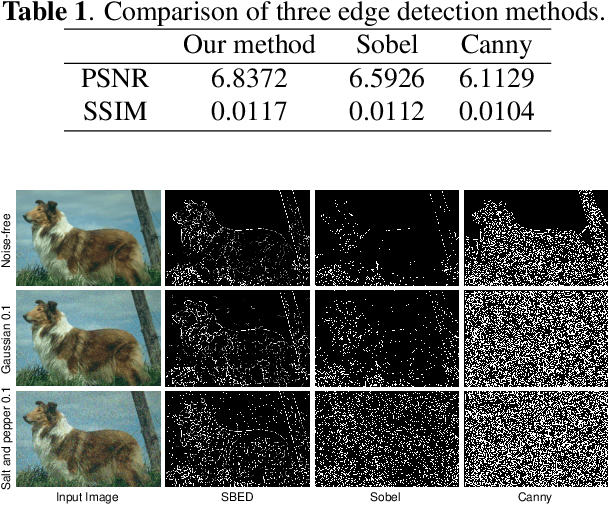

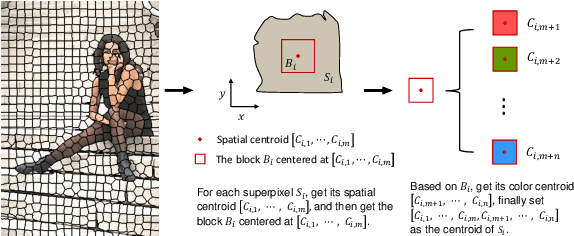

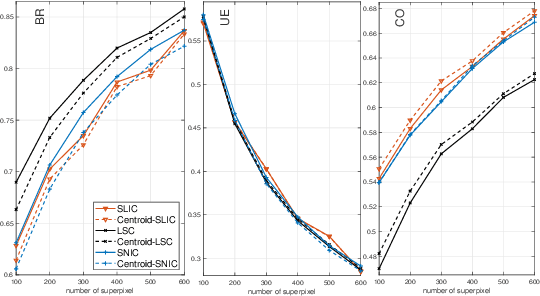

A novel centroid update approach for clustering-based superpixel method and superpixel-based edge detection

Oct 18, 2019

Abstract:Superpixels are widely used in image processing. Among the methods for superpixel generation, clustering-based methods offer both high speed and good performance. However, most clustering-based superpixel methods are sensitive to noise. To address this problem, in this paper, we first analyze the features of noise. Then, according to the statistical features of noise, we propose a novel centroid updating approach to enhance the robustness of clustering-based superpixel methods. Besides, we propose a novel superpixel-based edge detection method. The experiments on the BSD500 dataset show that our approach can significantly enhance the performance of clustering-based superpixel methods in noisy environments. Moreover, we also show that our proposed edge detection method outperforms other classical methods.

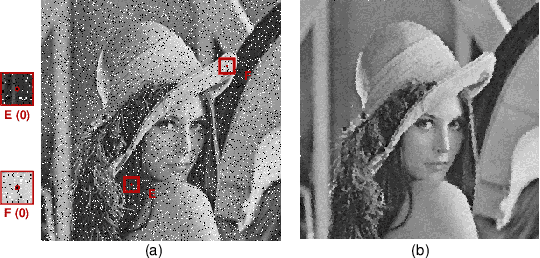

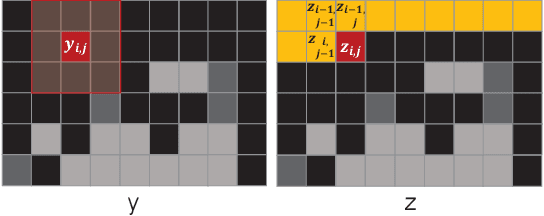

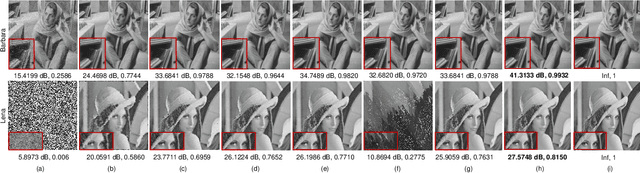

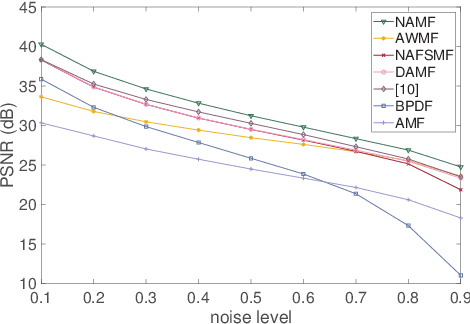

NAMF: A Non-local Adaptive Mean Filter for Salt-and-Pepper Noise Removal

Oct 17, 2019

Abstract:In this paper, a non-local adaptive mean filter (NAMF) is proposed, which can eliminate all levels of salt-and-pepper (SAP) noise. NAMF can be divided into two stages: (1) SAP noise detection; (2) SAP noise elimination. For a given pixel, we first compare it with the maximum and minimum gray values of the noisy image; if it equals either, we use a window of adaptive size to further determine whether it is noisy, and noiseless pixels are left unchanged. Second, each noisy pixel is replaced by a combination of its neighboring pixels. Finally, we use a SAP noise based non-local mean filter to further restore it. Our experimental results show that NAMF outperforms state-of-the-art methods in terms of image restoration quality at all SAP noise levels.
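
The detection and replacement steps described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the NAMF algorithm: it flags every pixel equal to the image minimum or maximum as noisy (omitting the paper's adaptive-window verification), replaces it with the plain mean of unflagged pixels in a growing window, and leaves out the non-local mean stage entirely. The function names and the `max_radius` parameter are hypothetical.

```python
import numpy as np

def candidate_mask(img):
    # Pixels equal to the extreme gray values are treated as noise candidates.
    return (img == img.min()) | (img == img.max())

def adaptive_mean_fill(img, max_radius=3):
    """Replace each candidate with the mean of unflagged neighbors.

    The window grows from radius 1 to `max_radius` until it contains at
    least one unflagged pixel; candidates with none are left as they are.
    """
    mask = candidate_mask(img)
    out = img.astype(float).copy()
    h, w = img.shape
    for y, x in zip(*np.nonzero(mask)):
        for r in range(1, max_radius + 1):
            y0, y1 = max(0, y - r), min(h, y + r + 1)
            x0, x1 = max(0, x - r), min(w, x + r + 1)
            clean = ~mask[y0:y1, x0:x1]
            if clean.any():
                out[y, x] = img[y0:y1, x0:x1][clean].mean()
                break
    return out

# A flat gray image with one "salt" and one "pepper" pixel.
img = np.full((5, 5), 100, dtype=np.uint8)
img[2, 2] = 255
img[0, 0] = 0
restored = adaptive_mean_fill(img)
print(restored[2, 2], restored[0, 0])
```

On a noise-free image this simplified check would also flag genuine extreme pixels, which is the situation the second, window-based check in the abstract is meant to handle.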
