Zhongling Huang

Spatial-Spectral Chromatic Coding of Interference Signatures in SAR Imagery: Signal Modeling and Physical-Visual Interpretation

Sep 10, 2025

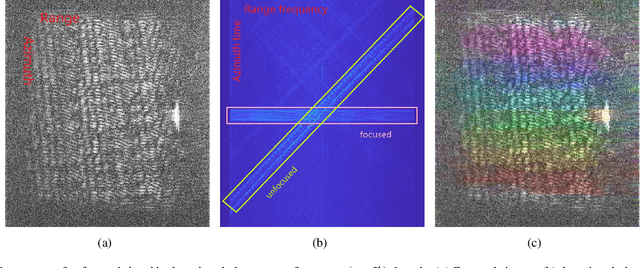

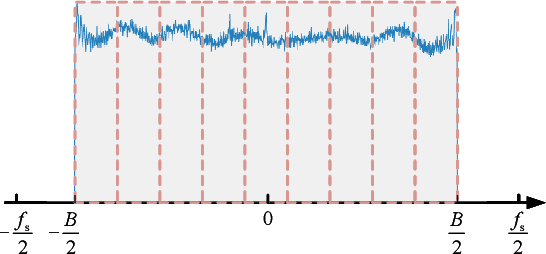

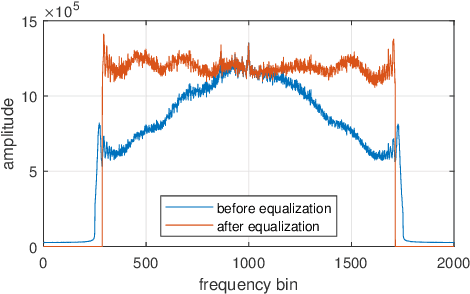

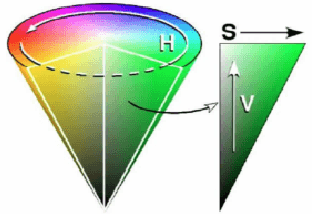

Abstract: Synthetic Aperture Radar (SAR) images are conventionally visualized as grayscale amplitude representations, which often fail to explicitly reveal interference characteristics caused by external radio emitters and unfocused signals. This paper proposes a novel spatial-spectral chromatic coding method for visual analysis of interference patterns in single-look complex (SLC) SAR imagery. The method first generates a series of spatial-spectral images via spectral subband decomposition that preserve both spatial structures and spectral signatures. These images are subsequently chromatically coded into a color representation through RGB/HSV dual-space coding with a set of specifically designed color palettes. This method intrinsically encodes the spatial-spectral properties of interference into visually discernible patterns, enabling rapid visual interpretation without additional processing. To facilitate physical interpretation, mathematical models are established to theoretically analyze the physical mechanisms of responses to various interference types. Experiments using real datasets demonstrate that the method effectively highlights interference regions and unfocused echo or signal responses (e.g., blurring, ambiguities, and moving target effects), providing analysts with a practical tool for visual interpretation, quality assessment, and data diagnosis in SAR imagery.
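
The subband decomposition step described in this abstract can be illustrated with a minimal sketch. It is a simplification under stated assumptions, not the paper's method: the function name `subband_rgb`, the choice of three equal-width subbands, and the direct mapping of subband amplitudes to R, G, and B channels are all hypothetical, and the paper's RGB/HSV dual-space coding and designed palettes are not reproduced here.

```python
import numpy as np

def subband_rgb(slc, n_bands=3, axis=1):
    """Split the spectrum of a complex SLC image into equal-width subbands
    along `axis` and stack the subband amplitude images as color channels.

    Hypothetical helper for illustration; not the paper's coding scheme.
    """
    n = slc.shape[axis]
    # Centered spectrum along the chosen axis (range or azimuth).
    spec = np.fft.fftshift(np.fft.fft(slc, axis=axis), axes=axis)
    # Integer bin edges of the subbands in the centered spectrum.
    edges = np.linspace(0, n, n_bands + 1).astype(int)
    # Shape used to broadcast a 1-D mask along `axis`.
    mask_shape = [1] * slc.ndim
    mask_shape[axis] = n
    channels = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        mask = np.zeros(n)
        mask[lo:hi] = 1.0  # rectangular window selecting one subband
        sub = spec * mask.reshape(mask_shape)
        # Back to the image domain: one reduced-bandwidth look.
        look = np.fft.ifft(np.fft.ifftshift(sub, axes=axis), axis=axis)
        channels.append(np.abs(look))
    rgb = np.stack(channels, axis=-1)
    peak = rgb.max()
    # Joint normalization keeps the relative energy between subbands.
    return rgb / peak if peak > 0 else rgb

# A narrowband tone (a crude stand-in for interference) occupies a single
# subband and therefore shows up as a saturated color instead of gray.
x = np.arange(64)
tone = np.exp(2j * np.pi * 2 * x / 64)[None, :].repeat(4, axis=0)
rgb = subband_rgb(tone)
```

In this sketch a signal whose energy is spread evenly over the spectrum yields similar values in all channels and appears near gray, while a spectrally localized signal is colored by the subband it falls into.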

$\mathbf{\Phi}$-GAN: Physics-Inspired GAN for Generating SAR Images Under Limited Data

Mar 04, 2025

Abstract: Approaches for improving generative adversarial network (GAN) training with few samples have been explored for natural images. However, these methods have limited effectiveness for synthetic aperture radar (SAR) images, as they do not account for the unique electromagnetic scattering properties of SAR. To remedy this, we propose a physics-inspired regularization method dubbed $\Phi$-GAN, which incorporates the ideal point scattering center (PSC) model of SAR with two physical consistency losses. The PSC model approximates SAR targets using physical parameters, ensuring that $\Phi$-GAN generates SAR images consistent with real physical properties while preventing discriminator overfitting by focusing on PSC-based decision cues. To embed the PSC model into GANs for end-to-end training, we introduce a physics-inspired neural module capable of estimating the physical parameters of SAR targets efficiently. This module retains the interpretability of the physical model and can be trained with limited data. We propose two physical loss functions: one for the generator, guiding it to produce SAR images with physical parameters consistent with real ones, and one for the discriminator, enhancing its robustness by basing decisions on PSC attributes. We evaluate $\Phi$-GAN across several conditional GAN (cGAN) models, demonstrating state-of-the-art performance in data-scarce scenarios on three SAR image datasets.

PolSAM: Polarimetric Scattering Mechanism Informed Segment Anything Model

Dec 17, 2024

Abstract: PolSAR data presents unique challenges due to its rich and complex characteristics. Existing data representations, such as complex-valued data, polarimetric features, and amplitude images, are widely used. However, these formats often face issues related to usability, interpretability, and data integrity. Most feature extraction networks for PolSAR are small, limiting their ability to capture features effectively. To address these issues, we propose the Polarimetric Scattering Mechanism-Informed SAM (PolSAM), an enhanced Segment Anything Model (SAM) that integrates domain-specific scattering characteristics and a novel prompt generation strategy. PolSAM introduces Microwave Vision Data (MVD), a lightweight and interpretable data representation derived from polarimetric decomposition and semantic correlations. We propose two key components: the Feature-Level Fusion Prompt (FFP), which fuses visual tokens from pseudo-colored SAR images and MVD to address modality incompatibility in the frozen SAM encoder, and the Semantic-Level Fusion Prompt (SFP), which refines sparse and dense segmentation prompts using semantic information. Experimental results on the PhySAR-Seg datasets demonstrate that PolSAM significantly outperforms existing SAM-based and multimodal fusion models, improving segmentation accuracy, reducing data storage, and accelerating inference time. The source code and datasets will be made publicly available at https://github.com/XAI4SAR/PolSAM.

Physics-Guided Detector for SAR Airplanes

Nov 19, 2024

Abstract: The dispersed structure distributions (discreteness) and variant scattering characteristics (variability) of SAR airplane targets pose special challenges for object detection and recognition. Current deep learning-based detectors struggle to distinguish fine-grained SAR airplanes against complex backgrounds. To address this, we propose a novel physics-guided detector (PGD) learning paradigm for SAR airplanes that comprehensively investigates their discreteness and variability to improve detection performance. It is a general learning paradigm that can be extended to different existing deep learning-based detectors with "backbone-neck-head" architectures. The main contributions of PGD include physics-guided self-supervised learning, feature enhancement, and instance perception, denoted as PGSSL, PGFE, and PGIP, respectively. PGSSL aims to construct a self-supervised learning task based on a wide range of SAR airplane targets that encodes the prior knowledge of various discrete structure distributions into the embedded space. Then, PGFE enhances the multi-scale feature representation of a detector, guided by the physics-aware information learned from PGSSL. PGIP is constructed at the detection head to learn the refined and dominant scattering point of each SAR airplane instance, thus alleviating the interference from the complex background. We propose two implementations, denoted as PGD and PGD-Lite, and apply them to various existing detectors with different backbones and detection heads. The experiments demonstrate the flexibility and effectiveness of the proposed PGD, which improves existing detectors on SAR airplane detection with fine-grained classification (by up to 3.1% mAP) and achieves state-of-the-art performance (90.7% mAP) on the SAR-AIRcraft-1.0 dataset. The project is open-source at https://github.com/XAI4SAR/PGD.

Generative Artificial Intelligence Meets Synthetic Aperture Radar: A Survey

Nov 05, 2024

Abstract: SAR images possess unique attributes that make them challenging for both human observers and vision AI models to interpret, owing to their electromagnetic characteristics. One of the primary obstacles to interpreting SAR images is the data itself, in terms of both quantity and quality. These challenges can be addressed using generative AI technologies. Generative AI, often known as GenAI, is a powerful technology in the field of artificial intelligence that has gained significant attention. Its advancement has made it possible to create texts, photorealistic pictures, videos, and material in various other modalities. This paper aims to comprehensively investigate the intersection of GenAI and SAR. First, we illustrate the common data generation-based applications in the SAR field and compare them with computer vision tasks, analyzing their similarities, differences, and general challenges. Then, the latest GenAI models are systematically reviewed, including various basic models and their variations targeting the general challenges. Additionally, the corresponding applications in the SAR domain are included. Specifically, we summarize the physical model-based simulation approaches for SAR, and analyze the hybrid modeling methods that combine GenAI and interpretable models. The evaluation methods that have been or could be applied to SAR are also explored. Finally, the potential challenges and future prospects are discussed. To the best of our knowledge, this survey is the first exhaustive examination of the intersection of SAR and GenAI, encompassing a wide range of topics, including deep neural networks, physical models, computer vision, and SAR images. The resources of this survey are open-source at https://github.com/XAI4SAR/GenAIxSAR.

Guidance Disentanglement Network for Optics-Guided Thermal UAV Image Super-Resolution

Oct 27, 2024

Abstract: Optics-guided Thermal UAV image Super-Resolution (OTUAV-SR) has attracted significant research interest due to its potential applications in security inspection, agricultural measurement, and object detection. Existing methods often employ a single guidance model to generate guidance features from optical images to assist thermal UAV image super-resolution. However, a single guidance model struggles to generate effective guidance features under both favorable and adverse conditions in UAV scenarios, thus limiting the performance of OTUAV-SR. To address this issue, we propose a novel Guidance Disentanglement network (GDNet), which disentangles the optical image representation according to typical UAV scenario attributes to form guidance features under both favorable and adverse conditions, for robust OTUAV-SR. Moreover, we design an attribute-aware fusion module to combine all attribute-based optical guidance features, which could form a more discriminative representation and fit the attribute-agnostic guidance process. To facilitate OTUAV-SR research in complex UAV scenarios, we introduce VGTSR2.0, a large-scale benchmark dataset containing 3,500 aligned optical-thermal image pairs captured under diverse conditions and scenes. Extensive experiments on VGTSR2.0 demonstrate that GDNet significantly improves OTUAV-SR performance over state-of-the-art methods, especially in the challenging low-light and foggy environments commonly encountered in UAV scenarios. The dataset and code will be publicly available at https://github.com/Jocelyney/GDNet.

X-Fake: Juggling Utility Evaluation and Explanation of Simulated SAR Images

Jul 28, 2024

Abstract: SAR image simulation has attracted much attention due to its great potential to supplement the scarce training data for deep learning algorithms. Consequently, evaluating the quality of the simulated SAR image is crucial for practical applications. The current literature primarily uses image quality assessment (IQA) techniques for evaluation that rely on human observers' perceptions. However, because of the unique imaging mechanism of SAR, these techniques may produce evaluation results that are not entirely valid. The distribution inconsistency between real and simulated data is the main obstacle that influences the utility of simulated SAR images. To this end, we propose, for the first time, a novel trustworthy utility evaluation framework with counterfactual explanation for simulated SAR images, denoted as X-Fake. It unifies a probabilistic evaluator and a causal explainer to achieve a trustworthy utility assessment. We construct the evaluator using a probabilistic Bayesian deep model to learn the posterior distribution, conditioned on real data. Quantitatively, the predicted uncertainty of simulated data can reflect the distribution discrepancy. We build the causal explainer with an introspective variational auto-encoder (IntroVAE) to generate high-resolution counterfactuals. The latent code of IntroVAE is finally optimized with evaluation indicators and prior information to generate the counterfactual explanation, thus revealing the inauthentic details of simulated data explicitly. The proposed framework is validated on four simulated SAR image datasets obtained from electromagnetic models and generative artificial intelligence approaches. The results demonstrate that the proposed X-Fake framework outperforms other IQA methods in terms of utility. Furthermore, the results illustrate that the generated counterfactual explanations are trustworthy and can further improve the data utility in applications.

Interpretable attributed scattering center extracted via deep unfolding

May 15, 2024

Abstract: Most existing sparse representation-based approaches for attributed scattering center (ASC) extraction adopt traditional iterative optimization algorithms, which suffer from lengthy computation times and limited precision. This paper presents a solution by introducing an interpretable network that can effectively and rapidly extract ASC via deep unfolding. Initially, we create a dictionary containing reliable prior knowledge and apply it to the iterative shrinkage-thresholding algorithm (ISTA). Then, we unfold ISTA into a neural network, employing it to autonomously and precisely optimize the hyperparameters. The interpretability of physics is retained by applying a dictionary with physical meaning. The experiments are conducted on multiple test sets with diverse data distributions and demonstrate the superior performance and generalizability of our method.
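
For readers unfamiliar with ISTA, the iteration that this abstract unfolds into network layers can be sketched as follows. This is the textbook algorithm for the problem min_x 0.5*||y - Dx||^2 + lam*||x||_1, not the paper's network: the names `ista` and `soft_threshold`, the parameter `lam`, and the toy identity dictionary are assumptions for illustration, and a real ASC dictionary would be built from the physical scattering model.

```python
import numpy as np

def soft_threshold(v, tau):
    """Shrink the magnitude of each entry by tau (works for complex input)."""
    mag = np.abs(v)
    scale = np.maximum(1.0 - tau / np.maximum(mag, 1e-12), 0.0)
    return v * scale

def ista(D, y, lam=0.1, n_iter=200):
    """Textbook ISTA for 0.5*||y - D x||^2 + lam*||x||_1.

    D is the dictionary (columns are atoms), y the observation.
    """
    # Lipschitz constant of the gradient: squared spectral norm of D.
    L = np.linalg.norm(D, 2) ** 2
    x = np.zeros(D.shape[1], dtype=np.result_type(D, y))
    for _ in range(n_iter):
        grad = D.conj().T @ (D @ x - y)              # gradient of the data term
        x = soft_threshold(x - grad / L, lam / L)    # proximal (shrinkage) step
    return x

# Toy check with an identity dictionary, where the minimizer is simply
# the soft-thresholded observation.
y = np.array([3.0, -0.05, 0.0, -2.0])
x_hat = ista(np.eye(4), y, lam=0.1)
```

In a deep-unfolding design, each loop iteration typically becomes one network layer whose step size and threshold are learned from data instead of being fixed by `L` and `lam`; whether the paper parameterizes its layers exactly this way is not stated in the abstract.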

Physics Inspired Hybrid Attention for SAR Target Recognition

Sep 27, 2023

Abstract: There has been a recent emphasis on integrating physical models and deep neural networks (DNNs) for SAR target recognition, to improve performance and achieve a higher level of physical interpretability. The attributed scattering center (ASC) parameters have garnered the most interest, being used as additional input data or as features for fusion in most methods. However, the performance greatly depends on the ASC optimization result, and the fusion strategy is not adaptable to different types of physical information. Meanwhile, the current evaluation scheme is inadequate to assess the model's robustness and generalizability. Thus, we propose a physics inspired hybrid attention (PIHA) mechanism and the once-for-all (OFA) evaluation protocol to address the above issues. PIHA leverages the high-level semantics of physical information to activate and guide the feature groups that are aware of the local semantics of the target, so as to re-weight the feature importance based on prior knowledge. It is flexible and generally applicable to various physical models, and can be integrated into arbitrary DNNs without modifying the original architecture. The experiments involve a rigorous assessment using the proposed OFA, which entails training and validating a model on either sufficient or limited data and evaluating on multiple test sets with different data distributions. Our method outperforms other state-of-the-art approaches in 12 test scenarios with the same ASC parameters. Moreover, we analyze the working mechanism of PIHA and evaluate various PIHA-enabled DNNs. The experiments also show that PIHA is effective for different physical information. The source code together with the adopted physical information is available at https://github.com/XAI4SAR.

Explainable, Physics Aware, Trustworthy AI Paradigm Shift for Synthetic Aperture Radar

Jan 09, 2023

Abstract: The recognition or understanding of the scenes observed with a SAR system requires a broader range of cues, beyond the spatial context. These encompass but are not limited to: imaging geometry, imaging mode, properties of the Fourier spectrum of the images, and the behavior of the polarimetric signatures. In this paper, we propose a change of paradigm for explainability in data science for the case of Synthetic Aperture Radar (SAR) data to ground explainable AI for SAR. It aims to use explainable data transformations based on well-established models to generate inputs for AI methods, to provide knowledgeable feedback for the training process, and to learn or improve high-complexity unknown or un-formalized models from the data. First, we introduce a representation of the SAR system with physical layers: i) instrument and platform, ii) imaging formation, iii) scattering signatures and objects, that can be integrated with an AI model for hybrid modeling. Next, some illustrative examples are presented to demonstrate how to achieve hybrid modeling for SAR image understanding. The perspective of trustworthy models and supplementary explanations is then discussed. Finally, we conclude that the proposed concept is applicable to the entire class of coherent imaging sensors and other computational imaging systems.
