Andrei Anghel

Cascaded Cross-Modal Transformer for Audio-Textual Classification

Jan 15, 2024

Abstract:Speech classification tasks often require powerful language understanding models to grasp useful features, which becomes problematic when limited training data is available. To attain superior classification performance, we propose to harness the inherent value of multimodal representations by transcribing speech using automatic speech recognition (ASR) models and translating the transcripts into different languages via pretrained translation models. We thus obtain an audio-textual (multimodal) representation for each data sample. Subsequently, we combine language-specific Bidirectional Encoder Representations from Transformers (BERT) with Wav2Vec2.0 audio features via a novel cascaded cross-modal transformer (CCMT). Our model is based on two cascaded transformer blocks. The first one combines text-specific features from distinct languages, while the second one combines acoustic features with multilingual features previously learned by the first transformer block. We employed our system in the Requests Sub-Challenge of the ACM Multimedia 2023 Computational Paralinguistics Challenge. CCMT was declared the winning solution, obtaining an unweighted average recall (UAR) of 65.41% and 85.87% for complaint and request detection, respectively. Moreover, we applied our framework on the Speech Commands v2 and HarperValleyBank dialog data sets, surpassing previous studies reporting results on these benchmarks. Our code is freely available for download at: https://github.com/ristea/ccmt.
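
The unweighted average recall (UAR) quoted above is the mean of the per-class recalls, so a rare class weighs as much as a frequent one. The sketch below illustrates the metric only; the function name and the toy labels are hypothetical and are not taken from the paper or its repository.

```python
from collections import defaultdict


def unweighted_average_recall(y_true, y_pred):
    """Mean of per-class recalls; each class counts equally, whatever its size."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        totals[t] += 1
        if t == p:
            hits[t] += 1
    recalls = [hits[c] / totals[c] for c in totals]
    return sum(recalls) / len(recalls)


# Hypothetical toy labels: class 1 has recall 3/4, class 0 has recall 1/2.
y_true = [1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1]
uar = unweighted_average_recall(y_true, y_pred)
print(f"UAR = {uar:.3f}")
```

On this toy input, plain accuracy would be 4/6, while UAR averages the two recalls and is therefore lower, which is why the metric is preferred for imbalanced classes.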

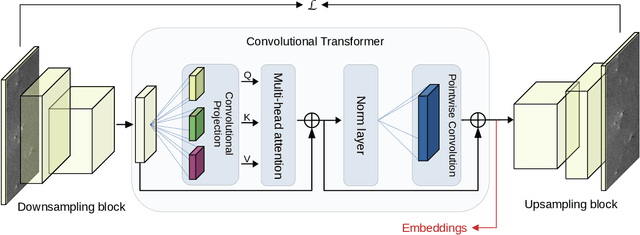

Sea Ice Segmentation From SAR Data by Convolutional Transformer Networks

Jun 13, 2023

Abstract:Sea ice is a crucial component of the Earth's climate system and is highly sensitive to changes in temperature and atmospheric conditions. Accurate and timely measurement of sea ice parameters is important for understanding and predicting the impacts of climate change. Nevertheless, the amount of satellite data acquired over ice areas is huge, making subjective measurements ineffective. Therefore, automated algorithms must be used in order to fully exploit the continuous data feeds coming from satellites. In this paper, we present a novel approach for sea ice segmentation based on SAR satellite imagery using hybrid convolutional transformer (ConvTr) networks. We show that our approach outperforms classical convolutional networks, while being considerably more efficient than pure transformer models. ConvTr obtained a mean intersection over union (mIoU) of 63.68% on the AI4Arctic data set, with an inference time of 120 ms for a 400 x 400 square km product.
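
For readers unfamiliar with the mIoU metric mentioned above, the following is a minimal sketch of how it can be computed for a pair of label maps. The function name, the toy arrays, and the choice to skip classes absent from both maps are assumptions made for illustration; the evaluation protocol used on AI4Arctic may differ (for example, by accumulating counts over the whole data set).

```python
import numpy as np


def mean_iou(pred, target, num_classes):
    """Average intersection-over-union across classes present in pred or target."""
    ious = []
    for c in range(num_classes):
        p = pred == c
        t = target == c
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue  # class absent from both maps: skipped (an assumption)
        ious.append(np.logical_and(p, t).sum() / union)
    return float(np.mean(ious))


# Hypothetical 2 x 3 label maps with three classes.
target = np.array([[0, 0, 1], [1, 1, 2]])
pred = np.array([[0, 1, 1], [1, 1, 2]])
print(mean_iou(pred, target, num_classes=3))
```

Here the per-class IoU values are 1/2, 3/4 and 1, so a single mislabeled pixel lowers two classes at once.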

Explainable, Physics Aware, Trustworthy AI Paradigm Shift for Synthetic Aperture Radar

Jan 09, 2023

Abstract:The recognition or understanding of the scenes observed with a SAR system requires a broader range of cues, beyond the spatial context. These encompass but are not limited to: imaging geometry, imaging mode, properties of the Fourier spectrum of the images or the behavior of the polarimetric signatures. In this paper, we propose a change of paradigm for explainability in data science for the case of Synthetic Aperture Radar (SAR) data to ground the explainable AI for SAR. It aims to use explainable data transformations based on well-established models to generate inputs for AI methods, to provide knowledgeable feedback for the training process, and to learn or improve high-complexity unknown or un-formalized models from the data. First, we introduce a representation of the SAR system with physical layers: i) instrument and platform, ii) imaging formation, iii) scattering signatures and objects, that can be integrated with an AI model for hybrid modeling. Next, some illustrative examples are presented to demonstrate how to achieve hybrid modeling for SAR image understanding. The perspective of a trustworthy model and supplementary explanations are discussed later. Finally, we draw the conclusion that the proposed concept is applicable to the entire class of coherent imaging sensors and other computational imaging systems.

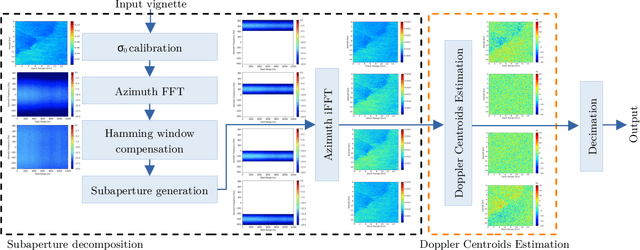

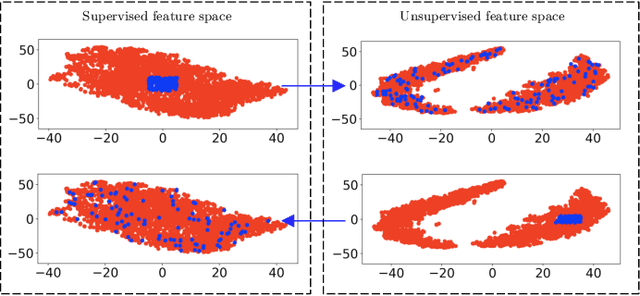

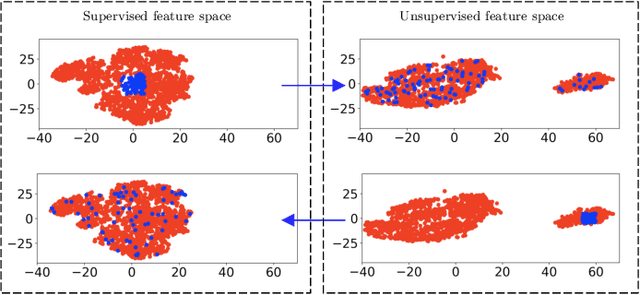

Guided Unsupervised Learning by Subaperture Decomposition for Ocean SAR Image Retrieval

Sep 29, 2022

Abstract:Spaceborne synthetic aperture radar (SAR) can provide accurate images of the ocean surface roughness day-or-night in nearly all weather conditions, being a unique asset for many geophysical applications. Considering the huge amount of data acquired daily by satellites, automated techniques for physical feature extraction are needed. Even if supervised deep learning methods attain state-of-the-art results, they require a great amount of labeled data, which are difficult and excessively expensive to acquire for ocean SAR imagery. To this end, we use the subaperture decomposition (SD) algorithm to enhance the unsupervised learning retrieval on the ocean surface, empowering ocean researchers to search large ocean databases. We empirically prove that SD improves the retrieval precision by over 20% for an unsupervised transformer auto-encoder network. Moreover, we show that SD brings an important performance boost when Doppler centroid images are used as input data, leading the way to new unsupervised physics guided retrieval algorithms.
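
The abstract does not spell out the SD algorithm, so the following is only a generic sketch of the idea: transform a complex image to the frequency domain along one axis, split the spectrum into bands, and transform each band back to obtain one sub-look per band. The function name, the number of looks, the rectangular band masks, and the random test image are all assumptions; a real SAR processor would typically also compensate the spectral weighting before splitting, which is omitted here.

```python
import numpy as np


def subaperture_decomposition(slc, num_looks=3, axis=0):
    """Split the spectrum of a complex image along `axis` into equal bands
    and return one complex sub-look image per band, stacked on a new axis 0."""
    n = slc.shape[axis]
    spectrum = np.fft.fftshift(np.fft.fft(slc, axis=axis), axes=axis)
    edges = np.linspace(0, n, num_looks + 1).astype(int)
    mask_shape = [1] * slc.ndim
    mask_shape[axis] = n
    looks = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        mask = np.zeros(n)
        mask[lo:hi] = 1.0  # keep only this frequency band
        band = spectrum * mask.reshape(mask_shape)
        looks.append(np.fft.ifft(np.fft.ifftshift(band, axes=axis), axis=axis))
    return np.stack(looks)


# Hypothetical complex test image standing in for a SAR vignette.
rng = np.random.default_rng(0)
slc = rng.standard_normal((64, 32)) + 1j * rng.standard_normal((64, 32))
looks = subaperture_decomposition(slc, num_looks=3, axis=0)
print(looks.shape)
```

Because the band masks partition the spectrum, the sub-looks sum back to the original image; each one can then be fed to a network as an extra input channel.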

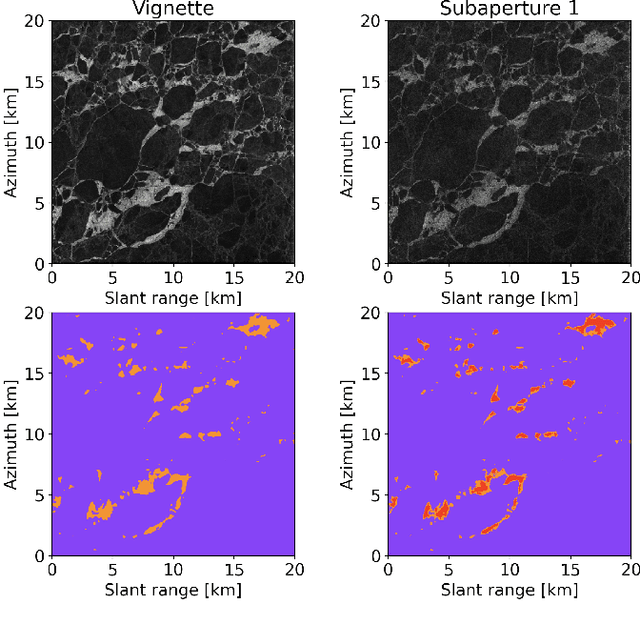

Guided deep learning by subaperture decomposition: ocean patterns from SAR imagery

Apr 09, 2022

Abstract:Spaceborne synthetic aperture radar can provide meter-scale images of the ocean surface roughness day or night in nearly all weather conditions. This makes it a unique asset for many geophysical applications. Sentinel 1 SAR wave mode vignettes have made it possible to capture many important oceanic and atmospheric phenomena since 2014. However, considering the amount of data provided, expanding applications requires a strategy to automatically process and extract geophysical parameters. In this study, we propose to apply subaperture decomposition as a preprocessing stage for SAR deep learning models. Our data-centric approach surpassed the baseline by 0.7, obtaining state-of-the-art results on the TenGeoPSARwv data set. In addition, we empirically showed that subaperture decomposition could bring additional information over the original vignette, by raising the number of clusters for an unsupervised segmentation method. Overall, we encourage the development of data-centric approaches, showing that data preprocessing could bring significant performance improvements over existing deep learning models.

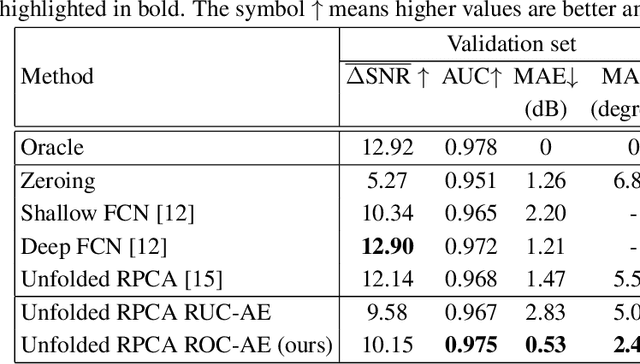

Automotive Radar Interference Mitigation with Unfolded Robust PCA based on Residual Overcomplete Auto-Encoder Blocks

Oct 14, 2020

Abstract:Deep learning methods for automotive radar interference mitigation can successfully estimate the amplitude of targets, but fail to recover the phase of the respective targets. In this paper, we propose an efficient and effective technique based on unfolded robust Principal Component Analysis (RPCA) that is able to estimate both amplitude and phase in the presence of interference. Our contribution consists in introducing residual overcomplete auto-encoder (ROC-AE) blocks into the recurrent architecture of unfolded RPCA, which results in a deeper model that significantly outperforms unfolded RPCA as well as other deep learning models.
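
As background on why RPCA-style methods can retain phase: classical RPCA solvers usually update the sparse component with a soft-thresholding step, and for complex data this step shrinks only the magnitude of each entry while leaving its phase untouched. The sketch below shows that operator in isolation. The function name and threshold are hypothetical, and whether the unfolded layers in the paper use exactly this form is an assumption.

```python
import numpy as np


def complex_soft_threshold(x, tau):
    """Shrink the magnitude of each complex entry by tau (floored at zero),
    keeping its phase unchanged."""
    mag = np.abs(x)
    scale = np.maximum(mag - tau, 0.0) / np.maximum(mag, 1e-12)
    return x * scale


# Hypothetical samples: one strong entry and one weak entry.
x = np.array([3.0 + 4.0j, 0.1 + 0.1j])
out = complex_soft_threshold(x, tau=1.0)
print(out)
```

The strong entry keeps its angle with a magnitude reduced from 5 to 4, while the weak entry, whose magnitude is below the threshold, is set to zero.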

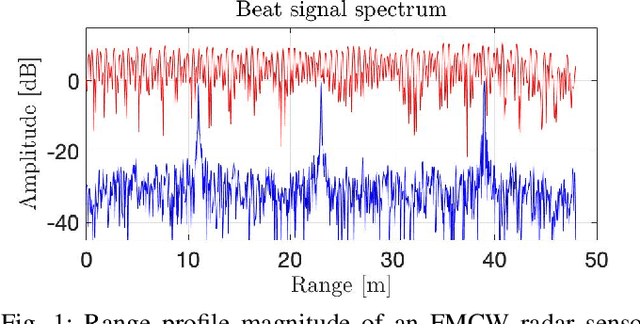

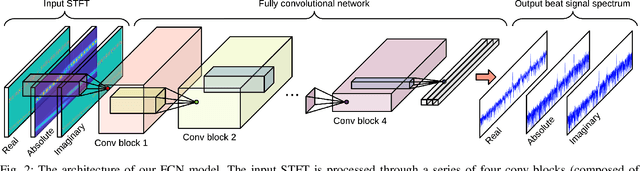

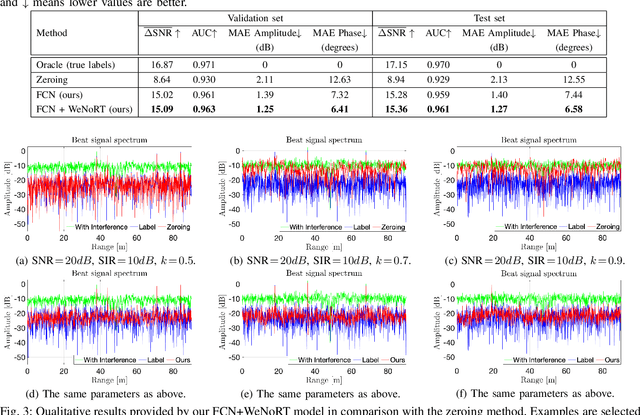

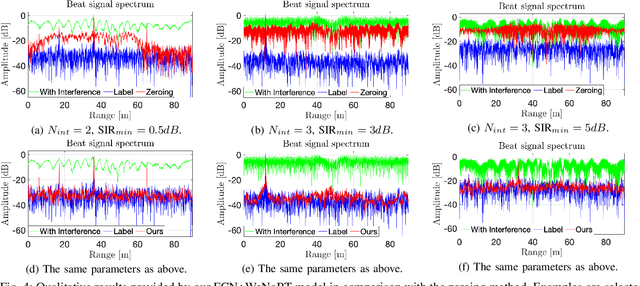

Estimating Magnitude and Phase of Automotive Radar Signals under Multiple Interference Sources with Fully Convolutional Networks

Aug 11, 2020

Abstract:Radar sensors are gradually becoming widespread equipment for road vehicles, playing a crucial role in autonomous driving and road safety. The broad adoption of radar sensors increases the chance of interference among sensors from different vehicles, generating corrupted range profiles and range-Doppler maps. In order to extract distance and velocity of multiple targets from range-Doppler maps, the interference affecting each range profile needs to be mitigated. In this paper, we propose a fully convolutional neural network for automotive radar interference mitigation. In order to train our network in a real-world scenario, we introduce a new data set of realistic automotive radar signals with multiple targets and multiple interferers. To our knowledge, this is the first work to mitigate interference from multiple sources. Furthermore, we introduce a new training regime that eliminates noisy weights, showing superior results compared to the widely-used dropout. While some previous works successfully estimated the magnitude of automotive radar signals, we are the first to propose a deep learning model that can accurately estimate the phase. For instance, our novel approach reduces the phase estimation error with respect to the commonly-adopted zeroing technique by roughly half, from 12.55 degrees to 6.58 degrees. Considering the lack of databases for automotive radar interference mitigation, we release our large-scale data set as open source. It closely replicates the real-world automotive scenario for multiple interference cases, allowing others to objectively compare their future work in this domain. Our data set is available for download at: http://github.com/ristea/arim-v2.
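
A phase error expressed in degrees, as above, has to account for angle wrap-around: an estimate of -180 degrees is only 10 degrees away from a reference of 170 degrees. The sketch below shows one way to compute a mean absolute phase error with wrapping. The function name and the toy values are hypothetical, and the exact evaluation protocol of the paper (for instance, which range bins are scored) is not described here.

```python
import numpy as np


def mean_phase_error_deg(estimate, reference):
    """Mean absolute phase difference in degrees between two complex arrays,
    with each difference wrapped to the interval [-180, 180]."""
    diff = np.angle(estimate * np.conj(reference))  # wrapped phase difference in radians
    return float(np.mean(np.abs(np.degrees(diff))))


# Hypothetical unit-magnitude samples; each pair differs by 10 degrees after wrapping.
reference = np.exp(1j * np.radians([10.0, 170.0, -175.0]))
estimate = np.exp(1j * np.radians([20.0, -180.0, 175.0]))
print(mean_phase_error_deg(estimate, reference))
```

Multiplying by the complex conjugate and taking the angle avoids subtracting raw angles, which would report errors of 350 degrees for the last two pairs.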
