Mihai Datcu

DLR

Generative Artificial Intelligence Meets Synthetic Aperture Radar: A Survey

Nov 05, 2024

Abstract: SAR images possess unique attributes that present challenges for both human observers and vision AI models to interpret, owing to their electromagnetic characteristics. The interpretation of SAR images encounters various hurdles, with one of the primary obstacles being the data itself, which includes issues related to both the quantity and quality of the data. The challenges can be addressed using generative AI technologies. Generative AI, often known as GenAI, is a very advanced and powerful technology in the field of artificial intelligence that has gained significant attention. The advancement has created possibilities for the creation of texts, photorealistic pictures, videos, and material in various modalities. This paper aims to comprehensively investigate the intersection of GenAI and SAR. First, we illustrate the common data generation-based applications in the SAR field and compare them with computer vision tasks, analyzing their similarities, differences, and general challenges. Then, the latest GenAI models are systematically reviewed, including various basic models and their variations targeting the general challenges. Additionally, the corresponding applications in the SAR domain are also included. Specifically, we propose to summarize the physical model based simulation approaches for SAR, and analyze the hybrid modeling methods that combine GenAI and interpretable models. The evaluation methods that have been or could be applied to SAR are also explored. Finally, the potential challenges and future prospects are discussed. To the best of our knowledge, this survey is the first exhaustive examination of the intersection of SAR and GenAI, encompassing a wide range of topics, including deep neural networks, physical models, computer vision, and SAR images. The resources of this survey are open-source at https://github.com/XAI4SAR/GenAIxSAR.

Sea Ice Segmentation From SAR Data by Convolutional Transformer Networks

Jun 13, 2023

Abstract: Sea ice is a crucial component of the Earth's climate system and is highly sensitive to changes in temperature and atmospheric conditions. Accurate and timely measurement of sea ice parameters is important for understanding and predicting the impacts of climate change. Nevertheless, the amount of satellite data acquired over ice areas is huge, making subjective measurements ineffective. Therefore, automated algorithms must be used in order to fully exploit the continuous data feeds coming from satellites. In this paper, we present a novel approach for sea ice segmentation based on SAR satellite imagery using hybrid convolutional transformer (ConvTr) networks. We show that our approach outperforms classical convolutional networks, while being considerably more efficient than pure transformer models. ConvTr obtained a mean intersection over union (mIoU) of 63.68% on the AI4Arctic data set, assuming an inference time of 120 ms for a 400 x 400 km² product.
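
For readers unfamiliar with the metric quoted above, the following is a minimal sketch of how a mean intersection over union can be computed from flattened label maps. The function name `mean_iou` and the choice to skip classes that appear in neither map are assumptions made for illustration; the abstract does not state which averaging convention was used on AI4Arctic.

```python
def mean_iou(pred, target, num_classes):
    """Mean IoU over the classes that occur in pred or target.

    pred and target are equal-length sequences of integer class labels
    (for example, a segmentation map flattened to one dimension).
    """
    ious = []
    for c in range(num_classes):
        # Pixels where both maps agree on class c.
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        # Pixels where at least one map says class c.
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # assumption: absent classes are ignored, not scored as 0
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else float("nan")
```

Calling `mean_iou([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2)` averages an IoU of 1/2 for class 0 and 2/3 for class 1.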

Artificial intelligence to advance Earth observation: a perspective

May 15, 2023

Abstract: Earth observation (EO) is a prime instrument for monitoring land and ocean processes, studying the dynamics at work, and taking the pulse of our planet. This article gives a bird's eye view of the essential scientific tools and approaches informing and supporting the transition from raw EO data to usable EO-based information. The promises, as well as the current challenges of these developments, are highlighted under dedicated sections. Specifically, we cover the impact of (i) Computer vision; (ii) Machine learning; (iii) Advanced processing and computing; (iv) Knowledge-based AI; (v) Explainable AI and causal inference; (vi) Physics-aware models; (vii) User-centric approaches; and (viii) the much-needed discussion of ethical and societal issues related to the massive use of ML technologies in EO.

Explainable, Physics Aware, Trustworthy AI Paradigm Shift for Synthetic Aperture Radar

Jan 09, 2023

Abstract: The recognition or understanding of the scenes observed with a SAR system requires a broader range of cues, beyond the spatial context. These encompass but are not limited to: imaging geometry, imaging mode, properties of the Fourier spectrum of the images or the behavior of the polarimetric signatures. In this paper, we propose a change of paradigm for explainability in data science for the case of Synthetic Aperture Radar (SAR) data to ground the explainable AI for SAR. It aims to use explainable data transformations based on well-established models to generate inputs for AI methods, to provide knowledgeable feedback for the training process, and to learn or improve high-complexity unknown or un-formalized models from the data. First, we introduce a representation of the SAR system with physical layers: i) instrument and platform, ii) image formation, iii) scattering signatures and objects, that can be integrated with an AI model for hybrid modeling. Subsequently, some illustrative examples are presented to demonstrate how to achieve hybrid modeling for SAR image understanding. The perspective of a trustworthy model and supplementary explanations are discussed later. Finally, we draw conclusions and deem that the proposed concept is applicable to the entire class of coherent imaging sensors and other computational imaging systems.

Deep Learning-Based Anomaly Detection in Synthetic Aperture Radar Imaging

Oct 28, 2022

Abstract: In this paper, we propose to investigate unsupervised anomaly detection in Synthetic Aperture Radar (SAR) images. Our approach considers anomalies as abnormal patterns that deviate from their surroundings but without any prior knowledge of their characteristics. In the literature, most model-based algorithms face three main issues. First, the speckle noise corrupts the image and potentially leads to numerous false detections. Second, statistical approaches may exhibit deficiencies in modeling spatial correlation in SAR images. Finally, neural networks based on supervised learning approaches are not recommended due to the lack of annotated SAR data, notably for the class of abnormal patterns. Our proposed method aims to address these issues through a self-supervised algorithm. The speckle is first removed through the deep learning SAR2SAR algorithm. Then, an adversarial autoencoder is trained to reconstruct an anomaly-free SAR image. Finally, a change detection processing step is applied between the input and the output to detect anomalies. Experiments are performed to show the advantages of our method compared to the conventional Reed-Xiaoli algorithm, highlighting the importance of an efficient despeckling pre-processing step.
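
The Reed-Xiaoli baseline mentioned above scores each pixel by its Mahalanobis distance from the background statistics. The sketch below shows only the global variant, in which mean and covariance are estimated over the whole image; the abstract does not say which variant or window configuration the authors used, so the function name `rx_scores` and the global-statistics choice are assumptions for illustration.

```python
import numpy as np

def rx_scores(image):
    """Global Reed-Xiaoli anomaly scores for an (H, W, C) image.

    Each pixel is treated as a C-dimensional vector and scored by its
    squared Mahalanobis distance to the image-wide mean.
    """
    h, w, c = image.shape
    x = image.reshape(-1, c).astype(float)
    mu = x.mean(axis=0)
    # reshape keeps the covariance 2-D even when C == 1.
    cov = np.cov(x, rowvar=False).reshape(c, c)
    cov_inv = np.linalg.pinv(cov)  # pseudo-inverse tolerates a singular covariance
    d = x - mu
    scores = np.einsum("ij,jk,ik->i", d, cov_inv, d)
    return scores.reshape(h, w)
```

High scores mark pixels that are statistically far from the background. Because the statistics are computed on the raw intensities, speckle inflates the background covariance, which is consistent with the abstract's emphasis on despeckling before detection.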

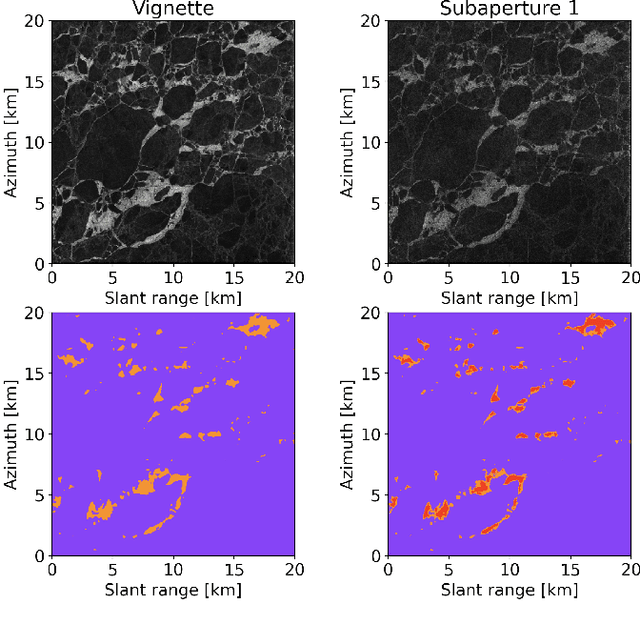

Guided Unsupervised Learning by Subaperture Decomposition for Ocean SAR Image Retrieval

Sep 29, 2022

Abstract: Spaceborne synthetic aperture radar (SAR) can provide accurate images of the ocean surface roughness day or night in nearly all weather conditions, making it a unique asset for many geophysical applications. Considering the huge amount of data acquired daily by satellites, automated techniques for physical feature extraction are needed. Even if supervised deep learning methods attain state-of-the-art results, they require a great amount of labeled data, which is difficult and excessively expensive to acquire for ocean SAR imagery. To this end, we use the subaperture decomposition (SD) algorithm to enhance unsupervised learning retrieval on the ocean surface, empowering ocean researchers to search large ocean databases. We empirically show that SD improves the retrieval precision by over 20% for an unsupervised transformer auto-encoder network. Moreover, we show that SD brings an important performance boost when Doppler centroid images are used as input data, leading the way to new unsupervised physics-guided retrieval algorithms.

Coreset of Hyperspectral Images on Small Quantum Computer

Apr 12, 2022

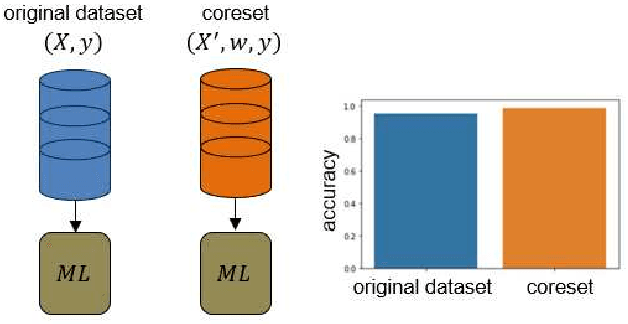

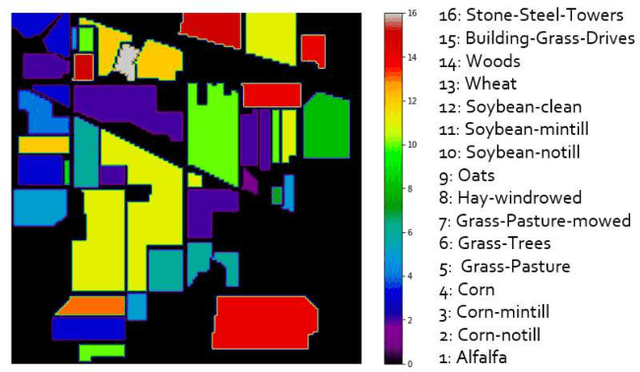

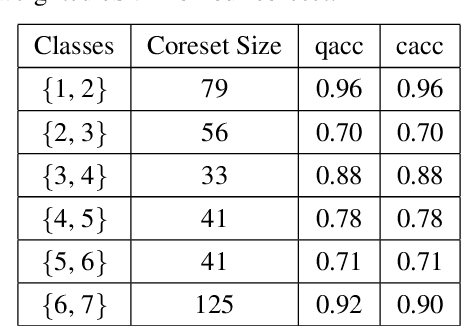

Abstract: Machine Learning (ML) techniques are employed to analyze and process big Remote Sensing (RS) data, and one well-known ML technique is a Support Vector Machine (SVM). An SVM is a quadratic programming (QP) problem, and a D-Wave quantum annealer (D-Wave QA) promises to solve this QP problem more efficiently than a conventional computer. However, the D-Wave QA cannot directly solve the SVM because it has very few input qubits. Hence, we use a coreset ("core of a dataset") of given EO data for training an SVM on this small D-Wave QA. The coreset is a small, representative weighted subset of an original dataset, and models trained on the coreset produce classifications that are competitive with those trained on the original dataset. We measured the closeness between an original dataset and its coreset by employing a Kullback-Leibler (KL) divergence measure. Moreover, we trained the SVM on the coreset data by using both a D-Wave QA and a conventional method. We conclude that the coreset characterizes the original dataset with a very small KL divergence measure. In addition, we present our KL divergence results for demonstrating the closeness between our original data and its coreset. As practical RS data, we use Hyperspectral Image (HSI) of Indian Pine, USA.
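
As a rough illustration of the closeness measure described above, the sketch below computes the discrete KL divergence between two histograms, for instance the class counts of a full dataset and the weighted class counts of its coreset. How the authors estimated the distributions is not stated in the abstract; the function name `kl_divergence`, the `eps` floor, and the histogram framing are assumptions.

```python
import math

def kl_divergence(p, q, eps=1e-12):
    """D_KL(P || Q) in nats for two discrete distributions.

    p and q are equal-length sequences of non-negative counts or
    probabilities; both are normalized to sum to 1 before comparison.
    """
    sp, sq = sum(p), sum(q)
    total = 0.0
    for pi, qi in zip(p, q):
        pi, qi = pi / sp, qi / sq
        if pi > 0:  # terms with P(x) = 0 contribute nothing
            # eps is an assumed floor that avoids dividing by zero when Q(x) = 0.
            total += pi * math.log(pi / max(qi, eps))
    return total
```

A value near zero means the coreset histogram closely follows that of the original data. Identical histograms give exactly zero.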

Guided deep learning by subaperture decomposition: ocean patterns from SAR imagery

Apr 09, 2022

Abstract: Spaceborne synthetic aperture radar can provide meter-scale images of the ocean surface roughness day or night in nearly all weather conditions. This makes it a unique asset for many geophysical applications. Sentinel-1 SAR wave mode vignettes have made it possible to capture many important oceanic and atmospheric phenomena since 2014. However, considering the amount of data provided, expanding applications requires a strategy to automatically process and extract geophysical parameters. In this study, we propose to apply subaperture decomposition as a preprocessing stage for SAR deep learning models. Our data centring approach surpassed the baseline by 0.7, obtaining state of the art on the TenGeoPSARwv data set. In addition, we empirically showed that subaperture decomposition could bring additional information over the original vignette, by raising the number of clusters for an unsupervised segmentation method. Overall, we encourage the development of data centring approaches, showing that data preprocessing could bring significant performance improvements over existing deep learning models.
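
The preprocessing idea above can be sketched as follows: a complex SAR image is transformed to the azimuth frequency domain, the spectrum is split into sub-bands, and each sub-band is transformed back into a lower-resolution "sub-look". This is a simplified sketch, not the authors' pipeline: it assumes azimuth lies along axis 0, uses equal-width non-overlapping bands, and skips Doppler-centroid estimation and spectral de-weighting. The function name `subaperture_decomposition` and the parameter `n_looks` are illustrative.

```python
import numpy as np

def subaperture_decomposition(slc, n_looks=3):
    """Split a complex SAR image into n_looks azimuth sub-looks.

    slc: 2-D complex array with azimuth along axis 0 (assumption).
    Returns an array of shape (n_looks, *slc.shape).
    """
    n_az = slc.shape[0]
    # Azimuth spectrum, shifted so zero frequency sits in the middle.
    spectrum = np.fft.fftshift(np.fft.fft(slc, axis=0), axes=0)
    edges = np.linspace(0, n_az, n_looks + 1).astype(int)
    looks = []
    for start, stop in zip(edges[:-1], edges[1:]):
        band = np.zeros_like(spectrum)
        band[start:stop] = spectrum[start:stop]  # keep a single azimuth sub-band
        looks.append(np.fft.ifft(np.fft.ifftshift(band, axes=0), axis=0))
    return np.stack(looks)
```

Because the bands are disjoint and together cover the full spectrum, the sub-looks sum back to the original image. A network could then take the sub-look magnitudes as extra input channels, which is one plausible reading of "preprocessing stage" here.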

Physically Explainable CNN for SAR Image Classification

Oct 27, 2021

Abstract: Integrating the special electromagnetic characteristics of Synthetic Aperture Radar (SAR) in deep neural networks is essential in order to enhance the explainability and physics awareness of deep learning. In this paper, we first propose a novel physics guided and injected neural network for SAR image classification, which is mainly guided by explainable physics models and can be learned with very limited labeled data. The proposed framework comprises three parts: (1) generating physics guided signals using existing explainable models, (2) learning physics-aware features with a physics guided network, and (3) injecting the physics-aware features adaptively into the conventional classification deep learning model for prediction. The prior knowledge, the physical scattering characteristic of SAR in this paper, is injected into the deep neural network in the form of physics-aware features, which is more conducive to understanding the semantic labels of SAR image patches. A hybrid Image-Physics SAR dataset format is proposed, and both Sentinel-1 and Gaofen-3 SAR data are taken for evaluation. The experimental results show that our proposed method substantially improves the classification performance compared with the counterpart data-driven CNN. Moreover, the guidance of explainable physics signals leads to explainability of physics-aware features, and the physics consistency of features is also preserved in the predictions. We deem the proposed method would promote the development of physically explainable deep learning in the SAR image interpretation field.

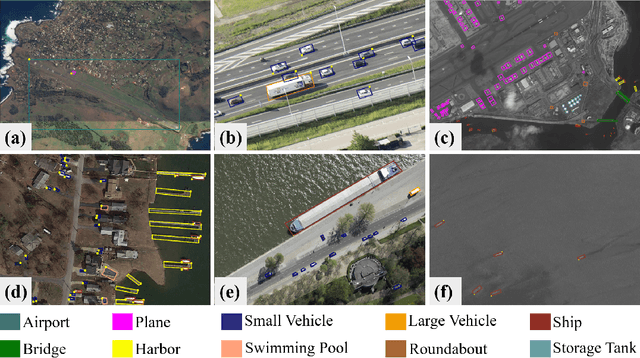

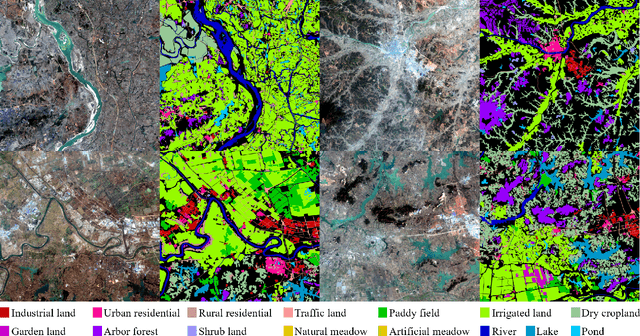

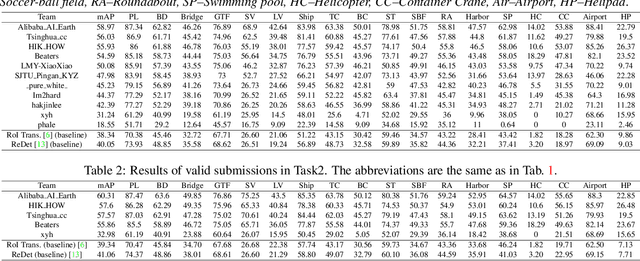

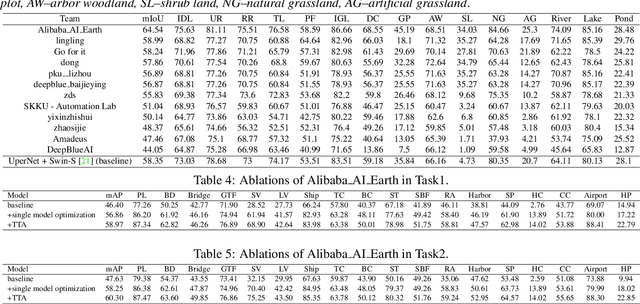

LUAI Challenge 2021 on Learning to Understand Aerial Images

Aug 30, 2021

Abstract: This report summarizes the results of the Learning to Understand Aerial Images (LUAI) 2021 challenge held at ICCV 2021, which focuses on object detection and semantic segmentation in aerial images. Using the DOTA-v2.0 and GID-15 datasets, this challenge proposes three tasks for oriented object detection, horizontal object detection, and semantic segmentation of common categories in aerial images. This challenge received a total of 146 registrations on the three tasks. Through the challenge, we hope to draw attention from a wide range of communities and call for more efforts on the problems of learning to understand aerial images.