Corneliu Octavian Dumitru

Physically Explainable CNN for SAR Image Classification

Oct 27, 2021

Abstract: Integrating the special electromagnetic characteristics of Synthetic Aperture Radar (SAR) into deep neural networks is essential for enhancing the explainability and physics awareness of deep learning. In this paper, we propose a novel physics-guided and physics-injected neural network for SAR image classification, which is mainly guided by explainable physics models and can be learned with very limited labeled data. The proposed framework comprises three parts: (1) generating physics-guided signals using existing explainable models, (2) learning physics-aware features with a physics-guided network, and (3) adaptively injecting the physics-aware features into a conventional deep learning classification model for prediction. The prior knowledge, here the physical scattering characteristics of SAR, is injected into the deep neural network in the form of physics-aware features, which are more conducive to understanding the semantic labels of SAR image patches. A hybrid Image-Physics SAR dataset format is proposed, and both Sentinel-1 and Gaofen-3 SAR data are used for evaluation. The experimental results show that the proposed method substantially improves classification performance compared with its purely data-driven CNN counterpart. Moreover, the guidance of explainable physics signals makes the physics-aware features explainable, and the physics consistency of the features is preserved in the predictions. We expect the proposed method to promote the development of physically explainable deep learning in the field of SAR image interpretation.

Classification of Large-Scale High-Resolution SAR Images with Deep Transfer Learning

Jan 06, 2020

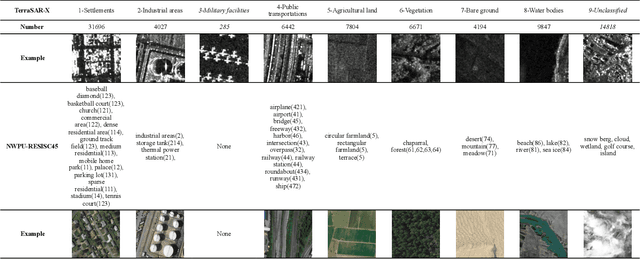

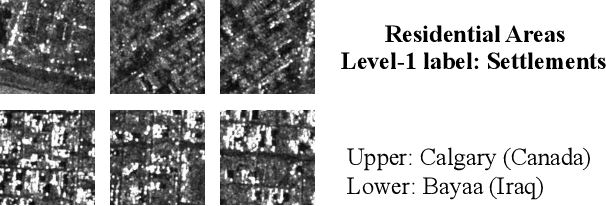

Abstract: The classification of large-scale high-resolution SAR land cover images acquired by satellites is a challenging task. It faces several difficulties: semantic annotation requires expertise, data characteristics change with imaging parameters and regional target area differences, and the complex scattering mechanisms differ from those of optical imaging. Given a large-scale SAR land cover dataset collected from TerraSAR-X images, with a hierarchical three-level annotation of 150 categories and more than 100,000 patches, we address three main challenges in automatically interpreting SAR images: highly imbalanced classes, geographic diversity, and label noise. In this letter, a deep transfer learning method is proposed based on a similarly annotated optical land cover dataset (NWPU-RESISC45). In addition, a top-2 smooth loss function with cost-sensitive parameters is introduced to tackle the problems of label noise and imbalanced classes. The proposed method transfers information efficiently from a similarly annotated remote sensing dataset, performs robustly on highly imbalanced classes, and alleviates the over-fitting problem caused by label noise. Furthermore, the learned deep model generalizes well to other SAR-specific tasks, such as MSTAR target recognition, with a state-of-the-art classification accuracy of 99.46%.
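The cost-sensitive idea mentioned in the abstract above can be illustrated with a minimal sketch. This is not the paper's top-2 smooth loss, whose exact form is not given here; it is a plain class-weighted cross-entropy, and the inverse-frequency weighting scheme and all function names are assumptions chosen for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def inverse_frequency_weights(class_counts):
    """Hypothetical cost-sensitive weights: rarer classes get larger weights.

    A perfectly balanced dataset yields a weight of 1.0 for every class.
    """
    total = sum(class_counts)
    k = len(class_counts)
    return [total / (k * c) for c in class_counts]


def weighted_cross_entropy(logits, label, weights):
    """Cross-entropy for one sample, scaled by the weight of its true class."""
    probs = softmax(logits)
    return -weights[label] * math.log(probs[label])


# Two classes with a 90/10 imbalance (made-up counts for illustration).
weights = inverse_frequency_weights([90, 10])
loss_rare = weighted_cross_entropy([0.0, 0.0], label=1, weights=weights)
loss_common = weighted_cross_entropy([0.0, 0.0], label=0, weights=weights)
```

With identical predictions, a mistake on the rare class costs nine times as much as one on the common class, which is the basic mechanism by which cost-sensitive parameters counteract class imbalance.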

Dialectical GAN for SAR Image Translation: From Sentinel-1 to TerraSAR-X

Jul 20, 2018

Abstract: Unlike optical images, Synthetic Aperture Radar (SAR) images lie in a part of the electromagnetic spectrum to which the human visual system is not accustomed. Thus, with the growing number of SAR applications, the demand for enhanced high-quality SAR images has increased considerably. However, high-quality SAR images entail high costs due to the limitations of current SAR devices and their image processing resources. To improve the quality of SAR images and to reduce the costs of their generation, we propose a Dialectical Generative Adversarial Network (Dialectical GAN) to generate high-quality SAR images. This method is based on the analysis of hierarchical SAR information and the "dialectical" structure of GAN frameworks. As a demonstration, we show a typical example in which a low-resolution SAR image (e.g., a Sentinel-1 image) with large ground coverage is translated into a high-resolution SAR image (e.g., a TerraSAR-X image). Three traditional algorithms are compared, and a new algorithm is proposed based on a network framework that combines conditional WGAN-GP (Wasserstein Generative Adversarial Network - Gradient Penalty) loss functions and Spatial Gram matrices under the rule of dialectics. Experimental results show that the proposed method performs SAR image translation very well compared with the selected traditional methods.
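As background for the Gram matrices mentioned in the abstract above, the following is a minimal sketch of a plain (non-spatial) Gram matrix over feature channels and a squared distance between two such matrices. The paper's Spatial Gram variant and its WGAN-GP losses are not reproduced; the function names and the toy feature maps are assumptions for illustration.

```python
def gram_matrix(features):
    """Channel-by-channel correlation of a feature map.

    `features` is a list of C channels, each a flat list of N activations.
    Entry (i, j) is the mean product of channel i and channel j.
    """
    n = len(features[0])
    if any(len(channel) != n for channel in features):
        raise ValueError("all channels must have the same length")
    return [
        [sum(a * b for a, b in zip(ci, cj)) / n for cj in features]
        for ci in features
    ]


def gram_distance(features_a, features_b):
    """Sum of squared differences between two Gram matrices."""
    ga, gb = gram_matrix(features_a), gram_matrix(features_b)
    return sum(
        (x - y) ** 2
        for row_a, row_b in zip(ga, gb)
        for x, y in zip(row_a, row_b)
    )


# Two toy feature maps with the same activations at different positions.
original = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
permuted = [[0.0, 1.0, 1.0, 0.0], [1.0, 0.0, 0.0, 1.0]]
distance = gram_distance(original, permuted)
```

The two toy maps produce identical Gram matrices because a plain Gram matrix averages over all positions and so ignores where activations occur. This loss of layout information is one reason a method might prefer a spatially aware variant.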
