Zongxu Pan

SLGNet: Synergizing Structural Priors and Language-Guided Modulation for Multimodal Object Detection

Jan 05, 2026

Abstract:Multimodal object detection leveraging RGB and Infrared (IR) images is pivotal for robust perception in all-weather scenarios. While recent adapter-based approaches efficiently transfer RGB-pretrained foundation models to this task, they often prioritize model efficiency at the expense of cross-modal structural consistency. Consequently, critical structural cues are frequently lost when significant domain gaps arise, such as in high-contrast or nighttime environments. Moreover, conventional static multimodal fusion mechanisms typically lack environmental awareness, resulting in suboptimal adaptation and constrained detection performance under complex, dynamic scene variations. To address these limitations, we propose SLGNet, a parameter-efficient framework that synergizes hierarchical structural priors and language-guided modulation within a frozen Vision Transformer (ViT)-based foundation model. Specifically, we design a Structure-Aware Adapter to extract hierarchical structural representations from both modalities and dynamically inject them into the ViT to compensate for structural degradation inherent in ViT-based backbones. Furthermore, we propose a Language-Guided Modulation module that exploits VLM-driven structured captions to dynamically recalibrate visual features, thereby endowing the model with robust environmental awareness. Extensive experiments on the LLVIP, FLIR, KAIST, and DroneVehicle datasets demonstrate that SLGNet establishes new state-of-the-art performance. Notably, on the LLVIP benchmark, our method achieves an mAP of 66.1, while reducing trainable parameters by approximately 87% compared to traditional full fine-tuning. This confirms SLGNet as a robust and efficient solution for multimodal perception.

Classification of Large-Scale High-Resolution SAR Images with Deep Transfer Learning

Jan 06, 2020

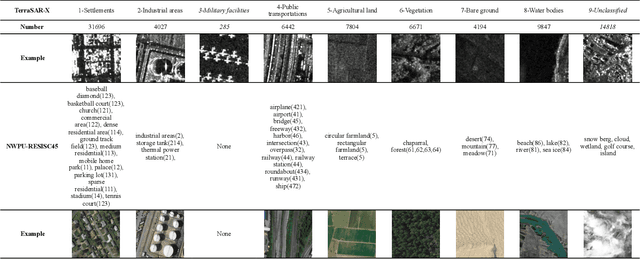

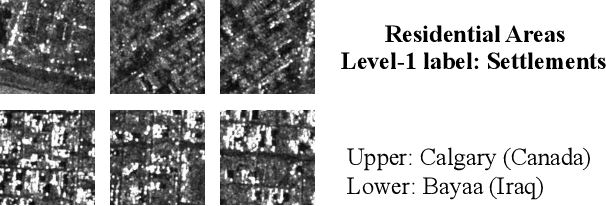

Abstract:The classification of large-scale high-resolution SAR land cover images acquired by satellites is a challenging task, facing several difficulties such as semantic annotation that requires expertise, data characteristics that change with imaging parameters or regional target area differences, and complex scattering mechanisms that differ from optical imaging. Given a large-scale SAR land cover dataset collected from TerraSAR-X images, with a hierarchical three-level annotation of 150 categories and more than 100,000 patches, three main challenges in automatically interpreting SAR images are addressed: highly imbalanced classes, geographic diversity, and label noise. In this letter, a deep transfer learning method is proposed based on a similarly annotated optical land cover dataset (NWPU-RESISC45). In addition, a top-2 smooth loss function with cost-sensitive parameters is introduced to tackle the label noise and class imbalance problems. The proposed method shows high efficiency in transferring information from a similarly annotated remote sensing dataset, performs robustly on highly imbalanced classes, and alleviates the over-fitting problem caused by label noise. Moreover, the learned deep model generalizes well to other SAR-specific tasks, such as MSTAR target recognition, with a state-of-the-art classification accuracy of 99.46%.

What, Where and How to Transfer in SAR Target Recognition Based on Deep CNNs

Jun 04, 2019

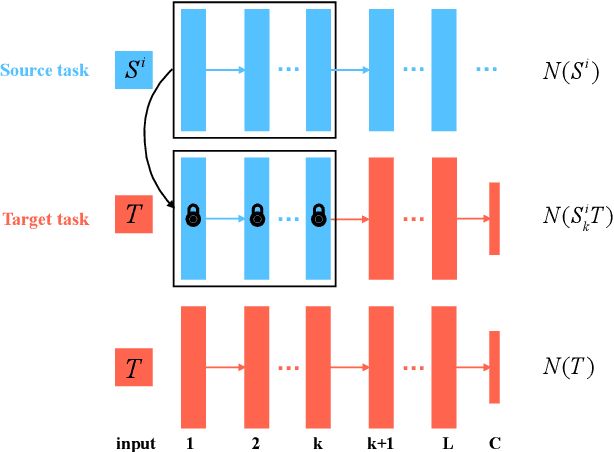

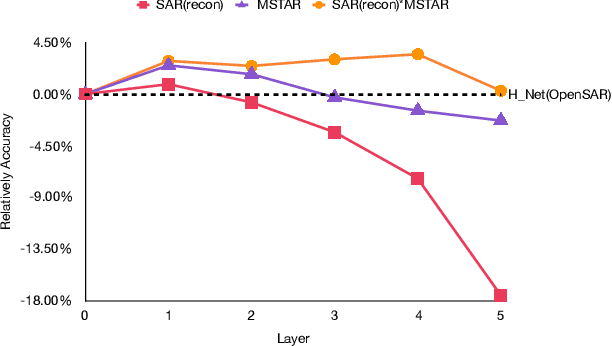

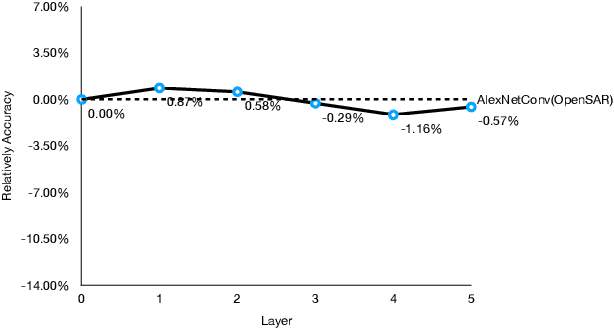

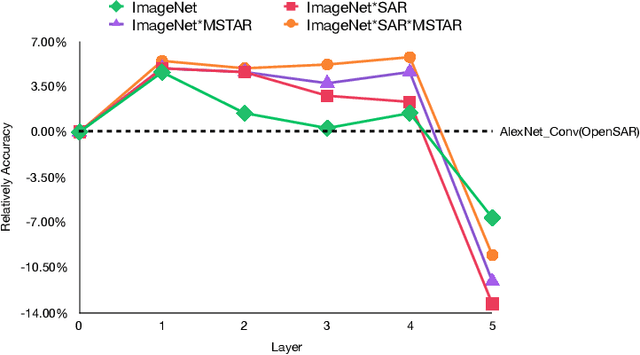

Abstract:Deep convolutional neural networks (DCNNs) have attracted much attention in remote sensing recently. Compared with the large-scale annotated datasets available for natural images, the lack of labeled data in remote sensing is an obstacle to training a deep network well, especially in SAR image interpretation. Transfer learning provides an effective way to solve this problem by transferring knowledge from the source task to the target task. In optical remote sensing applications, a prevalent mechanism is to fine-tune an existing model pre-trained on a large-scale natural image dataset, such as ImageNet. However, this scheme does not achieve satisfactory performance for SAR applications because of the prominent discrepancy between SAR and optical images. In this paper, we attempt to discuss three issues that have seldom been studied in detail: (1) which networks and source tasks are better to transfer to SAR targets, (2) in which layers the transferred features are more generic to SAR targets, and (3) how to transfer effectively to SAR target recognition. Based on the analysis, a transitive transfer method via multi-source data with domain adaptation is proposed in this paper to decrease the discrepancy between the source data and SAR targets. Several experiments are conducted on OpenSARShip. The results indicate that the universal conclusions about transfer learning in natural images cannot be completely applied to SAR targets, and that the analysis of what and where to transfer in SAR target recognition helps to decide how to transfer more effectively.

Learning a Rotation Invariant Detector with Rotatable Bounding Box

Nov 26, 2017

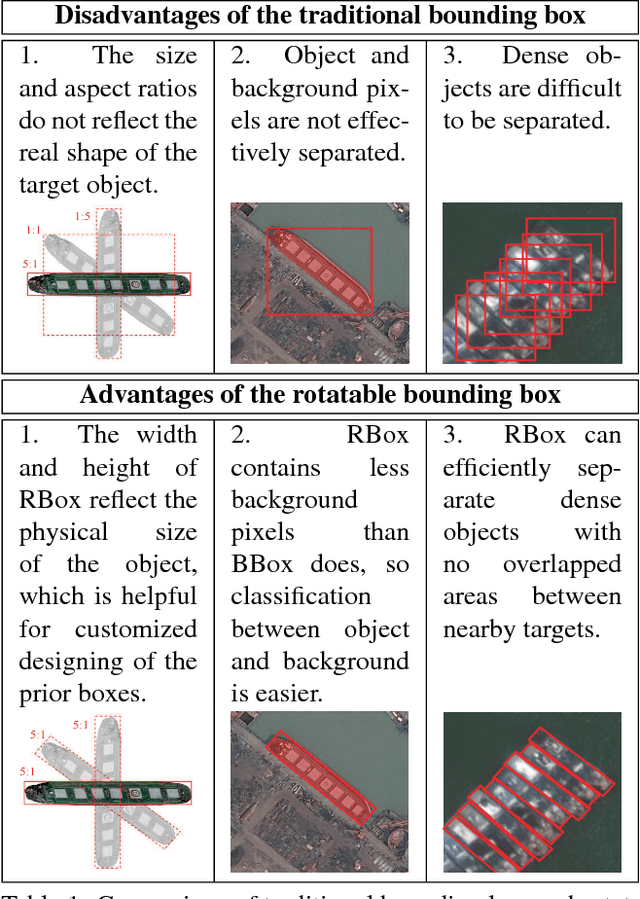

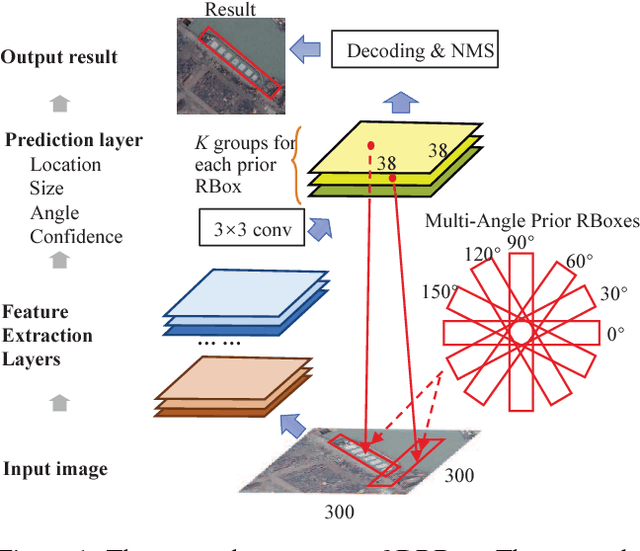

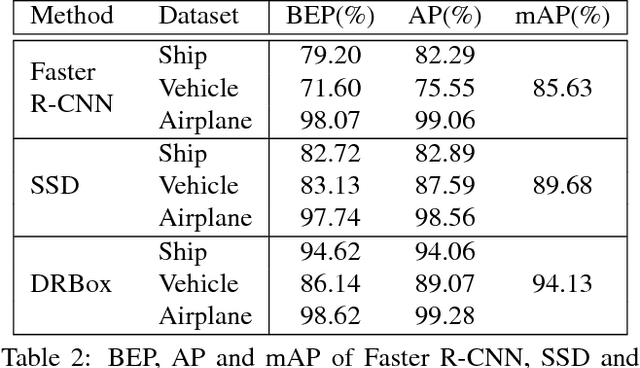

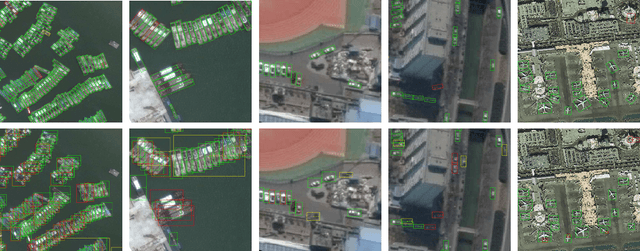

Abstract:Detection of arbitrarily rotated objects is a challenging task due to the difficulties of locating the multi-angle objects and separating them effectively from the background. The existing methods are not robust to angle variations of the objects because they use the traditional bounding box, which is a rotation-variant structure for locating rotated objects. In this article, a new detection method is proposed which applies the newly defined rotatable bounding box (RBox). The proposed detector (DRBox) can effectively handle the situation where the orientation angles of the objects are arbitrary. The training of DRBox forces the detection networks to learn the correct orientation angle of the objects, so that the rotation-invariant property can be achieved. DRBox is tested on detecting vehicles, ships and airplanes in satellite images, and compared with Faster R-CNN and SSD, which are chosen as the benchmarks of the traditional bounding box based methods. The results show that DRBox performs much better than traditional bounding box based methods on the given tasks, and is more robust against rotation of the input image and target objects. In addition, the results show that DRBox correctly outputs the orientation angles of the objects, which is very useful for locating multi-angle objects efficiently. The code and models are available at https://github.com/liulei01/DRBox.
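
The contrast between a rotatable box and a traditional axis-aligned box can be illustrated with a minimal sketch. It is not taken from the DRBox code: the `(cx, cy, w, h, angle_deg)` parameterization and the helper names `rbox_corners` and `enclosing_aabb` are assumptions chosen for illustration only.

```python
import math


def rbox_corners(cx, cy, w, h, angle_deg):
    """Corners of a rotated box (hypothetical parameterization).

    The box is centered at (cx, cy), has side lengths w and h, and is
    rotated by angle_deg about its center (x-right, y-up convention).
    """
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Corner offsets of the unrotated box, relative to its center.
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Apply a 2D rotation to each offset, then translate to the center.
    return [
        (cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t)
        for dx, dy in offsets
    ]


def enclosing_aabb(corners):
    """Smallest axis-aligned box (xmin, ymin, xmax, ymax) around the corners."""
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return min(xs), min(ys), max(xs), max(ys)


if __name__ == "__main__":
    # A 4 x 2 object rotated by 45 degrees.
    corners = rbox_corners(0.0, 0.0, 4.0, 2.0, 45.0)
    xmin, ymin, xmax, ymax = enclosing_aabb(corners)
    print("rotated box area:", 4.0 * 2.0)
    print("axis-aligned box area:", (xmax - xmin) * (ymax - ymin))
```

Under these assumptions, the axis-aligned box around a 4 x 2 object rotated by 45 degrees covers an area of about 18, against 8 for the object itself, so the traditional box changes with the object's angle and includes more background, while the rotated box keeps the same width and height at any angle.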
